
Listen and Look: Audio-Visual Matching Assisted Speech Source Separation

Mane Pooja (M190442EC)

July 21, 2020

National Institute of Technology, Calicut

M. Tech, Signal Processing

Mane Pooja (M190442EC) NITC AV match July 21, 2020 1 / 30

Overview

1 Introduction

2 Prior Methods

Deep Clustering

3 Proposed Method

Architecture and Methodology

Mask Correction With Audio–Visual Matching

4 Experiments and Results

Separation results on 2-speaker mixtures

5 Conclusion

6 References


Introduction


Speech Source Separation: Flowchart

Figure: Flowchart of Speech Separation Methods


Audio-Visual Match

AV Match is an audio-visual deep learning approach to speech separation.

AIM: To separate individual voices from an audio mixture of multiple simultaneous talkers.

Audio and visual signals of speech (e.g., lip movements) can be used together to learn better feature representations for speech separation.

It alleviates the source permutation problem.


Applications of speech separation:

Automatic Speech Recognition (ASR): The translation of spoken language into text by computers.

Dialogue systems: A conversational agent is a computer system intended to converse with a human.

Multimedia retrieval: Extracting semantic information (such as words, phrases, signs, and symbols) from multimedia data sources.

Hearing aids: A hearing aid is a device designed to improve hearing for a person with hearing loss.


Key Terms

Speech Signal/Acoustic Signal: A 1-D function of time, emitted from a speaker's mouth, that carries a specific meaning.

Spectrogram: A visual representation of the spectrum of frequencies of a signal as it varies with time.

Source Permutation problem: Assigning separated signal snippets to the wrong sources over time.
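To make the spectrogram definition concrete, here is a minimal NumPy sketch of a magnitude spectrogram computed with a short-time Fourier transform. The Hann window, FFT size of 256 and hop of 128 samples are assumptions picked for the example, not settings taken from this work.

```python
import numpy as np

def magnitude_spectrogram(x, n_fft=256, hop=128):
    """Magnitude STFT of a 1-D signal: Hann-windowed frames, no padding.

    Returns an array of shape (n_frames, n_fft // 2 + 1), i.e. time x frequency.
    """
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[t * hop : t * hop + n_fft] * window for t in range(n_frames)])
    # Real FFT of each frame; keep only the magnitude (phase is discarded).
    return np.abs(np.fft.rfft(frames, axis=1))

# Toy signal: 1 s of a 1 kHz tone sampled at 8 kHz.
fs = 8000
t = np.arange(fs) / fs
x = np.sin(2 * np.pi * 1000 * t)

S = magnitude_spectrogram(x)
peak_bin = int(S.mean(axis=0).argmax())   # strongest frequency bin on average
print(S.shape, peak_bin * fs / 256)       # (61, 129) 1000.0
```

Each row of `S` is one time frame and each column one frequency bin; a T-F bin in the following slides is a single entry of such a matrix.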


Prior Methods


Deep Methods:

Deep methods usually approach the problem through spectrogram mask estimation.

1 Classification-based methods: Classify each time-frequency (T-F) bin as belonging to one of the speakers.

Disadvantage: They fail in the speaker-independent case because of the source permutation problem.

2 Clustering using supervised training: Predict and assign all T-F bins at once, without the need for frame-by-frame assignment, e.g., DC and uPIT.

Disadvantage: Assigning T-F bins to sources all at once is the main cause of the permutation problem.
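The mask-estimation idea can be illustrated with oracle masks. This minimal NumPy sketch assumes the two sources' magnitude spectrograms are known and that magnitudes simply add in the mixture (an approximation); a deep model would instead have to predict the masks from the mixture alone. The random toy spectrograms are placeholders.

```python
import numpy as np

def ideal_binary_masks(s1_mag, s2_mag):
    """Assign every T-F bin to whichever source is louder there."""
    m1 = (s1_mag >= s2_mag).astype(float)
    return m1, 1.0 - m1

rng = np.random.default_rng(0)
s1 = rng.random((4, 5))      # toy magnitude spectrogram of speaker 1 (time x freq)
s2 = rng.random((4, 5))      # toy magnitude spectrogram of speaker 2
mix = s1 + s2                # additive-magnitude approximation of the mixture

m1, m2 = ideal_binary_masks(s1, s2)
est1, est2 = m1 * mix, m2 * mix   # masked mixture = separated estimates

# Source permutation: if the two mask labels are swapped in some frames
# (here frame 2), each estimate ends up mixing snippets of both speakers.
perm_m1, perm_m2 = m1.copy(), m2.copy()
perm_m1[2], perm_m2[2] = m2[2], m1[2]
```

Note that the permuted masks still partition the mixture perfectly; the error is only in which output each frame is assigned to, which is exactly why it is hard to detect from the audio alone.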


Audio-based Deep Approach:

Deep Clustering:

Deals with the source permutation problem.

The Deep Clustering (DC) method projects each T-F bin of the mixture's spectrogram into a high-dimensional embedding space in which embeddings of the same speaker can be clustered to form that speaker's separation mask.

Limitation: The permutation problem still exists when the two speakers are of the same gender.
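A minimal sketch of the two halves of DC, assuming toy data: the training objective of Hershey et al., ||VV^T - YY^T||_F^2 (V holds one unit-norm embedding per T-F bin, Y the one-hot speaker label of each bin), and mask formation by k-means clustering of the embeddings. The embeddings here are synthetic stand-ins for network output, and the deterministic farthest-point initialisation is a choice made for this example.

```python
import numpy as np

def dc_loss(V, Y):
    """Deep clustering objective ||V V^T - Y Y^T||_F^2.

    V: (N, D) unit-norm embeddings, one per T-F bin.
    Y: (N, C) one-hot speaker assignment of each bin.
    Expanded so the N x N affinity matrices are never built.
    """
    return (np.linalg.norm(V.T @ V) ** 2
            - 2 * np.linalg.norm(V.T @ Y) ** 2
            + np.linalg.norm(Y.T @ Y) ** 2)

def kmeans_masks(V, n_src=2, n_iter=20):
    """Cluster embeddings with plain k-means and return one binary mask
    per cluster as an (N, n_src) one-hot matrix."""
    # Deterministic farthest-point initialisation.
    centers = [V[0]]
    for _ in range(1, n_src):
        d = np.min([((V - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(V[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dist = ((V[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for k in range(n_src):
            if np.any(labels == k):
                centers[k] = V[labels == k].mean(axis=0)
    return np.eye(n_src)[labels]

# Toy example: 6 T-F bins, the first 3 dominated by speaker A, the rest by B.
Y = np.eye(2)[[0, 0, 0, 1, 1, 1]]
rng = np.random.default_rng(0)
V = Y + 0.1 * rng.standard_normal(Y.shape)      # stand-in for network output
V /= np.linalg.norm(V, axis=1, keepdims=True)   # DC uses unit-norm embeddings

masks = kmeans_masks(V)
print(dc_loss(V, Y), masks[:, 0])
```

Because cluster indices are arbitrary, nothing ties cluster 0 to the same speaker across utterances or segments; that residual ambiguity is the permutation issue the slides describe.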


Proposed Method


Listen and Look: Audio–Visual Matching Assisted Speech Source Separation

We design a neural network to learn speaker-independent audio–visual matching.

We use DC as the “audio-only separation model” in our method.

We calculate similarities between the separated audio streams and the visual streams with our proposed “AV Match” model.

We use these similarities to correct the masks predicted by the audio-only model.


AV Match Framework

Figure: Proposed AV Match speech separation framework


Methodology

We calculate audio-only embeddings using the audio network (DC model).

We calculate visual-only embeddings using the visual network.

We obtain audio–visual embeddings using the AV Match model.

We compute the similarity between audio and visual embeddings as their inner product.
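The inner-product step can be sketched in a few lines of NumPy. This assumes, for illustration only, that the audio and visual embeddings are already frame-aligned and share the same dimension D; the array sizes and random values are hypothetical.

```python
import numpy as np

def av_similarity(audio_emb, visual_emb):
    """Inner-product similarity of every (audio stream, visual stream) pair
    at every frame.

    audio_emb:  (n_src, T, D) embeddings of the separated audio streams
    visual_emb: (n_src, T, D) embeddings of each speaker's lip-region video
    returns:    (T, n_src, n_src) array, sim[t, i, j] = <a_i(t), v_j(t)>
    """
    return np.einsum("itd,jtd->tij", audio_emb, visual_emb)

rng = np.random.default_rng(0)
n_src, T, D = 2, 3, 4            # hypothetical sizes
audio = rng.standard_normal((n_src, T, D))
visual = rng.standard_normal((n_src, T, D))
sim = av_similarity(audio, visual)
print(sim.shape)  # (3, 2, 2)
```

A per-frame 2 x 2 similarity matrix is the natural input to a mask-correction rule: a large diagonal favours the current assignment, a large off-diagonal suggests the masks are swapped.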


AV Match Architecture

Figure: Proposed AV Match Network Architecture

CNN Architecture: Types of Layers

Convolutional layer: A learnable "filter" (kernel) slides over the image, a few pixels at a time, and produces a feature map indicating where the pattern it detects occurs.

Max-Pooling layer (downsampling): Reduces the size of each feature map produced by the convolutional layer while keeping the most important information (the strongest response in each window).

Batch Normalisation: Normalises each layer's inputs over a mini-batch (zero mean and unit variance, followed by a learned scale and shift), which stabilises and speeds up training.

Activation Function: The ReLU activation function, f(x) = max(0, x), is used to introduce non-linearity.
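The four layer types above can be chained in a toy NumPy sketch (convolution, batch normalisation, ReLU, max-pooling). The 6x6 random patch and the hand-made edge kernel are hypothetical; in a real visual network the kernels are learned and there are many channels.

```python
import numpy as np

def conv2d(x, k):
    """'Valid' 2-D cross-correlation of one single-channel image with one kernel
    (what deep-learning libraries call convolution)."""
    kh, kw = k.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

def batch_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalise to zero mean and unit variance, then apply scale and shift.
    For illustration the statistics come from this one array; a real layer
    uses per-channel statistics over a mini-batch."""
    return gamma * (x - x.mean()) / np.sqrt(x.var() + eps) + beta

def relu(x):
    """ReLU, max(0, x): the non-linearity between layers."""
    return np.maximum(0.0, x)

def max_pool2x2(x):
    """Keep the maximum of each non-overlapping 2x2 window (odd edges dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

rng = np.random.default_rng(0)
patch = rng.random((6, 6))                    # toy grey-scale lip patch
kernel = np.array([[1.0, 0.0, -1.0]] * 3)     # hand-made vertical-edge filter
features = max_pool2x2(relu(batch_norm(conv2d(patch, kernel))))
print(features.shape)  # (2, 2)
```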


CNN Architecture: Types of Layers

Fully connected input layer: Takes the output of the previous layers and flattens it into a single vector that can be the input to the next stage.

Fully connected layer: Takes the inputs from the feature analysis and applies weights to predict the correct label.

Fully connected output layer: Gives the final probabilities for each label.
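The fully connected stages can be sketched as flatten, dense layer with ReLU, then a dense output layer followed by softmax to produce label probabilities. The layer sizes, the three output labels and the random weights are all hypothetical placeholders.

```python
import numpy as np

def dense(x, W, b):
    """Fully connected layer: each output is a weighted sum of all inputs."""
    return W @ x + b

def softmax(z):
    """Map scores to probabilities; subtracting the max avoids overflow."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

rng = np.random.default_rng(1)
feature_maps = rng.random((4, 2, 2))          # e.g. four pooled 2x2 CNN maps
x = feature_maps.reshape(-1)                  # input stage: flatten to 16 values
h = np.maximum(0.0, dense(x, rng.standard_normal((8, 16)), np.zeros(8)))  # hidden FC + ReLU
p = softmax(dense(h, rng.standard_normal((3, 8)), np.zeros(3)))           # output FC, 3 labels
print(p.round(3), p.sum())
```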


Bidirectional Long Short-Term Memory

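BLSTM: One LSTM processes the sequence forward in time and another processes it backward, so the output layer can use information from past states (via the forward pass) and future states (via the backward pass) simultaneously.

They do not require the input data to be of fixed length, and future input information is reachable from the current state.

BLSTMs are especially useful for extracting features that depend on the context of the input.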
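A minimal NumPy sketch of a single-layer BLSTM: one LSTM runs over the sequence in order, a second runs over the reversed sequence, and their hidden states are concatenated per time step. The gate ordering, the 0.1 weight scale and all sizes are assumptions for the example, not the configuration used in AV Match.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm(xs, W, U, b):
    """Run a single-layer LSTM over xs (T, D_in); return hidden states (T, H).
    W: (4H, D_in), U: (4H, H), b: (4H,), gates stacked as [input, forget, cell, output]."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    out = []
    for x in xs:
        z = W @ x + U @ h + b
        i, f = sigmoid(z[:H]), sigmoid(z[H:2 * H])
        g, o = np.tanh(z[2 * H:3 * H]), sigmoid(z[3 * H:])
        c = f * c + i * g          # update the cell memory
        h = o * np.tanh(c)         # expose a gated view of it
        out.append(h)
    return np.array(out)

def blstm(xs, fwd_params, bwd_params):
    """Concatenate a forward pass (past context) and a backward pass over the
    reversed sequence (future context), re-aligned to the original time order."""
    h_fwd = lstm(xs, *fwd_params)
    h_bwd = lstm(xs[::-1], *bwd_params)[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)

def init_params(rng, d_in, hidden):
    """Small random weights for one direction (hypothetical initialisation)."""
    return (0.1 * rng.standard_normal((4 * hidden, d_in)),
            0.1 * rng.standard_normal((4 * hidden, hidden)),
            np.zeros(4 * hidden))

rng = np.random.default_rng(0)
T, D, H = 5, 3, 4
xs = rng.standard_normal((T, D))
y = blstm(xs, init_params(rng, D, H), init_params(rng, D, H))
print(y.shape)  # (5, 8)
```

Changing only the last input frame leaves the forward half of the output at t = 0 unchanged but alters the backward half, which is the "future context" property described above.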


Mask Correction With Audio–Visual Matching

Similarities are computed between the audio streams separated with the predicted masks and the visual streams.

The AV Match model learns the relative similarity of matched and mismatched audio and visual streams by training with a triplet loss, with the margin set to m = 1 empirically.

From these similarities, a binary sequence is obtained that decides whether the masks predicted by the audio-only model are permuted.
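The exact equations on this slide are not reproduced here, so the following is only a hedged sketch of the idea for two speakers. It assumes (1) a standard hinge-style triplet loss on inner-product similarity with margin m = 1, and (2) a per-frame rule that sets the binary decision to 1 when the swapped audio-visual pairing is more similar than the current one. Both forms and all function names are illustrative assumptions, not the authors' formulas.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, m=1.0):
    """Hinge triplet loss on inner-product similarity (assumed form): the matched
    pair must be more similar than the mismatched pair by at least the margin m."""
    return max(0.0, m - anchor @ positive + anchor @ negative)

def permutation_decisions(sim):
    """sim: (T, 2, 2), sim[t, i, j] = similarity of separated audio i and
    visual stream j at frame t. Returns b[t] = 1 if swapping the two masks at
    frame t gives a better audio-visual match than keeping them."""
    keep = sim[:, 0, 0] + sim[:, 1, 1]
    swap = sim[:, 0, 1] + sim[:, 1, 0]
    return (swap > keep).astype(int)

def correct_masks(masks, b):
    """masks: (2, T, F) from the audio-only model; swap the two masks at
    every frame where b[t] == 1."""
    out = masks.copy()
    out[:, b == 1] = masks[::-1][:, b == 1]
    return out

sim = np.array([[[0.9, 0.1], [0.2, 0.8]],    # frame 0: audio 0 matches visual 0
                [[0.1, 0.9], [0.7, 0.3]]])   # frame 1: the labels look swapped
b = permutation_decisions(sim)
masks = np.stack([np.ones((2, 3)), np.zeros((2, 3))])   # (2 speakers, T=2, F=3)
fixed = correct_masks(masks, b)
print(b, fixed[0])
```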


Experiments and Results


Experiments:

We carry out experiments on 2-speaker mixtures of the WSJ0 and GRID datasets.

We also show, quantitatively and qualitatively, that the proposed AV Match model achieves high performance even for same-gender mixtures.
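The slides do not describe how the 2-speaker mixtures were generated. As a hedged illustration, a two-speaker mixture is commonly formed by rescaling one utterance relative to the other at a chosen signal-to-noise ratio and summing; the 2.5 dB value and the random stand-in signals below are placeholders.

```python
import numpy as np

def mix_at_snr(s1, s2, snr_db):
    """Rescale s2 so that the power ratio P(s1)/P(s2) equals snr_db (in dB),
    then add the two signals. Returns the mixture and the rescaled s2."""
    p1, p2 = np.mean(s1 ** 2), np.mean(s2 ** 2)
    s2 = s2 * np.sqrt(p1 / (p2 * 10 ** (snr_db / 10)))
    return s1 + s2, s2

rng = np.random.default_rng(0)
s1 = rng.standard_normal(16000)   # stand-ins for two 1 s utterances at 16 kHz
s2 = rng.standard_normal(16000)
mix, s2_scaled = mix_at_snr(s1, s2, snr_db=2.5)
print(10 * np.log10(np.mean(s1 ** 2) / np.mean(s2_scaled ** 2)))
```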


Separation results on 2 speaker mixtures

Figure: Results on 2-speaker mixtures


Conclusions based on the above table:

The proposed AVDC model outperforms the audio-based DC on both the GRID and WSJ0 datasets.

The proposed method improves the separation quality by a large margin on the GRID dataset.

In same-gender mixtures, visual information from the lip regions lets us trace the speakers better, thus relieving the source permutation problem.

We also observe that median filtering improves the performance.
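The slides do not say exactly what is median filtered. One plausible reading, assumed here, is that the per-frame binary permutation decisions are smoothed so that isolated flips are removed. A minimal NumPy sketch with a window of 3 frames (an arbitrary choice):

```python
import numpy as np

def median_filter_1d(b, k=3):
    """Sliding-window median of a 1-D sequence (odd window k, edge padding)."""
    if k % 2 == 0:
        raise ValueError("window length k must be odd")
    padded = np.pad(b, k // 2, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, k)
    return np.median(windows, axis=1).astype(b.dtype)

# Hypothetical per-frame decisions (1 = swap the two masks at that frame).
b = np.array([0, 0, 1, 0, 0, 1, 1, 0, 1, 1, 1])
print(median_filter_1d(b))  # [0 0 0 0 0 1 1 1 1 1 1]
```

The isolated 1 at frame 2 and the isolated 0 at frame 7 are both removed, leaving a single switch point, which is what one would expect if speaker-label swaps persist over several frames.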


Separation results on 2 speaker mixtures

Figure: Improvement in SDR of different settings of AV Match over the DC baseline
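For reading the SDR-improvement figure, a simple form of the signal-to-distortion ratio is 10 log10(||s||^2 / ||s - s_hat||^2), and the improvement is the SDR of the corrected estimate minus that of the baseline. This is a simplified sketch: the standard BSS Eval SDR additionally lets the target component be a filtered version of the reference. The toy signals are hypothetical.

```python
import numpy as np

def sdr_db(reference, estimate):
    """Simple SDR in dB: 10 log10(||s||^2 / ||s - s_hat||^2)."""
    err = reference - estimate
    return 10 * np.log10(np.sum(reference ** 2) / np.sum(err ** 2))

s = np.array([3.0, 4.0])
baseline = s + np.array([0.6, 0.8])     # hypothetical DC-only estimate
corrected = s + np.array([0.3, 0.4])    # hypothetical corrected estimate
improvement = sdr_db(s, corrected) - sdr_db(s, baseline)
print(round(improvement, 2))  # 6.02
```

Halving the error amplitude quarters the error energy, which corresponds to an improvement of 10 log10(4), about 6 dB.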


Separation results on 2 speaker mixtures

Figure: Results on the GRID-Extreme dataset


Conclusion


Conclusion

The proposed AV Match model successfully corrects the permutation problem in the masks.

The proposed approach is effective when audio-only separation performs poorly.

The AV Match model is trained independently of the audio-only separation model.


References


References

J. R. Hershey, Z. Chen, J. Le Roux, and S. Watanabe, “Deep clustering: Discriminative embeddings for segmentation and separation,” in Proc. 41st Int. Conf. Acoust., Speech, Signal Process., 2016, pp. 31–35.

Y. Isik, J. Le Roux, Z. Chen, S. Watanabe, and J. R. Hershey, “Single-channel multi-speaker separation using deep clustering,” in Proc. Interspeech, 2016, pp. 545–549.

A. Torfi, S. M. Iranmanesh, N. Nasrabadi, and J. Dawson, “3D convolutional neural networks for cross audio-visual matching recognition,” IEEE Access, vol. 5, pp. 22081–22091, 2017.

J. S. Chung, A. Senior, O. Vinyals, and A. Zisserman, “Lip reading sentences in the wild,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2017, pp. 3444–3453.


Thank You


S-ar putea să vă placă și

- Listen and Look: Audio-Visual Matching Assisted Speech Source SeparationDocument14 paginiListen and Look: Audio-Visual Matching Assisted Speech Source SeparationMANE POOJAÎncă nu există evaluări

- Stereo VisionDocument214 paginiStereo Visionvandung2290Încă nu există evaluări

- Vision Transformer Based Audio Classification Using Patch-Level Feature FusionDocument5 paginiVision Transformer Based Audio Classification Using Patch-Level Feature FusioncacaÎncă nu există evaluări

- MHD202-Semantic Segmentation and Mapping of Traffic Scene-Presentation SlidesDocument32 paginiMHD202-Semantic Segmentation and Mapping of Traffic Scene-Presentation SlidesBHAVESH S (Alumni)Încă nu există evaluări

- Government College of Engineering Aurangabad: Submitted BYDocument22 paginiGovernment College of Engineering Aurangabad: Submitted BYPrathamesh PendamÎncă nu există evaluări

- Digital Image Noise Estimation Using DWT TIP.2021.3049961Document11 paginiDigital Image Noise Estimation Using DWT TIP.2021.3049961VijayKumar LokanadamÎncă nu există evaluări

- Subsequent Frame ProphecyDocument10 paginiSubsequent Frame ProphecyIJRASETPublicationsÎncă nu există evaluări

- Development of Video Fusion Algorithm at Frame Level For Removal of Impulse NoiseDocument6 paginiDevelopment of Video Fusion Algorithm at Frame Level For Removal of Impulse NoiseIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalÎncă nu există evaluări

- Jurnal Teknologi: P W M I F Fpga H L L CDocument5 paginiJurnal Teknologi: P W M I F Fpga H L L CBalaji VenkataramanÎncă nu există evaluări

- Improved Methodology For Squeak & Rattle Analysis With Abaqus and Correlation With Test ResultsDocument11 paginiImproved Methodology For Squeak & Rattle Analysis With Abaqus and Correlation With Test ResultsJuan PerezÎncă nu există evaluări

- Image Fusion of San Francisco Bay SAR Images Based On ADWT With Optimization TechniqueDocument6 paginiImage Fusion of San Francisco Bay SAR Images Based On ADWT With Optimization TechniqueDOKKU LAKSHMI PAVANIÎncă nu există evaluări

- Image Denoising Using Ica Technique: IPASJ International Journal of Electronics & Communication (IIJEC)Document5 paginiImage Denoising Using Ica Technique: IPASJ International Journal of Electronics & Communication (IIJEC)International Journal of Application or Innovation in Engineering & ManagementÎncă nu există evaluări

- Mobile CommunicationDocument22 paginiMobile CommunicationPriyanka DuttaÎncă nu există evaluări

- Updated PPT presentation-ISA-1 Phase-2Document27 paginiUpdated PPT presentation-ISA-1 Phase-2Aditya KumarÎncă nu există evaluări

- A Novel Wavelet Based Threshold Selection Technique For Image DenoisingDocument4 paginiA Novel Wavelet Based Threshold Selection Technique For Image DenoisingjebileeÎncă nu există evaluări

- Machine Learning Based Speech Aid For Silent CommunicationDocument31 paginiMachine Learning Based Speech Aid For Silent CommunicationLaxman JakatiÎncă nu există evaluări

- Acoustic Scene ClassificationDocument6 paginiAcoustic Scene ClassificationArunkumar KanthiÎncă nu există evaluări

- Image De-Noising Using Convolutional Variational AutoencodersDocument10 paginiImage De-Noising Using Convolutional Variational AutoencodersIJRASETPublicationsÎncă nu există evaluări

- JCT3V-G1100 (CTC)Document7 paginiJCT3V-G1100 (CTC)Mario CordinaÎncă nu există evaluări

- Indian Currency Detection For The Blind Using Matlab IJERTCONV3IS05034Document6 paginiIndian Currency Detection For The Blind Using Matlab IJERTCONV3IS05034Bhaskar Rao PÎncă nu există evaluări

- Detection of Power Grid Synchronization Failure by Sensing Bad Voltage and FrequencyDocument5 paginiDetection of Power Grid Synchronization Failure by Sensing Bad Voltage and FrequencyAnonymous kw8Yrp0R5rÎncă nu există evaluări

- Sequence Matching TechniquesDocument8 paginiSequence Matching TechniquesselimarikanÎncă nu există evaluări

- A Hybrid Transformation Technique For Advanced Video Coding: M. Ezhilarasan, P. ThambiduraiDocument7 paginiA Hybrid Transformation Technique For Advanced Video Coding: M. Ezhilarasan, P. ThambiduraiUsman TariqÎncă nu există evaluări

- Sensors 23 03282 v2Document22 paginiSensors 23 03282 v2corneli@Încă nu există evaluări

- Convolutional Autoencoder For Image DenoisingDocument11 paginiConvolutional Autoencoder For Image DenoisingUMT Artificial Intelligence Review (UMT-AIR)Încă nu există evaluări

- Discrete Wavelet Transform Based Image Fusion and De-Noising in FpgaDocument10 paginiDiscrete Wavelet Transform Based Image Fusion and De-Noising in FpgaRudresh RakeshÎncă nu există evaluări

- Fmed 09 864879Document11 paginiFmed 09 864879804390887Încă nu există evaluări

- Stereo Vision PHD ThesisDocument7 paginiStereo Vision PHD Thesisheidiperrypittsburgh100% (1)

- 2014 - Howison - GPU-accelerated Denoise of 3D Magnetic Resonant ImagesDocument12 pagini2014 - Howison - GPU-accelerated Denoise of 3D Magnetic Resonant ImagesMeo MeoÎncă nu există evaluări

- Jot 88 2 83Document4 paginiJot 88 2 83Shit ShitÎncă nu există evaluări

- Vision Transformer Adapter For Dense PredictionsDocument20 paginiVision Transformer Adapter For Dense Predictionsmarkus.aureliusÎncă nu există evaluări

- Deep Affine Motion Compensation Network For Inter Prediction in VVCDocument11 paginiDeep Affine Motion Compensation Network For Inter Prediction in VVCMateus AraújoÎncă nu există evaluări

- Crack Detection of Wall Using MATLABDocument5 paginiCrack Detection of Wall Using MATLABVIVA-TECH IJRIÎncă nu există evaluări

- A Proposed Framework To De-Noise Medical Images Based On Convolution Neural NetworkDocument7 paginiA Proposed Framework To De-Noise Medical Images Based On Convolution Neural NetworkNIET Journal of Engineering & Technology(NIETJET)Încă nu există evaluări

- IJIIDSDocument15 paginiIJIIDSEvent DecemberÎncă nu există evaluări

- Possion Noise Removal in MRI Data SetsDocument35 paginiPossion Noise Removal in MRI Data Setskpkarthi80Încă nu există evaluări

- Automated Detection of Welding Defects in Pipelines From 2017 NDT E InternDocument7 paginiAutomated Detection of Welding Defects in Pipelines From 2017 NDT E InternAndres CasteloÎncă nu există evaluări

- 2018 Winter CGR 3I - M - 22318 - CODocument25 pagini2018 Winter CGR 3I - M - 22318 - CO91.Tejaswini PatilÎncă nu există evaluări

- Question Paper Code:: Reg. No.Document3 paginiQuestion Paper Code:: Reg. No.ShanmugapriyaVinodkumarÎncă nu există evaluări

- Nietjet 0602S 2018 003Document5 paginiNietjet 0602S 2018 003NIET Journal of Engineering & Technology(NIETJET)Încă nu există evaluări

- Advances in Colour Transfer: Francois PitiéDocument19 paginiAdvances in Colour Transfer: Francois PitiéaaÎncă nu există evaluări

- Synopsis PDF Batch40Document11 paginiSynopsis PDF Batch40vgmanjunathaÎncă nu există evaluări

- Gopika Internship ReportDocument30 paginiGopika Internship ReportC. SwethaÎncă nu există evaluări

- Alpatov 2020Document4 paginiAlpatov 2020ujjwalÎncă nu există evaluări

- HoT - 8 Motion Estimation-RefaelDocument32 paginiHoT - 8 Motion Estimation-RefaelPriya GayathriÎncă nu există evaluări

- Setup Patterns For Display Measurements-Version 1.0: NISTIR 6758Document15 paginiSetup Patterns For Display Measurements-Version 1.0: NISTIR 6758afkq99861Încă nu există evaluări

- Improvement in Outdoor Sound Source Detection Using A Quadrotor-Embedded Microphone ArrayDocument6 paginiImprovement in Outdoor Sound Source Detection Using A Quadrotor-Embedded Microphone ArrayOSCARIN1001Încă nu există evaluări

- Multimedia System (New Additional) Amar PanchalDocument35 paginiMultimedia System (New Additional) Amar Panchalnishit91Încă nu există evaluări

- 1 s2.0 S0957417423011302 MainDocument11 pagini1 s2.0 S0957417423011302 Mainranjani093Încă nu există evaluări

- Development of Quick Algorithm For Wipe TransitionDocument8 paginiDevelopment of Quick Algorithm For Wipe TransitionInternational Journal of Application or Innovation in Engineering & ManagementÎncă nu există evaluări

- Applicability of Scaling Laws To Vision Encoding ModelsDocument7 paginiApplicability of Scaling Laws To Vision Encoding ModelsDaniele MarconiÎncă nu există evaluări

- Experiment No. 3Document5 paginiExperiment No. 3L3 03 Vedant BhagwanÎncă nu există evaluări

- 1318 قانعیDocument6 pagini1318 قانعیm1.nourianÎncă nu există evaluări

- Musical Tones Classification Using Machine LearningDocument6 paginiMusical Tones Classification Using Machine LearningIJRASETPublicationsÎncă nu există evaluări

- EOC 1 (Q) - Sesi 2 2021-2022Document3 paginiEOC 1 (Q) - Sesi 2 2021-2022HAAVINESHÎncă nu există evaluări

- Restoration of Fast Moving Objects: Under The Guidance of Ms.P.DEVI Assistant ProfessorDocument50 paginiRestoration of Fast Moving Objects: Under The Guidance of Ms.P.DEVI Assistant ProfessorParvathareddy NagaVarun kumarÎncă nu există evaluări

- Projectreport (2) - 1 - 111Document56 paginiProjectreport (2) - 1 - 111Melinda BerbÎncă nu există evaluări

- General Information: Zte - PTP - Synce - Test-2 - OltDocument4 paginiGeneral Information: Zte - PTP - Synce - Test-2 - OltAsela BamunuarachchiÎncă nu există evaluări

- GeneratingVisualDynamics fromSoundandContextDocument40 paginiGeneratingVisualDynamics fromSoundandContextDenis BeliÎncă nu există evaluări

- Design and Implementation of Portable Impedance AnalyzersDe la EverandDesign and Implementation of Portable Impedance AnalyzersÎncă nu există evaluări

- Top Business Directories in Lajpat Nagar - Best Directory Business Delhi Near Me - JustdialDocument7 paginiTop Business Directories in Lajpat Nagar - Best Directory Business Delhi Near Me - Justdialblr.visheshÎncă nu există evaluări

- IA Compressor & SystemDocument51 paginiIA Compressor & SystemKazi Irfan100% (1)

- Mock Exam Answers enDocument23 paginiMock Exam Answers enluiskÎncă nu există evaluări

- DP PaperDocument31 paginiDP Paperaymane.oubellaÎncă nu există evaluări

- Problem Gambling Worldwide: An Update and Systematic Review of Empirical Research (2000 - 2015)Document22 paginiProblem Gambling Worldwide: An Update and Systematic Review of Empirical Research (2000 - 2015)Adre CactussoÎncă nu există evaluări

- Group 3 - SRM Project ReportDocument10 paginiGroup 3 - SRM Project ReportAkash IyappanÎncă nu există evaluări

- C-Bus Basics Training Manual - Vol 1Document64 paginiC-Bus Basics Training Manual - Vol 1jakkyjeryÎncă nu există evaluări

- Preparation For Speaking 2Document3 paginiPreparation For Speaking 2Em bé MậpÎncă nu există evaluări

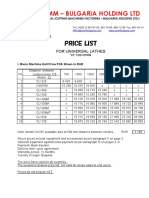

- ZMM - Bulgaria Holding LTD: Price ListDocument6 paginiZMM - Bulgaria Holding LTD: Price ListJacques LopezÎncă nu există evaluări

- NBK TA Brochure 2016-EmailDocument11 paginiNBK TA Brochure 2016-EmailPietrus NimbusÎncă nu există evaluări

- 47 - Renuka C - Bamboo Assignment 2Document14 pagini47 - Renuka C - Bamboo Assignment 2Renuka ChalikwarÎncă nu există evaluări

- Hearing Aid Amplification: Sandlin's Textbook ofDocument10 paginiHearing Aid Amplification: Sandlin's Textbook ofAmit SinghÎncă nu există evaluări

- Curriculum SAP GRC Risk Management - Consultant TrainingDocument2 paginiCurriculum SAP GRC Risk Management - Consultant TraininglawalÎncă nu există evaluări

- Management: Organization StructureDocument26 paginiManagement: Organization StructureAlvin OroscoÎncă nu există evaluări

- Buoyant FoundationDocument2 paginiBuoyant FoundationKaushal MoreÎncă nu există evaluări

- 5 Catalogue Winway DBB Valve-MinDocument19 pagini5 Catalogue Winway DBB Valve-MinAsyadullah Al-FatihÎncă nu există evaluări

- 18me710 - Industrial Engineering: Hybrid LayoutDocument21 pagini18me710 - Industrial Engineering: Hybrid LayoutHALO MC NOBLE ACTUALÎncă nu există evaluări

- How To Jailbreak PS3Document21 paginiHow To Jailbreak PS3sandystaysÎncă nu există evaluări

- Computer Training Institute: Project Report ofDocument9 paginiComputer Training Institute: Project Report ofEMMANUEL TV INDIAÎncă nu există evaluări

- S. Loganathan, P. Balaji-2020Document8 paginiS. Loganathan, P. Balaji-2020Mayang SandyÎncă nu există evaluări

- Electrical Equipment in Hazardous Areas (Eeha) Inspection StandardDocument10 paginiElectrical Equipment in Hazardous Areas (Eeha) Inspection StandardSaber AbdelaalÎncă nu există evaluări

- G.12 Mon ExamDocument8 paginiG.12 Mon ExamEmmanuel Maria0% (1)

- Directional (Yaw) Stability AnalysisDocument7 paginiDirectional (Yaw) Stability AnalysisHarshini AichÎncă nu există evaluări

- BOQ 5400MT - Measurement.Document6 paginiBOQ 5400MT - Measurement.Danish JavedÎncă nu există evaluări

- MIT6 004s09 Tutor04 SolDocument14 paginiMIT6 004s09 Tutor04 SolAbhijith RanguduÎncă nu există evaluări

- Hackster News - Hackster - IoDocument4 paginiHackster News - Hackster - IomrjunoonÎncă nu există evaluări

- Basic ElectronicsDocument1 paginăBasic ElectronicsBibin K VijayanÎncă nu există evaluări

- Honda PCX Manual EN PDFDocument142 paginiHonda PCX Manual EN PDFbutata02Încă nu există evaluări

- Formal and Informal Expressions: English by Jaideep SirDocument6 paginiFormal and Informal Expressions: English by Jaideep SirAdesh RajÎncă nu există evaluări

- Boss GT 1000 Sound List en 70163Document10 paginiBoss GT 1000 Sound List en 70163muhibudiafrizaalÎncă nu există evaluări