CSE5230 Tutorial: The Naïve Bayes Classifier

Monash University

Faculty of Information Technology

CSE5230 Data Mining

Semester 2, 2004

The aim of this exercise is to learn how to construct and use a Naïve Bayes Classifier for data with categorical attributes.

1 The weather data

In this tutorial, we will use the same weather dataset that was used in the handouts on the ID3 algorithm and the Naïve Bayes Classifier. The data is shown in Table 1.

| outlook  | temperature | humidity | windy | play |
|----------|-------------|----------|-------|------|
| sunny    | hot         | high     | false | no   |
| sunny    | hot         | high     | true  | no   |
| overcast | hot         | high     | false | yes  |
| rainy    | mild        | high     | false | yes  |
| rainy    | cool        | normal   | false | yes  |
| rainy    | cool        | normal   | true  | no   |
| overcast | cool        | normal   | true  | yes  |
| sunny    | mild        | high     | false | no   |
| sunny    | cool        | normal   | false | yes  |
| rainy    | mild        | normal   | false | yes  |
| sunny    | mild        | normal   | true  | yes  |
| overcast | mild        | high     | true  | yes  |
| overcast | hot         | normal   | false | yes  |
| rainy    | mild        | high     | true  | no   |

Table 1: The weather data (Witten and Frank, 1999, p. 9).

In this dataset, there are five categorical attributes: outlook, temperature, humidity, windy, and play. We are interested in building a system that will enable us to decide whether or not to play the game on the basis of the weather conditions, i.e. we wish to classify the data into two classes, one where the attribute play has the value “yes”, and the other where it has the value “no”. This classification will be based on the values of the attributes outlook, temperature, humidity, and windy.

David McG. Squire, August 19, 2004

2 The Naïve Bayes classifier

Recall the explanation of the Naïve Bayes classifier given in lecture 5. We consider each data instance to be an n-dimensional vector of attribute values:

$$X = (x_1, x_2, x_3, \ldots, x_n). \tag{1}$$

For the weather data, n = 4. The first data instance in Table 1 would be written X = (sunny, hot, high, false).

In a Bayesian classifier which assigns each data instance to one of m classes $C_1, C_2, \ldots, C_m$, a data instance X is assigned to the class for which it has the highest posterior probability conditioned on X, i.e. the class which is most probable given the prior probabilities of the classes and the data X (Duda et al., 2000). That is to say, X is assigned to class $C_i$ if and only if

$$P(C_i \mid X) > P(C_j \mid X) \quad \text{for all } j \text{ such that } 1 \le j \le m,\ j \ne i. \tag{2}$$

For the weather data, m = 2, since there are two classes.

According to Bayes' theorem,

$$P(C_i \mid X) = \frac{P(X \mid C_i)\,P(C_i)}{P(X)}. \tag{3}$$

Since $P(X)$ is a normalising factor which is equal for all classes, we need only maximise the numerator $P(X \mid C_i)\,P(C_i)$ in order to do the classification.

We can estimate both the values we need, $P(X \mid C_i)$ and $P(C_i)$, from the data used to build the classifier.

2.1 Estimating the class prior probabilities

We can estimate the prior probabilities of the classes from their frequencies in the training data. Consider the weather data in Table 1. Let us label the class where play has the value “yes” as $C_1$, and the class where it has the value “no” as $C_2$. From the frequencies in the data, we estimate:

$$P(\text{yes}) = P(C_1) = \frac{9}{14}$$

$$P(\text{no}) = P(C_2) = \frac{5}{14}$$

These are the prior probabilities of the classes, i.e. the probabilities before we know anything about a

given data instance.
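The frequency estimates above can be reproduced with a few lines of Python. This is only a minimal sketch and not part of the original tutorial: the names `WEATHER`, `rows` and `priors` are illustrative choices, and the data is simply Table 1 typed in row by row.

```python
from collections import Counter
from fractions import Fraction

# Table 1, one instance per line: outlook temperature humidity windy play
WEATHER = """\
sunny hot high false no
sunny hot high true no
overcast hot high false yes
rainy mild high false yes
rainy cool normal false yes
rainy cool normal true no
overcast cool normal true yes
sunny mild high false no
sunny cool normal false yes
rainy mild normal false yes
sunny mild normal true yes
overcast mild high true yes
overcast hot normal false yes
rainy mild high true no
"""
rows = [line.split() for line in WEATHER.splitlines()]

# The class label (play) is the last column; count how often each value occurs.
class_counts = Counter(row[-1] for row in rows)

# Prior of each class = its relative frequency in the training data.
priors = {label: Fraction(count, len(rows)) for label, count in class_counts.items()}

print(priors["yes"], priors["no"])  # 9/14 5/14
```

Exact fractions are used here so that the output can be compared directly with the hand calculation.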

2.2 Estimating the probability of the data given the class

In general, it can be very computationally expensive to compute the $P(X \mid C_i)$. If each component $x_k$ of X can have one of r values, there are $r^n$ combinations to consider for each of the m classes.
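As a rough illustration (a sketch based on counting the distinct values in Table 1, not a figure taken from the handouts): outlook and temperature each take three values, while humidity and windy each take two. A full joint estimate of the probability of the data given the class would therefore have to cover

$$3 \times 3 \times 2 \times 2 = 36$$

attribute combinations per class. With only 14 instances in Table 1, most of those combinations cannot appear in the data at all. Estimating each attribute separately, by contrast, requires only

$$3 + 3 + 2 + 2 = 10$$

probabilities per class.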

In order to simplify the calculation, the assumption of class conditional independence is made, i.e. that for each class, the attributes are assumed to be independent. The classifier resulting from this assumption is known as the Naïve Bayes classifier. The assumption allows us to write

$$P(X \mid C_i) = \prod_{k=1}^{n} P(x_k \mid C_i), \tag{4}$$

i.e. the product of the probabilities of each of the values of the attributes of X for the given class $C_i$.

To see how this works, let us consider an example. What is the probability of outlook = sunny given that play = no? Of the five cases where play = no, there are three where outlook = sunny, thus $P(\text{outlook} = \text{sunny} \mid \text{play} = \text{no}) = 3/5$. In the notation of Equation 4, we may write

$$P(x_1 = \text{sunny} \mid C_2) = \frac{3}{5}.$$
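The same count can be made programmatically. The sketch below is an illustration only: the helper `conditional` and the list `ATTRIBUTES` are hypothetical names introduced here, and the estimate is the plain relative frequency described above.

```python
from fractions import Fraction

# Table 1, one instance per line: outlook temperature humidity windy play
WEATHER = """\
sunny hot high false no
sunny hot high true no
overcast hot high false yes
rainy mild high false yes
rainy cool normal false yes
rainy cool normal true no
overcast cool normal true yes
sunny mild high false no
sunny cool normal false yes
rainy mild normal false yes
sunny mild normal true yes
overcast mild high true yes
overcast hot normal false yes
rainy mild high true no
"""
rows = [line.split() for line in WEATHER.splitlines()]
ATTRIBUTES = ["outlook", "temperature", "humidity", "windy"]


def conditional(attribute, value, play):
    """Estimate P(attribute = value | play) as a relative frequency."""
    k = ATTRIBUTES.index(attribute)
    # Restrict attention to the instances of the given class...
    in_class = [row for row in rows if row[-1] == play]
    # ...and count how many of them have the requested attribute value.
    matches = sum(1 for row in in_class if row[k] == value)
    return Fraction(matches, len(in_class))


print(conditional("outlook", "sunny", "no"))  # 3/5
```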

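Now we will consider how to put these attribute value probabilities together to calculate a $P(X \mid C_i)$ according to Equation 4. Let us consider the probability of the first data instance in Table 1, given the class $C_2$ (i.e. given that play = no). We have

$$\begin{aligned}
P(X = (\text{sunny}, \text{hot}, \text{high}, \text{false}) \mid C_2) &= P(x_1 = \text{sunny} \mid C_2) \times P(x_2 = \text{hot} \mid C_2) \\
&\quad \times P(x_3 = \text{high} \mid C_2) \times P(x_4 = \text{false} \mid C_2) \\
&= \frac{3}{5} \times \frac{2}{5} \times \frac{4}{5} \times \frac{2}{5} \\
&= \frac{48}{625}.
\end{aligned}$$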
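The product in Equation 4 can be checked with a short Python sketch. The function name `likelihood` is an assumption made for this illustration, and `math.prod` requires Python 3.8 or later.

```python
from fractions import Fraction
from math import prod

# Table 1, one instance per line: outlook temperature humidity windy play
WEATHER = """\
sunny hot high false no
sunny hot high true no
overcast hot high false yes
rainy mild high false yes
rainy cool normal false yes
rainy cool normal true no
overcast cool normal true yes
sunny mild high false no
sunny cool normal false yes
rainy mild normal false yes
sunny mild normal true yes
overcast mild high true yes
overcast hot normal false yes
rainy mild high true no
"""
rows = [line.split() for line in WEATHER.splitlines()]


def likelihood(x, play):
    """Estimate P(X = x | play) under class conditional independence (Equation 4)."""
    in_class = [row for row in rows if row[-1] == play]
    # One factor per attribute: the fraction of class members sharing x's value.
    return prod(
        Fraction(sum(row[k] == value for row in in_class), len(in_class))
        for k, value in enumerate(x)
    )


x = ("sunny", "hot", "high", "false")
print(likelihood(x, "no"))  # 48/625
```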

We can put this together with our known prior probability for class $C_2$ to obtain, using Equation 3,

$$\begin{aligned}
P(C_2 \mid X = (\text{sunny}, \text{hot}, \text{high}, \text{false})) &= \frac{P(X = (\text{sunny}, \text{hot}, \text{high}, \text{false}) \mid C_2)\,P(C_2)}{P(X)} \\
&= \frac{\frac{48}{625} \times \frac{5}{14}}{P(X)} \\
&= \frac{240}{8750} \div P(X).
\end{aligned}$$

Remember that we don’t need to calculate $P(X)$ since it is constant for all classes.
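Putting the pieces together, the numerator of Equation 3 can be computed for any class and the largest value picked. The following is a hedged sketch, not the tutorial's own code: `score` and `classify` are hypothetical names, and the probabilities are the plain frequency estimates used in the hand calculation.

```python
from fractions import Fraction
from math import prod

# Table 1, one instance per line: outlook temperature humidity windy play
WEATHER = """\
sunny hot high false no
sunny hot high true no
overcast hot high false yes
rainy mild high false yes
rainy cool normal false yes
rainy cool normal true no
overcast cool normal true yes
sunny mild high false no
sunny cool normal false yes
rainy mild normal false yes
sunny mild normal true yes
overcast mild high true yes
overcast hot normal false yes
rainy mild high true no
"""
rows = [line.split() for line in WEATHER.splitlines()]


def score(x, play):
    """Numerator of Equation 3: P(X = x | play) * P(play), without dividing by P(X)."""
    in_class = [row for row in rows if row[-1] == play]
    prior = Fraction(len(in_class), len(rows))
    likelihood = prod(
        Fraction(sum(row[k] == value for row in in_class), len(in_class))
        for k, value in enumerate(x)
    )
    return likelihood * prior


def classify(x):
    """Return the class label with the largest unnormalised posterior."""
    labels = sorted({row[-1] for row in rows})
    return max(labels, key=lambda label: score(x, label))


x = ("sunny", "hot", "high", "false")
print(score(x, "no"))  # 24/875, i.e. 240/8750 in lowest terms
```

Because $P(X)$ is the same for every class, comparing these unnormalised scores gives the same decision as comparing the posteriors themselves.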

3 Questions

You may answer the following questions using calculations done by hand, as above. If you wish, you

may set up an Excel spreadsheet to help, or even write a small program in the language of your choice.

Question 1: Calculate $P(C_1 \mid X = (\text{sunny}, \text{hot}, \text{high}, \text{false}))$. How would the Naïve Bayes classifier classify the data instance X = (sunny, hot, high, false)?

Question 2: Does this agree with the classification given in Table 1 for the data instance X = (sunny, hot, high, false)?

Question 3: Consider a new data instance $X' = (\text{overcast}, \text{cool}, \text{high}, \text{true})$. How would the Naïve Bayes classifier classify $X'$?

Question 4: Some algorithms (e.g. ID3) are able to produce a classifier that classifies the data in Table 1 without errors. Does the Naïve Bayes classifier achieve the same performance? (n.b. This will take some time to compute by hand.)

References

Duda, R. O., Hart, P. E. and Stork, D. G. (2000). Pattern Classification, 2nd edn, Wiley, New York, NY, USA.

Witten, I. H. and Frank, E. (1999). Data Mining: Practical Machine Learning Tools and Techniques with Java Implementations, Morgan Kaufmann, San Francisco, CA, USA. URL: http://www.cs.waikato.ac.nz/~ml/weka/book.html

David McG. Squire, August 19, 2004

S-ar putea să vă placă și

- A-level Maths Revision: Cheeky Revision ShortcutsDe la EverandA-level Maths Revision: Cheeky Revision ShortcutsEvaluare: 3.5 din 5 stele3.5/5 (8)

- Module 1 First WeekDocument5 paginiModule 1 First WeekJellamae Arreza100% (1)

- Psychological Statistics SyllabusDocument4 paginiPsychological Statistics SyllabusGrace Cadag100% (1)

- Stanford University CS 229, Autumn 2014 Midterm ExaminationDocument23 paginiStanford University CS 229, Autumn 2014 Midterm ExaminationErico ArchetiÎncă nu există evaluări

- Practice Midterm 2010Document4 paginiPractice Midterm 2010Erico ArchetiÎncă nu există evaluări

- Unit 3-Anova - Ancova Manova-MancovaDocument11 paginiUnit 3-Anova - Ancova Manova-MancovaRuchi SharmaÎncă nu există evaluări

- Statistical Learning MethodsDocument28 paginiStatistical Learning MethodsGomathi ShanmugamÎncă nu există evaluări

- Midterm 2014Document23 paginiMidterm 2014Zeeshan Ali SayyedÎncă nu există evaluări

- Binomial Theorem NEW BOOKDocument79 paginiBinomial Theorem NEW BOOKPJSIR42Încă nu există evaluări

- Cluster Analysis For Researcher - Charles Romesburg PDFDocument244 paginiCluster Analysis For Researcher - Charles Romesburg PDFanonkumarÎncă nu există evaluări

- Classification and RegressionDocument15 paginiClassification and RegressionBhagirath PrajapatiÎncă nu există evaluări

- 01 Naiv BayesDocument25 pagini01 Naiv BayesZakiatun NufusÎncă nu există evaluări

- INF264 - Exercise 2: 1 InstructionsDocument4 paginiINF264 - Exercise 2: 1 InstructionsSuresh Kumar MukhiyaÎncă nu există evaluări

- Decision Tree MethodsDocument34 paginiDecision Tree Methodsjemal yahyaaÎncă nu există evaluări

- CS 188 Introduction To Artificial Intelligence Spring 2019 Note 6Document14 paginiCS 188 Introduction To Artificial Intelligence Spring 2019 Note 6hellosunshine95Încă nu există evaluări

- Multivariate Probability: 1 Discrete Joint DistributionsDocument10 paginiMultivariate Probability: 1 Discrete Joint DistributionshamkarimÎncă nu există evaluări

- RD05 StatisticsDocument7 paginiRD05 StatisticsأحمدآلزهوÎncă nu există evaluări

- Lectures On Group TheoryDocument58 paginiLectures On Group TheoryUdit VarmaÎncă nu există evaluări

- PSet 8Document2 paginiPSet 8Alexander QuÎncă nu există evaluări

- Lecture 5 Bayesian ClassificationDocument16 paginiLecture 5 Bayesian ClassificationAhsan AsimÎncă nu există evaluări

- 20210913115710D3708 - Session 09-12 Bayes ClassifierDocument30 pagini20210913115710D3708 - Session 09-12 Bayes ClassifierAnthony HarjantoÎncă nu există evaluări

- PMO National Written PDFDocument6 paginiPMO National Written PDFCedrixe MadridÎncă nu există evaluări

- W12SGFEDocument3 paginiW12SGFEhanglusuÎncă nu există evaluări

- Machine Learning and Pattern Recognition Week 3 Intro - ClassificationDocument5 paginiMachine Learning and Pattern Recognition Week 3 Intro - ClassificationzeliawillscumbergÎncă nu există evaluări

- Naive Bayes & Introduction To GaussiansDocument10 paginiNaive Bayes & Introduction To GaussiansMafer ReveloÎncă nu există evaluări

- Unit 6Document126 paginiUnit 6skraoÎncă nu există evaluări

- NotesDocument97 paginiNotesaparna1407Încă nu există evaluări

- ML BayesionBeliefNetwork Lect12 14Document99 paginiML BayesionBeliefNetwork Lect12 14adminÎncă nu există evaluări

- CERN Academic Training Lectures - Practical Statistics For LHC Physicists by Prosper PDFDocument283 paginiCERN Academic Training Lectures - Practical Statistics For LHC Physicists by Prosper PDFkevinchu021195Încă nu există evaluări

- Tutorial3 Q&A 2023-09-08 04 - 44 - 25Document6 paginiTutorial3 Q&A 2023-09-08 04 - 44 - 25darrenseah5530Încă nu există evaluări

- Assign 1Document5 paginiAssign 1darkmanhiÎncă nu există evaluări

- Data Mining and Machine Learning: Fundamental Concepts and AlgorithmsDocument29 paginiData Mining and Machine Learning: Fundamental Concepts and Algorithmss8nd11d UNIÎncă nu există evaluări

- Finite Probability Spaces Lecture NotesDocument13 paginiFinite Probability Spaces Lecture NotesMadhu ShankarÎncă nu există evaluări

- Class Group and Class Number: ! W W L Chen, 1984, 2013Document5 paginiClass Group and Class Number: ! W W L Chen, 1984, 2013Ehab AhmedÎncă nu există evaluări

- Naive BayesDocument25 paginiNaive BayesWina FadhilahÎncă nu există evaluări

- Stats, Mle, and Other Stuff: 1 SevssdDocument10 paginiStats, Mle, and Other Stuff: 1 SevssdJONATHAN LINÎncă nu există evaluări

- Homework 1: Background Test: Due 12 A.M. Tuesday, September 06, 2020Document4 paginiHomework 1: Background Test: Due 12 A.M. Tuesday, September 06, 2020anwer fadelÎncă nu există evaluări

- Ash - Algebraic Number Theory.2003.draftDocument95 paginiAsh - Algebraic Number Theory.2003.draftBobÎncă nu există evaluări

- Ejercicio RaicesDocument9 paginiEjercicio RaicesIVANÎncă nu există evaluări

- Mathematical Tripos: Complete Answers Are Preferred To FragmentsDocument21 paginiMathematical Tripos: Complete Answers Are Preferred To FragmentsKrishna OochitÎncă nu există evaluări

- Notes01 PDFDocument9 paginiNotes01 PDFhabtemariam mollaÎncă nu există evaluări

- Learning From Data: Jörg SchäferDocument33 paginiLearning From Data: Jörg SchäferHamed AryanfarÎncă nu există evaluări

- Classification - Naive BayesDocument17 paginiClassification - Naive BayesDiễm Quỳnh TrầnÎncă nu există evaluări

- HW 1Document7 paginiHW 1Lakhdari AbdelhalimÎncă nu există evaluări

- CS 70 Discrete Mathematics and Probability Theory Spring 2016 Rao and Walrand Note 2 1 ProofsDocument8 paginiCS 70 Discrete Mathematics and Probability Theory Spring 2016 Rao and Walrand Note 2 1 ProofsAyesha NayyerÎncă nu există evaluări

- Sunny Warm Warm Y Rain Cool Warm N Sunny Warm Warm Y Sunny Cool Warm NDocument3 paginiSunny Warm Warm Y Rain Cool Warm N Sunny Warm Warm Y Sunny Cool Warm NCNTT-1C-17 Vương Thị Diệu LinhÎncă nu există evaluări

- PaperII 3Document23 paginiPaperII 3eReader.LeaderÎncă nu există evaluări

- AYESIAN DECISION THEORY ProblemsDocument15 paginiAYESIAN DECISION THEORY ProblemsmohamadÎncă nu există evaluări

- Mathematical Techniques: Revision Notes: DR A. J. BevanDocument5 paginiMathematical Techniques: Revision Notes: DR A. J. BevanRoy VeseyÎncă nu există evaluări

- DM Unit II CH 1finalDocument91 paginiDM Unit II CH 1finalKhaja AhmedÎncă nu există evaluări

- hw1 PDFDocument3 paginihw1 PDF何明涛Încă nu există evaluări

- Homework 1Document7 paginiHomework 1Muhammad MurtazaÎncă nu există evaluări

- Counterproof of The Daddy ConjectureDocument8 paginiCounterproof of The Daddy ConjectureSergio RicossaÎncă nu există evaluări

- Machine Learning and Pattern Recognition Background Selftest AnswersDocument5 paginiMachine Learning and Pattern Recognition Background Selftest AnswerszeliawillscumbergÎncă nu există evaluări

- Class11 PrepDocument7 paginiClass11 Prepverybad19Încă nu există evaluări

- Statistical Data Analysis: PH4515: 1 Course StructureDocument5 paginiStatistical Data Analysis: PH4515: 1 Course StructurePhD LIVEÎncă nu există evaluări

- Machine Learning Lecture 4Document4 paginiMachine Learning Lecture 4chelseaÎncă nu există evaluări

- Unit 5 Problem SetDocument2 paginiUnit 5 Problem SetKaren LuÎncă nu există evaluări

- Maxwell's Distribution For Physics Olympiads: Murad BashirovDocument15 paginiMaxwell's Distribution For Physics Olympiads: Murad BashirovLolTheBobÎncă nu există evaluări

- Naive BayesDocument7 paginiNaive BayesdebanwitaÎncă nu există evaluări

- 05 Nearest Neighbor and Bayesian ClassifiersDocument33 pagini05 Nearest Neighbor and Bayesian ClassifiersOscar WongÎncă nu există evaluări

- Part Two 4 Bayesian StatisticsDocument10 paginiPart Two 4 Bayesian Statisticslolopopo28Încă nu există evaluări

- Tutorial 5 and 6Document5 paginiTutorial 5 and 6Ahmad HaikalÎncă nu există evaluări

- Korchia Calcul RhoDocument12 paginiKorchia Calcul RhosamichaouachiÎncă nu există evaluări

- 02 Time-Based Track Quality IndexDocument16 pagini02 Time-Based Track Quality IndexmuthurajanÎncă nu există evaluări

- Chapter1 5 FVDocument33 paginiChapter1 5 FVDanica Dela CruzÎncă nu există evaluări

- Session 1-Discriminant Analysis-1Document52 paginiSession 1-Discriminant Analysis-1devaki reddyÎncă nu există evaluări

- Resignation Letter of Former Chief Statistician Wayne R. SmithDocument1 paginăResignation Letter of Former Chief Statistician Wayne R. SmithTyler McLeanÎncă nu există evaluări

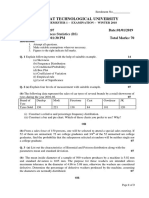

- Gujarat Technological University: Subject Code: Date:01/01/2019 Subject Name: Time: 10:30 AM To 01:30 PM Total Marks: 70Document3 paginiGujarat Technological University: Subject Code: Date:01/01/2019 Subject Name: Time: 10:30 AM To 01:30 PM Total Marks: 70harold_gravity9885Încă nu există evaluări

- Two Cultures of Ecology - HollingDocument4 paginiTwo Cultures of Ecology - HollingEdwinSandovalLeonÎncă nu există evaluări

- Chapter 01 PowerPointDocument18 paginiChapter 01 PowerPointsyafrijalÎncă nu există evaluări

- Research Paper For McaDocument8 paginiResearch Paper For Mcagw1qjeww100% (1)

- Inferential StatisticsDocument29 paginiInferential StatisticsJaico DictaanÎncă nu există evaluări

- Modeling The Frequency and Severity of Auto InsuraDocument24 paginiModeling The Frequency and Severity of Auto InsuraDarwin DcÎncă nu există evaluări

- In Research WritingDocument186 paginiIn Research WritingNichole AlbaracinÎncă nu există evaluări

- Advanced Deep Learning and Transformers - CirrincioneDocument3 paginiAdvanced Deep Learning and Transformers - CirrincioneMohamed Chiheb Ben chaâbaneÎncă nu există evaluări

- Network Traf Fic Classification Using Multiclass Classi FierDocument10 paginiNetwork Traf Fic Classification Using Multiclass Classi FierPrabh KÎncă nu există evaluări

- Latihan Analisis VariansDocument4 paginiLatihan Analisis VariansJessica Loong100% (1)

- Passivity and MotivationDocument10 paginiPassivity and MotivationemysamehÎncă nu există evaluări

- 03 Statistical Inference v0 2 05062022 050648pmDocument18 pagini03 Statistical Inference v0 2 05062022 050648pmSaif ali KhanÎncă nu există evaluări

- F Stat and One Way ANOVADocument22 paginiF Stat and One Way ANOVAana mejicoÎncă nu există evaluări

- R Cheat SheetDocument6 paginiR Cheat SheetReneeXuÎncă nu există evaluări

- Classification ProblemsDocument25 paginiClassification Problemssushanth100% (1)

- LP Chapter 4 Lesson 3 Confidence Interval Estimate of The Population MeanDocument2 paginiLP Chapter 4 Lesson 3 Confidence Interval Estimate of The Population MeanAldrin Dela CruzÎncă nu există evaluări

- Reflection Paper On Frequency DistributionDocument2 paginiReflection Paper On Frequency DistributionRodel EstebanÎncă nu există evaluări

- Assignments of TAD NewDocument11 paginiAssignments of TAD NewNguyễn Hùng CườngÎncă nu există evaluări

- Comp Scaling ExamDocument4 paginiComp Scaling ExamAlexandre GumÎncă nu există evaluări

- Cox Proportional Hazard ModelDocument34 paginiCox Proportional Hazard ModelRIZKA FIDYA PERMATASARI 06211940005004Încă nu există evaluări

- Corporate Governance and Environmental Management: A Study of Quoted Industrial Goods Companies in NigeriaDocument9 paginiCorporate Governance and Environmental Management: A Study of Quoted Industrial Goods Companies in NigeriaIJAR JOURNALÎncă nu există evaluări