Correlation in Random Variables

Lecture 11

Spring 2002

Correlation in Random Variables

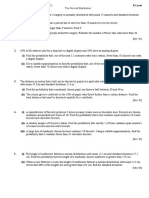

Suppose that an experiment produces two random variables, X and Y. What can we say about the relationship between them?

One of the best ways to visualize the possible relationship is to plot the (X, Y) pair that is produced by several trials of the experiment. An example of correlated samples is shown at the right.


Joint Density Function

The joint behavior of X and Y is fully captured in the joint probability distribution. For a continuous distribution

$$E[X^m Y^n] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x^m y^n f_{XY}(x, y)\,dx\,dy$$

For discrete distributions

$$E[X^m Y^n] = \sum_{x \in S_x}\sum_{y \in S_y} x^m y^n P(x, y)$$
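As an illustration of the discrete formula, the following is a minimal sketch in Python (not the IDL used elsewhere in these notes). The joint probabilities in `pmf` and the function name `joint_moment` are hypothetical, chosen only so that the sums can be checked by hand.

```python
from fractions import Fraction

# Hypothetical joint pmf P(x, y) on S_x = {0, 1}, S_y = {0, 1, 2}.
# The values are illustrative only; they sum to 1.
pmf = {
    (0, 0): Fraction(1, 6), (0, 1): Fraction(1, 6), (0, 2): Fraction(1, 6),
    (1, 0): Fraction(1, 12), (1, 1): Fraction(1, 6), (1, 2): Fraction(1, 4),
}

def joint_moment(pmf, m, n):
    """Return E[X^m Y^n] as the double sum of x^m y^n P(x, y)."""
    return sum((x ** m) * (y ** n) * p for (x, y), p in pmf.items())

print("E[X]  =", joint_moment(pmf, 1, 0))
print("E[Y]  =", joint_moment(pmf, 0, 1))
print("E[XY] =", joint_moment(pmf, 1, 1))
```

Setting m = n = 0 returns the total probability, which is a quick sanity check on any table of P(x, y).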


Covariance Function

The covariance function is a number that measures the common variation of X and Y. It is defined as

$$\mathrm{cov}(X, Y) = E[(X - E[X])(Y - E[Y])] = E[XY] - E[X]E[Y]$$

The covariance is determined by the difference between $E[XY]$ and $E[X]E[Y]$. If X and Y were statistically independent then $E[XY]$ would equal $E[X]E[Y]$ and the covariance would be zero.

The covariance of a random variable with itself is equal to its variance.

$$\mathrm{cov}(X, X) = E[(X - E[X])^2] = \mathrm{var}(X)$$
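The two forms of the covariance are connected by linearity of expectation. As a short worked step, writing $\mu_X = E[X]$ and $\mu_Y = E[Y]$ (shorthand introduced here only for brevity):

$$
\begin{aligned}
E[(X - \mu_X)(Y - \mu_Y)] &= E[XY] - \mu_X E[Y] - \mu_Y E[X] + \mu_X \mu_Y \\
&= E[XY] - \mu_X \mu_Y - \mu_X \mu_Y + \mu_X \mu_Y \\
&= E[XY] - E[X]E[Y]
\end{aligned}
$$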


Correlation Coefficient

The covariance can be normalized to produce what is known as the correlation coefficient, $\rho$.

$$\rho = \frac{\mathrm{cov}(X, Y)}{\sqrt{\mathrm{var}(X)\,\mathrm{var}(Y)}}$$

The correlation coefficient is bounded by $-1 \le \rho \le 1$. It has the value $\rho = 0$ when the covariance is zero and the value $\rho = \pm 1$ when X and Y are perfectly correlated or anti-correlated.
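The three special values of the correlation coefficient can be seen on a few hand-made samples. This is a minimal sketch in Python, with expectations replaced by sample averages; the function names and the data are hypothetical and serve only as an illustration.

```python
import math

def mean(v):
    return sum(v) / len(v)

def covariance(x, y):
    """Sample estimate of cov(X, Y) = E[(X - E[X])(Y - E[Y])], dividing by n."""
    mx, my = mean(x), mean(y)
    return sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / len(x)

def correlation(x, y):
    """Covariance normalized by the square root of the product of the variances."""
    return covariance(x, y) / math.sqrt(covariance(x, x) * covariance(y, y))

x = [1.0, 2.0, 3.0, 4.0, 5.0]
up = [2 * xi - 1 for xi in x]        # exact increasing linear function of x
down = [-3 * xi + 4 for xi in x]     # exact decreasing linear function of x
flat = [1.0, -1.0, 0.0, -1.0, 1.0]   # chosen so the sample covariance with x is zero

print(correlation(x, up), correlation(x, down), correlation(x, flat))
```

The slope of the linear relation does not affect the result; only its sign does, because the normalization removes the scale of each variable.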


Autocorrelation Function

The autocorrelation function is very similar to the covariance function. It is defined as

$$R(X, Y) = E[XY] = \mathrm{cov}(X, Y) + E[X]E[Y]$$

Unlike the covariance, it retains the mean values in the calculation. The random variables are orthogonal if $R(X, Y) = 0$.


Normal Distribution

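$$f_{XY}(x, y) = \frac{1}{2\pi\sigma_x\sigma_y\sqrt{1-\rho^2}} \exp\left\{-\frac{\left(\frac{x-\mu_x}{\sigma_x}\right)^2 - 2\rho\left(\frac{x-\mu_x}{\sigma_x}\right)\left(\frac{y-\mu_y}{\sigma_y}\right) + \left(\frac{y-\mu_y}{\sigma_y}\right)^2}{2(1-\rho^2)}\right\}$$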


Normal Distribution

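The following statements hold when the two standard deviations are equal, $\sigma_x = \sigma_y$. The elliptical contours are oriented along the direction of the line $y = x$ if $\rho > 0$ and along the direction of the line $y = -x$ if $\rho < 0$. The contours are circles, and the variables are uncorrelated, if $\rho = 0$. The center of the ellipses is $(\mu_x, \mu_y)$.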
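The behavior of the contours can be checked numerically by evaluating the bivariate normal density at a few points. This is a minimal sketch in Python; the function names and the test points are hypothetical, and the density is the standard bivariate normal form with means `mux`, `muy`, standard deviations `sx`, `sy` and correlation coefficient `rho`.

```python
import math

def bivariate_normal_pdf(x, y, mux, muy, sx, sy, rho):
    """Bivariate normal density; requires -1 < rho < 1."""
    zx = (x - mux) / sx
    zy = (y - muy) / sy
    q = (zx * zx - 2.0 * rho * zx * zy + zy * zy) / (2.0 * (1.0 - rho * rho))
    norm = 2.0 * math.pi * sx * sy * math.sqrt(1.0 - rho * rho)
    return math.exp(-q) / norm

def normal_pdf(x, mu, s):
    """Univariate normal density, used to check the rho = 0 case."""
    return math.exp(-0.5 * ((x - mu) / s) ** 2) / (s * math.sqrt(2.0 * math.pi))

# With rho > 0 and equal standard deviations, the density is larger along
# y = x than along y = -x at the same distance from the center.
along = bivariate_normal_pdf(1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.8)
across = bivariate_normal_pdf(1.0, -1.0, 0.0, 0.0, 1.0, 1.0, 0.8)
print(along > across)
```

With `rho = 0` the joint density reduces to the product of the two univariate normal densities, which is why uncorrelated jointly normal variables are also independent.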


Linear Estimation

The task is to construct a rule for the prediction $\hat{Y}$ of Y based on an observation of X.

If the random variables are correlated then this should yield a better result, on the average, than just guessing. We are encouraged to select a linear rule when we note that the sample points tend to fall about a sloping line.

$$\hat{Y} = aX + b$$

where a and b are parameters to be chosen to provide the best results. We would expect a to correspond to the slope and b to the intercept.


Minimize Prediction Error

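To find a means of calculating the coefficients from a set of sample points, construct the prediction error

$$\varepsilon = E[(Y - \hat{Y})^2]$$

We want to choose a and b to minimize $\varepsilon$. Therefore, compute the appropriate derivatives and set them to zero.

$$\frac{\partial \varepsilon}{\partial a} = -2E\left[(Y - \hat{Y})\frac{\partial \hat{Y}}{\partial a}\right] = 0$$

$$\frac{\partial \varepsilon}{\partial b} = -2E\left[(Y - \hat{Y})\frac{\partial \hat{Y}}{\partial b}\right] = 0$$

These can be solved for a and b in terms of the expected values. The expected values can themselves be estimated from the sample set.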


Prediction Error Equations

The above conditions on a and b are equivalent to

$$E[(Y - \hat{Y})X] = 0$$

$$E[Y - \hat{Y}] = 0$$

The prediction error $Y - \hat{Y}$ must be orthogonal to X and the expected prediction error must be zero.

Substituting $\hat{Y} = aX + b$ leads to a pair of equations to be solved for a and b.

$$E[(Y - aX - b)X] = E[XY] - aE[X^2] - bE[X] = 0$$

$$E[Y - aX - b] = E[Y] - aE[X] - b = 0$$

$$\begin{bmatrix} E[X^2] & E[X] \\ E[X] & 1 \end{bmatrix} \begin{bmatrix} a \\ b \end{bmatrix} = \begin{bmatrix} E[XY] \\ E[Y] \end{bmatrix}$$
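The 2 by 2 system for a and b can be solved directly once the expected values are replaced by sample averages. This is a minimal sketch in Python using Cramer's rule; the function name `solve_normal_equations` and the sample data are hypothetical.

```python
def solve_normal_equations(x, y):
    """Solve [[E[X^2], E[X]], [E[X], 1]] [a, b]^T = [E[XY], E[Y]]^T,
    with each expectation estimated by a sample average."""
    n = len(x)
    ex = sum(x) / n
    ey = sum(y) / n
    exx = sum(xi * xi for xi in x) / n
    exy = sum(xi * yi for xi, yi in zip(x, y)) / n
    det = exx - ex * ex              # determinant of the 2 by 2 matrix
    if det == 0.0:
        raise ValueError("X has zero sample variance; a and b are not unique")
    a = (exy - ex * ey) / det        # Cramer's rule, first unknown
    b = (exx * ey - ex * exy) / det  # Cramer's rule, second unknown
    return a, b

# Samples that lie exactly on the line y = 2x - 1.
x = [0.0, 1.0, 2.0, 3.0]
y = [2 * xi - 1 for xi in x]
a, b = solve_normal_equations(x, y)
print(a, b)
```

The determinant is the sample variance of X and the numerator of a is the sample covariance, so the solution for a is the ratio of covariance to variance.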


Prediction Error

$$a = \frac{\mathrm{cov}(X, Y)}{\mathrm{var}(X)}$$

$$b = E[Y] - \frac{\mathrm{cov}(X, Y)}{\mathrm{var}(X)}E[X]$$

The prediction error with these parameter values is

$$\varepsilon = (1 - \rho^2)\,\mathrm{var}(Y)$$

When the correlation coefficient $\rho = \pm 1$ the error is zero, meaning that perfect prediction can be made.

When $\rho = 0$ the prediction error is as large as the variance of Y, and the predictor is of no help at all.

For intermediate values of $\rho$, whether positive or negative, the predictor reduces the error.
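The expression for the minimum error can be verified in a few lines. As a short derivation, write $\mu_X = E[X]$ and $\mu_Y = E[Y]$ (shorthand introduced here) and use $b = \mu_Y - a\mu_X$, so that $Y - \hat{Y} = (Y - \mu_Y) - a(X - \mu_X)$:

$$
\begin{aligned}
E[(Y - \hat{Y})^2] &= \mathrm{var}(Y) - 2a\,\mathrm{cov}(X, Y) + a^2\,\mathrm{var}(X) \\
&= \mathrm{var}(Y) - \frac{\mathrm{cov}(X, Y)^2}{\mathrm{var}(X)} \qquad \text{with } a = \frac{\mathrm{cov}(X, Y)}{\mathrm{var}(X)} \\
&= \left(1 - \frac{\mathrm{cov}(X, Y)^2}{\mathrm{var}(X)\,\mathrm{var}(Y)}\right)\mathrm{var}(Y) \\
&= (1 - \rho^2)\,\mathrm{var}(Y)
\end{aligned}
$$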


Linear Predictor Program

The program lp.pro computes the coefficients [a, b] as well as the covariance matrix C and the correlation coefficient, $\rho$. The covariance matrix is

$$C = \begin{bmatrix} \mathrm{var}[X] & \mathrm{cov}[X, Y] \\ \mathrm{cov}[X, Y] & \mathrm{var}[Y] \end{bmatrix}$$

Usage example:

    N=100
    X=Randomn(seed,N)
    Z=Randomn(seed,N)
    Y=2*X-1+0.2*Z
    p=lp(X,Y,c,rho)
    print,'Predictor Coefficients=',p
    print,'Covariance matrix'
    print,c
    print,'Correlation Coefficient=',rho


Program lp

    function lp,X,Y,c,rho,mux,muy
    ;Compute the linear predictor coefficients such that
    ;Yhat=aX+b is the minimum mse estimate of Y based on X.

    ;Shorten the X and Y vectors to the length of the shorter.
    n=n_elements(X) < n_elements(Y)
    X=(X[0:n-1])[*]
    Y=(Y[0:n-1])[*]

    ;Compute the mean value of each.
    mux=total(X)/n
    muy=total(Y)/n

    ;Compute the covariance matrix.
    V=[[X-mux],[Y-muy]]
    C=V##transpose(V)/(n-1)

    ;Compute the predictor coefficient and constant.
    a=c[0,1]/c[0,0]
    b=muy-a*mux

    ;Compute the correlation coefficient
    rho=c[0,1]/sqrt(c[0,0]*c[1,1])

    Return,[a,b]
    END
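For readers without access to IDL, the same computation can be sketched in Python. This is a hypothetical port, not the program from the lecture: it follows the same steps (truncate to the shorter vector, compute the means, form the covariance matrix with the n-1 divisor, then a, b and rho) but returns all three results instead of using output arguments, and its random numbers will differ from those of Randomn.

```python
import math
import random

def lp(x, y):
    """Return ([a, b], C, rho) for the minimum mean-square-error
    linear predictor yhat = a*x + b of y from x."""
    n = min(len(x), len(y))          # shorten to the length of the shorter
    x, y = x[:n], y[:n]
    mux = sum(x) / n
    muy = sum(y) / n
    # Sample covariance matrix with the (n - 1) divisor.
    sxx = sum((xi - mux) ** 2 for xi in x) / (n - 1)
    syy = sum((yi - muy) ** 2 for yi in y) / (n - 1)
    sxy = sum((xi - mux) * (yi - muy) for xi, yi in zip(x, y)) / (n - 1)
    c = [[sxx, sxy], [sxy, syy]]
    a = sxy / sxx                    # predictor coefficient
    b = muy - a * mux                # predictor constant
    rho = sxy / math.sqrt(sxx * syy)
    return [a, b], c, rho

rng = random.Random(1)               # fixed seed so the run is repeatable
x = [rng.gauss(0.0, 1.0) for _ in range(100)]
z = [rng.gauss(0.0, 1.0) for _ in range(100)]
y = [2 * xi - 1 + 0.2 * zi for xi, zi in zip(x, z)]
p, c, rho = lp(x, y)
print("Predictor Coefficients =", p)
print("Covariance matrix =", c)
print("Correlation Coefficient =", rho)
```

The recovered coefficients should be close to the slope 2 and intercept -1 used to generate the data, with the exact values depending on the seed.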


Example

    Predictor Coefficients= 1.99598 -1.09500
    Correlation Coefficient=0.812836
    Covariance Matrix
    0.950762 1.89770
    1.89770 5.73295


IDL Regress Function

IDL provides a number of routines for the analysis of data. The function REGRESS does multiple linear regression.

Compute the predictor coefficient a and constant b by

    a=regress(X,Y,Const=b)
    print,[a,b]
    1.99598 -1.09500


New Concept

Introduction to Random Processes

Today we will just introduce the basic ideas.


Random Processes


Random Process

A random variable is a function X(e) that maps the set of experiment outcomes to the set of numbers.

A random process is a rule that maps every outcome e of an experiment to a function X(t, e).

A random process is usually conceived of as a function of time, but there is no reason not to consider random processes that are functions of other independent variables, such as spatial coordinates.

The function X(u, v, e) would be a function whose value depended on the location (u, v) and the outcome e, and could be used in representing random variations in an image.


Random Process

The domain of e is the set of outcomes of the experiment. We assume that a probability distribution is known for this set.

The domain of t is a set, T, of real numbers.

If T is the real axis then X(t, e) is a continuous-time random process.

If T is the set of integers then X(t, e) is a discrete-time random process.

We will often suppress the display of the variable e and write X(t) for a continuous-time RP and X[n] or $X_n$ for a discrete-time RP.


Random Process

A RP is a family of functions, X(t, e). Imagine a giant strip chart recording in which each pen is identified with a different e. This family of functions is traditionally called an ensemble.

A single function $X(t, e_k)$ is selected by the outcome $e_k$. This is just a time function that we could call $X_k(t)$. Different outcomes give us different time functions.

If t is fixed, say $t = t_1$, then $X(t_1, e)$ is a random variable. Its value depends on the outcome e.

If both t and e are given then X(t, e) is just a number.
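The three views of X(t, e) can be made concrete with a toy ensemble. This is a minimal sketch in Python; the three outcomes and the cosine form of the process are hypothetical choices made only for illustration.

```python
import math

# Hypothetical experiment with three outcomes; each outcome selects
# an (amplitude, phase) pair for a cosine time function.
OUTCOMES = {
    "e1": (1.0, 0.0),
    "e2": (0.5, math.pi / 2),
    "e3": (2.0, math.pi),
}

def X(t, e):
    """Both t and e given: X(t, e) is just a number."""
    amplitude, phase = OUTCOMES[e]
    return amplitude * math.cos(t + phase)

def sample_function(e):
    """Outcome e fixed: the result is an ordinary time function X_k(t)."""
    return lambda t: X(t, e)

def random_variable_at(t1):
    """Time t1 fixed: the result assigns a number to every outcome e."""
    return {e: X(t1, e) for e in OUTCOMES}

x1 = sample_function("e1")
print([round(x1(t), 3) for t in (0.0, 1.0, 2.0)])
print(random_variable_at(0.0))
```

The dictionary returned by `random_variable_at` plays the role of the random variable $X(t_1, e)$: its value is not known until the outcome e is.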


Moments and Averages

$X(t_1, e)$ is a random variable that represents the set of samples across the ensemble at time $t_1$.

If it has a probability density function $f_X(x; t_1)$ then the moments are

$$m_n(t_1) = E[X^n(t_1)] = \int_{-\infty}^{\infty} x^n f_X(x; t_1)\,dx$$

The notation $f_X(x; t_1)$ may be necessary because the probability density may depend upon the time the samples are taken.

The mean value is $\mu_X = m_1$, which may be a function of time.

The central moments are

$$E[(X(t_1) - \mu_X(t_1))^n] = \int_{-\infty}^{\infty} (x - \mu_X(t_1))^n f_X(x; t_1)\,dx$$
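Moments taken across the ensemble can be estimated by averaging over many outcomes at a fixed time. This is a minimal sketch in Python for a hypothetical process X(t, e) = A(e) t, where the amplitude A is uniform on (0, 2); for that assumed process the mean is $m_1(t) = t$ and the variance is $t^2/3$, so both depend on time.

```python
import random

def draw_ensemble(num_outcomes, seed=0):
    """Draw one amplitude A(e) per outcome e, uniform on (0, 2)."""
    rng = random.Random(seed)
    return [rng.uniform(0.0, 2.0) for _ in range(num_outcomes)]

def X(t, a):
    """Hypothetical process: a ramp whose slope is the random amplitude."""
    return a * t

def moment(amplitudes, t1, n):
    """Estimate m_n(t1) = E[X^n(t1)] by averaging across the ensemble."""
    return sum(X(t1, a) ** n for a in amplitudes) / len(amplitudes)

def central_moment(amplitudes, t1, n):
    """Estimate E[(X(t1) - mu_X(t1))^n] across the ensemble."""
    mu = moment(amplitudes, t1, 1)
    return sum((X(t1, a) - mu) ** n for a in amplitudes) / len(amplitudes)

amps = draw_ensemble(20000)
for t1 in (1.0, 2.0):
    print(t1, moment(amps, t1, 1), central_moment(amps, t1, 2))
```

The averages are taken over outcomes at one instant, not over time along a single sample function.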


Pairs of Samples

The numbers $X(t_1, e)$ and $X(t_2, e)$ are samples from the same time function at different times.

They are a pair of random variables $(X_1, X_2)$.

They have a joint probability density function $f(x_1, x_2; t_1, t_2)$.

From the joint density function one can compute the marginal densities, conditional probabilities and other quantities that may be of interest.
