
THE CHINESE UNIVERSITY OF HONG KONG

Department of Mathematics

MATH3270

Ordinary Differential Equations

Contents

1 Ordinary differential equations of first-order
1.1 Introduction
1.2 First-order linear ODE
1.3 Separable equations
1.4 Exact equations
1.5 Homogeneous equations
1.6 Bernoulli's equations
1.7 Substitution
2 Second Order Linear Equations
2.1 Solution space and Wronskian
2.2 Reduction of order
2.3 Homogeneous equations with constant coefficients
2.4 Method of Undetermined Coefficients
2.5 Variation of Parameters
2.6 Mechanical and electrical vibrations
3 Higher order Linear Equations
3.1 Solution space and Wronskian
3.2 Homogeneous equations with constant coefficients
3.3 Method of undetermined coefficients
3.4 Method of variation of parameters
4 Systems of First Order Linear Equations
4.1 Basic properties of systems of first order linear equations
4.2 Homogeneous linear systems with constant coefficients
4.3 Matrix exponential
4.4 Fundamental matrices
4.5 Repeated eigenvalues
4.6 Jordan normal forms
4.7 Nonhomogeneous linear systems
5 Nonlinear Differential Equations and Stability
5.1 Phase plane of linear systems
5.2 Autonomous systems and stability
5.3 Almost linear systems
5.4 Competing species
5.5 Predator-Prey equations
5.6 Liapunov's second method
5.7 Periodic solutions and limit cycles
5.8 Chaos and strange attractors: The Lorenz equations
6 Answers to Exercises

1 Ordinary differential equations of first-order

1.1 Introduction

An equation of the form
$$F(t, y, y', \dots, y^{(n)}) = 0, \quad t \in (a, b),$$
where $y = y(t)$, $y' = \frac{dy}{dt}$, $\dots$, $y^{(n)} = \frac{d^n y}{dt^n}$, is called an Ordinary Differential Equation (ODE) of the function $y$.

Examples:

1. $y' - ty = 0$,
2. $y'' - 3y' + 2 = 0$,
3. $y\sin\left(\frac{dy}{dt}\right) + \frac{d^2y}{dt^2} = 0$.

The order of the ODE is defined to be the order of the highest derivative in the equation. In solving ODEs, we are interested in the following problems:

Initial Value Problem (IVP): to find solutions $y(t)$ which satisfy given initial conditions, e.g. $y(a) = 0$, $y'(a) = 1$.

Boundary Value Problem (BVP): to find solutions $y(t)$ which satisfy given boundary conditions, e.g. $y(a) = y(b) = 0$.

An ODE is linear if it can be written in the form
$$a_0(t)y^{(n)} + a_1(t)y^{(n-1)} + \cdots + a_{n-1}(t)y' + a_n(t)y = g(t), \quad a_0(t) \neq 0.$$
The linear ODE is called homogeneous if $g(t) \equiv 0$, and inhomogeneous otherwise. Also, it is called a linear ODE with constant coefficients when $a_0 \neq 0, a_1, \dots, a_n$ are constants. If an ODE is not of the above form, we call it a non-linear ODE.

1.2 First-order linear ODE

The general form of a first-order linear ODE is
$$y' + p(x)y = g(x). \tag{1.2.1}$$

The basic principle for solving (1.2.1) is to make the left hand side the derivative of an expression by multiplying both sides by a suitable factor called an integrating factor. To find an integrating factor, multiply both sides of (1.2.1) by $e^{f(x)}$, where $f(x)$ is to be determined; we have
$$e^{f(x)}y' + e^{f(x)}p(x)y = g(x)e^{f(x)}.$$
Now, if we choose $f(x)$ so that $f'(x) = p(x)$, then the left hand side becomes
$$e^{f(x)}y' + e^{f(x)}f'(x)y = \frac{d}{dx}\left(e^{f(x)}y\right).$$

Thus we may take
$$f(x) = \int p(x)\,dx$$
and (1.2.1) can be solved easily as follows.
$$\begin{aligned}
y' + p(x)y &= g(x)\\
e^{\int p(x)dx}\frac{dy}{dx} + e^{\int p(x)dx}p(x)y &= g(x)e^{\int p(x)dx}\\
\frac{d}{dx}\left(e^{\int p(x)dx}y\right) &= g(x)e^{\int p(x)dx}\\
e^{\int p(x)dx}y &= \int\left(g(x)e^{\int p(x)dx}\right)dx\\
y &= e^{-\int p(x)dx}\left[\int\left(g(x)e^{\int p(x)dx}\right)dx\right]
\end{aligned}$$

Note: The function $e^{f(x)} = e^{\int p(x)dx}$ is called an integrating factor. The integrating factor is not unique: one may choose an arbitrary integration constant for $\int p(x)\,dx$, so any primitive function of $p(x)$ gives an integrating factor.
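When $\int p(x)\,dx$ or $\int g(x)e^{\int p(x)dx}dx$ has no convenient closed form, the formula above can still be evaluated numerically. The following Python sketch is one minimal way to do this, assuming $p$ and $g$ are continuous on the interval and that an initial condition $y(x_0) = y_0$ fixes the integration constant. The helper names `trapezoid` and `solve_linear` are hypothetical, and composite trapezoidal quadrature is chosen only for simplicity.

```python
import math


def trapezoid(f, a, b, n=400):
    """Composite trapezoidal rule for the integral of f over [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for k in range(1, n):
        total += f(a + k * h)
    return total * h


def solve_linear(p, g, x0, y0, x, n=400):
    """Approximate y(x) for y' + p(x) y = g(x), y(x0) = y0.

    F(s) is the primitive of p with F(x0) = 0, so exp(F) is an integrating factor and
    y(x) = exp(-F(x)) * (y0 + integral from x0 to x of g(s) exp(F(s)) ds).
    """
    def F(s):
        return trapezoid(p, x0, s, n)

    integral = trapezoid(lambda s: g(s) * math.exp(F(s)), x0, x, n)
    return math.exp(-F(x)) * (y0 + integral)


# Example 1.2.1 with y(0) = 1, whose exact solution is y = exp(-x^2).
print(solve_linear(lambda x: 2 * x, lambda x: 0.0, 0.0, 1.0, 1.5), math.exp(-2.25))
```

Choosing a different primitive of $p$ (the non-uniqueness noted above) only multiplies the integrating factor by a constant, which cancels in $y$; fixing $F(x_0) = 0$ is just a convenient normalization.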

Example 1.2.1. Find the general solution of $y' + 2xy = 0$.

Solution: Multiplying both sides by $e^{x^2}$, we have
$$\begin{aligned}
e^{x^2}\frac{dy}{dx} + 2xe^{x^2}y &= 0\\
\frac{d}{dx}\left(e^{x^2}y\right) &= 0\\
e^{x^2}y &= C\\
y &= Ce^{-x^2}
\end{aligned}$$

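Example 1.2.2. Solve $(x^2 - 1)y' + xy = 2x$, $x > 1$.

Solution: Dividing both sides by $x^2 - 1$, the equation becomes
$$\frac{dy}{dx} + \frac{x}{x^2 - 1}y = \frac{2x}{x^2 - 1}.$$
Now
$$\int \frac{x}{x^2 - 1}\,dx = \frac{1}{2}\log(x^2 - 1) + C.$$
Thus we multiply both sides of the equation by
$$\exp\left(\frac{1}{2}\log(x^2 - 1)\right) = (x^2 - 1)^{\frac12}$$
and get
$$\begin{aligned}
(x^2 - 1)^{\frac12}\frac{dy}{dx} + \frac{x}{(x^2 - 1)^{\frac12}}y &= \frac{2x}{(x^2 - 1)^{\frac12}}\\
\frac{d}{dx}\left((x^2 - 1)^{\frac12}y\right) &= \frac{2x}{(x^2 - 1)^{\frac12}}\\
(x^2 - 1)^{\frac12}y &= \int \frac{2x}{(x^2 - 1)^{\frac12}}\,dx\\
y &= (x^2 - 1)^{-\frac12}\left(2(x^2 - 1)^{\frac12} + C\right)\\
y &= 2 + C(x^2 - 1)^{-\frac12}
\end{aligned}$$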

Example 1.2.3. Solve $y' - y\tan x = 4\sin x$, $x \in (-\pi/2, \pi/2)$.

Solution: An integrating factor is
$$\exp\left(-\int \tan x\,dx\right) = \exp(\log(\cos x)) = \cos x.$$
Multiplying both sides by $\cos x$, we have
$$\begin{aligned}
\cos x\frac{dy}{dx} - y\sin x &= 4\sin x\cos x\\
\frac{d}{dx}(y\cos x) &= 2\sin 2x\\
y\cos x &= \int 2\sin 2x\,dx\\
y\cos x &= -\cos 2x + C\\
y &= \frac{C - \cos 2x}{\cos x}
\end{aligned}$$

Example 1.2.4. A tank contains 1 L of a solution consisting of 100 g of salt dissolved in water. A salt solution of concentration 20 g L$^{-1}$ is pumped into the tank at the rate of 0.02 L s$^{-1}$, and the mixture, kept uniform by stirring, is pumped out at the same rate. How long will it be until only 60 g of salt remains in the tank?

Solution: Suppose there is $x$ g of salt in the solution at time $t$ s. Then $x$ satisfies the differential equation
$$\frac{dx}{dt} = 0.02(20 - x).$$
Rewriting the equation as $\frac{dx}{dt} + 0.02x = 0.4$ and multiplying by $e^{0.02t}$, we have
$$\begin{aligned}
e^{0.02t}\frac{dx}{dt} + 0.02e^{0.02t}x &= 0.4e^{0.02t}\\
\frac{d}{dt}\left(e^{0.02t}x\right) &= 0.4e^{0.02t}\\
e^{0.02t}x &= \int 0.4e^{0.02t}\,dt = 20e^{0.02t} + C\\
x &= 20 + Ce^{-0.02t}
\end{aligned}$$
Since $x(0) = 100$, $C = 80$. Thus the solution is $x = 20 + 80e^{-0.02t}$. Setting $x = 60$,
$$\begin{aligned}
60 &= 20 + 80e^{-0.02t}\\
e^{0.02t} &= 2\\
t &= 50\log 2 \approx 34.7,
\end{aligned}$$
so only 60 g of salt remains after about 34.7 s.
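As a sanity check on Example 1.2.4, one can integrate $dx/dt = 0.02(20 - x)$ numerically from $x(0) = 100$ up to $t = 50\log 2$ and confirm that about 60 g remains. This sketch assumes the classical fourth-order Runge-Kutta method with a fixed step, a standard numerical method that is not part of these notes; the function names are illustrative.

```python
import math


def rk4(f, t0, x0, t_end, steps=1000):
    """Classical fourth-order Runge-Kutta for the scalar ODE x' = f(t, x)."""
    h = (t_end - t0) / steps
    t, x = t0, x0
    for _ in range(steps):
        k1 = f(t, x)
        k2 = f(t + h / 2, x + h * k1 / 2)
        k3 = f(t + h / 2, x + h * k2 / 2)
        k4 = f(t + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return x


def salt_rate(t, x):
    """dx/dt = 0.02 L/s * 20 g/L (in) - 0.02 L/s * x g / 1 L (out)."""
    return 0.02 * (20 - x)


t_star = 50 * math.log(2)                 # time found in Example 1.2.4 (seconds)
x_numeric = rk4(salt_rate, 0.0, 100.0, t_star)
x_closed = 20 + 80 * math.exp(-0.02 * t_star)
print(t_star, x_numeric, x_closed)        # both salt amounts should be close to 60
```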

Example 1.2.5. David would like to buy an apartment. He has examined his budget and determined that he can afford monthly payments of \$20,000. If the annual interest rate is 6%, and the term of the loan is 20 years, what amount can he afford to borrow?

Solution: Let $y$ (in dollars) be the remaining loan amount after $t$ months. Then
$$\begin{aligned}
\frac{dy}{dt} &= \frac{0.06}{12}y - 20{,}000\\
\frac{dy}{dt} - 0.005y &= -20{,}000\\
e^{-0.005t}\frac{dy}{dt} - 0.005ye^{-0.005t} &= -20{,}000e^{-0.005t}\\
\frac{d}{dt}\left(e^{-0.005t}y\right) &= -20{,}000e^{-0.005t}\\
e^{-0.005t}y &= \frac{20{,}000e^{-0.005t}}{0.005} + C\\
y &= 4{,}000{,}000 + Ce^{0.005t}
\end{aligned}$$
Since the term of the loan is 20 years, $y(240) = 0$ and thus
$$\begin{aligned}
4{,}000{,}000 + Ce^{0.005 \times 240} &= 0\\
C &= -\frac{4{,}000{,}000}{e^{1.2}} = -1{,}204{,}776.85
\end{aligned}$$
Therefore the amount that David can afford to borrow is
$$y(0) = 4{,}000{,}000 - 1{,}204{,}776.85e^{0.005(0)} = 2{,}795{,}223.15.$$
Note: The total amount that David pays is $240 \times \$20{,}000 = \$4{,}800{,}000$.
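The arithmetic of Example 1.2.5 can be reproduced in a few lines. This sketch simply evaluates the closed-form solution $y(t) = 20{,}000/0.005 + Ce^{0.005t}$ under the same continuous model used above (it is not a month-by-month amortization schedule); the variable names are illustrative.

```python
import math

r = 0.06 / 12        # monthly rate in the continuous model dy/dt = r y - payment
payment = 20_000     # monthly payment
months = 20 * 12     # term of the loan

steady = payment / r                   # the constant 4,000,000 in y = 4,000,000 + C e^{rt}
C = -steady * math.exp(-r * months)    # from y(240) = 0
borrow = steady + C                    # y(0), the amount that can be borrowed
total_paid = payment * months

print(f"C = {C:,.2f}, borrow = {borrow:,.2f}, total paid = {total_paid:,}")
```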

1.3 Separable equations

A separable equation is an equation of the form
$$\frac{dy}{dx} = f(x)g(y).$$
It can be solved as follows.
$$\begin{aligned}
\frac{dy}{g(y)} &= f(x)\,dx\\
\int \frac{dy}{g(y)} &= \int f(x)\,dx
\end{aligned}$$

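Example 1.3.1. Find the general solution of $y' = 3x^2y$.

Solution:
$$\begin{aligned}
\frac{dy}{y} &= 3x^2\,dx\\
\int \frac{dy}{y} &= \int 3x^2\,dx\\
\log|y| &= x^3 + C'\\
y &= Ce^{x^3}
\end{aligned}$$
where $C = \pm e^{C'}$. (The solution $y = 0$, lost when dividing by $y$, corresponds to $C = 0$.)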

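Example 1.3.2. Solve $2\sqrt{x}\,\frac{dy}{dx} = y^2 + 1$, $x > 0$.

Solution:
$$\begin{aligned}
\frac{dy}{y^2 + 1} &= \frac{dx}{2\sqrt{x}}\\
\int \frac{dy}{y^2 + 1} &= \int \frac{dx}{2\sqrt{x}}\\
\tan^{-1}y &= \sqrt{x} + C\\
y &= \tan(\sqrt{x} + C)
\end{aligned}$$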

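Example 1.3.3. Solve the initial value problem
$$\frac{dy}{dx} = \frac{x}{y + x^2y}, \quad y(0) = 1.$$
Solution:
$$\begin{aligned}
\frac{dy}{dx} &= \frac{x}{y(1 + x^2)}\\
y\,dy &= \frac{x}{1 + x^2}\,dx\\
\int y\,dy &= \int \frac{x}{1 + x^2}\,dx\\
\frac{y^2}{2} &= \frac{1}{2}\int \frac{1}{1 + x^2}\,d(1 + x^2)\\
y^2 &= \log(1 + x^2) + C
\end{aligned}$$
Since $y(0) = 1$, $C = 1$. Thus
$$y^2 = 1 + \log(1 + x^2),$$
and we take the positive square root because $y(0) > 0$:
$$y = \sqrt{1 + \log(1 + x^2)}.$$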

Example 1.3.4. (Logistic equation) Solve the initial value problem for the logistic equation
$$\frac{dy}{dt} = ry(1 - y/K), \quad y(0) = y_0,$$
where $r$ and $K$ are constants.

Solution:
$$\begin{aligned}
\frac{dy}{y(1 - y/K)} &= r\,dt\\
\int \frac{dy}{y(1 - y/K)} &= \int r\,dt\\
\int \left(\frac{1}{y} + \frac{1/K}{1 - y/K}\right)dy &= rt\\
\log y - \log(1 - y/K) &= rt + C\\
\frac{y}{1 - y/K} &= e^{rt + C}\\
y &= \frac{Ke^{rt + C}}{K + e^{rt + C}}
\end{aligned}$$
To satisfy the initial condition, we set
$$e^C = \frac{y_0}{1 - y_0/K}$$
and obtain
$$y = \frac{y_0K}{y_0 + (K - y_0)e^{-rt}}.$$
Note: When $r > 0$ and $y_0 > 0$, we have $\lim_{t\to\infty} y(t) = K$.
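To check the closed-form logistic solution, the sketch below compares it with a direct numerical integration of $dy/dt = ry(1 - y/K)$. The parameter values $r = 0.8$, $K = 50$, $y_0 = 2$ are arbitrary illustrative assumptions, and the fixed-step fourth-order Runge-Kutta integrator is a standard numerical method rather than anything taken from these notes.

```python
import math


def logistic_exact(t, r, K, y0):
    """Closed-form solution y0 K / (y0 + (K - y0) e^{-rt}) derived above."""
    return y0 * K / (y0 + (K - y0) * math.exp(-r * t))


def rk4(f, t0, y0, t_end, steps=2000):
    """Classical fourth-order Runge-Kutta for the scalar ODE y' = f(t, y)."""
    h = (t_end - t0) / steps
    t, y = t0, y0
    for _ in range(steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, y + h * k1 / 2)
        k3 = f(t + h / 2, y + h * k2 / 2)
        k4 = f(t + h, y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return y


r, K, y0 = 0.8, 50.0, 2.0   # illustrative parameter values (assumed)
numeric = rk4(lambda t, y: r * y * (1 - y / K), 0.0, y0, 10.0)
print(numeric, logistic_exact(10.0, r, K, y0))
```

Evaluating `logistic_exact` at a large time also illustrates the limit $y(t) \to K$ for $r > 0$, $y_0 > 0$.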

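1.4 Exact equations

We say that the equation
$$M(x, y)\,dx + N(x, y)\,dy = 0 \tag{1.4.1}$$
is exact if
$$\frac{\partial M}{\partial y} = \frac{\partial N}{\partial x}.$$
In this case (on a simply connected region where $M$ and $N$ have continuous first partial derivatives), there exists a function $f(x, y)$ such that
$$\frac{\partial f}{\partial x} = M \quad\text{and}\quad \frac{\partial f}{\partial y} = N.$$
Then the differential equation can be written as
$$\begin{aligned}
\frac{\partial f}{\partial x}dx + \frac{\partial f}{\partial y}dy &= 0\\
df(x, y) &= 0.
\end{aligned}$$
Therefore the general solution of the differential equation is
$$f(x, y) = C.$$
To find $f(x, y)$, first note that
$$\frac{\partial f}{\partial x} = M.$$
Hence
$$f(x, y) = \int M(x, y)\,dx + g(y).$$
Differentiating both sides with respect to $y$, we have
$$N(x, y) = \frac{\partial}{\partial y}\int M(x, y)\,dx + g'(y)$$
since $\frac{\partial f}{\partial y} = N$. Now
$$N(x, y) - \frac{\partial}{\partial y}\int M(x, y)\,dx$$
is independent of $x$ (why?). Therefore
$$g(y) = \int\left(N(x, y) - \frac{\partial}{\partial y}\int M(x, y)\,dx\right)dy$$
and we obtain
$$f(x, y) = \int M(x, y)\,dx + \int\left(N(x, y) - \frac{\partial}{\partial y}\int M(x, y)\,dx\right)dy.$$
Remark: Equation (1.4.1) is exact when $\mathbf{F} = (M(x, y), N(x, y))$ defines a conservative vector field. Then $f(x, y)$ is just a potential function for $\mathbf{F}$.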

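Example 1.4.1. Solve $(4x + y)\,dx + (x - 2y)\,dy = 0$.

Solution: Since
$$\frac{\partial}{\partial y}(4x + y) = 1 = \frac{\partial}{\partial x}(x - 2y),$$
the equation is exact. We need to find $F(x, y)$ such that
$$\frac{\partial F}{\partial x} = M \quad\text{and}\quad \frac{\partial F}{\partial y} = N.$$
Now
$$F(x, y) = \int (4x + y)\,dx = 2x^2 + xy + g(y).$$
To determine $g(y)$, what we want is
$$\begin{aligned}
\frac{\partial F}{\partial y} &= x - 2y\\
x + g'(y) &= x - 2y\\
g'(y) &= -2y
\end{aligned}$$
Therefore we may choose $g(y) = -y^2$ and the solution is
$$F(x, y) = 2x^2 + xy - y^2 = C.$$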

Example 1.4.2. Solve
$$\frac{dy}{dx} = \frac{e^y + x}{e^{2y} - xe^y}.$$
Solution: Rewrite the equation as
$$(e^y + x)\,dx + (xe^y - e^{2y})\,dy = 0.$$
Since
$$\frac{\partial}{\partial y}(e^y + x) = e^y = \frac{\partial}{\partial x}(xe^y - e^{2y}),$$
the equation is exact. Set
$$F(x, y) = \int (e^y + x)\,dx = xe^y + \frac{1}{2}x^2 + g(y).$$
We want
$$\begin{aligned}
\frac{\partial F}{\partial y} &= xe^y - e^{2y}\\
xe^y + g'(y) &= xe^y - e^{2y}\\
g'(y) &= -e^{2y}
\end{aligned}$$
Therefore we may choose $g(y) = -\frac{1}{2}e^{2y}$ and the solution is
$$xe^y + \frac{1}{2}x^2 - \frac{1}{2}e^{2y} = C.$$

When the equation is not exact, it is sometimes possible to convert it to an exact equation by multiplying it by a suitable integrating factor. Unfortunately, there is no systematic way of finding integrating factors in general.

Example 1.4.3. Show that $\mu(x, y) = x$ is an integrating factor of
$$(3xy + y^2)\,dx + (x^2 + xy)\,dy = 0$$
and then solve the equation.

Solution: Multiplying the equation by $x$ gives
$$(3x^2y + xy^2)\,dx + (x^3 + x^2y)\,dy = 0.$$
Now
$$\begin{aligned}
\frac{\partial}{\partial y}(3x^2y + xy^2) &= 3x^2 + 2xy,\\
\frac{\partial}{\partial x}(x^3 + x^2y) &= 3x^2 + 2xy.
\end{aligned}$$
Thus the above equation is exact and $x$ is an integrating factor. To solve the equation, set
$$F(x, y) = \int (3x^2y + xy^2)\,dx = x^3y + \frac{1}{2}x^2y^2 + g(y).$$
Now we want
$$\begin{aligned}
\frac{\partial F}{\partial y} &= x^3 + x^2y\\
x^3 + x^2y + g'(y) &= x^3 + x^2y\\
g'(y) &= 0\\
g(y) &= C
\end{aligned}$$
Therefore the solution is
$$x^3y + \frac{1}{2}x^2y^2 = C.$$

Note: The equation in Example 1.4.3 is also a homogeneous equation, which will be discussed in Section 1.5.
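The exactness test $\partial M/\partial y = \partial N/\partial x$ can be spot-checked numerically, which is a handy way to confirm a proposed integrating factor such as $\mu(x, y) = x$ in Example 1.4.3. The sketch below uses central differences at a few assumed sample points; the helper names are hypothetical, and agreement at finitely many points is only numerical evidence, not a proof of exactness.

```python
def partial_y(M, x, y, h=1e-5):
    """Central-difference approximation of dM/dy at (x, y)."""
    return (M(x, y + h) - M(x, y - h)) / (2 * h)


def partial_x(N, x, y, h=1e-5):
    """Central-difference approximation of dN/dx at (x, y)."""
    return (N(x + h, y) - N(x - h, y)) / (2 * h)


def looks_exact(M, N, points, tol=1e-6):
    """True if M_y and N_x agree within tol at every sample point (evidence, not proof)."""
    return all(abs(partial_y(M, x, y) - partial_x(N, x, y)) < tol for x, y in points)


# Example 1.4.3 before and after multiplying by the integrating factor mu(x, y) = x.
def M0(x, y):
    return 3 * x * y + y ** 2


def N0(x, y):
    return x ** 2 + x * y


def M1(x, y):
    return x * M0(x, y)


def N1(x, y):
    return x * N0(x, y)


points = [(0.5, 1.0), (1.3, -0.7), (2.0, 2.5)]
print(looks_exact(M0, N0, points), looks_exact(M1, N1, points))
```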

Example 1.4.4. Show that $\mu(x, y) = y$ is an integrating factor of
$$y\,dx + (2x - e^y)\,dy = 0$$
and then solve the equation.

Solution: Multiplying the equation by $y$ gives
$$y^2\,dx + (2xy - ye^y)\,dy = 0.$$
Now
$$\begin{aligned}
\frac{\partial}{\partial y}y^2 &= 2y,\\
\frac{\partial}{\partial x}(2xy - ye^y) &= 2y.
\end{aligned}$$
Thus the above equation is exact and $y$ is an integrating factor. To solve the equation, set
$$F(x, y) = \int y^2\,dx = xy^2 + g(y).$$
Now we want
$$\begin{aligned}
\frac{\partial F}{\partial y} &= 2xy - ye^y\\
2xy + g'(y) &= 2xy - ye^y\\
g'(y) &= -ye^y\\
g(y) &= -\int ye^y\,dy = -\int y\,de^y = -ye^y + \int e^y\,dy = -ye^y + e^y + C
\end{aligned}$$
Therefore the solution is
$$xy^2 - ye^y + e^y = C.$$

1.5 Homogeneous equations

A first order equation is homogeneous if it can be written as
$$\frac{dy}{dx} = f\left(\frac{y}{x}\right).$$
The above equation can be solved by the substitution $u = y/x$. Then $y = xu$ and
$$\frac{dy}{dx} = u + x\frac{du}{dx}.$$
Therefore the equation reads
$$\begin{aligned}
u + x\frac{du}{dx} &= f(u)\\
\frac{du}{f(u) - u} &= \frac{dx}{x}
\end{aligned}$$
which is a separable equation.

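Example 1.5.1. Solve
$$\frac{dy}{dx} = \frac{x^2 + y^2}{2xy}.$$
Solution: Rewrite the equation as
$$\frac{dy}{dx} = \frac{1 + (y/x)^2}{2y/x}$$
which is a homogeneous equation. Using the substitution $y = xu$, we have
$$\begin{aligned}
u + x\frac{du}{dx} &= \frac{dy}{dx} = \frac{1 + u^2}{2u}\\
x\frac{du}{dx} &= \frac{1 + u^2}{2u} - u = \frac{1 - u^2}{2u}\\
\frac{2u\,du}{1 - u^2} &= \frac{dx}{x}\\
\int \frac{2u\,du}{1 - u^2} &= \int \frac{dx}{x}\\
-\log(1 - u^2) &= \log x + C'\\
(1 - u^2)x &= e^{-C'}\\
x^2 - y^2 - Cx &= 0
\end{aligned}$$
where $C = e^{-C'}$.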
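A quick way to catch sign slips in Example 1.5.1 is to substitute a branch of the implicit solution $x^2 - y^2 - Cx = 0$ back into the equation. This sketch assumes the positive branch $y = \sqrt{x^2 - Cx}$ on a region where $x^2 - Cx > 0$ and approximates $y'$ by a central difference; the function names and test values are illustrative.

```python
import math


def rhs(x, y):
    """Right-hand side of Example 1.5.1: dy/dx = (x^2 + y^2) / (2xy)."""
    return (x ** 2 + y ** 2) / (2 * x * y)


def branch(x, C):
    """Positive branch of x^2 - y^2 - Cx = 0; requires x^2 - Cx > 0."""
    return math.sqrt(x ** 2 - C * x)


def residual(x, C, h=1e-6):
    """Central-difference dy/dx minus the right-hand side; near 0 for a solution."""
    dydx = (branch(x + h, C) - branch(x - h, C)) / (2 * h)
    return dydx - rhs(x, branch(x, C))


print([residual(x, 1.0) for x in (2.0, 3.0, 5.0)])
```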

Example 1.5.2. Solve $(y + 2xe^{-y/x})\,dx - x\,dy = 0$.

Solution: Rewrite the equation as
$$\frac{dy}{dx} = \frac{y + 2xe^{-y/x}}{x} = \frac{y}{x} + 2e^{-y/x}.$$
Let $u = y/x$; we have
$$\begin{aligned}
u + x\frac{du}{dx} &= \frac{dy}{dx} = u + 2e^{-u}\\
x\frac{du}{dx} &= 2e^{-u}\\
e^u\,du &= 2\frac{dx}{x}\\
\int e^u\,du &= \int 2\frac{dx}{x}\\
e^u &= 2\log x + C\\
e^{y/x} - 2\log x &= C
\end{aligned}$$

1.6 Bernoulli's equations

An equation of the form
$$y' + p(x)y = q(x)y^n, \quad n \neq 0, 1,$$
is called a Bernoulli differential equation. It is a non-linear equation, and $y(x) = 0$ is always a solution when $n > 0$. To find a non-trivial solution, we use the substitution
$$u = y^{1-n}.$$
Then
$$\begin{aligned}
\frac{du}{dx} &= (1 - n)y^{-n}\frac{dy}{dx} = (1 - n)y^{-n}\left(-p(x)y + q(x)y^n\right)\\
\frac{du}{dx} + (1 - n)p(x)y^{1-n} &= (1 - n)q(x)\\
\frac{du}{dx} + (1 - n)p(x)u &= (1 - n)q(x)
\end{aligned}$$
which is a linear differential equation of $u$.

Note: Don't forget that $y(x) = 0$ is always a solution to Bernoulli's equation when $n > 0$.

Example 1.6.1. Solve $\frac{dy}{dx} - y = e^{-x}y^2$.

Solution: Let $u = y^{1-2} = y^{-1}$. Then
$$\begin{aligned}
\frac{du}{dx} &= -y^{-2}\frac{dy}{dx} = -y^{-2}\left(y + e^{-x}y^2\right)\\
\frac{du}{dx} + y^{-1} &= -e^{-x}\\
\frac{du}{dx} + u &= -e^{-x}
\end{aligned}$$
which is a linear equation of $u$. To solve it, multiply both sides by $e^x$; we have
$$\begin{aligned}
e^x\frac{du}{dx} + e^xu &= -1\\
\frac{d}{dx}(e^xu) &= -1\\
e^xu &= -x + C\\
u &= (C - x)e^{-x}\\
y^{-1} &= (C - x)e^{-x}
\end{aligned}$$
Therefore the general solution is
$$y = \frac{e^x}{C - x} \quad\text{or}\quad y = 0.$$

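Example 1.6.2. Solve $x\frac{dy}{dx} + y = xy^3$.

Solution: Let $u = y^{1-3} = y^{-2}$. Then
$$\begin{aligned}
\frac{du}{dx} &= -2y^{-3}\frac{dy}{dx}\\
\frac{du}{dx} &= -\frac{2y^{-3}}{x}\left(-y + xy^3\right)\\
\frac{du}{dx} - \frac{2y^{-2}}{x} &= -2\\
\frac{du}{dx} - \frac{2u}{x} &= -2
\end{aligned}$$
which is a linear equation of $u$. To solve it, multiply both sides by $\exp\left(-\int 2x^{-1}\,dx\right) = x^{-2}$; we have
$$\begin{aligned}
x^{-2}\frac{du}{dx} - 2x^{-3}u &= -2x^{-2}\\
\frac{d}{dx}\left(x^{-2}u\right) &= -2x^{-2}\\
x^{-2}u &= 2x^{-1} + C\\
u &= 2x + Cx^2\\
y^{-2} &= 2x + Cx^2
\end{aligned}$$
Therefore the general solution is
$$y^2 = \frac{1}{2x + Cx^2} \quad\text{or}\quad y = 0.$$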
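The same residual check applies to Example 1.6.2: on a region where $2x + Cx^2 > 0$, the positive branch $y = (2x + Cx^2)^{-1/2}$ should make $xy' + y - xy^3$ vanish. The sketch below is a numerical illustration under that assumption, using a central-difference derivative; names and sample values are hypothetical.

```python
import math


def y_sol(x, C):
    """Positive branch of y^2 = 1 / (2x + C x^2); requires 2x + C x^2 > 0."""
    return 1 / math.sqrt(2 * x + C * x ** 2)


def residual(x, C, h=1e-6):
    """x y' + y - x y^3 with y' approximated by a central difference."""
    y = y_sol(x, C)
    dydx = (y_sol(x + h, C) - y_sol(x - h, C)) / (2 * h)
    return x * dydx + y - x * y ** 3


print([residual(x, 0.5) for x in (0.5, 1.0, 3.0)])
```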

1.7 Substitution

In this section, we give some examples of differential equations that can be transformed into one of the forms in the previous sections by a suitable substitution.

Example 1.7.1. Use the substitution $u = \log y$ to solve $xy' - 4x^2y + 2y\log y = 0$.

Solution:
$$\begin{aligned}
\frac{du}{dx} &= y'/y\\
x\frac{du}{dx} &= 4x^2 - 2\log y\\
x^2\frac{du}{dx} + 2xu &= 4x^3\\
\frac{d}{dx}\left(x^2u\right) &= 4x^3\\
x^2u &= \int 4x^3\,dx\\
x^2u &= x^4 + C\\
u &= x^2 + \frac{C}{x^2}\\
y &= \exp\left(x^2 + \frac{C}{x^2}\right)
\end{aligned}$$

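Example 1.7.2. Use the substitution $u = e^{2y}$ to solve $2xe^{2y}y' = 3x^4 + e^{2y}$.

Solution:
$$\begin{aligned}
\frac{du}{dx} &= 2e^{2y}\frac{dy}{dx}\\
x\frac{du}{dx} &= 2xe^{2y}\frac{dy}{dx}\\
x\frac{du}{dx} &= 3x^4 + e^{2y} = 3x^4 + u\\
\frac{1}{x}\frac{du}{dx} - \frac{1}{x^2}u &= 3x^2\\
\frac{d}{dx}\left(\frac{u}{x}\right) &= 3x^2\\
\frac{u}{x} &= \int 3x^2\,dx = x^3 + C\\
u &= x^4 + Cx\\
e^{2y} &= x^4 + Cx\\
y &= \frac{1}{2}\log(x^4 + Cx)
\end{aligned}$$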
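For Example 1.7.2, substituting back is a good way to confirm the general solution $y = \frac{1}{2}\log(x^4 + Cx)$. The sketch below (central differences, sample points chosen where $x^4 + Cx > 0$, names illustrative) computes the residual $2xe^{2y}y' - 3x^4 - e^{2y}$, and, for contrast, the same residual for the candidate $e^{2y} = x^3 + C$, which does not satisfy the equation.

```python
import math


def residual(y_of_x, x, h=1e-6):
    """2x e^{2y} y' - 3x^4 - e^{2y}, with y' approximated by a central difference."""
    y = y_of_x(x)
    dydx = (y_of_x(x + h) - y_of_x(x - h)) / (2 * h)
    return 2 * x * math.exp(2 * y) * dydx - 3 * x ** 4 - math.exp(2 * y)


def general(C):
    """y = (1/2) log(x^4 + Cx), valid where x^4 + Cx > 0."""
    return lambda x: 0.5 * math.log(x ** 4 + C * x)


def candidate(C):
    """The candidate e^{2y} = x^3 + C, i.e. y = (1/2) log(x^3 + C), for contrast."""
    return lambda x: 0.5 * math.log(x ** 3 + C)


print(residual(general(1.0), 2.0), residual(candidate(1.0), 2.0))
```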

Example 1.7.3. Use the substitution $y = x + \frac{1}{u}$ to solve $y' - \frac{1}{x}y = 1 - \frac{1}{x^2}y^2$.

Note: An equation of the form
$$y' + p_1(x)y + p_2(x)y^2 = q(x)$$
is called a Riccati equation. If we know that $y(x) = y_1(x)$ is a particular solution, then the general solution can be found by the substitution
$$y = y_1 + \frac{1}{u}.$$

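Solution:
$$\begin{aligned}
\frac{dy}{dx} &= 1 - \frac{1}{u^2}\frac{du}{dx}\\
\frac{1}{x}y + 1 - \frac{1}{x^2}y^2 &= 1 - \frac{1}{u^2}\frac{du}{dx}\\
\frac{1}{u^2}\frac{du}{dx} &= \frac{1}{x^2}\left(x + \frac{1}{u}\right)^2 - \frac{1}{x}\left(x + \frac{1}{u}\right)\\
\frac{1}{u^2}\frac{du}{dx} &= \frac{1}{xu} + \frac{1}{x^2u^2}\\
\frac{du}{dx} - \frac{1}{x}u &= \frac{1}{x^2}
\end{aligned}$$
which is a linear equation of $u$. An integrating factor is
$$\exp\left(-\int \frac{1}{x}\,dx\right) = \exp(-\log x) = x^{-1}.$$
Thus
$$\begin{aligned}
x^{-1}\frac{du}{dx} - x^{-2}u &= x^{-3}\\
\frac{d}{dx}\left(x^{-1}u\right) &= x^{-3}\\
x^{-1}u &= -\frac{1}{2x^2} + C\\
u &= -\frac{1}{2x} + Cx\\
u &= \frac{2Cx^2 - 1}{2x}
\end{aligned}$$
Therefore the general solution is
$$y = x + \frac{2x}{2Cx^2 - 1} \quad\text{or}\quad y = x.$$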

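Example 1.7.4. Solve $y' = 1 + t^2 - 2ty + y^2$ given that $y = t$ is a particular solution.

Solution: This is a Riccati equation and $y = t$ is a solution. Thus we use the substitution
$$y = t + \frac{1}{u}.$$
Then
$$\begin{aligned}
\frac{dy}{dt} &= 1 - \frac{1}{u^2}\frac{du}{dt}\\
1 + t^2 - 2ty + y^2 &= 1 - \frac{1}{u^2}\frac{du}{dt}\\
1 + t^2 - 2t\left(t + \frac{1}{u}\right) + \left(t + \frac{1}{u}\right)^2 &= 1 - \frac{1}{u^2}\frac{du}{dt}\\
1 + \frac{1}{u^2} &= 1 - \frac{1}{u^2}\frac{du}{dt}\\
\frac{du}{dt} &= -1\\
u &= C - t
\end{aligned}$$
Therefore the general solution is
$$y = t + \frac{1}{C - t} \quad\text{or}\quad y = t.$$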
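The Riccati solution of Example 1.7.4 can be checked the same way. This sketch assumes sample points away from the pole $t = C$ of $y = t + 1/(C - t)$ and uses a central-difference derivative; it verifies both the particular solution $y = t$ and a member of the general family. Names and values are illustrative.

```python
def residual(y, t, h=1e-6):
    """y'(t) - (1 + t^2 - 2 t y + y^2), with y' approximated by a central difference."""
    dydt = (y(t + h) - y(t - h)) / (2 * h)
    v = y(t)
    return dydt - (1 + t ** 2 - 2 * t * v + v ** 2)


def general(C):
    """y = t + 1 / (C - t), defined for t != C."""
    return lambda t: t + 1 / (C - t)


print(residual(lambda t: t, 0.7), [residual(general(3.0), t) for t in (0.0, 1.0, 2.5)])
```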

2 Second Order Linear Equations

In this chapter, we consider second order linear ordinary differential equations, i.e., differential equations of the form
$$\frac{d^2y}{dt^2} + p(t)\frac{dy}{dt} + q(t)y = g(t).$$
We let
$$L[y] = y'' + p(t)y' + q(t)y$$
and write the equation in the form
$$L[y] = g(t). \tag{2.1}$$
We say that the equation is homogeneous if $g(t) \equiv 0$, and
$$L[y] = 0$$
is called the homogeneous equation associated to (2.1).

2.1 Solution space and Wronskian

First we state two fundamental results on second order linear ordinary differential equations.

Theorem 2.1.1. The initial value problem
$$\begin{cases} y'' + p(t)y' + q(t)y = g(t), & t \in I,\\ y(t_0) = y_0, \quad y'(t_0) = y_0', \end{cases}$$
where $p$, $q$ and $g$ are continuous on an open interval $I$ that contains the point $t_0$, has a unique solution on $I$.

Theorem 2.1.2 (Principle of superposition). If $y_1$ and $y_2$ are two solutions of the homogeneous equation
$$L[y] = 0,$$
then $c_1y_1 + c_2y_2$ is also a solution for any constants $c_1$ and $c_2$.

Let $y_1$ and $y_2$ be two solutions of a second order linear ordinary differential equation. Then we define the Wronskian (or Wronskian determinant) to be
$$W(t) = W(y_1, y_2)(t) = \begin{vmatrix} y_1(t) & y_2(t)\\ y_1'(t) & y_2'(t)\end{vmatrix} = y_1(t)y_2'(t) - y_1'(t)y_2(t).$$
Suppose $y_1$ and $y_2$ are two solutions with $W(t_0) \neq 0$; then the solution of the initial value problem can be expressed in terms of $y_1$ and $y_2$.

Theorem 2.1.3. Let $t_0 \in I$. Suppose $y_1$ and $y_2$ are two solutions of the homogeneous equation
$$L[y] = 0, \quad t \in I,$$
and
$$W(t_0) \neq 0.$$
Then the initial value problem for the homogeneous equation
$$\begin{cases} L[y] = 0,\\ y(t_0) = y_0, \quad y'(t_0) = y_0', \end{cases} \tag{2.1.1}$$
has a unique solution of the form
$$y(t) = c_1y_1(t) + c_2y_2(t)$$
for some constants $c_1$ and $c_2$.

Proof. Since $W(t_0) \neq 0$, the coefficient matrix below is invertible, so there exist $c_1, c_2$ such that
$$\begin{pmatrix} y_1(t_0) & y_2(t_0)\\ y_1'(t_0) & y_2'(t_0)\end{pmatrix}\begin{pmatrix} c_1\\ c_2\end{pmatrix} = \begin{pmatrix} y_0\\ y_0'\end{pmatrix}.$$
Then $y = c_1y_1 + c_2y_2$ is a solution to the initial value problem (2.1.1) by the principle of superposition, and it is the unique solution by Theorem 2.1.1.

Theorem 2.1.4. Suppose $y_1$ and $y_2$ are two solutions of the homogeneous equation $L[y] = 0$ such that $W(t_0) \neq 0$ for some $t_0 \in I$. Then the general solution of the equation is
$$y(t) = c_1y_1(t) + c_2y_2(t),$$
i.e., every solution of the equation can be written in this form.

Proof. Suppose $u(t)$ is a solution to the homogeneous equation $L[y] = 0$. Let
$$y_0 = u(t_0) \quad\text{and}\quad y_0' = u'(t_0).$$
By Theorem 2.1.3, the function $u(t)$, as a solution to the initial value problem (2.1.1), must be of the form $u(t) = c_1y_1(t) + c_2y_2(t)$.

Suppose $y_1$ and $y_2$ are two solutions on $I$ such that $W(t_0) \neq 0$ for some $t_0 \in I$; then $y_1, y_2$ are said to form a fundamental set of solutions of the equation.

Two functions $u(t)$ and $v(t)$ are said to be linearly dependent if there exist constants $k_1$ and $k_2$, not both zero, such that $k_1u(t) + k_2v(t) = 0$ for all $t \in I$. They are said to be linearly independent if they are not linearly dependent.

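Theorem 2.1.5. Let $u(t)$ and $v(t)$ be two differentiable functions on an open interval $I$. If $W(u, v)(t_0) \neq 0$ for some $t_0 \in I$, then $u$ and $v$ are linearly independent.

Note: The converse of the above theorem is false. For example, $u(t) = t^3$ and $v(t) = |t|^3$ are linearly independent on any open interval containing $0$, but $W(u, v)(t) \equiv 0$.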

Example 2.1.6. $y_1(t) = e^t$ and $y_2(t) = e^{-2t}$ form a fundamental set of solutions of
$$y'' + y' - 2y = 0$$
since $W(y_1, y_2) = e^t(-2e^{-2t}) - e^t(e^{-2t}) = -3e^{-t}$ is not identically zero.

Example 2.1.7. $y_1(t) = e^t$ and $y_2(t) = te^t$ form a fundamental set of solutions of
$$y'' - 2y' + y = 0$$
since $W(y_1, y_2) = e^t(te^t + e^t) - e^t(te^t) = e^{2t}$ is not identically zero.

Example 2.1.8. The functions $y_1(t) = 3$, $y_2(t) = \cos^2 t$ and $y_3(t) = -2\sin^2 t$ are linearly dependent since
$$2(3) + (-6)\cos^2 t + 3(-2\sin^2 t) = 0.$$
One may verify that the Wronskian
$$\begin{vmatrix} y_1 & y_2 & y_3\\ y_1' & y_2' & y_3'\\ y_1'' & y_2'' & y_3''\end{vmatrix} = 0.$$

Example 2.1.9. Show that $y_1(t) = t^{1/2}$ and $y_2(t) = t^{-1}$ form a fundamental set of solutions of
$$2t^2y'' + 3ty' - y = 0, \quad t > 0.$$
Solution: It is easy to check that $y_1$ and $y_2$ are solutions to the equation. Now
$$W(y_1, y_2)(t) = \begin{vmatrix} t^{1/2} & t^{-1}\\ \frac{1}{2}t^{-1/2} & -t^{-2}\end{vmatrix} = -\frac{3}{2}t^{-3/2}$$
is not identically zero. We conclude that $y_1$ and $y_2$ form a fundamental set of solutions of the equation.

Theorem 2.1.10 (Abel's Theorem). If $y_1$ and $y_2$ are solutions of the equation
$$L[y] = y'' + p(t)y' + q(t)y = 0,$$
where $p$ and $q$ are continuous on an open interval $I$, then
$$W(y_1, y_2)(t) = c\exp\left(-\int p(t)\,dt\right),$$
where $c$ is a constant. Further, $y_1$ and $y_2$ form a fundamental set of solutions if and only if $W(y_1, y_2)(t)$ is never zero in $I$.

Proof. Since $y_1$ and $y_2$ are solutions, we have
$$\begin{cases} y_1'' + p(t)y_1' + q(t)y_1 = 0,\\ y_2'' + p(t)y_2' + q(t)y_2 = 0.\end{cases}$$
If we multiply the first equation by $-y_2$, multiply the second equation by $y_1$ and add the resulting equations, we get
$$\begin{aligned}
(y_1y_2'' - y_1''y_2) + p(t)(y_1y_2' - y_1'y_2) &= 0\\
W' + p(t)W &= 0,
\end{aligned}$$
since $W' = (y_1y_2' - y_1'y_2)' = y_1y_2'' - y_1''y_2$. This is a first-order linear and separable differential equation. The solution can be obtained easily as
$$W(t) = c\exp\left(-\int p(t)\,dt\right),$$
where $c$ is a constant. Since the exponential function is never zero, $W(y_1, y_2)$ is never zero on $I$ if and only if $c \neq 0$, which is also equivalent to $y_1$ and $y_2$ forming a fundamental set of solutions.
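Abel's Theorem can be illustrated on Example 2.1.9. Writing $2t^2y'' + 3ty' - y = 0$ as $y'' + \frac{3}{2t}y' - \frac{1}{2t^2}y = 0$ gives $p(t) = \frac{3}{2t}$, so $\exp(-\int p\,dt) = t^{-3/2}$ and $W(t)t^{3/2}$ should be constant on $t > 0$. The sketch below evaluates the Wronskian from the exact derivatives; the helper name `wronskian` is illustrative.

```python
def wronskian(y1, dy1, y2, dy2, t):
    """W(y1, y2)(t) = y1(t) y2'(t) - y1'(t) y2(t)."""
    return y1(t) * dy2(t) - dy1(t) * y2(t)


# Example 2.1.9: y1 = t^(1/2), y2 = t^(-1) on t > 0, with their exact derivatives.
def y1(t):
    return t ** 0.5


def dy1(t):
    return 0.5 * t ** -0.5


def y2(t):
    return 1 / t


def dy2(t):
    return -1 / t ** 2


# Abel: W(t) = c * t^(-3/2), so W(t) * t^(3/2) should not depend on t (here c = -3/2).
ratios = [wronskian(y1, dy1, y2, dy2, t) * t ** 1.5 for t in (0.5, 1.0, 4.0, 9.0)]
print(ratios)
```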

Theorem 2.1.11. Let $y_1$ and $y_2$ be two solutions of
$$L[y] = y'' + p(t)y' + q(t)y = 0,$$
where $p$ and $q$ are continuous on an open interval $I$. Then the following statements are equivalent.

1. $y_1$ and $y_2$ form a fundamental set of solutions, i.e., $W(y_1, y_2)(t_0) \neq 0$ for some $t_0 \in I$.
2. $W(y_1, y_2)(t) \neq 0$ for all $t \in I$.
3. Every solution of the equation is of the form $c_1y_1 + c_2y_2$ for some constants $c_1, c_2$.
4. $y_1$ and $y_2$ are linearly independent.

Proof. (1)$\Rightarrow$(2): Follows from Abel's Theorem.

(2)$\Rightarrow$(3): By Theorem 2.1.4.

(3)$\Rightarrow$(4): Take any $t_0 \in I$. By Theorem 2.1.1, there exist solutions $u(t)$, $v(t)$ of the equation such that
$$\begin{cases} u(t_0) = 1,\\ u'(t_0) = 0,\end{cases} \quad\text{and}\quad \begin{cases} v(t_0) = 0,\\ v'(t_0) = 1.\end{cases}$$
Suppose (3) is true; then there exist constants $a_1, a_2, b_1, b_2$ such that
$$\begin{cases} u(t) = a_1y_1(t) + a_2y_2(t),\\ v(t) = b_1y_1(t) + b_2y_2(t).\end{cases}$$
Thus
$$\begin{pmatrix} y_1(t_0) & y_2(t_0)\\ y_1'(t_0) & y_2'(t_0)\end{pmatrix}\begin{pmatrix} a_1 & b_1\\ a_2 & b_2\end{pmatrix} = \begin{pmatrix} u(t_0) & v(t_0)\\ u'(t_0) & v'(t_0)\end{pmatrix} = \begin{pmatrix} 1 & 0\\ 0 & 1\end{pmatrix}.$$
Hence the matrix
$$\begin{pmatrix} y_1(t_0) & y_2(t_0)\\ y_1'(t_0) & y_2'(t_0)\end{pmatrix}$$
is non-singular. To prove (4), suppose $c_1y_1(t) + c_2y_2(t) = 0$ for all $t \in I$. Differentiating gives $c_1y_1'(t) + c_2y_2'(t) = 0$ for all $t \in I$, so
$$\begin{pmatrix} y_1(t_0) & y_2(t_0)\\ y_1'(t_0) & y_2'(t_0)\end{pmatrix}\begin{pmatrix} c_1\\ c_2\end{pmatrix} = \begin{pmatrix} 0\\ 0\end{pmatrix}.$$
This implies that $c_1 = c_2 = 0$. Therefore $y_1$ and $y_2$ are linearly independent.

(4)$\Rightarrow$(1): Suppose (1) is not true, i.e., $W(y_1, y_2)(t)$ is identically zero. Let $t_0 \in I$ be any point; we have $W(y_1, y_2)(t_0) = 0$. Consequently, there exist constants $c_1$ and $c_2$, not both zero, such that
$$\begin{cases} c_1y_1(t_0) + c_2y_2(t_0) = 0,\\ c_1y_1'(t_0) + c_2y_2'(t_0) = 0.\end{cases}$$
Let $u(t) = c_1y_1(t) + c_2y_2(t)$. Then $u(t)$ is also a solution to the equation with initial conditions
$$u(t_0) = u'(t_0) = 0.$$
Since the zero function solves the same initial value problem, $u(t) = 0$ for all $t \in I$ by the uniqueness in Theorem 2.1.1. Hence $y_1$ and $y_2$ are linearly dependent.

2.2 Reduction of order

Suppose we know one solution $y_1(t)$, not everywhere zero, of
$$y'' + p(t)y' + q(t)y = 0. \tag{2.2.1}$$
To find a second solution, let
$$y(t) = v(t)y_1(t).$$
We have
$$y' = v'y_1 + vy_1' \quad\text{and}\quad y'' = v''y_1 + 2v'y_1' + vy_1''.$$
Then (2.2.1) becomes
$$y_1v'' + (2y_1' + py_1)v' + (y_1'' + py_1' + qy_1)v = 0. \tag{2.2.2}$$
Since $y_1$ is a solution of (2.2.1), the coefficient of $v$ in (2.2.2) is zero. So the equation reads
$$y_1v'' + (2y_1' + py_1)v' = 0,$$
which is actually a first order equation for $v'$.

Example 2.2.1. Given that $y_1(t) = e^{-2t}$ is a solution of
$$y'' + 4y' + 4y = 0,$$
find the general solution of the equation.

Solution: We set $y = e^{-2t}v$; then
$$y' = e^{-2t}v' - 2e^{-2t}v, \quad\text{and}\quad y'' = e^{-2t}v'' - 4e^{-2t}v' + 4e^{-2t}v.$$
Thus the equation becomes
$$\begin{aligned}
e^{-2t}v'' - 4e^{-2t}v' + 4e^{-2t}v + 4(e^{-2t}v' - 2e^{-2t}v) + 4e^{-2t}v &= 0\\
e^{-2t}v'' &= 0\\
v'' &= 0\\
v' &= C_1\\
v &= C_1t + C_2
\end{aligned}$$
Therefore the general solution is
$$y = e^{-2t}(C_1t + C_2) = C_1te^{-2t} + C_2e^{-2t}.$$

Example 2.2.2. Given that $y_1(t) = t^{-1}$ is a solution of
$$2t^2y'' + 3ty' - y = 0, \quad t > 0,$$
find the general solution of the equation.

Solution: We set $y = vt^{-1}$; then
$$y' = v't^{-1} - vt^{-2}, \quad\text{and}\quad y'' = v''t^{-1} - 2v't^{-2} + 2vt^{-3}.$$
Thus the equation becomes
$$\begin{aligned}
2t^2(v''t^{-1} - 2v't^{-2} + 2vt^{-3}) + 3t(v't^{-1} - vt^{-2}) - vt^{-1} &= 0\\
2tv'' - v' &= 0\\
t^{-\frac12}v'' - \frac{1}{2}t^{-\frac32}v' &= 0\\
\frac{d}{dt}\left(t^{-\frac12}v'\right) &= 0\\
t^{-\frac12}v' &= C\\
v' &= Ct^{\frac12}\\
v &= C_1t^{\frac32} + C_2
\end{aligned}$$
Therefore the general solution is
$$y = (C_1t^{\frac32} + C_2)t^{-1} = C_1t^{\frac12} + C_2t^{-1}.$$

2.3 Homogeneous equations with constant coefficients

We consider the homogeneous equation with constant coefficients
$$a\frac{d^2y}{dt^2} + b\frac{dy}{dt} + cy = 0, \quad t \in \mathbb{R}.$$
The equation
$$ar^2 + br + c = 0$$
is called the characteristic equation of the differential equation. Let $r_1, r_2$ be the two roots (possibly equal) of the characteristic equation.

Case 1. Both $r_1, r_2$ are real, and $r_1 \neq r_2$ ($b^2 - 4ac > 0$). Then the general solution is given by
$$y = C_1e^{r_1t} + C_2e^{r_2t},$$
where $C_1, C_2$ are arbitrary constants.

Case 2. $r_1 = r_2$ is real ($b^2 - 4ac = 0$). Then the general solution is given by
$$y = C_1e^{r_1t} + C_2te^{r_1t},$$
where $C_1, C_2$ are arbitrary constants.

Case 3. Both $r_1, r_2$ are not real ($b^2 - 4ac < 0$). Write
$$r_1, r_2 = \lambda \pm i\mu \quad (\mu > 0).$$
Then the general solution is given by
$$y = e^{\lambda t}\left(C_1\cos(\mu t) + C_2\sin(\mu t)\right),$$
where $C_1, C_2$ are arbitrary constants.
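The three cases above translate directly into a small initial value problem solver. This is a minimal sketch, assuming the initial conditions are given at $t_0 = 0$ and that $a \neq 0$; the function name `solve_const_coeff` is hypothetical. The exact test `disc == 0` is only reliable when the coefficients are exactly representable (e.g. integers); with floating-point data a tolerance would be needed.

```python
import math


def solve_const_coeff(a, b, c, y0, dy0):
    """Return a function t -> y(t) solving a y'' + b y' + c y = 0, y(0) = y0, y'(0) = dy0.

    Assumes a != 0 and initial data at t0 = 0; the branches follow Cases 1-3.
    """
    disc = b * b - 4 * a * c
    if disc > 0:  # Case 1: distinct real roots
        r1 = (-b + math.sqrt(disc)) / (2 * a)
        r2 = (-b - math.sqrt(disc)) / (2 * a)
        C1 = (dy0 - r2 * y0) / (r1 - r2)  # from C1 + C2 = y0, r1 C1 + r2 C2 = dy0
        C2 = y0 - C1
        return lambda t: C1 * math.exp(r1 * t) + C2 * math.exp(r2 * t)
    if disc == 0:  # Case 2: repeated real root
        r = -b / (2 * a)
        C1, C2 = y0, dy0 - r * y0  # y(0) = C1, y'(0) = r C1 + C2
        return lambda t: (C1 + C2 * t) * math.exp(r * t)
    lam = -b / (2 * a)  # Case 3: roots lam +/- i mu with mu > 0
    mu = math.sqrt(-disc) / (2 * abs(a))
    C1, C2 = y0, (dy0 - lam * y0) / mu  # y(0) = C1, y'(0) = lam C1 + mu C2
    return lambda t: math.exp(lam * t) * (C1 * math.cos(mu * t) + C2 * math.sin(mu * t))


y = solve_const_coeff(16, -8, 145, -2, 1)  # the initial value problem of Example 2.3.3
print(y(0.0), y(1.0))
```

Each branch solves the $2 \times 2$ system for $C_1, C_2$ in closed form, which is exactly what the worked examples below do by hand.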

Example 2.3.1. Solve
$$y'' + 5y' + 6y = 0.$$
Solution: The roots of the characteristic equation $r^2 + 5r + 6 = 0$ are $-2$ and $-3$, so the general solution is
$$y = C_1e^{-2t} + C_2e^{-3t}.$$

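Example 2.3.2. Solve the initial value problem
$$4y'' - 4y' + y = 0, \quad y(0) = 2, \quad y'(0) = -4.$$
Solution: The characteristic equation $4r^2 - 4r + 1 = (2r - 1)^2 = 0$ has the double root $r = 1/2$, so the general solution is
$$y = C_1e^{t/2} + C_2te^{t/2}.$$
Now
$$\begin{cases} y(0) = C_1 = 2\\ y'(0) = C_1/2 + C_2 = -4\end{cases} \quad\Longrightarrow\quad \begin{cases} C_1 = 2\\ C_2 = -5\end{cases}$$
Thus
$$y = 2e^{t/2} - 5te^{t/2}.$$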

Example 2.3.3. Solve the initial value problem
$$16y'' - 8y' + 145y = 0, \quad y(0) = -2, \quad y'(0) = 1.$$
Solution: The roots of the characteristic equation are
$$r_1, r_2 = \frac{1}{4} \pm 3i.$$
Thus the general solution is
$$y = C_1e^{t/4}\cos 3t + C_2e^{t/4}\sin 3t.$$
Now
$$\begin{cases} y(0) = C_1 = -2\\ y'(0) = C_1/4 + 3C_2 = 1\end{cases} \quad\Longrightarrow\quad \begin{cases} C_1 = -2\\ C_2 = 1/2\end{cases}$$
Thus
$$y = -2e^{t/4}\cos 3t + \frac{1}{2}e^{t/4}\sin 3t.$$
Note: This is a case of growing oscillation.

[Figure: plot of y(t) against t, 0 ≤ t ≤ 10.]

Example 2.3.4. Solve
$$y'' + y' + y = 0.$$
Solution: The roots of the characteristic equation are
$$r_1, r_2 = -\frac{1}{2} \pm \frac{\sqrt{3}}{2}i.$$
Thus the general solution is
$$y = C_1e^{-t/2}\cos(\sqrt{3}t/2) + C_2e^{-t/2}\sin(\sqrt{3}t/2),$$
where $C_1, C_2$ are arbitrary constants. This is a case of decaying oscillation.

[Figure: plot of y(t) against t, 0 ≤ t ≤ 16.]

Example 2.3.5. Solve
$$y'' + 9y = 0.$$
Solution: The general solution is
$$y = C_1\cos 3t + C_2\sin 3t,$$
which oscillates steadily.

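Note: In the second case, i.e. $r_1 = r_2$, the solution $y = te^{r_1t}$ can be obtained by the method of reduction of order explained in Section 2.2. Taking $a = 1$ (divide the equation by $a$), we determine a function $v(t)$ such that $y = v(t)e^{r_1t}$ becomes a solution of the differential equation. Since
$$\begin{aligned}
y' &= v(t)r_1e^{r_1t} + v'(t)e^{r_1t},\\
y'' &= v(t)r_1^2e^{r_1t} + 2r_1v'(t)e^{r_1t} + v''(t)e^{r_1t},
\end{aligned}$$
and since $r_1^2 + br_1 + c = 0$ and $b + 2r_1 = 0$ for the double root $r_1 = -b/2$, we get
$$y'' + by' + cy = (r_1^2 + br_1 + c)v(t)e^{r_1t} + (b + 2r_1)v'(t)e^{r_1t} + v''(t)e^{r_1t} = v''(t)e^{r_1t}.$$
Hence $v'' = 0$, i.e. $v(t) = k_1t + k_2$ for some constants $k_1, k_2$. Taking $k_1 = 1$, $k_2 = 0$ shows that $y = te^{r_1t}$ is a solution.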

2.4 Method of Undetermined Coefficients

Throughout this section, we assume that the coefficient of $y''$ is 1 and consider
$$L[y] = y'' + by' + cy.$$

To solve the nonhomogeneous equation
$$L[y] = g \quad\text{on } I, \tag{2.4.1}$$
it suffices to obtain the general solution $y = C_1y_1 + C_2y_2$ (as described above) of the associated homogeneous equation
$$L[y] = 0, \tag{2.4.2}$$
and find a (particular) solution $Y$ of (2.4.1). Indeed, any solution $u$ of (2.4.1) is then given by
$$u = C_1y_1 + C_2y_2 + Y$$
for some (suitable) constants $C_1$ and $C_2$. This holds because $u - Y$ is a solution of (2.4.2), as $L[u - Y] = L[u] - L[Y] = g - g = 0$. Hence, by the preceding section, $u - Y = C_1y_1 + C_2y_2$ for some particular constants $C_1$ and $C_2$.

When g(t) = a

1

g

1

(t) + a

2

g

2

(t) + + a

k

g

k

(t) where a

1

, a

2

, , a

k

are real numbers and each

g

i

(t) is of the form e

t

, cos t, sin t, e

t

cos t, e

t

sin t, a polynomial in t or a product of a

polynomial and one of the above functions, then a particular solution Y

i

(t) is of the form which

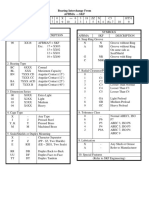

is listed in the following table.

The particular solution of $ay'' + by' + cy = g_i(t)$

$$\begin{array}{l|l}
g_i(t) & Y_i(t) \\ \hline
P_n(t) = a_nt^n + \cdots + a_1t + a_0 & t^s(A_nt^n + \cdots + A_1t + A_0) \\
P_n(t)e^{\alpha t} & t^s(A_nt^n + \cdots + A_1t + A_0)e^{\alpha t} \\
P_n(t)\cos\beta t,\ P_n(t)\sin\beta t & t^s\big[(A_nt^n + \cdots + A_1t + A_0)\cos\beta t + (B_nt^n + \cdots + B_1t + B_0)\sin\beta t\big] \\
P_n(t)e^{\alpha t}\cos\beta t,\ P_n(t)e^{\alpha t}\sin\beta t & t^s\big[(A_nt^n + \cdots + A_1t + A_0)e^{\alpha t}\cos\beta t + (B_nt^n + \cdots + B_1t + B_0)e^{\alpha t}\sin\beta t\big]
\end{array}$$

Notes: Here $s = 0, 1, 2$ is the smallest nonnegative integer that will ensure that no term in $Y_i(t)$ is a solution of the corresponding homogeneous equation.
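For the monic equation $y'' + by' + cy = g$ of this section, the exponent $s$ in the table can be read off as the number of times $\alpha + i\beta$ occurs as a root of $r^2 + br + c = 0$ (take $\beta = 0$ for exponentials and polynomials, $\alpha = 0$ for pure sines and cosines). The sketch below computes it under that assumption; the function name `multiplicity_s` and the tolerance `tol` are our own choices, and the floating-point root test is only meant for simple numbers like those in the examples that follow.

```python
def multiplicity_s(b, c, alpha, beta=0.0, tol=1e-9):
    """Return s for y'' + b y' + c y = g when g involves e^(alpha t) cos/sin(beta t).

    s is the multiplicity (0, 1 or 2) of alpha + i*beta as a root of the
    characteristic polynomial Z(r) = r^2 + b r + c.
    """
    lam = complex(alpha, beta)
    z = lam * lam + b * lam + c   # Z(lam)
    dz = 2 * lam + b              # Z'(lam)
    if abs(z) > tol:
        return 0                  # not a root: no extra factor of t needed
    if abs(dz) > tol:
        return 1                  # simple root: multiply the trial form by t
    return 2                      # double root: multiply by t^2


# Forcing terms taken from Examples 2.4.3, 2.4.4, 2.4.8 and 2.4.9
print(multiplicity_s(1, -2, 2))      # e^{2t} in y'' + y' - 2y          -> 0
print(multiplicity_s(1, -2, 1))      # e^{t} in y'' + y' - 2y           -> 1
print(multiplicity_s(-4, 5, 2, 1))   # e^{2t} cos t in y'' - 4y' + 5y   -> 1
print(multiplicity_s(-4, 4, 2))      # t e^{2t} in y'' - 4y' + 4y       -> 2
```

Using the multiplicity avoids listing trial terms and checking each one against the homogeneous solutions by hand.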

Example 2.4.3. Find a particular solution of $y'' + y' - 2y = 8e^{2t}$.

Solution: A particular solution is of the form $Y = Ae^{2t}$, where $A$ is a constant to be determined. Now
$$Y'' + Y' - 2Y = 4Ae^{2t}.$$
So we set $A = 2$, and a particular solution is given by
$$Y = 2e^{2t}.$$

Example 2.4.4. $y'' + y' - 2y = 9e^{t}$.

Solution: (This time $Y = Ae^{t}$ does not work, since $e^{t}$ solves the homogeneous equation.) Now 1 is a (simple) root of the characteristic equation $r^2 + r - 2 = 0$. We find a particular solution by trying
$$Y = Ate^{t}.$$
Then
$$Y'' + Y' - 2Y = (At + 2A)e^{t} + (At + A)e^{t} - 2Ate^{t} = 3Ae^{t}.$$
So we set $A = 3$, and a particular solution is given by
$$Y = 3te^{t}.$$

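Example 2.4.5. $y'' + y' - 2y = 10\sin t$.

Solution: We set $Y = A\cos t + B\sin t$. Then
$$Y' = B\cos t - A\sin t, \qquad Y'' = -A\cos t - B\sin t.$$
Now
$$Y'' + Y' - 2Y = (-3A + B)\cos t + (-A - 3B)\sin t.$$
So we set
$$\begin{cases} -3A + B = 0,\\ -A - 3B = 10, \end{cases}$$
which gives $A = -1$ and $B = -3$. Therefore a particular solution is given by
$$Y(t) = -\cos t - 3\sin t.$$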

Example 2.4.6. $y'' + y' - 2y = 26e^{t}\cos 2t$.

Solution: A particular solution is of the form $Y = Ae^{t}\cos 2t + Be^{t}\sin 2t$. Then
$$Y' = (A + 2B)e^{t}\cos 2t + (-2A + B)e^{t}\sin 2t,$$
$$Y'' = (-3A + 4B)e^{t}\cos 2t + (-4A - 3B)e^{t}\sin 2t.$$
Now
$$Y'' + Y' - 2Y = (-4A + 6B)e^{t}\cos 2t + (-6A - 4B)e^{t}\sin 2t.$$
Put
$$\begin{cases} -4A + 6B = 26,\\ -6A - 4B = 0. \end{cases}$$
We have $A = -2$ and $B = 3$. Therefore a particular solution is given by
$$Y = -2e^{t}\cos 2t + 3e^{t}\sin 2t.$$
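When $s = 0$ the coefficients can also be obtained without solving a linear system, by a standard shortcut that these notes do not use: since $L[e^{\lambda t}] = Z(\lambda)e^{\lambda t}$ with $Z(r) = r^2 + br + c$, the function $\frac{F}{Z(\lambda)}e^{\lambda t}$ with $\lambda = \alpha + i\beta$ solves $L[y] = Fe^{\lambda t}$, and because $L$ has real coefficients its real (imaginary) part solves the equation with right-hand side $Fe^{\alpha t}\cos\beta t$ ($Fe^{\alpha t}\sin\beta t$). The sketch below, with a function name of our own, returns $(A, B)$ such that $Y = e^{\alpha t}(A\cos\beta t + B\sin\beta t)$, and is checked against Examples 2.4.3, 2.4.5 and 2.4.6.

```python
def coefficients(b, c, F, alpha, beta, kind="cos"):
    """Particular solution of y'' + b y' + c y = F e^(alpha t) cos(beta t)
    (kind="cos") or F e^(alpha t) sin(beta t) (kind="sin").

    Returns (A, B) with Y = e^(alpha t) (A cos(beta t) + B sin(beta t)).
    Only valid when s = 0, i.e. alpha + i*beta is not a characteristic root.
    """
    lam = complex(alpha, beta)
    z = lam * lam + b * lam + c           # Z(lam)
    if abs(z) < 1e-12:
        raise ValueError("alpha + i*beta is a characteristic root: use t^s")
    w = F / z                             # Y is Re or Im of w e^(lam t)
    if kind == "cos":                     # Re[w (cos + i sin)] = Re w cos - Im w sin
        return w.real, -w.imag
    return w.imag, w.real                 # Im[w (cos + i sin)] = Im w cos + Re w sin


print(coefficients(1, -2, 8, 2, 0))           # Example 2.4.3: about (2, 0)
print(coefficients(1, -2, 10, 0, 1, "sin"))   # Example 2.4.5: about (-1, -3)
print(coefficients(1, -2, 26, 1, 2))          # Example 2.4.6: about (-2, 3)
```

For a resonant forcing term such as $9e^{t}$ in Example 2.4.4 the function raises an error, which is exactly the situation where the table prescribes the extra factor $t^s$.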

Example 2.4.7. Find the general solution of
$$y'' + y' - 2y = 9e^{t} - 10\sin t + 26e^{t}\cos 2t.$$

Solution: The roots of the characteristic equation are $r = 1, -2$. Thus the general solution of the associated homogeneous equation is
$$y_c = c_1e^{t} + c_2e^{-2t}.$$
By Examples 2.4.4, 2.4.5 and 2.4.6 (note that $Y_2$ is the negative of the solution found in Example 2.4.5), the particular solutions corresponding to each term on the right-hand side are
$$\begin{aligned}
y'' + y' - 2y &= 9e^{t} &&\Longrightarrow\quad Y_1 = 3te^{t},\\
y'' + y' - 2y &= -10\sin t &&\Longrightarrow\quad Y_2 = \cos t + 3\sin t,\\
y'' + y' - 2y &= 26e^{t}\cos 2t &&\Longrightarrow\quad Y_3 = -2e^{t}\cos 2t + 3e^{t}\sin 2t.
\end{aligned}$$
Therefore the general solution is
$$y = c_1e^{t} + c_2e^{-2t} + 3te^{t} + \cos t + 3\sin t - 2e^{t}\cos 2t + 3e^{t}\sin 2t.$$
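Because minus signs are easy to lose in this kind of computation, a quick numerical sanity check is to evaluate the residual $Y'' + Y' - 2Y - g$ of the particular part with central differences; it should vanish up to discretisation error. This is only a rough sketch: the step size `h` and the sample points are arbitrary choices of ours.

```python
import math

def g(t):
    """Right-hand side of Example 2.4.7."""
    return 9 * math.exp(t) - 10 * math.sin(t) + 26 * math.exp(t) * math.cos(2 * t)

def Y(t):
    """Y1 + Y2 + Y3 from Example 2.4.7."""
    return (3 * t * math.exp(t) + math.cos(t) + 3 * math.sin(t)
            - 2 * math.exp(t) * math.cos(2 * t) + 3 * math.exp(t) * math.sin(2 * t))

def residual(f, t, h=1e-4):
    """Central-difference estimate of f'' + f' - 2f - g at t."""
    d1 = (f(t + h) - f(t - h)) / (2 * h)
    d2 = (f(t + h) - 2 * f(t) + f(t - h)) / (h * h)
    return d2 + d1 - 2 * f(t) - g(t)

worst = max(abs(residual(Y, t)) for t in (0.0, 0.5, 1.0, 1.5))
print(f"largest residual: {worst:.1e}")   # tiny compared with |g|
```

Flipping the sign of any single term (for instance using $-\cos t$ instead of $+\cos t$) makes the residual of order one, so the check does discriminate.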

Example 2.4.8. Write down the form of a particular solution for the equation
$$y'' - 4y' + 5y = t^2e^{2t} + 3t\sin t - 2e^{2t}\cos t.$$

Solution: The roots of the characteristic equation are $r = 2 \pm i$. Thus a particular solution is of the form
$$Y(t) = (A_2t^2 + A_1t + A_0)e^{2t} + (B_1t + B_0)\cos t + (C_1t + C_0)\sin t + D_1te^{2t}\cos t + D_2te^{2t}\sin t.$$

Example 2.4.9. Write down the form of a particular solution for the equation
$$y'' - 4y' + 4y = te^{2t} + t^2e^{3t}.$$

Solution: The characteristic equation has a double root $r = 2$. Thus a particular solution is of the form
$$Y(t) = (A_1t^3 + A_0t^2)e^{2t} + (B_2t^2 + B_1t + B_0)e^{3t}.$$

2.5 Variation of Parameters

Theorem 2.5.1. Let
$$W(y_1, y_2) = \begin{vmatrix} y_1 & y_2 \\ y_1' & y_2' \end{vmatrix} = y_1y_2' - y_1'y_2,$$
where $y_1, y_2$ form a fundamental set of solutions of
$$y'' + p(t)y' + q(t)y = 0 \quad \text{on } I.$$
Let $u_1, u_2$ be such that
$$u_1' = -\frac{gy_2}{W(y_1, y_2)} \quad \text{and} \quad u_2' = \frac{gy_1}{W(y_1, y_2)},$$
where $g(t)$ is a given continuous function on $I$. Then $Y = u_1y_1 + u_2y_2$ is a particular solution of
$$y'' + p(t)y' + q(t)y = g(t) \quad \text{on } I.$$


Proof. Note that $u_1', u_2'$ satisfy
$$\begin{pmatrix} y_1 & y_2 \\ y_1' & y_2' \end{pmatrix}\begin{pmatrix} u_1' \\ u_2' \end{pmatrix} = \begin{pmatrix} 0 \\ g \end{pmatrix}.$$
Let $Y = u_1y_1 + u_2y_2$. Then
$$Y' = u_1'y_1 + u_1y_1' + u_2'y_2 + u_2y_2' = u_1y_1' + u_2y_2'$$
since $u_1'y_1 + u_2'y_2 = 0$, and
$$Y'' = u_1y_1'' + u_2y_2'' + u_1'y_1' + u_2'y_2' = u_1y_1'' + u_2y_2'' + g$$
since $u_1'y_1' + u_2'y_2' = g$. Therefore
$$\begin{aligned}
Y'' + pY' + qY &= (u_1y_1'' + u_2y_2'' + g) + p(u_1y_1' + u_2y_2') + q(u_1y_1 + u_2y_2)\\
&= u_1(y_1'' + py_1' + qy_1) + u_2(y_2'' + py_2' + qy_2) + g\\
&= g,
\end{aligned}$$
since $y_1, y_2$ are solutions to the homogeneous equation $y'' + py' + qy = 0$.
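The theorem translates directly into a numerical procedure when the integrals for $u_1$ and $u_2$ have no closed form. The sketch below assumes we can evaluate $y_1, y_1', y_2, y_2'$ and $g$, fixes the integration constants by $u_1(t_0) = u_2(t_0) = 0$, and uses composite Simpson's rule; the helper names and the test problem $y'' + y = t$ (with $y_1 = \cos t$, $y_2 = \sin t$, for which the recipe gives $Y(t) = t - \sin t$) are our own illustrations.

```python
import math

def simpson(f, a, b, n=200):
    """Composite Simpson's rule for the integral of f over [a, b] (n made even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3

def particular_solution(y1, dy1, y2, dy2, g, t0, t):
    """Y(t) = u1(t) y1(t) + u2(t) y2(t) with u1' = -g y2 / W, u2' = g y1 / W,
    integration constants chosen so that u1(t0) = u2(t0) = 0."""
    def W(s):
        return y1(s) * dy2(s) - dy1(s) * y2(s)
    u1 = simpson(lambda s: -g(s) * y2(s) / W(s), t0, t)
    u2 = simpson(lambda s: g(s) * y1(s) / W(s), t0, t)
    return u1 * y1(t) + u2 * y2(t)

# Hypothetical test problem: y'' + y = t, fundamental set cos t, sin t (W = 1).
approx = particular_solution(math.cos, lambda s: -math.sin(s),
                             math.sin, math.cos, lambda s: s, 0.0, 1.0)
print(approx, 1 - math.sin(1.0))   # the two numbers should agree closely
```

Choosing $u_1(t_0) = u_2(t_0) = 0$ is just one convenient normalisation; any other choice changes $Y$ by a solution of the homogeneous equation.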

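Example 2.5.2. Solve
$$y'' + 4y = \frac{3}{\sin t}.$$

Solution: Here $g(t) = 3/\sin t$ is continuous on any interval where $\sin t \ne 0$, for example $0 < t < \pi$. Solving the corresponding homogeneous equation, we let
$$y_1 = \cos 2t, \qquad y_2 = \sin 2t.$$
We have
$$W(y_1, y_2)(t) = \begin{vmatrix} \cos 2t & \sin 2t \\ -2\sin 2t & 2\cos 2t \end{vmatrix} = 2.$$
So, using $\sin 2t = 2\sin t\cos t$ and $\cos 2t = 1 - 2\sin^2 t$,
$$\begin{cases}
u_1' = -\dfrac{gy_2}{W(t)} = -\dfrac{3\sin 2t}{2\sin t} = -3\cos t,\\[2ex]
u_2' = \dfrac{gy_1}{W(t)} = \dfrac{3\cos 2t}{2\sin t} = \dfrac{3}{2\sin t} - 3\sin t.
\end{cases}$$
Hence
$$\begin{cases}
u_1 = -3\sin t + c_1,\\[1ex]
u_2 = \dfrac{3}{2}\ln|\csc t - \cot t| + 3\cos t + c_2,
\end{cases}$$
and, since $-3\sin t\cos 2t + 3\cos t\sin 2t = 3\sin(2t - t) = 3\sin t$, the general solution is
$$\begin{aligned}
y &= c_1\cos 2t + c_2\sin 2t - 3\sin t\cos 2t + \frac{3}{2}\sin 2t\ln|\csc t - \cot t| + 3\cos t\sin 2t\\
&= c_1\cos 2t + c_2\sin 2t + \frac{3}{2}\sin 2t\ln|\csc t - \cot t| + 3\sin t,
\end{aligned}$$
where $c_1, c_2$ are constants.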


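Example 2.5.3. Solve
$$y'' - 3y' + 2y = \frac{e^{3t}}{e^{t} + 1}.$$

Solution: Solving the corresponding homogeneous equation, we let
$$y_1 = e^{t}, \qquad y_2 = e^{2t}.$$
We have
$$W(y_1, y_2)(t) = \begin{vmatrix} e^{t} & e^{2t} \\ e^{t} & 2e^{2t} \end{vmatrix} = e^{3t}.$$
So
$$u_1' = -\frac{gy_2}{W(t)} = -\left(\frac{e^{3t}}{e^{t} + 1}\,e^{2t}\right)\Big/e^{3t} = -\frac{e^{2t}}{e^{t} + 1},
\qquad
u_2' = \frac{gy_1}{W(t)} = \left(\frac{e^{3t}}{e^{t} + 1}\,e^{t}\right)\Big/e^{3t} = \frac{e^{t}}{e^{t} + 1}.$$
Thus
$$u_1 = -\int\frac{e^{2t}}{e^{t} + 1}\,dt = -\int\frac{e^{t}}{e^{t} + 1}\,d(e^{t}) = \int\left(\frac{1}{e^{t} + 1} - 1\right)d(e^{t} + 1) = \log(e^{t} + 1) - (e^{t} + 1) + c_1$$
and
$$u_2 = \int\frac{e^{t}}{e^{t} + 1}\,dt = \int\frac{1}{e^{t} + 1}\,d(e^{t} + 1) = \log(e^{t} + 1) + c_2.$$
Therefore the general solution is
$$\begin{aligned}
y = u_1y_1 + u_2y_2 &= \big(\log(e^{t} + 1) - (e^{t} + 1) + c_1\big)e^{t} + \big(\log(e^{t} + 1) + c_2\big)e^{2t}\\
&= c_1e^{t} + c_2e^{2t} + (e^{t} + e^{2t})\log(e^{t} + 1),
\end{aligned}$$
where in the last step the terms $-e^{t}$ and $-e^{2t}$, which solve the homogeneous equation, have been absorbed into the arbitrary constants $c_1$ and $c_2$.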
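As with the undetermined-coefficients examples, the particular parts found in Examples 2.5.2 and 2.5.3 can be spot-checked numerically by plugging them into their equations with central differences. This is a rough sketch with an arbitrary step size `h`; the sample points for Example 2.5.2 are taken inside $0 < t < \pi$, where $\sin t \ne 0$.

```python
import math

def residual(Y, p, q, g, t, h=1e-4):
    """Central-difference estimate of Y'' + p Y' + q Y - g at t (p, q constant)."""
    d1 = (Y(t + h) - Y(t - h)) / (2 * h)
    d2 = (Y(t + h) - 2 * Y(t) + Y(t - h)) / (h * h)
    return d2 + p * d1 + q * Y(t) - g(t)

def Y_a(t):
    """Particular part of Example 2.5.2 (c1 = c2 = 0)."""
    csc, cot = 1 / math.sin(t), 1 / math.tan(t)
    return 1.5 * math.sin(2 * t) * math.log(abs(csc - cot)) + 3 * math.sin(t)

def Y_b(t):
    """Particular part of Example 2.5.3 (c1 = c2 = 0)."""
    return (math.exp(t) + math.exp(2 * t)) * math.log(math.exp(t) + 1)

res_a = max(abs(residual(Y_a, 0, 4, lambda t: 3 / math.sin(t), t))
            for t in (0.5, 1.0, 2.0))
res_b = max(abs(residual(Y_b, -3, 2, lambda t: math.exp(3 * t) / (math.exp(t) + 1), t))
            for t in (0.0, 0.5, 1.0))
print(res_a, res_b)   # both should be tiny
```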

2.6 Mechanical and electrical vibrations

One of the reasons why second order linear equations with constant coefficients are worth studying is that they serve as mathematical models of simple vibrations.

Mechanical vibrations

Consider a mass $m$ hanging on the end of a vertical spring of original length $l$. Let $u(t)$, measured positive downward, denote the displacement of the mass from its equilibrium position at time $t$. Then $u(t)$ is related to the forces acting on the mass through Newton's law of motion
$$mu''(t) + ku(t) = f(t), \tag{2.6.1}$$
where $k$ is the spring constant and $f(t)$ is the net force (excluding gravity and the force from the spring) acting on the mass.

Undamped free vibrations

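If there is no external force, then $f(t) = 0$ and equation (2.6.1) reduces to
$$mu''(t) + ku(t) = 0.$$
The general solution is
$$u = C_1\cos\omega_0t + C_2\sin\omega_0t,$$
where
$$\omega_0 = \sqrt{\frac{k}{m}}$$
is the natural frequency of the system. The period of the vibration is given by
$$T = \frac{2\pi}{\omega_0} = 2\pi\sqrt{\frac{m}{k}}.$$
We can also write the solution in the form
$$u(t) = A\cos(\omega_0t - \delta).$$
Then $A$ is the amplitude of the vibration. Moreover, $u$ satisfies the initial conditions
$$\begin{cases} u(0) = u_0 = A\cos\delta,\\ u'(0) = u_0' = A\omega_0\sin\delta. \end{cases}$$
Thus we have
$$\begin{cases} A = \sqrt{u_0^2 + \dfrac{u_0'^2}{\omega_0^2}},\\[2ex] \delta = \tan^{-1}\dfrac{u_0'}{u_0\omega_0}, \end{cases}$$
where the angle $\delta$ must be taken in the quadrant determined by the signs of $\cos\delta$ and $\sin\delta$, i.e. by the signs of $u_0$ and $u_0'$.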
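In code, the quadrant issue for $\delta$ is handled by the two-argument arctangent `math.atan2(y, x)`, which uses the signs of both $x = A\cos\delta = u_0$ and $y = A\sin\delta = u_0'/\omega_0$. The sketch below (function name ours) returns $A$, $\delta$ and $\omega_0$ for given initial data; the sample values are hypothetical and chosen with $u_0 < 0$, where the one-argument $\tan^{-1}$ would land in the wrong quadrant.

```python
import math

def amplitude_phase(u0, v0, m, k):
    """Return (A, delta, w0) with u(t) = A cos(w0 t - delta) solving
    m u'' + k u = 0, u(0) = u0, u'(0) = v0."""
    w0 = math.sqrt(k / m)
    A = math.hypot(u0, v0 / w0)        # sqrt(u0^2 + v0^2 / w0^2)
    delta = math.atan2(v0 / w0, u0)    # cos(delta) = u0 / A, sin(delta) = v0 / (w0 A)
    return A, delta, w0

A, delta, w0 = amplitude_phase(-1.0, 2.0, m=1.0, k=4.0)
print(A, delta, w0)                    # sqrt(2), 3*pi/4, 2.0
print(math.atan(2.0 / (-1.0 * w0)))    # -pi/4: one-argument arctan misses the quadrant
```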

Damped free vibrations

If we include the effect of damping, the differential equation governing the motion of the mass is
$$mu'' + \gamma u' + ku = 0,$$
where $\gamma$ is the damping coefficient. The roots of the corresponding characteristic equation are
$$r_1, r_2 = \frac{-\gamma \pm \sqrt{\gamma^2 - 4km}}{2m}.$$
The solution of the equation depends on the sign of $\gamma^2 - 4km$; the three cases are listed in the following table.

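Solution of $mu'' + \gamma u' + ku = 0$

$$\begin{array}{lll}
\text{Case} & \text{Solution} & \text{Damping} \\ \hline
\dfrac{\gamma^2}{4km} < 1 & e^{-\frac{\gamma}{2m}t}(C_1\cos\mu t + C_2\sin\mu t) & \text{Small damping} \\
\dfrac{\gamma^2}{4km} = 1 & (C_1t + C_2)e^{-\frac{\gamma}{2m}t} & \text{Critical damping} \\
\dfrac{\gamma^2}{4km} > 1 & C_1e^{r_1t} + C_2e^{r_2t} & \text{Overdamped}
\end{array}$$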


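Here
$$\mu = \sqrt{\frac{k}{m} - \frac{\gamma^2}{4m^2}} = \omega_0\sqrt{1 - \frac{\gamma^2}{4km}}$$
is called the quasi frequency. As $\gamma^2/4km$ increases from 0 to 1, the quasi frequency decreases from $\omega_0 = \sqrt{k/m}$ to 0, and the quasi period $2\pi/\mu$ increases from $2\pi\sqrt{m/k}$ to infinity.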
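The table and the quasi-frequency formula can be packaged into a small classifier. This is a sketch under our own conventions: the function name is hypothetical, the critical case is detected with `math.isclose` because an exact floating-point equality would rarely hold, and the sample values $m = 1$, $k = 4$ (so $\omega_0 = 2$) are arbitrary.

```python
import math

def classify_damping(m, gamma, k):
    """Classify m u'' + gamma u' + k u = 0 by gamma^2 / (4 k m), as in the table.

    Returns ("small", mu) with the quasi frequency mu, ("critical", None),
    or ("overdamped", (r1, r2)) with the two negative real roots.
    """
    ratio = gamma ** 2 / (4 * k * m)
    if math.isclose(ratio, 1.0):
        return "critical", None
    if ratio < 1:
        mu = math.sqrt(k / m) * math.sqrt(1 - ratio)    # omega_0 * sqrt(1 - ratio)
        return "small", mu
    disc = math.sqrt(gamma ** 2 - 4 * k * m)
    return "overdamped", ((-gamma + disc) / (2 * m), (-gamma - disc) / (2 * m))

for gamma in (1.0, 2.0, 4.0, 5.0):
    print(gamma, classify_damping(1.0, gamma, 4.0))
```

For $\gamma = 2$ the quasi frequency is $\sqrt{3} \approx 1.73$, below $\omega_0 = 2$, and the quasi period $2\pi/\mu$ is correspondingly longer than $2\pi/\omega_0$.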

Forced vibrations with damping

Suppose that an external force $F_0\cos\omega t$ is applied to a damped ($\gamma > 0$) spring-mass system. Then the equation of motion is
$$mu'' + \gamma u' + ku = F_0\cos\omega t.$$

The general solution of the equation must be of the form
$$u = c_1u_1(t) + c_2u_2(t) + A\cos(\omega t - \delta) = u_c(t) + U(t).$$
Since $m$, $\gamma$, $k$ are all positive, the real parts of the roots of the characteristic equation are always negative. Thus $u_c \to 0$ as $t \to \infty$, and it is called the transient solution. The remaining term $U(t)$ is called the steady-state solution or the forced response. Straightforward but somewhat lengthy computations show that
$$A = \frac{F_0}{\Delta}, \qquad \cos\delta = \frac{m(\omega_0^2 - \omega^2)}{\Delta}, \qquad \sin\delta = \frac{\gamma\omega}{\Delta},$$
where
$$\Delta = \sqrt{m^2(\omega_0^2 - \omega^2)^2 + \gamma^2\omega^2} \quad \text{and} \quad \omega_0 = \sqrt{k/m}.$$

If $\gamma^2 < 2mk$, resonance occurs, i.e. the maximum amplitude
$$A_{\max} = \frac{F_0}{\gamma\omega_0\sqrt{1 - \gamma^2/4mk}}$$
is obtained when
$$\omega = \omega_{\max} = \omega_0\sqrt{1 - \frac{\gamma^2}{2mk}}.$$

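We list in the following table how the amplitude $A$ and the phase angle $\delta$ of the steady-state oscillation depend on the frequency $\omega$ of the external force.

Amplitude and phase of forced vibration

$$\begin{array}{lll}
\text{Frequency} & \text{Amplitude} & \text{Phase difference} \\ \hline
\omega \to 0 & A \to \dfrac{F_0}{k} & \delta \to 0 \\
\omega = \omega_{\max} = \omega_0\sqrt{1 - \dfrac{\gamma^2}{2mk}} & A_{\max} = \dfrac{F_0}{\gamma\omega_0\sqrt{1 - \gamma^2/4mk}} & \delta = \cos^{-1}\dfrac{1}{\sqrt{4mk/\gamma^2 - 1}} \\
\omega = \omega_0 & A = \dfrac{F_0}{\gamma\omega_0} & \delta = \dfrac{\pi}{2} \\
\omega \to \infty & A \to 0 & \delta \to \pi
\end{array}$$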
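The entries of this table can be reproduced by evaluating $A = F_0/\Delta$ and $\delta$ directly. In the sketch below the function name and the sample parameters $m = 1$, $\gamma = 0.5$, $k = 4$, $F_0 = 1$ (which satisfy $\gamma^2 < 2mk$) are our own; $\delta$ is computed with `math.atan2` from $\sin\delta$ and $\cos\delta$, which places it in $[0, \pi]$ because $\sin\delta = \gamma\omega/\Delta \ge 0$.

```python
import math

def steady_state(m, gamma, k, F0, w):
    """Amplitude A and phase delta of U(t) = A cos(w t - delta) for
    m u'' + gamma u' + k u = F0 cos(w t) with gamma > 0."""
    w0 = math.sqrt(k / m)
    Delta = math.sqrt(m ** 2 * (w0 ** 2 - w ** 2) ** 2 + gamma ** 2 * w ** 2)
    A = F0 / Delta
    # sin(delta) = gamma w / Delta >= 0 and cos(delta) = m (w0^2 - w^2) / Delta
    delta = math.atan2(gamma * w, m * (w0 ** 2 - w ** 2))
    return A, delta

m, gamma, k, F0 = 1.0, 0.5, 4.0, 1.0     # sample values with gamma^2 < 2mk
w0 = math.sqrt(k / m)
w_max = w0 * math.sqrt(1 - gamma ** 2 / (2 * m * k))
A_max = F0 / (gamma * w0 * math.sqrt(1 - gamma ** 2 / (4 * m * k)))

for w in (1e-6, w_max, w0, 1e3):         # the four rows of the table
    print(w, steady_state(m, gamma, k, F0, w))
```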


Forced vibrations without damping

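The equation of motion of an undamped forced oscillator is
$$mu'' + ku = F_0\cos\omega t.$$
The general solution of the equation is
$$u = \begin{cases}
c_1\cos\omega_0t + c_2\sin\omega_0t + \dfrac{F_0\cos\omega t}{m(\omega_0^2 - \omega^2)}, & \omega \ne \omega_0,\\[2ex]
c_1\cos\omega_0t + c_2\sin\omega_0t + \dfrac{F_0t\sin\omega_0t}{2m\omega_0}, & \omega = \omega_0.
\end{cases}$$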

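Suppose $\omega \ne \omega_0$. If we assume that the mass is initially at rest, so that the initial conditions are $u(0) = u'(0) = 0$, then the solution is
$$u = \frac{F_0}{m(\omega_0^2 - \omega^2)}(\cos\omega t - \cos\omega_0t) = \frac{2F_0}{m(\omega_0^2 - \omega^2)}\sin\frac{(\omega_0 - \omega)t}{2}\sin\frac{(\omega_0 + \omega)t}{2}.$$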

If $|\omega_0 - \omega|$ is small, then $\omega_0 + \omega$ is much greater than $|\omega_0 - \omega|$. The motion is a rapid oscillation with frequency $(\omega_0 + \omega)/2$ but with a slowly varying sinusoidal amplitude
$$\frac{2F_0}{m|\omega_0^2 - \omega^2|}\sin\frac{(\omega_0 - \omega)t}{2}.$$
This type of motion is called a beat, and $|\omega_0 - \omega|/2$ is the beat frequency.
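The two forms of the solution, and the fact that $|u|$ stays below the slowly varying envelope, can be checked numerically. The parameter values below ($m = k = 1$, so $\omega_0 = 1$, with $F_0 = 0.5$ and $\omega = 0.9$) are hypothetical, chosen only so that $\omega$ is close to $\omega_0$; the envelope function takes the absolute value of the slow sine factor.

```python
import math

m, k, F0, w = 1.0, 1.0, 0.5, 0.9      # sample values: w close to w0 = 1
w0 = math.sqrt(k / m)
C = F0 / (m * (w0 ** 2 - w ** 2))

def u(t):
    """Solution with u(0) = u'(0) = 0, written as a difference of cosines."""
    return C * (math.cos(w * t) - math.cos(w0 * t))

def u_beat(t):
    """The same function as (slow sine) x (fast sine)."""
    return 2 * C * math.sin((w0 - w) * t / 2) * math.sin((w0 + w) * t / 2)

def envelope(t):
    """Absolute value of the slowly varying amplitude."""
    return 2 * F0 / (m * abs(w0 ** 2 - w ** 2)) * abs(math.sin((w0 - w) * t / 2))

ts = [0.37 * j for j in range(200)]
print(max(abs(u(t) - u_beat(t)) for t in ts))   # agreement of the two forms
print("beat frequency:", abs(w0 - w) / 2)
```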

Electric circuits

Second order linear differential equations with constant coefficients can also be used to study electric circuits. By Kirchhoff's law, the total charge $Q$ on the capacitor in a simple series LCR circuit satisfies the differential equation
$$L\frac{d^2Q}{dt^2} + R\frac{dQ}{dt} + \frac{Q}{C} = E(t),$$
where $L$ is the inductance, $R$ is the resistance, $C$ is the capacitance and $E(t)$ is the impressed voltage. Since the current in the circuit is $I = dQ/dt$, differentiating the equation with respect to $t$ gives
$$LI'' + RI' + C^{-1}I = E'(t).$$
Therefore the results for mechanical vibrations in the preceding paragraphs, with $L$, $R$ and $1/C$ playing the roles of $m$, $\gamma$ and $k$, can be used to study the LCR circuit.

3 Higher order Linear Equations

3.1 Solution space and Wronskian

The theoretical structure and methods of solution developed in the preceding chapter for second order linear equations extend directly to linear equations of third and higher order. An $n$th order linear differential equation is an equation of the form
$$L[y] = \frac{d^ny}{dt^n} + p_{n-1}(t)\frac{d^{n-1}y}{dt^{n-1}} + \cdots + p_1(t)\frac{dy}{dt} + p_0(t)y = g(t).$$

Theorem 3.1.1. If the functions $p_0(t), p_1(t), \ldots, p_{n-1}(t)$ and $g(t)$ are continuous on the open interval $I$, then for any $t_0 \in I$ there exists exactly one solution to the initial value problem
$$\begin{cases}
L[y] = g(t), \quad t \in I,\\
y(t_0) = y_0,\ y'(t_0) = y_0',\ \ldots,\ y^{(n-1)}(t_0) = y_0^{(n-1)}.
\end{cases}$$

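Let $y_1, y_2, \ldots, y_n$ be solutions to the homogeneous equation
$$L[y] = 0.$$
Similar to second order equations, we define the Wronskian as
$$W(y_1, y_2, \ldots, y_n) = \begin{vmatrix}
y_1 & y_2 & \cdots & y_n\\
y_1' & y_2' & \cdots & y_n'\\
\vdots & \vdots & \ddots & \vdots\\
y_1^{(n-1)} & y_2^{(n-1)} & \cdots & y_n^{(n-1)}
\end{vmatrix}.$$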

Theorem 3.1.2. Suppose $y_1, y_2, \ldots, y_n$ are solutions to the homogeneous equation $L[y] = 0$ such that $W(t_0) \ne 0$ for some $t_0 \in I$. Then every solution to the equation can be written in the form
$$y(t) = c_1y_1(t) + c_2y_2(t) + \cdots + c_ny_n(t).$$

Theorem 3.1.3 (Abel's Theorem). Suppose $y_1, y_2, \ldots, y_n$ are solutions of a linear differential equation
$$L[y] = \frac{d^ny}{dt^n} + p_{n-1}(t)\frac{d^{n-1}y}{dt^{n-1}} + \cdots + p_1(t)\frac{dy}{dt} + p_0(t)y = 0 \quad \text{on } I.$$
Then the Wronskian $W(y_1, y_2, \ldots, y_n)$ satisfies
$$W(y_1, y_2, \ldots, y_n)(t) = c\exp\left(-\int p_{n-1}(t)\,dt\right)$$
for some constant $c$.

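Proof. It suffices to prove that $W(t)$ satisfies the first order differential equation
$$W'(t) + p_{n-1}(t)W(t) = 0.$$
To this end, write
$$\mathbf{y} = (y_1, y_2, \ldots, y_n);$$
then
$$W = \begin{vmatrix} \mathbf{y} \\ \mathbf{y}' \\ \vdots \\ \mathbf{y}^{(n-1)} \end{vmatrix}.$$
Then
$$W'(t) = \frac{d}{dt}\begin{vmatrix} \mathbf{y} \\ \mathbf{y}' \\ \vdots \\ \mathbf{y}^{(n-1)} \end{vmatrix}
= \begin{vmatrix} \mathbf{y}' \\ \mathbf{y}' \\ \vdots \\ \mathbf{y}^{(n-1)} \end{vmatrix}
+ \begin{vmatrix} \mathbf{y} \\ \mathbf{y}'' \\ \vdots \\ \mathbf{y}^{(n-1)} \end{vmatrix}
+ \cdots
+ \begin{vmatrix} \mathbf{y} \\ \mathbf{y}' \\ \vdots \\ \mathbf{y}^{(n-2)} \\ \mathbf{y}^{(n)} \end{vmatrix},$$
where the $k$th determinant is obtained by differentiating the $k$th row. In each of the first $n - 1$ determinants the differentiated row coincides with the row below it, so these determinants vanish. In the last one we substitute $\mathbf{y}^{(n)} = -(p_{n-1}\mathbf{y}^{(n-1)} + p_{n-2}\mathbf{y}^{(n-2)} + \cdots + p_1\mathbf{y}' + p_0\mathbf{y})$, which holds because each $y_i$ solves $L[y] = 0$; the terms $p_j\mathbf{y}^{(j)}$ with $j \le n - 2$ are multiples of other rows and do not change the determinant. Hence
$$W'(t) = \begin{vmatrix} \mathbf{y} \\ \mathbf{y}' \\ \vdots \\ \mathbf{y}^{(n-2)} \\ -(p_{n-1}\mathbf{y}^{(n-1)} + p_{n-2}\mathbf{y}^{(n-2)} + \cdots + p_1\mathbf{y}' + p_0\mathbf{y}) \end{vmatrix}
= -p_{n-1}\begin{vmatrix} \mathbf{y} \\ \mathbf{y}' \\ \vdots \\ \mathbf{y}^{(n-1)} \end{vmatrix}
= -p_{n-1}W,$$
as required.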
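Abel's theorem can be observed numerically for constant-coefficient equations. Assuming a monic characteristic polynomial $(r - r_1)\cdots(r - r_n)$ with distinct roots, the functions $e^{r_it}$ are solutions and $p_{n-1} = -(r_1 + \cdots + r_n)$, so the theorem predicts $W(t) = W(0)e^{(r_1 + \cdots + r_n)t}$. The sketch below (helper names ours) evaluates the Wronskian as a determinant by Gaussian elimination; the roots used are those of Example 3.2.1 below, for which $p_3 = 1$ and hence $W(t) = W(0)e^{-t}$.

```python
import math

def det(M):
    """Determinant via Gaussian elimination with partial pivoting."""
    M = [row[:] for row in M]
    n = len(M)
    result = 1.0
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[piv][col] == 0:
            return 0.0
        if piv != col:
            M[col], M[piv] = M[piv], M[col]
            result = -result
        result *= M[col][col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n):
                M[r][c] -= factor * M[col][c]
    return result

def wronskian_of_exponentials(roots, t):
    """W(e^{r1 t}, ..., e^{rn t}) at t; row j holds the j-th derivatives r^j e^{rt}."""
    n = len(roots)
    return det([[r ** j * math.exp(r * t) for r in roots] for j in range(n)])

roots = [-3.0, -1.0, 1.0, 2.0]
W0 = wronskian_of_exponentials(roots, 0.0)
for t in (0.5, 1.0, 2.0):
    ratio = wronskian_of_exponentials(roots, t) / W0
    print(t, ratio, math.exp(sum(roots) * t))   # Abel: the last two columns agree
```

At $t = 0$ the determinant is a Vandermonde determinant, here $\prod_{i<j}(r_j - r_i) = 240$.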

A set of solutions satisfying the condition in Theorem 3.1.2, namely $W(t_0) \ne 0$ for some $t_0 \in I$, is called a fundamental set of solutions.

A set of functions $f_1, f_2, \ldots, f_n$ is said to be linearly independent on $I$ if there do not exist constants $k_1, k_2, \ldots, k_n$, not all zero, such that
$$k_1f_1(t) + k_2f_2(t) + \cdots + k_nf_n(t) = 0$$
for all $t \in I$. A set of solutions $y_1, y_2, \ldots, y_n$ of the homogeneous equation $L[y] = 0$ forms a fundamental set if and only if they are linearly independent.

Let $Y(t)$ be a particular solution of the nonhomogeneous equation
$$L[y] = g.$$
Then every solution of the equation can be written in the form
$$y(t) = c_1y_1(t) + c_2y_2(t) + \cdots + c_ny_n(t) + Y(t),$$
where $y_1, y_2, \ldots, y_n$ is a fundamental set of solutions of the homogeneous equation $L[y] = 0$.


3.2 Homogeneous equations with constant coefficients

Consider the $n$th order linear homogeneous equation
$$L[y] = a_n\frac{d^ny}{dt^n} + \cdots + a_1\frac{dy}{dt} + a_0y = 0,$$
where $a_0, a_1, \ldots, a_n$ are real constants. The equation
$$Z(r) = a_nr^n + \cdots + a_1r + a_0 = 0$$
is called the characteristic equation of the differential equation.

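If $\lambda$ is a real root of the characteristic equation with multiplicity $m$, then
$$e^{\lambda t},\ te^{\lambda t},\ \ldots,\ t^{m-1}e^{\lambda t}$$
are solutions to the equation.

If $\pm i\mu$ are purely imaginary roots of the characteristic equation, each with multiplicity $m$, then
$$\cos\mu t,\ t\cos\mu t,\ \ldots,\ t^{m-1}\cos\mu t \quad \text{and} \quad \sin\mu t,\ t\sin\mu t,\ \ldots,\ t^{m-1}\sin\mu t$$
are solutions to the equation.

If $\lambda \pm i\mu$ are complex roots of the characteristic equation, each with multiplicity $m$, then
$$e^{\lambda t}\cos\mu t,\ te^{\lambda t}\cos\mu t,\ \ldots,\ t^{m-1}e^{\lambda t}\cos\mu t$$
and
$$e^{\lambda t}\sin\mu t,\ te^{\lambda t}\sin\mu t,\ \ldots,\ t^{m-1}e^{\lambda t}\sin\mu t$$
are solutions to the equation.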

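Solutions of $L[y] = 0$

$$\begin{array}{ll}
\text{Root with multiplicity } m & \text{Solutions} \\ \hline
\text{Real number } \lambda & e^{\lambda t},\ te^{\lambda t},\ \ldots,\ t^{m-1}e^{\lambda t} \\
\text{Purely imaginary numbers } \pm i\mu & \cos\mu t,\ t\cos\mu t,\ \ldots,\ t^{m-1}\cos\mu t, \\
 & \sin\mu t,\ t\sin\mu t,\ \ldots,\ t^{m-1}\sin\mu t \\
\text{Complex numbers } \lambda \pm i\mu & e^{\lambda t}\cos\mu t,\ te^{\lambda t}\cos\mu t,\ \ldots,\ t^{m-1}e^{\lambda t}\cos\mu t, \\
 & e^{\lambda t}\sin\mu t,\ te^{\lambda t}\sin\mu t,\ \ldots,\ t^{m-1}e^{\lambda t}\sin\mu t
\end{array}$$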

Note that by the fundamental theorem of algebra, there are exactly $n$ functions of the above forms. It can be proved that the Wronskian of these functions is not identically zero. Thus they form a fundamental set of solutions of the homogeneous equation $L[y] = 0$.

Example 3.2.1. Find the general solution of
$$y^{(4)} + y''' - 7y'' - y' + 6y = 0.$$

Solution: The roots of the characteristic equation
$$r^4 + r^3 - 7r^2 - r + 6 = 0$$
are
$$r = -3, -1, 1, 2.$$
Therefore the general solution is
$$y(t) = c_1e^{-3t} + c_2e^{-t} + c_3e^{t} + c_4e^{2t}.$$
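For characteristic polynomials whose roots cannot be guessed, the roots can be approximated numerically. The sketch below uses the Durand-Kerner (Weierstrass) iteration, a standard root-finding method that is not part of these notes; it assumes the roots are distinct (repeated roots slow it down considerably), and the starting guesses, tolerance and iteration cap are conventional choices of ours.

```python
def durand_kerner(coeffs, iters=1000, tol=1e-13):
    """Approximate all complex roots of a polynomial.

    coeffs lists the coefficients from the highest degree down, e.g.
    [1, 1, -7, -1, 6] for r^4 + r^3 - 7r^2 - r + 6.
    """
    lead = coeffs[0]
    a = [complex(x) / lead for x in coeffs]       # make the polynomial monic
    n = len(a) - 1

    def p(z):                                     # Horner evaluation
        v = 0j
        for x in a:
            v = v * z + x
        return v

    z = [(0.4 + 0.9j) ** k for k in range(n)]     # distinct starting guesses
    for _ in range(iters):
        new = []
        for i in range(n):
            denom = 1 + 0j
            for j in range(n):
                if j != i:
                    denom *= z[i] - z[j]
            new.append(z[i] - p(z[i]) / denom)    # Weierstrass correction
        converged = max(abs(x - y) for x, y in zip(new, z)) < tol
        z = new
        if converged:
            break
    return z

roots = durand_kerner([1, 1, -7, -1, 6])
print(sorted(round(r.real, 6) for r in roots))    # expected close to [-3, -1, 1, 2]
```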

Example 3.2.2. Solve the initial value problem
$$\begin{cases} y^{(4)} - y = 0,\\ y(0) = 7/2,\ y'(0) = -4,\ y''(0) = 5/2,\ y'''(0) = -2. \end{cases}$$

Solution: The roots of the characteristic equation
$$r^4 - 1 = 0$$
are
$$r = \pm 1, \pm i.$$
Therefore the general solution is
$$y(t) = c_1e^{t} + c_2e^{-t} + c_3\cos t + c_4\sin t.$$
The initial conditions give
$$\begin{pmatrix} 1 & 1 & 1 & 0\\ 1 & -1 & 0 & 1\\ 1 & 1 & -1 & 0\\ 1 & -1 & 0 & -1 \end{pmatrix}\begin{pmatrix} c_1\\ c_2\\ c_3\\ c_4 \end{pmatrix} = \begin{pmatrix} 7/2\\ -4\\ 5/2\\ -2 \end{pmatrix}.$$
Thus
$$\begin{pmatrix} c_1\\ c_2\\ c_3\\ c_4 \end{pmatrix} = \begin{pmatrix} 0\\ 3\\ 1/2\\ -1 \end{pmatrix},$$
and the solution is
$$y(t) = 3e^{-t} + \frac{1}{2}\cos t - \sin t.$$
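The $4 \times 4$ system for the constants can be solved exactly with rational arithmetic, which avoids rounding in the fractions $7/2$ and $5/2$. A minimal sketch, assuming the matrix is square and invertible; `solve_exact` is a hypothetical helper implementing ordinary Gauss-Jordan elimination with Python's `fractions.Fraction`.

```python
from fractions import Fraction

def solve_exact(M, rhs):
    """Solve M c = rhs exactly by Gauss-Jordan elimination over the rationals.
    Assumes M is square and invertible."""
    n = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(v)] for row, v in zip(M, rhs)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)   # nonzero pivot
        aug[col], aug[piv] = aug[piv], aug[col]
        pivot = aug[col][col]
        aug[col] = [x / pivot for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]

# Rows: y(0), y'(0), y''(0), y'''(0) for y = c1 e^t + c2 e^-t + c3 cos t + c4 sin t
M = [[1, 1, 1, 0],
     [1, -1, 0, 1],
     [1, 1, -1, 0],
     [1, -1, 0, -1]]
rhs = [Fraction(7, 2), -4, Fraction(5, 2), -2]
print(solve_exact(M, rhs))   # [Fraction(0, 1), Fraction(3, 1), Fraction(1, 2), Fraction(-1, 1)]
```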

Example 3.2.3. Find the general solution of
$$y^{(4)} + 2y'' + y = 0.$$

Solution: The characteristic equation is
$$r^4 + 2r^2 + 1 = 0$$
and its roots are
$$r = i, i, -i, -i.$$
Thus the general solution is
$$y(t) = c_1\cos t + c_2\sin t + c_3t\cos t + c_4t\sin t.$$


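Example 3.2.4. Find the general solution of
$$y^{(4)} + y = 0.$$

Solution: The characteristic equation is
$$r^4 + 1 = 0$$
and its roots are
$$\begin{aligned}
r &= e^{\frac{(2k+1)\pi}{4}i}, \quad k = 0, 1, 2, 3\\
&= \cos\frac{(2k+1)\pi}{4} + i\sin\frac{(2k+1)\pi}{4}, \quad k = 0, 1, 2, 3\\
&= \pm\frac{\sqrt{2}}{2} \pm \frac{\sqrt{2}}{2}i.
\end{aligned}$$
Thus the general solution is
$$y(t) = c_1e^{\frac{\sqrt{2}}{2}t}\cos\frac{\sqrt{2}}{2}t + c_2e^{\frac{\sqrt{2}}{2}t}\sin\frac{\sqrt{2}}{2}t + c_3e^{-\frac{\sqrt{2}}{2}t}\cos\frac{\sqrt{2}}{2}t + c_4e^{-\frac{\sqrt{2}}{2}t}\sin\frac{\sqrt{2}}{2}t.$$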

3.3 Method of undetermined coefficients

The main difference in using the method of undetermined coefficients for higher order equations stems from the fact that roots of the characteristic equation may have multiplicity greater than 2. Consequently, terms proposed for the nonhomogeneous part of the solution may need to be multiplied by higher powers of $t$ to make them different from terms in the solution of the corresponding homogeneous equation.

Example 3.3.1. Find the general solution of
$$y''' - 3y'' + 3y' - y = 2te^{t} - e^{t}.$$

Solution: The characteristic equation
$$r^3 - 3r^2 + 3r - 1 = 0$$
has a triple root $r = 1$. So the general solution of the corresponding homogeneous equation is
$$y_c(t) = c_1e^{t} + c_2te^{t} + c_3t^2e^{t}.$$
Since $r = 1$ is a root of multiplicity 3, a particular solution of the differential equation is of the form
$$Y(t) = At^4e^{t} + Bt^3e^{t}.$$
Substituting $Y(t)$ into the equation (here $L[e^{t}p(t)] = e^{t}p'''(t)$, because the characteristic polynomial is $(r - 1)^3$), we have
$$24Ate^{t} + 6Be^{t} = 2te^{t} - e^{t}.$$
Thus
$$A = \frac{1}{12}, \qquad B = -\frac{1}{6}.$$
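The substitution step can be checked exactly. For constant coefficients there is a standard identity, not stated in these notes, $L[e^{\alpha t}p(t)] = e^{\alpha t}\sum_{k}\frac{Z^{(k)}(\alpha)}{k!}p^{(k)}(t)$, where $Z$ is the characteristic polynomial; when $\alpha$ is a root of multiplicity $m$, the terms with $k < m$ drop out, which is why the trial polynomial must be raised by $t^m$. The sketch below implements this identity with exact rational coefficients; the helper names are hypothetical and polynomials are stored lowest degree first.

```python
from fractions import Fraction
from math import comb

def poly_deriv(p):
    """Derivative of p(t) = sum p[i] t^i (coefficients lowest degree first)."""
    return [i * p[i] for i in range(1, len(p))] or [0]

def apply_L(a, alpha, p):
    """Return q with L[e^(alpha t) p(t)] = e^(alpha t) q(t), where
    L[y] = a[0] y + a[1] y' + ... + a[n] y^(n) has constant coefficients.

    Uses L[e^(alpha t) p] = e^(alpha t) * sum_k Z^(k)(alpha)/k! * p^(k),
    Z(r) = a[0] + a[1] r + ... + a[n] r^n being the characteristic polynomial.
    """
    n = len(a) - 1
    q = [Fraction(0)] * len(p)
    d = list(p)                                   # current derivative p^(k)
    for k in range(n + 1):
        # Taylor coefficient Z^(k)(alpha) / k!
        ck = sum(a[i] * comb(i, k) * alpha ** (i - k) for i in range(k, n + 1))
        for j, coef in enumerate(d):
            q[j] += ck * coef
        d = poly_deriv(d)
    while len(q) > 1 and q[-1] == 0:              # drop trailing zeros
        q.pop()
    return q

# Example 3.3.1: L[y] = y''' - 3y'' + 3y' - y and Y = e^t (t^4/12 - t^3/6)
a = [-1, 3, -3, 1]
p = [0, 0, 0, Fraction(-1, 6), Fraction(1, 12)]
print(apply_L(a, 1, p))   # [Fraction(-1, 1), Fraction(2, 1)], i.e. -1 + 2t
```

The same helper confirms Example 2.4.4: `apply_L([-2, 1, 1], 1, [0, 3])` gives `[9]`, i.e. $L[3te^{t}] = 9e^{t}$.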


Therefore the general solution is

y(t) = c

1

e

t

+c

2

te

t

+c

3

t