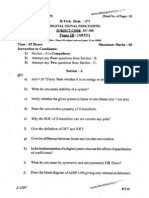

In Proc. 19th International Conference on Pattern Recognition (ICPR), Tampa, FL, December 2008

Scale-invariant Region-based Hierarchical Image Matching

Sinisa Todorovic and Narendra Ahuja

Beckman Institute, University of Illinois at Urbana-Champaign

{n-ahuja, sintod}@uiuc.edu

Abstract

This paper presents an approach to scale-invariant image matching. Given two images, the goal is to find correspondences between similar subimages, e.g., representing similar objects, even when the objects are captured under large variations in scale. As in previous work, similarity is defined in terms of geometric, photometric and structural properties of regions, and images are represented by segmentation trees that capture region properties and their recursive embedding. Matching two regions thus amounts to matching their corresponding subtrees. Scale invariance is aimed at overcoming two challenges in matching two images of similar objects. First, the absolute values of many object image properties may change with scale. Second, some of the finest details visible in the high-zoom image may not be visible in the coarser scale image. We normalize the region properties associated with one of the subtrees to the corresponding properties of the root of the other subtree. This makes the scales of objects represented by the two subtrees equal, and also decouples this scale from that of the entire scene. We also weight contributions of subregions to the total similarity of their parent regions by the relative area the subregions occupy within the parents. This reduces the penalty for not being able to match fine-resolution details present within only one of the two regions, since the penalty will be down-weighted by the relatively small area of these details. Our experiments demonstrate invariance of the proposed algorithm to large changes in scale.

1. Introduction

This paper is about matching real-world images to identify all pairs of similar subimages, e.g., for discovering and segmenting out any frequently occurring objects belonging to a visual category in a given set of arbitrary images [7]. The main contribution of this paper over previous work is the ability to perform the matching in a scale-invariant manner. Thus, we would like an object to be matched across images even if its size varies, e.g., when images have been acquired at varying distances from the object. Such variations in the scale of capturing an object result in two main differences in the object's images to which matching must be made invariant. First, the absolute values of many object image properties may change with scale. Second, some of the finest details visible in the high-zoom image may not be visible in the coarser scale image. This paper presents an approach in which we achieve both of the above invariances. We extend our earlier work in which we perform image matching invariant to object orientation, illumination, and, to a limited extent, object size. Before we outline our approach, we first very briefly review the large amount of related work in image matching based on region properties.

Finding region correspondences across images is one of the fundamental problems in computer vision. It is frequently encountered in many vision tasks, such as unsupervised learning of object models [7], and extraction of texture elements (or texels) from an image texture [2]. Most approaches use only geometric and photometric properties of regions. Others improve robustness by additionally accounting for structural properties of regions. They represent images as graphs which capture region structure, and formulate image matching as a graph matching problem [4, 9, 5, 3, 6, 8, 7]. Some graph-based methods allow many-to-many region correspondences to handle possible splits or merging of regions, caused, e.g., by differences in illumination across the images [6, 8].

We define the goal of matching as finding, for all regions in one image, all similar regions in the other image, so that the total number of matched regions and their associated similarities are maximized. The similarity measure is defined in terms of the geometric (e.g., shape, area), photometric (e.g., brightness), and structural properties (e.g., embedding of regions within larger ones). The image and object representations that we use here and in [7, 2, 8] have the following major features relevant to achieving the proposed scale invariance. Our image representation captures multiscale image segmentation via a segmentation tree. The segmentation tree captures the recursive embedding of smaller/finer regions inside bigger/more salient regions. This naturally facilitates scale-based analysis. To achieve invariance to object size, in the past we have expressed certain properties of a region, corresponding to a segmentation tree node, relative to the corresponding properties of the parent region in the segmentation tree. However, such a definition of the relative properties of a region retains the dependence on the properties of the root node, i.e., on the image size. In this paper, we make two main contributions. First, we eliminate the mentioned dependence by normalizing the region properties with respect to the candidate object pair instead of the images. Second, we build into the matching process insensitivity to those fine-level regions which are present in the high-zoom image but have been lost in the coarser scale image.

Overview of Proposed Approach: In this paper, we extend the basic image representation and many-to-many matching strategy presented in [8]. Our approach consists of four steps. (1) As in [8], images are represented by segmentation trees that capture the recursive embedding of regions obtained from a multiscale image segmentation. We associate a vector of absolute geometric and photometric properties of a corresponding region with each node in the tree, and thus depart from the specification presented in [8], where only relative region properties were used. In the sequel, we will refer to node and region, and descendant node and subregion, interchangeably. (2) Similar to the steps in [8] that allow many-to-many matching, the segmentation tree is modified by inserting new nodes, referred to as mergers. A merger represents the union of two sibling regions whose boundaries share a part. A merger instantiates the hypothesis that a border between two regions is incorrectly detected (due to, e.g., lighting changes), and therefore their union should be restored as a separate node.
Each merger is inserted between its source nodes and their parent, as a new parent of the sources, and as a new child of the sources' original parent. Then, to provide access to all descendants under a node during matching, and thus improve robustness, new edges are established between each node and all its descendants, transforming the tree into a DAG.

(3) Note that every node in the DAG defines a subgraph rooted at that node. Thus, matching any two nodes can be formulated as finding a bijection between their descendants in the corresponding subgraphs. This bijection can be characterized by a similarity measure, defined in terms of region properties. Since our goal is to identify pairs of regions with similar intrinsic and structural properties, the bijection of their subregions needs to preserve the original node connectivity and maximize the associated similarity. Formally, two nodes are matched by finding the maximum-similarity subgraph isomorphism between their respective subgraphs. In this step of our approach, we modify the algorithm of [8] in two critical ways, mentioned at the beginning of this section and aimed at achieving scale invariance. First, when matching two regions, i.e., two subgraphs, we make the assumption that they represent similar object occurrences which should be matched, and normalize the absolute region properties of one of the subgraphs to the corresponding properties of the other. This modification addresses the problem of object properties being measured relative to the entire image, as is the case in [8]. Second, when finding the similarity of two regions, we weight the contributions of their subregions to the similarity by the area the subregions occupy within the regions. This modification helps eliminate the direct dependency of the similarity of two regions on the total number of their subregions present in the image. In turn, this helps achieve the second desirable scale property mentioned earlier in this section, since the penalty for not being able to match fine-resolution details present within only one of the two regions will be down-weighted by the rather small (relative) area of these details.

(4) The subgraph isomorphism of a visited node pair is computed as a maximum weighted clique of the association graph constructed from the descendants.

The proposed approach is validated on real images captured under large scale variations. The following sections describe the details of our algorithms.
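Step (4) can be illustrated with a small sketch. It is a toy under stated assumptions, not the authors' implementation: it builds an association graph over candidate descendant pairs and runs discrete-time replicator dynamics (the solver family that Sec. 3 cites from [4]) on the unweighted adjacency matrix with a 1/2 diagonal regularization, whereas the paper searches for a maximum weighted clique. The function names `association_graph` and `replicator_clique`, the uniform starting point, the iteration count, and the support threshold are all hypothetical choices.

```python
def association_graph(pairs, desc1, desc2):
    """Adjacency matrix over candidate pairs (u, u2).

    Two pairs are compatible when they use distinct nodes on both sides and
    agree on the ancestor/descendant relation, so that a clique corresponds
    to a one-to-one, connectivity-preserving mapping.
    """
    n = len(pairs)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            (u, u2), (w, w2) = pairs[i], pairs[j]
            if u == w or u2 == w2:
                continue  # would violate the one-to-one constraint
            same_down = (w in desc1[u]) == (w2 in desc2[u2])
            same_up = (u in desc1[w]) == (u2 in desc2[w2])
            if same_down and same_up:
                adj[i][j] = adj[j][i] = 1.0
    return adj


def replicator_clique(adj, iters=500, tol=1e-3):
    """Approximate a maximal clique with replicator dynamics.

    Assumption: unweighted variant with payoff matrix A + I/2; the paper
    uses a weighted clique formulation instead.
    """
    n = len(adj)
    pay = [[adj[i][j] + (0.5 if i == j else 0.0) for j in range(n)]
           for i in range(n)]
    x = [1.0 / n] * n  # start at the barycenter of the simplex
    for _ in range(iters):
        ax = [sum(pay[i][j] * x[j] for j in range(n)) for i in range(n)]
        avg = sum(x[i] * ax[i] for i in range(n))
        x = [x[i] * ax[i] / avg for i in range(n)]
    # Nodes that keep non-negligible mass form the selected clique.
    return {i for i in range(n) if x[i] > tol}


# Toy example: in both graphs, region "b" is embedded in region "a".
desc1 = {"a": {"b"}, "b": set()}
desc2 = {"a'": {"b'"}, "b'": set()}
pairs = [(u, u2) for u in desc1 for u2 in desc2]
clique = replicator_clique(association_graph(pairs, desc1, desc2))
matches = [pairs[i] for i in sorted(clique)]
```

In this toy case the only compatible pairs are those that map parent to parent and child to child, so the dynamics concentrate on that two-node clique. Replicator dynamics only guarantee a local solution in general, which is one reason to treat the sketch as illustrative.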

2. Image Representation

This section presents steps (1) and (2) of our approach. An image is represented by the segmentation tree, $T = (V, E, \psi)$. Nodes $v \in V$ represent regions obtained from the multiscale segmentation algorithm presented in [1]. This algorithm partitions an image into homogeneous regions, so that changes in pixel intensity within a region are smaller than those across its boundary, regardless of the absolute degree of variation. Segmentation is performed regardless of the size, shape and location of regions, and their contrasts with the surround. The multiscale regions are then organized in tree $T$, where the root corresponds to the entire image, and each edge $e = (v, u) \in E$ represents the embedding of region $u$ within $v$, i.e., their parent-child relationship. Function $\psi : V \to [0, 1]^d$ associates a $d$-dimensional vector, $\psi_v$, with every $v \in V$, where $\psi_v$ consists of the following absolute region properties: 1) area, 2) outer-ring area not occupied by subregions, 3) mean brightness of the outer-ring area, 4) orientation of the principal axis with respect to the image's x-axis, 5) four standard affine-invariant shape moments, and 6) centroid location. The elements of $\psi_v$ are in $[0, 1]$.

As mentioned in Sec. 1, $T$ is modified by inserting and appropriately connecting mergers of contiguous, sibling regions, i.e., regions that share boundaries and are embedded within the same parent. The presence of mergers allows addressing the instability of low-level image segmentation under varying imaging parameters. Next, the augmented $T$ is transformed into a DAG by adding new edges between each node and all its descendants. This allows considering matches of all descendants under a node, even when its direct children cannot find a good match. We keep the same notation $T$ for the segmentation tree and its corresponding DAG.
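The two structural modifications described above can be sketched in a few lines. This is a minimal illustration under assumptions, not the authors' code: the tree is a plain dictionary from a node to its list of children, sibling contiguity is supplied as a precomputed set of pairs, and the names `add_mergers` and `descendant_closure` are hypothetical. For simplicity the sketch keeps the direct edges from the original parent to the source regions, which makes no difference once every node is linked to all of its descendants.

```python
from itertools import combinations


def add_mergers(children, adjacent_pairs):
    """Insert one merger node per pair of contiguous sibling regions.

    children: dict mapping a node to the list of its child nodes (a tree).
    adjacent_pairs: set of frozenset({a, b}) for siblings sharing a boundary
                    (assumed to be known from the segmentation).
    """
    out = {parent: list(kids) for parent, kids in children.items()}
    for parent, kids in children.items():
        for a, b in combinations(kids, 2):
            if frozenset((a, b)) in adjacent_pairs:
                merger = ("merger", a, b)
                out[parent].append(merger)  # new child of the original parent
                out[merger] = [a, b]        # new parent of the two sources
    return out


def descendant_closure(children, root):
    """Map every node reachable from root to the set of all its descendants.

    Linking each node to this set corresponds to adding edges between the
    node and all of its descendants, i.e., turning the tree into a DAG.
    """
    desc = {}

    def visit(node):
        if node not in desc:
            acc = set()
            for child in children.get(node, []):
                acc.add(child)
                acc |= visit(child)
            desc[node] = acc
        return desc[node]

    visit(root)
    return desc


# Toy example: regions A and B share a boundary inside the image.
tree = {"image": ["A", "B", "C"], "A": ["a1"]}
dag = descendant_closure(add_mergers(tree, {frozenset(("A", "B"))}), "image")
```

Here the merger of A and B gains access to `a1` as a descendant, so a match for the merged region can still use the finer structure found under A.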

3. Scale-invariant Graph Matching

Given two DAGs, $T$ and $T'$, we match all possible pairs of nodes $(v, v') \in V \times V'$, and estimate their similarity, $S_{vv'}$. Let $f_{vv'} = \{(u, u')\}$ denote a bijection between descendants $u$ of $v$ and $u'$ of $v'$. The goal of our matching algorithm is to find the subgraph isomorphism $f_{vv'}$ that preserves the original connectivity of the subgraphs rooted at $v$ and $v'$, and maximizes $S_{vv'}$. We define $S_{vv'}$ in terms of region properties of all descendants $u$ and $u'$ included in the subgraph isomorphism $f_{vv'}$. Invariance is achieved by normalizing properties of the subgraph rooted at $v'$ to those of $v$, so as to decouple the (relative) properties of their descendants from those of the entire images, and make them compatible by expressing them relative to the normalized common reference regions.

To render $S_{vv'}$ invariant to scale changes, we re-scale the area of $v'$ to be equal to the area of $v$, $\hat{\psi}_{v'}(\text{area}) = \alpha\, \psi_{v'}(\text{area}) = \psi_v(\text{area})$, and use the same scaling factor $\alpha$ to re-size all descendants $u'$ of $v'$, $\hat{\psi}_{u'}(\text{area}) = \alpha\, \psi_{u'}(\text{area})$. This decouples the scales of objects represented by $v$ and $v'$ from the scales of the scenes represented by the entire $T$ and $T'$. To also achieve in-plane rotation and translation invariance, we rotate and translate all descendants $u'$ under $v'$ by the unique delta angle and displacement which make the orientations and centroids of $v$ and $v'$ equal. Additionally, we make the mean brightness of $v$ and $v'$ equal, and then use the same delta-brightness factor to increase (or decrease) the brightness of descendants $u'$. This addresses local illumination changes, and decouples the brightness of objects represented by $v$ and $v'$ from the global brightness of the entire images represented by $T$ and $T'$. There is no need to compute new shape moments of the resized regions, since they are affine invariant. This normalization yields new properties $\hat{\psi}_{v'}$ and $\hat{\psi}_{u'}$ of region $v'$ and its descendants $u'$. While finding the subgraph isomorphism that maximizes $S_{vv'}$, the algorithm should match subregions of $v$ and $v'$ whose differences in region properties are small.
At the same time, it is desirable to find good matches between subregions that are perceptually salient. In general, regions that have large intensity contrasts with the surround and occupy large areas within their parent regions are perceptually salient. Thus, we define the saliency of region $u$, $\rho_u$, as a relative degree of difference between the brightness and area of $u$ from the corresponding properties of parent $v$,

$$\rho_u \triangleq \frac{|\psi_u(\text{brightness}) - \psi_v(\text{brightness})|}{255} + \frac{\psi_u(\text{area})}{\psi_v(\text{area})}.$$

Note that $\rho_u$ is invariant to changes in scale and illumination. Using the above definitions of subgraph isomorphism $f$, absolute region properties $\psi$, normalized properties $\hat{\psi}$, and region saliency $\rho$, we define the similarity of two regions $v$ and $v'$ as

$$S_{vv'} \triangleq \max_{f_{vv'}} \sum_{(u,u') \in f_{vv'}} \omega^{vv'}_{uu'} \left( \rho_u + \rho_{u'} - |\psi_u - \hat{\psi}_{u'}| \right), \tag{1}$$

where the weights $\omega^{vv'}_{uu'}$ make contributions of matches $(u, u')$ in (1) proportional to the relative areas they occupy within $v$ and $v'$. We define $\omega^{vv'}_{uu'}$ as the total outer-ring area of $u$ and $u'$ that is not occupied by the other descendants of $v$ and $v'$ included in $f_{vv'}$, expressed as a percentage of the total area of $v$ and $v'$,

$$\omega^{vv'}_{uu'} \triangleq \frac{\psi_u(\text{outer-ring area}) + \hat{\psi}_{u'}(\text{outer-ring area})}{\psi_v(\text{area}) + \hat{\psi}_{v'}(\text{area})}.$$

As mentioned in Sec. 1, these weights help diminish the scale effects, since the weights are small for tiny subregions most affected by scale changes. From (1), the algorithm seeks matches among the descendants of $v$ and $v'$ whose saliencies are high, and whose differences in normalized geometric and photometric properties are small. As shown in [8], the maximum-similarity subgraph isomorphism $f$ is equal to the maximum weighted clique of the association graph constructed from all descendant pairs $(u, u')$ of $v$ and $v'$, which can be found by using the replicator dynamics algorithm presented in [4].

The complexity of matching two regions, i.e., subgraphs each containing no more than $|V|$ descendants, is $O(|V|^2)$. Note that the branching factor of our image DAGs varies from node to node, in proportion with the spatial variation of image structure. The DAG is not complete and the total number of subgraphs is image dependent. In the experiments presented in the following section, we have observed that the number of subgraphs within an image DAG is typically $|V|/2$ (almost the same as in a complete binary tree containing $|V|$ nodes). Therefore, the complexity of matching all pairs of regions from two DAGs is $O(|V|^4)$. It takes about 1 min in MATLAB on a 2.8 GHz, 2 GB RAM PC.
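To make the normalization and the area-weighted similarity concrete, the sketch below scores a given set of matches between two regions. It is a simplified illustration and rests on several assumptions that are not in the paper: each region is a dictionary with only `area`, `outer` (outer-ring area) and `brightness`; orientation, centroid and shape moments are omitted; the brightness correction is taken to be an additive offset; and the property difference is taken as the mean of two absolute differences scaled to [0, 1]. The match set is supplied by the caller, so the maximization over bijections in the similarity definition is not performed here. All function names are hypothetical.

```python
def normalize(root, root_ref, descendants):
    """Rescale regions under `root` so that `root` matches `root_ref`.

    Assumption: areas are multiplied by one common factor and brightness is
    shifted by one common additive offset.
    """
    alpha = root_ref["area"] / root["area"]
    delta = root_ref["brightness"] - root["brightness"]

    def fix(region):
        return {"area": alpha * region["area"],
                "outer": alpha * region["outer"],
                "brightness": region["brightness"] + delta}

    return fix(root), {name: fix(r) for name, r in descendants.items()}


def saliency(region, root):
    """Brightness contrast plus relative area with respect to the root."""
    contrast = abs(region["brightness"] - root["brightness"]) / 255.0
    return contrast + region["area"] / root["area"]


def similarity(v, desc_v, v2, desc_v2, matches):
    """Area-weighted similarity for a fixed list of matches (u, u2)."""
    v2n, desc_v2n = normalize(v2, v, desc_v2)
    total = 0.0
    for u, u2 in matches:
        a, b = desc_v[u], desc_v2n[u2]
        weight = (a["outer"] + b["outer"]) / (v["area"] + v2n["area"])
        # Assumed distance: mean of scaled absolute differences.
        diff = (abs(a["area"] - b["area"]) / v["area"]
                + abs(a["brightness"] - b["brightness"]) / 255.0) / 2.0
        total += weight * (saliency(a, v) + saliency(b, v2n) - diff)
    return total


# Toy example: v2 is v seen at twice the zoom and 20 gray levels brighter.
v = {"area": 100.0, "outer": 49.0, "brightness": 100.0}
dv = {"big": {"area": 50.0, "outer": 50.0, "brightness": 150.0},
      "tiny": {"area": 1.0, "outer": 1.0, "brightness": 200.0}}
v2 = {"area": 400.0, "outer": 196.0, "brightness": 120.0}
dv2 = {"big": {"area": 200.0, "outer": 200.0, "brightness": 170.0},
       "tiny": {"area": 4.0, "outer": 4.0, "brightness": 220.0}}

s_self = similarity(v, dv, v, dv, [("big", "big"), ("tiny", "tiny")])
s_zoom = similarity(v, dv, v2, dv2, [("big", "big"), ("tiny", "tiny")])
s_coarse = similarity(v, dv, v2, dv2, [("big", "big")])  # tiny detail lost
```

With these toy numbers, matching against the zoomed copy gives the same score as matching the region against itself, and dropping the tiny detail lowers the score only slightly because its weight is proportional to its small outer-ring area.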

4. Results

The proposed scale-invariant matching (SIM) is evaluated on 435 faces from Caltech-101, and 80 images of UIUC 2.1D natural textures [2]. Caltech-101 images contain only a single, frontally viewed face, against cluttered background, under varying illumination. The texture images present additional challenges: texels are only statistically similar to each other, they may be partially occluded, and their placement is random. When matching Caltech-101 images, our algorithm is unaware that the images contain any common objects (i.e., faces). Thus, Caltech-101 faces are used for unsupervised image-to-image matching. The texture images are used to evaluate our algorithm in a different setting, that of model-to-image matching. Since the recurrence of texels in the image is guaranteed by the assumption that the image shows texture, we first learn the hierarchical texel model, as in [2], and then match the texel model with the segmentation tree of another image showing the same texture.

Figure 1. Unsupervised image-to-image matching. Panels: (a) input images whose tree representations are matched; (b) matching results in the subsampled images using [8]; (c) matching results in the subsampled images using SIM. (b)-(c) Sample segmentation of the image on the right in (a). Darker shades of gray indicate higher similarity of matched regions. SIM yields darker shades of faces in (c) than those in (b).

Figure 2. Model-to-image matching. Panels: (a) input images; (b) matching results in the subsampled image using our algorithm. (a) The texel model, learned using the image on the left, is matched with the DAG of the subsampled image on the right. (b) Darker shades of gray indicate higher similarity between model and image matches, i.e., higher confidence in texel extraction.

We report comparison only with the approach of [8], for brevity, because the algorithm of [8] has already been demonstrated to outperform the state-of-the-art matching methods on challenging benchmark datasets, like Caltech-101. To evaluate the two proposed modifications with respect to the approach of [8] (namely, normalization of absolute region properties, and similarity weighting), we additionally present results of SIM without one of these modifications, referred to as SIM$_N$ and SIM$_W$, respectively.

In experiments with Caltech-101 images, we randomly select a total of 10 images, where the size of one image is kept intact, while the remaining nine images are all equally subsampled. In each experiment, the amount of image-size reduction is increased in increments of 5%, up to 80% of the original size. Fig. 1 demonstrates a set of these experiments, where the unaltered image (top) is matched to rotated and subsampled images, which, for brevity, are all placed on a random background (bottom). As can be seen, SIM is invariant to changes in scale and illumination, in-plane rotation and translation. It improves the matching results of [8], since similarity values $S_{vv'}$ produced by SIM over regions representing common objects (i.e., faces) are larger than the similarities obtained using the approach of [8], while remaining low over background clutter. This, in turn, allows us to use SIM in solving higher-level vision problems, such as object detection. Specifically, Fig. 1 suggests that it is possible to select a suitable threshold of $S_{vv'}$ values to detect occurrences of common objects (i.e., faces) present at a wide range of scales.

For quantitative evaluation, we use the threshold that yields an equal recall-precision rate, where a match is declared a true positive if the ratio of intersection and union of the matched area and the ground-truth area is greater than 0.5. Detection error includes false positives and negatives. Fig. 3 compares face detection results, averaged over 10 experiments, obtained using SIM, SIM$_N$, SIM$_W$ and the approach of [8]. The plots show that SIM is relatively scale invariant up until the faces become one half of the original size, and that the slope of increase in error of SIM is less than that of [8]. Also, SIM$_N$ yields the worst detection results, since matching directly uses the absolute properties of regions without normalization.
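The true-positive criterion used in the quantitative evaluation is the familiar intersection-over-union test. A minimal sketch follows, assuming (for illustration only) that the matched region and the ground truth are both given as sets of pixel coordinates; the helper names `iou`, `is_true_positive` and `rect` are hypothetical.

```python
def iou(region_a, region_b):
    """Intersection over union of two regions given as sets of (x, y) pixels."""
    union = region_a | region_b
    if not union:
        return 0.0
    return len(region_a & region_b) / len(union)


def is_true_positive(matched, truth, threshold=0.5):
    """A match counts as a true positive if its IoU exceeds the threshold."""
    return iou(matched, truth) > threshold


def rect(x0, y0, width, height):
    """Pixel set of an axis-aligned rectangle (helper for the example)."""
    return {(x, y) for x in range(x0, x0 + width)
            for y in range(y0, y0 + height)}


truth = rect(0, 0, 10, 10)
close_match = rect(2, 0, 10, 10)  # 80 shared pixels out of 120 in the union
loose_match = rect(5, 0, 10, 10)  # 50 shared pixels out of 150 in the union
```

Under this criterion `close_match` is accepted and `loose_match` is rejected, since their overlap ratios fall on opposite sides of 0.5.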

Figure 3. Face and texel detection using SIM, SIM$_N$, SIM$_W$ and approach [8]. Panels: "Caltech101: Faces" (detection error vs. reduction of image size) and "Texture" (texel detection error vs. reduction of image size).

In experiments with UIUC image textures, the texel model is first learned from the unaltered image texture, as in [2]. The texel model is a hierarchical graph whose nodes encode the statistical properties of corresponding texel parts, and whose edges capture their spatial relations. The texel model is matched to the segmentation tree of another rotated and subsampled image showing the same texture. Fig. 2 shows improvements of our approach over that of [8] in that $S_{vv'}$ values produced by SIM are larger over regions occupied by the texels than $S_{vv'}$ values obtained using the approach of [8]. By using the same detection strategy as before, we threshold matches of the texel model with subsampled images, and thus achieve texel extraction over various scales. Fig. 3 presents the detection results averaged over the 80 UIUC image textures. As the degree of subsampling increases, SIM is nearly scale invariant until the size of the image texture is reduced to one half of the original, after which the slope of increase in texel-extraction error is less than that of [8]. Again, SIM$_N$ yields the worst texel detection.

5. Conclusion

We have presented an approach to region-based, hierarchical image matching that explicitly accounts for changes in region structure, including their disappearances, due to scale variations. Our experiments demonstrate that the proposed matching is indeed invariant to a wide range of scales, changes in illumination, in-plane rotation, and translation of similar objects. Experiments also suggest that our algorithm facilitates unsupervised detection of texels in a given image texture, or object-category occurrences in an arbitrary set of images.¹

References

[1] N. Ahuja. A transform for multiscale image segmentation by integrated edge and region detection. IEEE TPAMI, 18(12):1211-1235, 1996.

[2] N. Ahuja and S. Todorovic. Extracting texels in 2.1D natural textures. In ICCV, 2007.

[3] R. Glantz, M. Pelillo, and W. G. Kropatsch. Matching segmentation hierarchies. Int. J. Pattern Rec. Artificial Intelligence, 18(3):397-424, 2004.

[4] M. Pelillo, K. Siddiqi, and S. W. Zucker. Matching hierarchical structures using association graphs. IEEE TPAMI, 21(11):1105-1120, 1999.

[5] T. B. Sebastian, P. N. Klein, and B. B. Kimia. Recognition of shapes by editing their shock graphs. IEEE TPAMI, 26(5):550-571, 2004.

[6] A. Shokoufandeh, L. Bretzner, D. Macrini, M. Demirci, C. Jonsson, and S. Dickinson. The representation and matching of categorical shape. Computer Vision Image Understanding, 103(2):139-154, 2006.

[7] S. Todorovic and N. Ahuja. Extracting subimages of an unknown category from a set of images. In CVPR, volume 1, pages 927-934, 2006.

[8] S. Todorovic and N. Ahuja. Region-based hierarchical image matching. IJCV, 78(1):47-66, 2008.

[9] A. Torsello and E. R. Hancock. Computing approximate tree edit distance using relaxation labeling. Pattern Recogn. Lett., 24(8):1089-1097, 2003.

¹ Acknowledgement: The support of the National Science Foundation under grant NSF IIS 07-43014 is gratefully acknowledged.
