Bacterial Foraging Optimization Algorithm with Particle Swarm Optimization Strategy for Global Numerical Optimization

Hai Shen
Key Laboratory of Industrial Informatics, Shenyang Institute of Automation, Chinese Academy of Sciences, China
Graduate School of the Chinese Academy of Sciences, China
College of Physics Science and Technology, Shenyang Normal University, China
shenhai@sia.cn

Yunlong Zhu
Key Laboratory of Industrial Informatics, Shenyang Institute of Automation, Chinese Academy of Sciences, China
ylzhu@sia.cn

Xiaoming Zhou
Key Laboratory of Industrial Informatics, Shenyang Institute of Automation, Chinese Academy of Sciences, China
Graduate School of the Chinese Academy of Sciences, China
zhouxiaoming@sia.cn

Haifeng Guo
Key Laboratory of Industrial Informatics, Shenyang Institute of Automation, Chinese Academy of Sciences, China
guohf@sia.cn

Chunguang Chang
Key Laboratory of Industrial Informatics, Shenyang Institute of Automation, Chinese Academy of Sciences, China
changchunguang@sia.cn

ABSTRACT

In 2002, K. M. Passino proposed the Bacterial Foraging Optimization Algorithm (BFOA) for distributed optimization and control. One of the major driving forces of BFOA is the chemotactic movement of a virtual bacterium that models a trial solution of the optimization problem. However, during chemotaxis BFOA depends on random search directions, which may delay reaching the global solution. Recently, a new algorithm in which BFOA is oriented by PSO, termed BF-PSO, has shown superior performance in proportional-integral-derivative (PID) controller tuning. To examine the global search capability of BF-PSO, we evaluate the performance of BFOA and BF-PSO on 23 numerical benchmark functions. In BF-PSO, the search direction of each bacterium's tumble is oriented by the individual's best location and the global best location. The experimental results show that BF-PSO performs much better than BFOA on almost all test functions, which confirms that orienting BFOA by the PSO strategy improves its global optimization capability.

Corresponding author.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.

GEC'09, June 12-14, 2009, Shanghai, China.

Copyright 2009 ACM 978-1-60558-326-6/09/06 ...$5.00.

Categories and Subject Descriptors

I.2 [Computing Methodologies]: Artificial Intelligence; I.2.8 [Problem Solving, Control Methods, and Search]: [control theory]

General Terms

Algorithms, Performance.

Keywords

Bacterial Foraging, Particle Swarm Optimization, Numerical Optimization

1. INTRODUCTION

Optimization, which originated in applied mathematics, is the process of seeking the best possible solution to a given problem. As a powerful solution approach, it has been used in almost all fields of engineering, finance and management, as well as in social science. The general unconstrained numerical optimization problem on which those applied fields rely can be defined as:

$$\min f(x), \quad x \in \mathbb{R}^n,$$

where $f : \mathbb{R}^n \to \mathbb{R}$. The study of global optimization problems has become a topic of great interest.

Optimization techniques may follow different approaches. Recently, optimization techniques inspired by biological behavior, known as bio-mimetic optimization algorithms, have attracted increasing attention [1]. Several such algorithms have been proposed, including Genetic Algorithms (GAs), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO) and Glowworm Swarm Optimization (GSO). Bio-mimetic optimization algorithms are developed by simulating the evolutionary process and the behavior of living organisms. They are population-based (each member stands for a biological individual) and are initialized with a population of individuals. They use "fitness" as a direct measure in place of an individual's ability to adapt to its environment. The individuals are manipulated over many generations by mimicking the social behavior of organisms, in an effort to find the optima. In comparison with other optimization algorithms, bio-mimetic optimization algorithms have the following characteristics:

1) The individual components are distributed and autonomous, there is no central control, and the failure of one individual cannot prevent the whole problem from being solved. These characteristics give this kind of algorithm better robustness.

2) Individuals collaborate through indirect communication of information, which ensures the scalability of the algorithm.

3) They do not require the mathematical description of the problem to satisfy differentiability, convexity or other such conditions.

4) Because they involve only basic mathematical operations, they are simple and easy to implement on a computer.

These advantages have led to the wide use of bio-mimetic optimization algorithms within a very short period, in areas such as power systems [2][3], vehicle routing [4][5], mechanical design [6][7] and robotics [8].

Natural selection tends to eliminate animals with poor foraging strategies and to favor the propagation of the genes of animals with successful foraging strategies, since the latter are more likely to enjoy reproductive success. After many generations, poor foraging strategies are either eliminated or shaped into good ones. This has led researchers to use foraging as an optimization process. Based on research into the foraging behavior of E. coli bacteria, Prof. K. M. Passino proposed a new evolutionary computation technique known as the Bacterial Foraging Optimization Algorithm (BFOA) [9]. BFOA mimics the foraging behavior (methods for locating, handling, and ingesting food) of E. coli bacteria.

As an evolutionary computation technique, BFOA is also an iteration-based optimization tool. First, a set of random solutions is generated, in which each solution represents a bacterium. Next, the fitness of these solutions is measured; some excellent individuals are retained and the others are discarded according to their fitness. Finally, certain operations are applied to the retained individuals. This yields the solutions for the next iteration, and the next iteration begins. To date, BFOA has been successfully applied to real-world problems such as PID controller design [10][11], learning of artificial neural networks [12], power systems [13][14][15], numeral recognition [16], and channel equalization [17]. However, during chemotaxis BFOA depends on random search directions, which may delay reaching the global solution.

Particle Swarm Optimization (PSO) is also a bio-mimetic optimization technique. It was developed by Eberhart and Kennedy in 1995 and was inspired by the social behavior of bird flocking and fish schooling. The central idea of PSO is, at each time step, to change the velocity of each particle toward its own best location and toward the global best location among all individuals. The balance between global and local search throughout the run has made PSO a successful optimization algorithm. In the past several years, PSO has been successfully applied in many research and application areas [18], and it has been shown to obtain better results faster than other methods. Therefore, in 2008, W. Korani proposed an improved BFOA, namely BF-PSO [19]. The BF-PSO algorithm borrows the idea of velocity updating from PSO: the search directions specified by the tumble of the bacteria are oriented concurrently by the individual's best location and the global best location. The author then applied BF-PSO to PID parameter tuning for a set of test plants. Simulation results demonstrate that the proposed algorithm outperforms both conventional PSO and BFOA.

To investigate the performance of BF-PSO further, we ran both BFOA and BF-PSO on a suite of 23 functions [20]. The 23 benchmark functions used in our experiments have been widely employed by other researchers to evaluate bio-mimetic optimization algorithms, but they have rarely been used to test BFOA. Compared with BFOA on global optimization, the results show that BF-PSO finds more accurate results on almost all tested problems and performs noticeably better.

The rest of this paper is organized as follows. Section 2 describes BFOA and its implementation in detail. Section 3 provides an extensive literature survey on BFOA. Section 4 details the BF-PSO algorithm that is used for global optimization. Section 5 gives the 23 benchmark test functions used in our studies. Section 6 presents the experimental results and discussion for BFOA and BF-PSO. Finally, concluding remarks and future research directions are given in Section 7.

2. THE CLASSICAL BACTERIAL FORAGING OPTIMIZATION ALGORITHM

In the process of foraging, E. coli bacteria undergo four stages, namely chemotaxis, swarming, reproduction, and elimination and dispersal. In the search space, BFOA seeks the optimum value through the chemotaxis of the bacteria, realizes quorum sensing via an aggregation function between bacteria, satisfies the evolutionary rule of survival of the fittest through the reproduction operation, and uses the elimination-dispersal mechanism to avoid premature convergence.

2.1 Chemotaxis

The motion patterns that bacteria generate in the presence of chemical attractants and repellents are called chemotaxis. For E. coli, this process is simulated by two modes of movement: run and tumble. A bacterium alternates between these two modes of operation throughout its lifetime; it sometimes tumbles after a tumble and sometimes tumbles after a run. This alternation between the two modes moves the bacterium and enables it to "search" for nutrients. Let $\theta^i(j, k, l)$ represent the position of the $i$th member of the population of $S$ bacteria at the $j$th chemotactic step, $k$th reproduction step, and $l$th elimination-dispersal event. The movement of a bacterium may be written as:

$$\theta^i(j+1, k, l) = \theta^i(j, k, l) + C(i)\,\phi(j)$$

where $C(i)$ $(i = 1, 2, \ldots, S)$ is the size of the step taken in the random direction specified by the tumble, and $\phi(j)$ defines the random direction of movement after a tumble. $J(i, j, k, l)$ is the fitness, which also denotes the cost at the location $\theta^i(j, k, l) \in \mathbb{R}^n$ of the $i$th bacterium. If the cost $J(i, j+1, k, l)$ at $\theta^i(j+1, k, l)$ is better (lower) than the cost at $\theta^i(j, k, l)$, then another step of size $C(i)$ in the same direction is taken. Otherwise, the bacterium tumbles, taking another step of size $C(i)$ in a random direction $\phi(j)$ in order to seek a better nutrient environment.
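The tumble-then-swim rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the `max_swims` cap (playing the role of the swimming length), and the choice to draw the random direction uniformly from $[-1, 1]^n$ and scale it to unit length are all assumptions made for the example.

```python
import math
import random


def random_unit_direction(dim, rng):
    """Draw a random direction in [-1, 1]^dim and scale it to unit length."""
    while True:
        delta = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(d * d for d in delta))
        if norm > 0.0:
            return [d / norm for d in delta]


def chemotactic_step(theta, cost, step_size, max_swims, rng):
    """One tumble followed by up to max_swims swim steps.

    Returns the final position and its cost. The tumble step is always
    taken; further steps in the same direction are taken only while the
    cost keeps decreasing.
    """
    phi = random_unit_direction(len(theta), rng)  # tumble: pick a direction
    j_last = cost(theta)
    pos = [t + step_size * p for t, p in zip(theta, phi)]
    j_new = cost(pos)
    swims = 0
    while swims < max_swims and j_new < j_last:  # swim while improving
        swims += 1
        j_last = j_new
        pos = [t + step_size * p for t, p in zip(pos, phi)]
        j_new = cost(pos)
    return pos, j_new
```

Calling `chemotactic_step([1.0, 1.0], lambda x: sum(v * v for v in x), 0.1, 4, random.Random(0))` moves the point between one and five steps of length 0.1 along a single random direction.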

2.2 Swarming

An interesting group behavior has been observed for several motile species of bacteria, including E. coli and S. typhimurium. When a group of E. coli cells is placed in the center of a semisolid agar with a single nutrient chemo-effector, the cells move out from the center in a traveling ring by moving up the nutrient gradient created by the group's consumption of the nutrient. To achieve this, BFOA uses a function to model the cell-to-cell signaling via an attractant and a repellant. The mathematical representation of E. coli swarming is:

$$J_{cc}(\theta, P(j, k, l)) = \sum_{i=1}^{S} J_{cc}^{i}(\theta, \theta^i(j, k, l))$$

$$= \sum_{i=1}^{S} \left[ -d_{attract} \exp\left( -w_{attract} \sum_{m=1}^{p} (\theta_m - \theta_m^i)^2 \right) \right] + \sum_{i=1}^{S} \left[ h_{repellant} \exp\left( -w_{repellant} \sum_{m=1}^{p} (\theta_m - \theta_m^i)^2 \right) \right]$$

where $J_{cc}(\theta, P(j, k, l))$ is the cost function value to be added to the actual cost function, $S$ is the total number of bacteria, and $p$ is the number of parameters to be optimized, which are present in each bacterium. $d_{attract}$ is the depth of the attractant released by the cell and $w_{attract}$ is a measure of the width of the attractant signal. $h_{repellant} = d_{attract}$ is the height of the repellant effect and $w_{repellant}$ is a measure of the width of the repellant.
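The swarming term can be evaluated directly from its definition. The sketch below assumes positions are stored as plain Python lists; the function name and the numeric values in the usage note are illustrative assumptions and are not taken from the paper.

```python
import math


def cell_to_cell_cost(theta, population, d_attract, w_attract,
                      h_repellant, w_repellant):
    """Sum of the attractant and repellant contributions of every bacterium
    in `population`, evaluated at position `theta`."""
    total = 0.0
    for other in population:
        sq_dist = sum((a - b) ** 2 for a, b in zip(theta, other))
        total += -d_attract * math.exp(-w_attract * sq_dist)   # attraction
        total += h_repellant * math.exp(-w_repellant * sq_dist)  # repulsion
    return total
```

With `h_repellant` equal to `d_attract`, a bacterium contributes exactly zero at its own position. When `w_repellant` is larger than `w_attract`, the repellant decays faster with distance, so the contribution is negative (attractive) at intermediate distances, for example `cell_to_cell_cost([1.0, 0.0], [[0.0, 0.0]], 0.1, 0.2, 0.1, 10.0)`.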

2.3 Reproduction

According to the rules of evolution, individuals reproduce under appropriate conditions in a certain way. For bacteria, a reproduction step takes place after all chemotactic steps. The health of bacterium $i$ is:

$$J_{health}^{i} = \sum_{j=1}^{N_c+1} J(i, j, k, l)$$

The bacteria and their chemotactic parameters $C(i)$ are sorted in order of ascending cost (higher cost means lower health). To keep the population size constant, the bacteria with the highest $J_{health}$ values die. Each of the remaining bacteria splits into two bacteria at the same location.
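A minimal sketch of this reproduction step follows. It assumes an even population size and that exactly half of the bacteria die, which is the usual convention in Passino's BFOA; the text above only states that the bacteria with the highest accumulated cost die. The function name and data layout are illustrative.

```python
def reproduce(positions, health):
    """Keep the healthier half (lowest accumulated cost) and split each
    survivor into two bacteria at the same location.

    Assumes len(positions) is even so the population size is preserved.
    """
    order = sorted(range(len(positions)), key=lambda i: health[i])
    survivors = [positions[i] for i in order[: len(positions) // 2]]
    # Copy each survivor so the two offspring can move independently later.
    return [list(p) for p in survivors] + [list(p) for p in survivors]
```

For example, with positions `[[0], [1], [2], [3]]` and health values `[3.0, 0.5, 2.0, 1.0]`, the bacteria at `[1]` and `[3]` survive and are duplicated.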

2.4 Elimination-Dispersal

In the evolutionary process, elimination and dispersal events can occur in which the bacteria in a region are killed or a group is dispersed into a new part of the environment due to some influence. Such events may destroy chemotactic progress, but they may also assist chemotaxis, since dispersal can place bacteria near good food sources. From the evolutionary point of view, elimination and dispersal are used to guarantee the diversity of individuals and to strengthen the ability of global optimization. In BFOA, bacteria are eliminated with a probability of $P_{ed}$. To keep the number of bacteria in the population constant, whenever a bacterium is eliminated, a new one is simply dispersed to a random location in the optimization domain.
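The elimination-dispersal event can be sketched as follows, assuming a box-shaped optimization domain with the same lower and upper bound in every dimension. The function name, the `bounds` tuple and the use of a uniform distribution for the new location are assumptions made for illustration.

```python
import random


def eliminate_and_disperse(positions, bounds, p_ed, rng):
    """Relocate each bacterium to a uniformly random point with probability
    p_ed; otherwise keep it where it is. The population size is unchanged."""
    low, high = bounds
    result = []
    for pos in positions:
        if rng.random() < p_ed:
            result.append([rng.uniform(low, high) for _ in pos])
        else:
            result.append(list(pos))
    return result
```

Setting `p_ed` to 0 leaves the population untouched, while setting it to 1 re-initializes every bacterium, which illustrates the trade-off between preserving chemotactic progress and restoring diversity.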

3. RELATED WORKS ON BFOA

Current work related to BFOA can be divided into analysis of BFOA, applications, and improvements.

A. The analysis of BFOA

One major step in BFOA is chemotactic behavior. In 2008, S. Dasgupta, S. Das et al. analyzed the chemotaxis operation in BFOA [21][22]. Their analysis provides important insights into the search mechanism of BFOA. It points out that chemotaxis usually results in sustained oscillation, especially on flat fitness landscapes, when a bacterium cell is close to the optima. It is therefore necessary to bound the chemotactic step-height parameter so as to avoid limit cycles and guarantee convergence of the bacterial dynamics to an optimum. Two simple schemes for adapting the chemotactic step-height were subsequently proposed. In the same year, A. Abraham and A. Biswas provided a simple mathematical analysis of the reproduction step used in BFOA [23]. The analysis focuses on reproduction in a simple two-bacterium system working on a one-dimensional fitness landscape. It reveals that the reproduction event contributes to the quick convergence of the bacterial population near optima.

B. The application of BFOA

R. Majhi, G. Panda et al. developed a BFOA-based adaptive model for short-term and long-term forecasting of stock indices [24]. The weights of the combiner are updated using BFOA. The experimental results indicated that the proposed model offers lower computational complexity, better prediction accuracy and shorter training time than the MLP model. BFOA was also used to solve a highly non-linear and non-convex problem [15], where it achieved better loss minimization than the conventional IPSLP technique. S. Mishra and C. N. Bhende used a modified BFOA to optimize the coefficients of proportional-plus-integral controllers for active power filters [25]. L. Ulagammai et al. used BFOA to train a Wavelet-based Neural Network (WNN) and identify the inherent non-linear characteristics of power system loads [26].

In the area of PID applications, D. H. Kim and J. H. Cho presented an intelligent tuning method for PID controllers based on BFOA [27]. Simulation results show that the objective function can be minimized by gain selection for control and that a variety of gains can be obtained. B. Niu et al. designed a BF-PID controller using BFOA [28]. Compared with a GA-PID controller, BF-PID obtained a faster settling time, less or no overshoot and higher robustness. A multi-objective optimization method for the parameter tuning of fuzzy PID controllers has also been proposed using BFOA. In the proposed BFOA tuning method, a cost function is defined in a systematic way. The simulation results show that a fuzzy PID controller designed using the proposed BFOA has good performance [11].

C. Improved Algorithms

BFOA uses the function $J_{cc}$ to model the cell-to-cell signaling via an attractant and a repellant. However, its value does not depend on the nutrient concentration at position $\theta$. In 2002, Y. Liu and K. M. Passino used a new function to represent environment-dependent cell-to-cell signaling [29]:

$$J_{ar}(\theta) = \exp(M - J(\theta))\, J_{cc}(\theta)$$

where $M$ is a tunable parameter. For swarming, the quantity to be minimized is then $J(i, j, k, l) + J_{ar}(\theta^i(j, k, l))$. By performing social foraging with chemical-attractant-induced swarming, E. coli have a better chance of locating the optimal point in a noisy environment. Considering that BFOA lacks adaptation to the operating condition, S. Mishra presented a new algorithm, Fuzzy Bacterial Foraging (FBF) [30]. FBF replaces the constant run length in the chemotaxis step with a variable run length obtained through a Takagi-Sugeno type fuzzy inference scheme. The results show that FBF performs better than GA when applied to the harmonic estimation problem. W. J. Tang et al. proposed a dynamic bacterial foraging algorithm (DBFA), which aims at optimization in dynamic environments [31]. DBFA adopts a selection scheme that enables the bacteria to adapt flexibly to the changing environment. Compared with BFA, DBFA provides satisfactory performance and can react to most environmental changes in time.

Hybridization of BFOA with other nature-inspired meta-heuristics has remained an interesting problem for researchers. A. Biswas et al. proposed an improved variant of BFOA that combines a PSO-based mutation operator with bacterial chemotaxis [32]. The authors named the new algorithm Bacterial Swarm Optimization (BSO). BSO performs better than classical PSO, the original BFOA and MPSO-TVAC on several numerical benchmark functions. In 2006, D. H. Kim and J. H. Cho introduced the clonal selection of immune algorithms and fuzzy logic into bacterial foraging to enhance running speed and the search for the optimal condition [33]. In 2007, they also proposed a hybrid approach involving GA and BFOA, in which dynamic mutation and modified simple crossover are used in BFOA [34].

4. BF-PSO ALGORITHM

In 2008, W. Korani proposed an improved BFOA, namely BF-PSO. BF-PSO combines the BF and PSO algorithms. The aim is to make use of the ability of PSO to exchange social information and the ability of BF to find new solutions through elimination and dispersal. In BFOA, a unit-length direction for the tumble is generated randomly, and this random direction may delay reaching the global solution. In BF-PSO, the unit-length direction of the tumble is instead decided by the global best position and the best position of each bacterium. During the chemotaxis loop, the tumble direction is updated by:

$$\phi(j+1) = w\,\phi(j) + C_1 R_1 (P_{lbest} - P_{current}) + C_2 R_2 (P_{gbest} - P_{current})$$

where $P_{lbest}$ is the best position of each bacterium and $P_{gbest}$ is the global best position. A brief pseudo-code of BF-PSO is given below:

[Step 1] Initialization: parameter setting.
  $p$: dimension of the search space.
  $S$: number of bacteria in the population.
  $N_c$: number of chemotactic steps.
  $N_s$: swimming length.
  $N_{re}$: number of reproduction steps.
  $N_{ed}$: number of elimination-dispersal events.
  $P_{ed}$: elimination-dispersal probability.
  $C(i)$ $(i = 1, 2, \ldots, S)$: size of the step taken in the random direction specified by the tumble.
  $P(j, k, l) = \{\theta^i(j, k, l) \mid i = 1, 2, \ldots, S\}$.
  Generate a random vector $\phi(j)$ whose elements lie in $[-1, 1]$.
  $C_1, C_2, R_1, R_2, w$: PSO parameters.
[Step 2] Elimination-dispersal loop: $l = l + 1$.
[Step 3] Reproduction loop: $k = k + 1$.
[Step 4] Chemotaxis loop: $j = j + 1$.
  [4.1] Take a chemotactic step for every bacterium $i$.
  [4.2] Compute the fitness function $J(i, j, k, l)$, then let $J_{last} = J(i, j, k, l)$.
  [4.3] Tumble: let $\phi(j+1) = w\,\phi(j) + C_1 R_1 (P_{lbest} - P_{current}) + C_2 R_2 (P_{gbest} - P_{current})$.
  [4.4] Move: let $\theta^i(j+1, k, l) = \theta^i(j, k, l) + C(i)\,\phi(j)$.
    Compute the fitness function $J(i, j+1, k, l)$.
    Then let $J(i, j+1, k, l) = J(i, j+1, k, l) + J_{cc}(\theta^i(j+1, k, l), P(j+1, k, l))$.
  [4.5] Swim: let $m = 0$;
    while ($m < N_s$)
      let $m = m + 1$;
      if $J(i, j+1, k, l) < J_{last}$
        let $J_{last} = J(i, j+1, k, l)$,
        let $\theta^i(j+1, k, l) = \theta^i(j+1, k, l) + C(i)\,\phi(j)$,
        compute the fitness function $J(i, j+1, k, l)$,
        let $J(i, j+1, k, l) = J(i, j+1, k, l) + J_{cc}(\theta^i(j+1, k, l), P(j+1, k, l))$.
      else let $m = N_s$.
  [4.6] Go to the next bacterium.
[Step 5] If ($j < N_c$), go to Step 4.
[Step 6] Reproduction: compute the health of bacterium $i$:
  $J_{health}^{i} = \sum_{j=1}^{N_c+1} J(i, j, k, l)$
  Sort the bacteria and chemotactic parameters $C(i)$ in order of ascending cost $J_{health}$. The bacteria with the highest $J_{health}$ values die; the remaining bacteria reproduce.
[Step 7] If ($k < N_{re}$), go to Step 3.
[Step 8] Elimination-dispersal: eliminate and disperse bacteria with probability $P_{ed}$.
[Step 9] If ($l < N_{ed}$), go to Step 2.
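The loop structure of the pseudo-code can be sketched as a compact, runnable Python function. This is a simplified illustration under several assumptions and is not the implementation used for the experiments: the swarming term $J_{cc}$ is omitted, the direction is scaled to unit length before each move, $R_1$ and $R_2$ are drawn as one scalar each per tumble, exactly half of the population dies at reproduction (so `S` must be even), and positions are not clamped to the bounds. All names are chosen for the example.

```python
import math
import random


def unit(v):
    """Scale v to unit length; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0.0 else list(v)


def bf_pso(cost, dim, bounds, S=10, Nc=20, Ns=4, Nre=2, Ned=1,
           p_ed=0.25, step=0.1, w=0.9, c1=2.0, c2=2.0, seed=0):
    """Minimise cost, starting in [low, high]^dim; returns (best_pos, best_cost)."""
    rng = random.Random(seed)
    low, high = bounds
    pos = [[rng.uniform(low, high) for _ in range(dim)] for _ in range(S)]
    phi = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(S)]
    fit = [cost(p) for p in pos]
    lbest = [list(p) for p in pos]            # best position of each bacterium
    lbest_fit = list(fit)
    g = min(range(S), key=fit.__getitem__)
    gbest, gbest_fit = list(pos[g]), fit[g]   # best position of the population

    def move(i):
        """Step bacterium i along its direction and update both memories."""
        nonlocal gbest, gbest_fit
        pos[i] = [x + step * d for x, d in zip(pos[i], unit(phi[i]))]
        fit[i] = cost(pos[i])
        if fit[i] < lbest_fit[i]:
            lbest[i], lbest_fit[i] = list(pos[i]), fit[i]
        if fit[i] < gbest_fit:
            gbest, gbest_fit = list(pos[i]), fit[i]

    for _ in range(Ned):                      # elimination-dispersal loop
        for _ in range(Nre):                  # reproduction loop
            health = [0.0] * S
            for _ in range(Nc):               # chemotaxis loop
                for i in range(S):
                    j_last = fit[i]
                    r1, r2 = rng.random(), rng.random()
                    # Step 4.3: PSO-guided tumble direction.
                    phi[i] = [w * d + c1 * r1 * (lb - x) + c2 * r2 * (gb - x)
                              for d, x, lb, gb
                              in zip(phi[i], pos[i], lbest[i], gbest)]
                    move(i)                   # Step 4.4
                    swims = 0
                    while swims < Ns and fit[i] < j_last:  # Step 4.5
                        swims += 1
                        j_last = fit[i]
                        move(i)
                    health[i] += fit[i]
            # Step 6: the healthier half splits in two, the rest die.
            keep = sorted(range(S), key=health.__getitem__)[: S // 2]
            keep = keep + keep
            pos = [list(pos[i]) for i in keep]
            phi = [list(phi[i]) for i in keep]
            fit = [fit[i] for i in keep]
            lbest = [list(lbest[i]) for i in keep]
            lbest_fit = [lbest_fit[i] for i in keep]
        # Step 8: relocate each bacterium with probability p_ed.
        for i in range(S):
            if rng.random() < p_ed:
                pos[i] = [rng.uniform(low, high) for _ in range(dim)]
                fit[i] = cost(pos[i])
                lbest[i], lbest_fit[i] = list(pos[i]), fit[i]
                if fit[i] < gbest_fit:
                    gbest, gbest_fit = list(pos[i]), fit[i]
    return gbest, gbest_fit
```

A call such as `bf_pso(lambda x: sum(v * v for v in x), 2, (-5.0, 5.0))` runs the sketch on a two-dimensional sphere function. Because the global best is only ever replaced by a better point, the returned cost never exceeds the best cost in the initial population.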

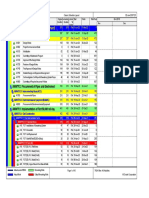

5. EXPERIMENTAL STUDIES

A. Test functions

To evaluate the performance of the BFOA and BF-PSO algorithms fully, without biasing the conclusions toward a few chosen problems, we employed 23 standard benchmark functions, which are given in Table 1. These functions can be divided into three categories. Functions $f_1$ to $f_{13}$ are high-dimensional problems. Among them, functions $f_8$ to $f_{13}$ are multimodal functions in which the number of local minima increases exponentially with the problem dimension; they appear to be the most difficult class of problems for many optimization algorithms. Functions $f_{14}$ to $f_{23}$ are low-dimensional functions which have only a few local minima.
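To make the categories concrete, three functions from the suite are sketched below using their standard textbook definitions: the sphere function ($f_1$, unimodal), the step function ($f_6$, unimodal with plateaus) and Ackley's function ($f_{10}$, multimodal with many local minima). The Python function names are illustrative, and the code is not taken from the authors' experiments.

```python
import math


def f1_sphere(x):
    """Sphere function: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def f6_step(x):
    """Step function: piecewise constant, minimum 0 for -0.5 <= x_i < 0.5."""
    return sum(math.floor(v + 0.5) ** 2 for v in x)


def f10_ackley(x):
    """Ackley's function: many local minima, global minimum 0 at the origin."""
    n = len(x)
    mean_sq = sum(v * v for v in x) / n
    mean_cos = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return (-20.0 * math.exp(-0.2 * math.sqrt(mean_sq))
            - math.exp(mean_cos) + 20.0 + math.e)
```

The step function shows why plateaus are hard for purely local moves: `f6_step([0.4, -0.4])` and `f6_step([0.0, 0.0])` are both 0, so small steps inside a plateau give no information about which direction improves the cost.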

B. Experimental setting

The parameter settings of the BFOA and BF-PSO algorithms are summarized as follows. Both use the same population size $S = 50$; the number of chemotactic steps $N_c = 50$; the number of reproduction steps $N_{re} = 2$; the number of elimination-dispersal events $N_{ed} = 1$; the probability of elimination-dispersal $P_{ed} = 0.25$; and the length of steps during runs $C(i) = 0.1$. The acceleration factors $c_1$ and $c_2$ were both 2.0, and the decaying inertia weight $w$ was 0.9. To make the comparison fair, the populations for all the considered algorithms were initialized using the same random seeds.
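For reproducibility, the settings listed above can be collected in a single immutable configuration object. The sketch below is only one possible layout: the class name and field names are assumptions, while the default values are the ones stated in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentSettings:
    """Parameter values reported for both BFOA and BF-PSO."""
    S: int = 50          # population size
    Nc: int = 50         # chemotactic steps
    Nre: int = 2         # reproduction steps
    Ned: int = 1         # elimination-dispersal events
    p_ed: float = 0.25   # elimination-dispersal probability
    step: float = 0.1    # step length C(i) during runs
    c1: float = 2.0      # PSO acceleration factor (BF-PSO only)
    c2: float = 2.0      # PSO acceleration factor (BF-PSO only)
    w: float = 0.9       # inertia weight (BF-PSO only)

    def __post_init__(self):
        # Basic sanity checks; these constraints are assumptions of the sketch.
        if not 0.0 <= self.p_ed <= 1.0:
            raise ValueError("p_ed must be a probability")
        if self.S <= 0 or self.Nc <= 0:
            raise ValueError("S and Nc must be positive")
```

Freezing the dataclass prevents a run from silently changing a parameter halfway through a comparison between the two algorithms.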

6. RESULT AND DISCUSSION

The average results of 30 independent runs are summarized in Table 2. Moreover, to give a more intuitive picture of the performance of BFOA and BF-PSO, the convergence results for selected benchmark problems over 30 runs are included in this paper. Figures 1, 2 and 3 show the convergence results for uni-modal functions ($f_1$ to $f_7$), multimodal functions with many local minima ($f_8$ to $f_{13}$) and multimodal functions with few local minima ($f_{14}$ to $f_{23}$), respectively.

For uni-modal functions $f_1$ to $f_7$, BF-PSO is able to obtain practically perfect optimization results, while BFOA has difficulty

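Table 1: The 23 benchmark functions, where $n$ is the dimension of the function, SD is the search domain, and $f_{\min}$ is the global minimum value of the function.

| Test function | $n$ | SD | $f_{\min}$ |
|---|---|---|---|
| $f_1(x) = \sum_{i=1}^{n} x_i^2$ | 30 | $[-100, 100]^n$ | $f_1(\vec{0}) = 0$ |
| $f_2(x) = \sum_{i=1}^{n} \lvert x_i \rvert + \prod_{i=1}^{n} \lvert x_i \rvert$ | 30 | $[-10, 10]^n$ | $f_2(\vec{0}) = 0$ |
| $f_3(x) = \sum_{i=1}^{n} \left( \sum_{j=1}^{i} x_j \right)^2$ | 30 | $[-100, 100]^n$ | $f_3(\vec{0}) = 0$ |
| $f_4(x) = \max_i \{ \lvert x_i \rvert,\ 1 \le i \le n \}$ | 30 | $[-100, 100]^n$ | $f_4(\vec{0}) = 0$ |
| $f_5(x) = \sum_{i=1}^{n-1} \left[ 100 (x_{i+1} - x_i)^2 + (x_i - 1)^2 \right]$ | 30 | $[-30, 30]^n$ | $f_5(\vec{1}) = 0$ |
| $f_6(x) = \sum_{i=1}^{n} \left( \lfloor x_i + 0.5 \rfloor \right)^2$ | 30 | $[-100, 100]^n$ | $f_6(\vec{0}) = 0$ |
| $f_7(x) = \sum_{i=1}^{n} i x_i^4 + \mathrm{random}[0, 1)$ | 30 | $[-1.28, 1.28]^n$ | $f_7(\vec{0}) = 0$ |
| $f_8(x) = \sum_{i=1}^{n} \left( -x_i \sin\left( \sqrt{\lvert x_i \rvert} \right) \right)$ | 30 | $[-500, 500]^n$ | $f_8(\overrightarrow{420.9687}) = -12569.5$ |
| $f_9(x) = \sum_{i=1}^{n} \left( x_i^2 - 10 \cos(2 \pi x_i) + 10 \right)^2$ | 30 | $[-5.12, 5.12]^n$ | $f_9(\vec{0}) = 0$ |
| $f_{10}(x) = -20 \exp\left( -0.2 \sqrt{\frac{1}{n} \sum_{i=1}^{n} x_i^2} \right) - \exp\left( \frac{1}{n} \sum_{i=1}^{n} \cos 2 \pi x_i \right) + 20 + e$ | 30 | $[-32, 32]^n$ | $f_{10}(\vec{0}) = 0$ |
| $f_{11}(x) = \frac{1}{4000} \sum_{i=1}^{30} (x_i - 100)^2 - \prod_{i=1}^{n} \cos\left( \frac{x_i - 100}{\sqrt{i}} \right) + 1$ | 30 | $[-600, 600]^n$ | $f_{11}(\vec{0}) = 0$ |
| $f_{12}(x) = \frac{\pi}{n} \left\{ 10 \sin^2(\pi y_1) + \sum_{i=1}^{n-1} (y_i - 1)^2 \left[ 1 + 10 \sin^2(\pi y_{i+1}) \right] + (y_n - 1)^2 \right\} + \sum_{i=1}^{30} u(x_i, 10, 100, 4)$ | 30 | $[-50, 50]^n$ | $f_{12}(\overrightarrow{-1}) = 0$ |
| $f_{13}(x) = 0.1 \left\{ \sin^2(3 \pi x_1) + \sum_{i=1}^{29} (x_i - 1)^2 \left[ 1 + \sin^2(3 \pi x_{i+1}) \right] + (x_n - 1)^2 \left[ 1 + \sin^2(2 \pi x_{30}) \right] \right\} + \sum_{i=1}^{30} u(x_i, 5, 100, 4)$ | 30 | $[-50, 50]^n$ | $f_{13}(\vec{1}) = 0$ |
| $f_{14}(x) = \left[ \frac{1}{500} + \sum_{j=1}^{25} \frac{1}{j + \sum_{i=1}^{2} (x_i - a_{ij})^6} \right]^{-1}$ | 2 | $[-65.536, 65.536]^n$ | $f_{14}(-32, -32) = 0$ |
| $f_{15}(x) = \sum_{i=1}^{11} \left[ a_i - \frac{x_1 (b_i^2 + b_i x_2)}{b_i^2 + b_i x_3 + x_4} \right]^2$ | 4 | $[-5, 5]^n$ | $f_{15}(0.1928, 0.1908, 0.1231, 0.1358) = 0.0003075$ |
| $f_{16}(x) = 4 x_1^2 - 2.1 x_1^4 + \frac{1}{3} x_1^6 + x_1 x_2 - 4 x_2^2 + 4 x_2^4$ | 2 | $[-5, 5]^n$ | $f_{16}(-0.08983, 0.7126) = f_{16}(0.08983, -0.7126) = -1.0316$ |
| $f_{17}(x) = \left( x_2 - \frac{5.1}{4 \pi^2} x_1^2 + \frac{5}{\pi} x_1 - 6 \right)^2 + 10 \left( 1 - \frac{1}{8 \pi} \right) \cos x_1 + 10$ | 2 | $[-5, 10] \times [0, 15]$ | $f_{17}(-3.142, 2.275) = f_{17}(3.142, 2.275) = f_{17}(9.425, 2.425) = 0.398$ |
| $f_{18}(x) = \left[ 1 + (x_1 + x_2 + 1)^2 (19 - 14 x_1 + 3 x_1^2 - 14 x_2 + 6 x_1 x_2 + 3 x_2^2) \right] \times \left[ 30 + (2 x_1 + 1 - 3 x_2)^2 (18 - 32 x_1 + 12 x_1^2 + 48 x_2 - 36 x_1 x_2 + 27 x_2^2) \right]$ | 2 | $[-2, 2]^n$ | $f_{18}(0, -1) = 3$ |
| $f_{19}(x) = -\sum_{i=1}^{4} \exp\left[ -\sum_{j=1}^{3} a_{ij} (x_j - p_{ij})^2 \right]$ | 3 | $[0, 1]^n$ | $f_{19}(0.114, 0.556, 0.852) = -3.86$ |
| $f_{20}(x) = -\sum_{i=1}^{4} \exp\left[ -\sum_{j=1}^{6} a_{ij} (x_j - p_{ij})^2 \right]$ | 6 | $[0, 1]^n$ | $f_{20}(0.201, 0.15, 0.477, 0.275, 0.311, 0.657) = -3.32$ |
| $f_{21}(x) = -\sum_{i=1}^{5} \left[ (x_i - a_i)(x_i - a_i)^T + c_i \right]^{-1}$ | 4 | $[0, 10]^n$ | $f_{21}(\vec{0}) = -10$ |
| $f_{22}(x) = -\sum_{i=1}^{7} \left[ (x_i - a_i)(x_i - a_i)^T + c_i \right]^{-1}$ | 4 | $[0, 10]^n$ | $f_{22}(\vec{0}) = -10$ |
| $f_{23}(x) = -\sum_{i=1}^{10} \left[ (x_i - a_i)(x_i - a_i)^T + c_i \right]^{-1}$ | 4 | $[0, 10]^n$ | $f_{23}(\vec{0}) = -10$ |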

Figure 1: Convergence results of BFOA and BF-PSO for uni-modal functions. Panels: (a) $f_1$, (b) $f_3$. Each panel plots best fitness (log scale) against generation (0 to 300) for BFOA and BF-PSO.

Figure 2: Convergence results of BFOA and BF-PSO for multimodal functions with many local minima. Panels: (a) $f_9$, (b) $f_{10}$. Each panel plots best fitness (log scale) against generation (0 to 300) for BFOA and BF-PSO.

Figure 3: Convergence results of BFOA and BF-PSO for multimodal functions with few local minima. Panels: (a) $f_{15}$, (b) $f_{22}$. Each panel plots best fitness against generation (0 to 300) for BFOA and BF-PSO.

with functions $f_5$ and $f_6$, and its accuracy on the remaining functions is also lower than that of BF-PSO. According to Figure 1, which we plotted to observe the evolutionary process, both BFOA and BF-PSO converge quickly at the beginning. But as they find solutions closer to the global optimum, BFOA appears to become trapped in a poor local optimum and is unable to escape from it, while BF-PSO continues to improve its solution steadily for a long time. The largest difference in performance between BFOA and BF-PSO occurs with function $f_6$, the step function, which is characterized by plateaus and discontinuity. BFOA performs poorly because the search direction after a tumble keeps it searching mainly in a relatively small local neighborhood, so it cannot move from one plateau to a lower one. BF-PSO, on the other hand, has a much higher probability of generating long jumps than BFOA, because its search direction is oriented concurrently by the individual's best location and the global best location, which enables BF-PSO to move from one plateau to a lower one with relative ease.

For the multimodal functions with many local minima, $f_8$ to $f_{13}$, BF-PSO generated significantly better results than BFOA. According to Figure 2, BFOA fell into a poor local optimum quite early, when it had only just begun to evolve. BF-PSO, however, converges quickly toward the optima within a relatively small number of generations, owing to its search direction after a tumble.

Functions $f_{14}$ to $f_{23}$ are low-dimensional multimodal functions with only a few local minima. For these problems, BF-PSO performs variably. On functions $f_{16}$ to $f_{20}$, the two algorithms yielded similar results, all of which approximate the global optimal solution. That is because these functions have very similar surface characteristics: the major regions of attraction are not small local optima, as in $f_{18}$ (Figure 4). On the Shekel family functions $f_{21}$ to $f_{23}$, the performance of BF-PSO is the worst among all the test functions. BFOA finds solutions closer to the global optimum, but BF-PSO does not (Figure 3(b)). The problems $f_{21}$ to $f_{23}$ are characterized by large flat regions with sudden "fox holes" of varying depth (Figure 5). The improved success rate of BFOA is due to its tendency to explore regions of interest and concentrate on the more promising ones at each iteration. For BFOA, at the beginning of the search there are a number of promising "fox holes". After a short period, a certain degree of "jump" occurs on the BFOA evolution curve, because BFOA can sometimes go a few generations without achieving a better solution. Such a situation can be diagnosed. Its search direction makes the "jump" very easy, and the region of attraction of the global minimum is the smallest, so finding the global minimum becomes very difficult for BFOA.

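Table 2: Comparison between BFOA and BF-PSO on benchmark functions $f_1$ to $f_{23}$. All results have been averaged over 30 runs. A $\pm$ in an exponent marks a sign that is not legible in the source.

| Function | BFOA | BF-PSO |
|---|---|---|
| $f_1$ | $7.5177 \times 10^{\pm 1}$ | $5.6348 \times 10^{-3}$ |
| $f_2$ | $3.9923$ | $2.6783 \times 10^{-1}$ |
| $f_3$ | $5.3897$ | $7.1086 \times 10^{-2}$ |
| $f_4$ | $1.3969$ | $4.9853 \times 10^{-2}$ |
| $f_5$ | $1.1445 \times 10^{2}$ | $2.7443 \times 10^{\pm 1}$ |
| $f_6$ | $1.4309 \times 10^{4}$ | $0$ |
| $f_7$ | $2.8296$ | $3.9644 \times 10^{-1}$ |
| $f_8$ | $-4632.6$ | $-6192$ |
| $f_9$ | $9.0829 \times 10^{2}$ | $5.2682 \times 10^{\pm 1}$ |
| $f_{10}$ | $2.0249 \times 10^{\pm 1}$ | $6.4936 \times 10^{-2}$ |
| $f_{11}$ | $4.5143 \times 10^{\pm 2}$ | $4.3437 \times 10^{-2}$ |
| $f_{12}$ | $1.3368 \times 10^{2}$ | $1.8109$ |
| $f_{13}$ | $2.6661$ | $2.2012 \times 10^{-1}$ |
| $f_{14}$ | $1.9920$ | $9.9800 \times 10^{-1}$ |
| $f_{15}$ | $1.3260 \times 10^{-1}$ | $1.4332 \times 10^{-3}$ |
| $f_{16}$ | $-9.8183 \times 10^{-1}$ | $-1.0118$ |
| $f_{17}$ | $0.4227$ | $0.4165$ |
| $f_{18}$ | $5.0307$ | $3.2271$ |
| $f_{19}$ | $-3.5766$ | $-3.6383$ |
| $f_{20}$ | $-2.4263$ | $-3.2726$ |
| $f_{21}$ | $-8.0725$ | $-5.0429$ |
| $f_{22}$ | $-8.1157$ | $-5.6969$ |
| $f_{23}$ | $-8.4042$ | $-6.1105$ |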

Figure 4: f18 (Goldstein-Price function). [Surface plot; axes x1, x2, and y (y scaled by 10^5).]

Figure 5: f23 (Shekel function), x3 = x1, x4 = x2. [Surface plot; axes x1, x2, and y.]

7. CONCLUSIONS AND FUTURE DIRECTIONS

In this paper, we gave a detailed introduction to the underlying ideas of BFOA, its basic algorithmic structure, some major variants proposed in the literature, and its applications to optimization problems. Because BFOA relies on random search directions during the chemotaxis of bacteria, which may delay reaching the global solution, we also introduced an improved BFOA, namely BF-PSO. To examine the performance of BF-PSO, BFOA and BF-PSO were investigated on 23 numerical benchmark functions. From the simulation results, we can see that orienting the search direction after the tumble by a PSO strategy greatly improved the optimization performance of BFOA. The correctness and practicability of BF-PSO were demonstrated. Therefore, BF-PSO has the potential to be useful for other practical optimization problems. Future research should focus on improving the convergence speed of BF-PSO. More experiments are also required to determine why and when BF-PSO fails on the Shekel family of functions.
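The idea of orienting the post-tumble search direction by a PSO strategy can be sketched as follows. This is a minimal illustration that assumes the usual PSO velocity rule is used to build the direction; the inertia weight `w`, the coefficients `c1` and `c2`, and all function names are hypothetical and are not taken from this paper.

```python
import numpy as np


def random_tumble_direction(dim, rng):
    """Plain BFOA tumble: a random unit vector."""
    delta = rng.uniform(-1.0, 1.0, size=dim)
    return delta / np.linalg.norm(delta)


def pso_tumble_direction(prev_dir, pos, pbest, gbest, rng,
                         w=0.9, c1=1.2, c2=0.5):
    """Tumble direction built from a PSO-style velocity update.

    prev_dir : previous direction of this bacterium
    pos      : current position of this bacterium
    pbest    : best position this bacterium has visited
    gbest    : best position found by the whole population
    w, c1, c2 are illustrative values (assumptions).
    """
    r1, r2 = rng.random(), rng.random()
    v = w * prev_dir + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
    norm = np.linalg.norm(v)
    if norm == 0.0:
        # No information to follow: fall back to the random tumble.
        return random_tumble_direction(pos.size, rng)
    return v / norm


def chemotactic_step(pos, direction, step_size):
    """Move a bacterium by one step of fixed length along a unit direction."""
    return pos + step_size * direction


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pos = np.array([0.0, 0.0])
    best = np.array([3.0, 4.0])
    d = pso_tumble_direction(np.zeros(2), pos, best, best, rng)
    print(chemotactic_step(pos, d, step_size=1.0))  # one unit step toward best
```

With a random tumble the step is equally likely to point anywhere, whereas the PSO-style direction is pulled toward the personal and global best positions. That is consistent with the faster convergence reported above, and it also suggests why narrow basins such as the Shekel fox holes may be skipped once the population best settles in the wrong hole.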

8. ACKNOWLEDGMENTS

This work was supported by the National Key of Technology R&D Program of China (Grant No. 2007AA04Z189), the High-Tech Research and Development Program of China (Grant No. 20060104A1118) and the Science and Technology Supporting 394 Program of China (Grant No. 2006BAH02A09).

