Separation of Voiced and Unvoiced using Zero crossing rate and Energy of the Speech Signal

Bachu R.G., Kopparthi S., Adapa B., Barkana B.D. Electrical Engineering Department School of Engineering, University of Bridgeport

Abstract

In speech analysis, the voiced/unvoiced decision is usually made as part of extracting information from the speech signal. In this paper, we apply two measures to separate the voiced and unvoiced parts of a speech signal: zero-crossing rate (ZCR) and energy. We evaluate them by dividing the speech sample into segments and computing the zero-crossing rate and energy of each segment. The results suggest that the zero-crossing rate is low for voiced parts and high for unvoiced parts, whereas the energy is high for voiced parts and low for unvoiced parts. These two measures are therefore effective for separating voiced and unvoiced speech.

1. Introduction

Speech can be divided into numerous voiced and unvoiced regions. Classifying a speech signal into voiced and unvoiced regions provides a preliminary acoustic segmentation for speech processing applications such as speech synthesis, speech enhancement, and speech recognition.

Voiced speech consists of more or less constant-frequency tones of some duration, made when vowels are spoken. It is produced when periodic pulses of air generated by the vibrating glottis resonate through the vocal tract, at frequencies dependent on the vocal tract shape. About two-thirds of speech is voiced, and this type of speech is also the most important for intelligibility. Unvoiced speech consists of non-periodic, random-like sounds caused by air passing through a narrow constriction of the vocal tract, as when consonants are spoken. Voiced speech, because of its periodic nature, can be identified and extracted [1].

In recent years, considerable effort has been spent by researchers on the problem of classifying speech into voiced/unvoiced parts [2-8].
Pattern recognition approaches and both statistical and non-statistical techniques have been applied to decide whether a given segment of a speech signal should be classified as voiced or unvoiced [2, 3, 5, 7]. Qi and Hunt classified voiced and unvoiced speech using non-parametric methods based on a multi-layer feed-forward network [4]. Acoustical features and pattern recognition techniques were used to separate speech segments into voiced/unvoiced [8]. The method used in this work is a simple and fast approach that may address the problem of classifying speech into voiced/unvoiced using the zero-crossing rate and energy of the speech signal. The methods used in this study are presented in Section 2, and the results are given in Section 3.

2. Method

In our design, we combined zero-crossing rate and energy calculations. Zero-crossing rate is an important parameter for voiced/unvoiced classification. It is also often used as part of the front-end processing in automatic speech recognition systems. The zero-crossing count is an indicator of the frequency at which the energy is concentrated in the signal spectrum. Voiced speech is produced by excitation of the vocal tract by the periodic flow of air at the glottis and usually shows a low zero-crossing count [9], whereas unvoiced speech is produced by a constriction of the vocal tract narrow enough to cause turbulent airflow, which results in noise and a high zero-crossing count.

Energy is another parameter for classifying the voiced/unvoiced parts of speech. The voiced part of speech has high energy because of its periodicity, and the unvoiced part has low energy. The analysis for classifying the voiced/unvoiced parts of speech is illustrated in the block diagram in Fig. 1.

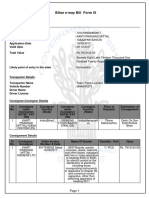

Fig.1: Block diagram of the voiced/unvoiced classification.

Fig. 2: Frame-by-frame processing of speech signal.

In the first stage, the speech signal is divided into non-overlapping frames, as shown in Fig. 2.

2.1. Zero-Crossings Rate

In the context of discrete-time signals, a zero crossing is said to occur if successive samples have different algebraic signs. The rate at which zero crossings occur is a simple measure of the frequency content of a signal. Zero-crossing rate measures the number of times in a given time interval/frame that the amplitude of the speech signal passes through zero (Fig. 3 and Fig. 4). Speech signals are broadband signals, so the interpretation of the average zero-crossing rate is much less precise than for narrowband signals. However, rough estimates of spectral properties can be obtained using a representation based on the short-time average zero-crossing rate [11].

Fig. 3: Definition of zero-crossings rate

Fig. 4: Distribution of zero-crossings for unvoiced and voiced speech [11].

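A definition for zero-crossings rate is:

$$Z_n = \sum_{m=-\infty}^{\infty} \left| \operatorname{sgn}[x(m)] - \operatorname{sgn}[x(m-1)] \right| \, w(n-m) \tag{1}$$

where

$$\operatorname{sgn}[x(n)] = \begin{cases} 1, & x(n) \ge 0 \\ -1, & x(n) < 0 \end{cases} \tag{2}$$

and

$$w(n) = \begin{cases} \dfrac{1}{2N}, & 0 \le n \le N-1 \\ 0, & \text{otherwise} \end{cases} \tag{3}$$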
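The zero-crossing definition above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' MATLAB code: the function names, the test tones, and the small phase offset (used to avoid samples that land exactly on zero) are assumptions. As a simplification, only sample pairs inside the frame are compared, so the pair that straddles the frame boundary is ignored.

```python
import math

def sgn(v):
    """Sign function of Eq. (2): +1 for v >= 0, -1 for v < 0."""
    return 1 if v >= 0 else -1

def zero_crossing_count(frame):
    """Number of sign changes between successive samples of one frame."""
    return sum(1 for m in range(1, len(frame)) if sgn(frame[m]) != sgn(frame[m - 1]))

def zero_crossing_rate(frame):
    """Eq. (1) for one frame with the rectangular window 1/(2N) of Eq. (3).

    Each sign change contributes |(+1) - (-1)| = 2, so the result equals
    the crossing count divided by the frame length N.
    """
    n = len(frame)
    if n < 2:
        return 0.0
    total = sum(abs(sgn(frame[m]) - sgn(frame[m - 1])) for m in range(1, n))
    return total / (2.0 * n)

FS = 8000  # sampling frequency in Hz, as used in the paper
N = 400    # frame length in samples, as used in the paper

def tone(freq_hz, n_samples, fs=FS, phase=0.1):
    """Hypothetical test signal: a sine tone with a small phase offset."""
    return [math.sin(2.0 * math.pi * freq_hz * n / fs + phase) for n in range(n_samples)]

low = tone(100, N)    # low-frequency tone, few crossings
high = tone(3000, N)  # high-frequency tone, many crossings

print("100 Hz tone: ", zero_crossing_count(low), "crossings")
print("3000 Hz tone:", zero_crossing_count(high), "crossings")
```

The low-frequency tone yields far fewer crossings per frame than the high-frequency tone, which is the behaviour the voiced/unvoiced decision relies on.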

The model for speech production suggests that the energy of voiced speech is concentrated below about 3 kHz because of the spectral falloff introduced by the glottal wave, whereas for unvoiced speech most of the energy is found at higher frequencies. Since high frequencies imply high zero-crossing rates and low frequencies imply low zero-crossing rates, there is a strong correlation between zero-crossing rate and the distribution of energy with frequency. A reasonable generalization is that if the zero-crossing rate is high, the speech signal is unvoiced, while if the zero-crossing rate is low, the speech signal is voiced [11].

2.2. Short-Time Energy

The amplitude of the speech signal varies with time. Generally, the amplitude of unvoiced speech segments is much lower than the amplitude of voiced segments. The energy of the speech signal provides a representation that reflects these amplitude variations. Short-time energy can be defined as:

$$E_n = \sum_{m=-\infty}^{\infty} \left[ x(m) \, w(n-m) \right]^2 \tag{4}$$

The choice of the window determines the nature of the short-time energy representation. In our model, we used the Hamming window, which gives much greater attenuation outside the passband than the comparable rectangular window.

$$h(n) = \begin{cases} 0.54 - 0.46 \cos\!\left( \dfrac{2\pi n}{N-1} \right), & 0 \le n \le N-1 \\ 0, & \text{otherwise} \end{cases} \tag{5}$$
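As a rough illustration of the short-time energy and Hamming window definitions, the following Python sketch computes the energy of a single frame. The function names and the test frames are assumptions made for illustration. Because the Hamming window is symmetric, the time reversal implied by w(n - m) does not change the result for a frame aligned with the window.

```python
import math

def hamming(N):
    """Hamming window of length N: 0.54 - 0.46*cos(2*pi*n/(N-1)), n = 0..N-1."""
    if N == 1:
        return [1.0]  # avoid dividing by zero for a single-sample window
    return [0.54 - 0.46 * math.cos(2.0 * math.pi * n / (N - 1)) for n in range(N)]

def short_time_energy(frame, window=None):
    """Sum of squared windowed samples for one frame (Hamming window by default)."""
    if window is None:
        window = hamming(len(frame))
    if len(window) != len(frame):
        raise ValueError("window and frame must have the same length")
    return sum((x * w) ** 2 for x, w in zip(frame, window))

# Hypothetical frames: the same waveform at two amplitudes.
N = 400
quiet = [0.01 * math.sin(2.0 * math.pi * 100 * n / 8000) for n in range(N)]
loud = [10.0 * v for v in quiet]

print("quiet frame energy:", short_time_energy(quiet))
print("loud frame energy: ", short_time_energy(loud))
```

Scaling the amplitude by a factor of 10 scales the energy by a factor of 100, which is why energy separates high-amplitude voiced frames from low-amplitude unvoiced frames so strongly.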

Fig. 5: Computation of Short-Time Energy [11].

The attenuation of this window is independent of the window duration. Increasing the length $N$ decreases the bandwidth (Fig. 5). If $N$ is too small, $E_n$ will fluctuate very rapidly depending on the exact details of the waveform. If $N$ is too large, $E_n$ will change very slowly and thus will not adequately reflect the changing properties of the speech signal [11].
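The effect of the window length can be checked numerically. The sketch below is an illustration under assumptions (a steady 100 Hz test tone at 8000 Hz, sliding Hamming windows of 10 and 400 samples, and a hypothetical `relative_spread` measure). It tracks the windowed energy sample by sample and compares how much it fluctuates for each window length.

```python
import math

def hamming(N):
    """Hamming window of length N: 0.54 - 0.46*cos(2*pi*n/(N-1))."""
    if N == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2.0 * math.pi * n / (N - 1)) for n in range(N)]

def energy_track(x, N):
    """Windowed energy for every window position that fits inside x (hop of 1 sample)."""
    w = hamming(N)
    return [sum((x[s + k] * w[k]) ** 2 for k in range(N))
            for s in range(len(x) - N + 1)]

def relative_spread(values):
    """(max - min) / mean: a simple, hypothetical measure of fluctuation."""
    mean = sum(values) / len(values)
    return (max(values) - min(values)) / mean

FS = 8000
# Steady 100 Hz tone: its true energy level does not change over time.
tone = [math.sin(2.0 * math.pi * 100 * n / FS) for n in range(1600)]

spread_short = relative_spread(energy_track(tone, 10))   # N much shorter than one period
spread_long = relative_spread(energy_track(tone, 400))   # N spans several periods

print("relative spread, N = 10: ", spread_short)
print("relative spread, N = 400:", spread_long)
```

With the short window the energy follows individual oscillations of the waveform, while the long window gives an almost constant value for this steady tone. On real speech, the same smoothing is what makes a very long window slow to respond to changes.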

3. Results

MATLAB 7.0 was used for our calculations. We chose MATLAB as our programming environment because it contains a variety of signal processing and statistical tools for generating and plotting signals, and because it excels at numerical computations, especially with vectors or matrices of data. One of the speech signals used in this study is shown in Fig. 6. The proposed voiced/unvoiced classification algorithm uses the short-time zero-crossing rate and energy of the speech signal. The results of the voiced/unvoiced decision using our model are presented in Table 1.

[Plot titled "Four"; x-axis: Samples (ticks from 1000 to 8000); y-axis: Amplitude (-0.2 to 0.2)]

Fig.6: Original speech signal for word four.

Table 1: Voiced/unvoiced decisions for the word "four" using the model (sampling frequency fs = 8000 Hz).

| Frame | Samples | ZCR | Energy (J) | Decision |
|---|---|---|---|---|
| Frame-1 | 400 | 152 | 0.0018 | Unvoiced |
| Frame-2₁ | 200 | 52 | 0.0543 | Unvoiced |
| Frame-2₂ | 200 | 19 | 21.1189 | Voiced |
| Frame-3 | 400 | 41 | 186.6628 | Voiced |
| Frame-4 | 400 | 41 | 230.5772 | Voiced |
| Frame-5 | 400 | 43 | 252.98 | Voiced |
| Frame-6 | 400 | 56 | 193.70 | Voiced |
| Frame-7₁ | 200 | 31 | 27.2842 | Voiced |
| Frame-7₂ | 200 | 30 | 25.960 | Voiced |
| Frame-8₁₁ | 100 | 24 | 3.4214 | Voiced |
| Frame-8₁₂ | 100 | 11 | 0.4765 | Unvoiced |
| Frame-8₂ | 200 | 19 | 0.166 | Unvoiced |
| Frame-9 | 400 | 89 | 0.0054 | Unvoiced |

In the frame-by-frame processing stage, the speech signal is segmented into non-overlapping frames of samples and processed frame by frame until the entire signal is covered. Table 1 lists the voiced/unvoiced decisions for the word "four", which has 3600 samples at a sampling rate of 8000 Hz. Initially, the frame size is set to 400 samples. If the decision for a frame is not clear at the end of the algorithm, the energy and zero-crossing rate are recalculated after dividing that frame into two smaller frames. This subdivision can be seen for Frames 2, 7, and 8 in Table 1.
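The frame-splitting procedure can be sketched as a small recursive routine. The paper does not state its thresholds or what makes a decision "not clear", so everything specific here is an assumption for illustration: the thresholds `zcr_thr` and `energy_thr`, the rule that a frame is unclear when the two cues disagree, the normalization of both features by frame length, the minimum frame size of 100 samples, and the synthetic test signal.

```python
import math

def hamming(N):
    """Hamming window of length N: 0.54 - 0.46*cos(2*pi*n/(N-1))."""
    if N == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2.0 * math.pi * n / (N - 1)) for n in range(N)]

def features(frame):
    """Return (zero crossings per sample, Hamming-windowed energy per sample)."""
    n = len(frame)
    crossings = sum(1 for m in range(1, n) if (frame[m] >= 0) != (frame[m - 1] >= 0))
    energy = sum((x * w) ** 2 for x, w in zip(frame, hamming(n)))
    return crossings / n, energy / n

def decide(zcr, energy, zcr_thr, energy_thr):
    """Assumed rule: both cues must agree; otherwise the decision is unclear (None)."""
    low_zcr = zcr < zcr_thr
    high_energy = energy > energy_thr
    if low_zcr and high_energy:
        return "voiced"
    if not low_zcr and not high_energy:
        return "unvoiced"
    return None

def classify_frame(signal, start, size, zcr_thr, energy_thr, min_size):
    """Classify one frame, splitting it in two when the decision is unclear."""
    zcr, energy = features(signal[start:start + size])
    label = decide(zcr, energy, zcr_thr, energy_thr)
    half = size // 2
    if label is not None or half < min_size:
        return [(start, size, label or "unclear")]
    return (classify_frame(signal, start, half, zcr_thr, energy_thr, min_size)
            + classify_frame(signal, start + half, size - half, zcr_thr, energy_thr, min_size))

def segment(signal, frame_size=400, zcr_thr=0.2, energy_thr=1e-3, min_size=100):
    """Walk the signal in non-overlapping frames; thresholds are hypothetical."""
    decisions = []
    for start in range(0, len(signal), frame_size):
        size = min(frame_size, len(signal) - start)
        decisions += classify_frame(signal, start, size, zcr_thr, energy_thr, min_size)
    return decisions

def tone(freq_hz, amp, n_samples, fs=8000, phase=0.1):
    """Hypothetical test tone standing in for a voiced or unvoiced stretch."""
    return [amp * math.sin(2.0 * math.pi * freq_hz * n / fs + phase)
            for n in range(n_samples)]

# Synthetic signal: a voiced-like frame, a frame that is half and half, an unvoiced-like frame.
signal = (tone(100, 0.5, 400)
          + tone(100, 0.5, 200) + tone(3000, 0.01, 200)
          + tone(3000, 0.01, 400))

decisions = segment(signal)
for start, size, label in decisions:
    print(f"samples {start}-{start + size - 1} ({size} samples): {label}")
```

In this synthetic case the middle frame has both a high zero-crossing rate and a high energy, so it is split into two 200-sample frames that can each be decided on their own, mirroring the subdivision of Frames 2, 7, and 8 in Table 1.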

4. Conclusion

We have presented a simple and efficient approach for separating the voiced and unvoiced parts of speech. The algorithm shows good results in classifying speech when the signal is segmented into many frames. In future work, we plan to improve voiced/unvoiced discrimination in noise.

References:

[1] Jong Kwan Lee, Chang D. Yoo, Wavelet speech enhancement based on voiced/unvoiced decision, Korea Advanced Institute of Science and Technology, The 32nd International Congress and Exposition on Noise Control Engineering, Jeju International Convention Center, Seogwipo, Korea, August 25-28, 2003.

[2] B. Atal and L. Rabiner, A Pattern Recognition Approach to Voiced-Unvoiced-Silence Classification with Applications to Speech Recognition, IEEE Trans. on ASSP, vol. ASSP-24, pp. 201-212, 1976.

[3] S. Ahmadi and A.S. Spanias, Cepstrum-Based Pitch Detection using a New Statistical V/UV Classification Algorithm, IEEE Trans. Speech Audio Processing, vol. 7, no. 3, pp. 333-338, 1999.

[4] Y. Qi and B.R. Hunt, Voiced-Unvoiced-Silence Classifications of Speech using Hybrid Features and a Network Classifier, IEEE Trans. Speech Audio Processing, vol. 1, no. 2, pp. 250-255, 1993.

[5] L. Siegel, A Procedure for using Pattern Classification Techniques to obtain a Voiced/Unvoiced Classifier, IEEE Trans. on ASSP, vol. ASSP-27, pp. 83-88, 1979.

[6] T.L. Burrows, Speech Processing with Linear and Neural Network Models, Ph.D. thesis, Cambridge University Engineering Department, U.K., 1996.

[7] D.G. Childers, M. Hahn, and J.N. Larar, Silent and Voiced/Unvoiced/Mixed Excitation (Four-Way) Classification of Speech, IEEE Trans. on ASSP, vol. 37, no. 11, pp. 1771-1774, 1989.

[8] Jashmin K. Shah, Ananth N. Iyer, Brett Y. Smolenski, and Robert E. Yantorno, Robust voiced/unvoiced classification using novel features and Gaussian Mixture model, Speech Processing Lab., ECE Dept., Temple University, 1947 N 12th St., Philadelphia, PA 19122-6077, USA.

[9] Jaber Marvan, Voice Activity detection Method and Apparatus for voiced/unvoiced decision and Pitch Estimation in a Noisy speech feature extraction, 08/23/2007, United States Patent 20070198251.

[10] Thomas F. Quatieri, Discrete-Time Speech Signal Processing: Principles and Practice, MIT Lincoln Laboratory, Lexington, Massachusetts, Prentice Hall, ISBN-13: 9780132429429.

[11] L. R. Rabiner and R. W. Schafer, Digital Processing of Speech Signals, Englewood Cliffs, New Jersey, Prentice Hall, 512 pp., ISBN-13: 9780132136037, 1978.

Short Biographies of the Authors:

Rajesh G. Bachu is a Graduate Assistant in Electrical Engineering at the University of Bridgeport, Bridgeport, CT. He is pursuing his Master of Science in Electrical Engineering at the University of Bridgeport, CT.

Kopparthi S. is a graduate student in Electrical Engineering at the University of Bridgeport, Bridgeport, CT. He is pursuing his Master of Science in Electrical Engineering at the University of Bridgeport, CT.

Adapa B. is a graduate student in Electrical Engineering at the University of Bridgeport, Bridgeport, CT. He is pursuing his Master of Science in Electrical Engineering at the University of Bridgeport, CT.

Buket D. Barkana is a Visiting Assistant Professor in the Department of Electrical Engineering at the University of Bridgeport, Bridgeport, CT. She earned her Ph.D. from Eskisehir Osmangazi University, Turkey, in 2005. Her interests include power electronics device design and speech signal processing.

S-ar putea să vă placă și

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- The Variable Resistor Has Been AdjustedDocument3 paginiThe Variable Resistor Has Been AdjustedPank O RamaÎncă nu există evaluări

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (895)

- ASCE Snow Loads On Solar-Paneled RoofsDocument61 paginiASCE Snow Loads On Solar-Paneled RoofsBen100% (1)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

- L2 Biostatistics ProbabilityDocument84 paginiL2 Biostatistics ProbabilityAaron CiudadÎncă nu există evaluări

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (399)

- Bahir Dar University BIT: Faculity of Mechanical and Industrial EngineeringDocument13 paginiBahir Dar University BIT: Faculity of Mechanical and Industrial Engineeringfraol girmaÎncă nu există evaluări

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (266)

- Pref - 2 - Grammar 1.2 - Revisión Del IntentoDocument2 paginiPref - 2 - Grammar 1.2 - Revisión Del IntentoJuan M. Suarez ArevaloÎncă nu există evaluări

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- Full Download Short Term Financial Management 3rd Edition Maness Test BankDocument35 paginiFull Download Short Term Financial Management 3rd Edition Maness Test Bankcimanfavoriw100% (31)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- Ecotopia Remixed II-FormattedDocument54 paginiEcotopia Remixed II-FormattedthisisdarrenÎncă nu există evaluări

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Guidelines For The Management of Brain InjuryDocument26 paginiGuidelines For The Management of Brain InjuryfathaÎncă nu există evaluări

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (588)

- Product Stock Exchange Learn BookDocument1 paginăProduct Stock Exchange Learn BookSujit MauryaÎncă nu există evaluări

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- By This Axe I Rule!Document15 paginiBy This Axe I Rule!storm0% (1)

- Viscous Fluid Flow Frank M White Third Edition - Compress PDFDocument4 paginiViscous Fluid Flow Frank M White Third Edition - Compress PDFDenielÎncă nu există evaluări

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- HMT RM65 Radial DrillDocument2 paginiHMT RM65 Radial Drillsomnath213Încă nu există evaluări

- Modern Myth and Magical Face Shifting Technology in Girish Karnad Hayavadana and NagamandalaDocument2 paginiModern Myth and Magical Face Shifting Technology in Girish Karnad Hayavadana and NagamandalaKumar KumarÎncă nu există evaluări

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2259)

- ZhentarimDocument4 paginiZhentarimLeonartÎncă nu există evaluări

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (73)

- Generalized Anxiety DisorderDocument24 paginiGeneralized Anxiety DisorderEula Angelica OcoÎncă nu există evaluări

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (344)

- Assignment 4 SolutionsDocument9 paginiAssignment 4 SolutionsNengke Lin100% (2)

- Properties of Matter ReviewDocument8 paginiProperties of Matter Reviewapi-290100812Încă nu există evaluări

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- 10th ORLIAC Scientific Program As of 26 Jan 2018Document6 pagini10th ORLIAC Scientific Program As of 26 Jan 2018AyuAnatrieraÎncă nu există evaluări

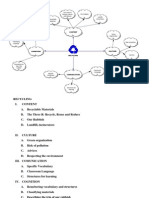

- Recycling Mind MapDocument2 paginiRecycling Mind Mapmsole124100% (1)

- Kwasaki ZX10R 16Document101 paginiKwasaki ZX10R 16OliverÎncă nu există evaluări

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- Tuesday, 16 November 2021 - Afternoon Discovering ElectronicsDocument20 paginiTuesday, 16 November 2021 - Afternoon Discovering Electronicsnvmalt070Încă nu există evaluări

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- Arts6,4, Week2, Module 2V4Document24 paginiArts6,4, Week2, Module 2V4Loreen Pearl MarlaÎncă nu există evaluări

- SP 73Document105 paginiSP 73Rodrigo Vilanova100% (3)

- Egt Margen From The Best ArticalDocument6 paginiEgt Margen From The Best ArticalakeelÎncă nu există evaluări

- Intel Stratix 10 Avalon - MM Interface For PCI Express Solutions User GuideDocument173 paginiIntel Stratix 10 Avalon - MM Interface For PCI Express Solutions User Guideenoch richardÎncă nu există evaluări

- Chapter 10 - The Mature ErythrocyteDocument55 paginiChapter 10 - The Mature ErythrocyteSultan AlexandruÎncă nu există evaluări

- Fama Fraternitatis Rosae Crucis PDFDocument2 paginiFama Fraternitatis Rosae Crucis PDFJudy50% (2)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (121)

- SSCNC Turning Tutorial ModDocument18 paginiSSCNC Turning Tutorial ModYudho Parwoto Hadi100% (1)

- Electrical Power System Device Function NumberDocument2 paginiElectrical Power System Device Function Numberdan_teegardenÎncă nu există evaluări

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)