IMPLICATIONS OF CACHE MANAGEMENT IN PARTITIONED SYSTEMS.

Mar Soler1, Alfons Crespo1, Miguel Masmano2 and Roger Llorca-Cejudo3

1 UPV, Camino de Vera s/n, 46022 Valencia, Spain. Email: msolher@ai2.upv.es, acrespo@disca.upv.es
2 fentISS, CPI-UPV, Camino de Vera s/n, 46022 Valencia, Spain. Email: mmasmano@fentiss.com
3 CNES, 18, avenue Edouard Belin, 31401 Toulouse Cedex 9, France. Email: roger.llorca-cejudo@cnes.fr

1. INTRODUCTION

The space industry has historically relied on robust, somewhat conservative architectures that avoided performance-boosting techniques in order to ensure verifiability. This choice has become unfeasible due to the increasing complexity and volume of the required programs and of the data handled. The industry has opted to invest in research on partitioned systems as the best way to cope with its needs. No new standard has been created by the space industry for partitioned systems, so ARINC-653 is used. The ARINC-653 standard establishes the requirements of safety-critical partitioned systems in avionics, including time and space isolation. The cache, being a shared resource, violates time isolation, but it is necessary nowadays due to performance requirements.

In the framework of a CNES project on cache management in systems running the XtratuM hypervisor, an in-depth analysis has been carried out of the applicability of state-of-the-art solutions to the unpredictability caused by the instruction cache, with the aim of guaranteeing time isolation in partitioned systems. An extensive explanation of XtratuM is beyond the scope of the present survey; please refer to http://www.xtratum.org for information on this hypervisor. This abstract is a summary of the research performed in the aforementioned project for CNES. To the best of our knowledge, there have been no studies so far on the relationship between cache and time isolation in partitioned systems, and thus this survey intends to extrapolate the results obtained in research addressing high integrity systems in general to our more concrete and complex scenario.

2. PARTITIONED SYSTEMS OVERVIEW

Partitioned systems are a particular case of high integrity systems where hardware resources are divided into logical partitions, each virtualized as a separate computer, to support several applications and allow their independent execution. Partitioning is a functional separation of applications both for fault containment (to prevent a faulty partition from causing a failure in any other) and for ease of verification, validation and certification. To achieve this, partitions must be granted time and space isolation, according to ARINC-653. Space isolation consists in granting disjoint address spaces to the partitions: no address can be shared by several partitions and no partition is allowed to access the address space of any other¹. Time isolation consists in avoiding any influence of the execution of one partition on the execution time of any other. To achieve these and other requirements, a hypervisor such as XtratuM must be used. In the remainder of this section, the cache-related issues specific to this kind of system are discussed.

Since the cache is a hardware resource shared by all partitions in the system, it is clear that concurrent impact² will be generated, violating time isolation. According to the ARINC-653 standard, such a violation must be avoided. A very straightforward way to avoid concurrent impact between partitions is to flush the cache at every partition context switch. This solution is currently used by CNES, but it limits the performance-boosting effect of the cache. The final goal of our project is to find a solution that meets this time isolation requirement while affecting performance as little as possible.

¹ Space isolation can be only partially achieved in LEON2, since there is a mechanism for write isolation, but the lack of an MMU prevents read isolation.
² This concept is explained further in the text.

3. EMBEDDED SYSTEMS AND CACHE

In this section, the problems that cache behavior causes in high integrity systems and the main solutions proposed in the literature so far are explained.

3.1. Cache-Caused Problems in High Integrity Systems

High integrity systems must be certified in order to be considered acceptable. This certification relies on the verifiability of the schedulability of the required plan. This means not only that the worst-case execution time (WCET) must be within the bounds of the required schedule, but also that this must be demonstrable. In traditional architectures, this demonstration was almost trivial by analysis because these architectures were simple to model. As performance-enhancing techniques have been added, the model has grown more complex and so the verification of the schedule has also become more difficult. Traditionally, high integrity system designers have avoided such unpredictable technologies, but nowadays the need for performance has increased and caches have become unavoidable.

The problems caused by caches in high integrity systems can be summarized as unpredictability due to the dynamic and adaptive behavior of caches. More precisely, they cause a high variability of execution times that endangers the verifiability of an embedded system; they are non-analyzable because the dynamic behavior of caches makes execution states complex to determine; they cause local nondeterminism, i.e. the timing behavior of an individual instruction cannot be determined locally, but depends on the history of execution, which can be arbitrarily long; and they violate temporal isolation, since caches are globally shared resources.

Caches increase the variability of the execution time because of the difference in time consumption between cache hits and misses. The factors that influence the proportion of misses are: size impact (a program that uses more code or data than the cache can hold has many more cache misses); sequential impact, also called intra-task interference (the contents of the cache at a given time depend on the history of execution, and a program typically contains many different paths); concurrent impact, also named inter-task interference (when a higher-priority task or interrupt suspends the execution of the current task, part of its working set is likely to be evicted, causing further cache misses when execution is resumed); and finally layout impact (where code and data are placed in main memory influences the pattern of hits and misses).

3.2. Approaches Proposed in Bibliography

Many proposals have been made to solve the problems presented in the previous subsection. Some of these approaches are intended only to give a more accurate WCET bound, without trying to increase predictability or performance. Others try to improve cache management so that predictability and performance are enhanced. However interesting and useful, approaches aimed at improving WCET estimation are inapplicable to the present project and thus they are not included in this abstract. Please refer to the bibliography ([1] [2]) for more information.

In the remainder of this section, approaches aimed at enhancing cache behavior are presented. There are five main approaches: cache partitioning, cache locking, considering the cache as part of the partition context, cache-aware programming style and cache-aware layout. Both cache-aware programming and cache-aware layout are outside the scope of the present project and will not be analysed further.

Cache Partitioning: The cache is partitioned among tasks to avoid inter-task interference (concurrent impact). There are several possibilities: a partition per task, a partition for each of some high-priority tasks and a pool for the other tasks, a pool for every n tasks, etc. These solutions reduce concurrent impact but increase sequential impact by reducing the amount of cache available when a task is being executed (it is just a fraction of the total cache available without partitioning). Cache partitioning can be implemented either in software or with specific hardware.

In [5] J. Stärner presents a hardware solution. The cache is divided into two partitions, one for the current task and the other for the next task to be executed. A high integrity kernel is introduced that loads the last cache status of the task to be executed next, concurrently with the execution of the current task, thus avoiding the overhead of loading the cache. The advantages of this solution are its speed, parallel execution and offloading the scheduling algorithm and other OS services from the processor. Its obvious drawback is the need to modify the hardware, which can be very difficult, especially in a conservative field such as high integrity systems. Also, the task to be executed next is not always known, especially if interrupts are involved.

In [6] B. D. Bui, M. Caccamo, L. Sha and J. Martinez propose a software-based cache partitioning technique, more specifically an OS-controlled technique. This implementation treats cache partitioning as an optimization problem, solved with a genetic algorithm. The advantages of this method are that no extra hardware is required and that it allows more flexibility (software, unlike hardware, can be changed as often as needed). Its obvious drawback is the overhead caused by the operating system having to find a good partitioning scheme.

Cache Locking: Locking the cache can be useful to prevent potentially useful lines from being evicted from the cache. This can be achieved in many different ways; the main three are:

1. Locking the cache when an interrupt arrives: it avoids the unpredictable interference of interrupts with the cache behavior of the normal execution path. It is especially useful when the scheduling policy is highly predictable (for example in cyclic scheduling) and interrupt handling is not very critical.

2. Static cache locking [7]: contents are explicitly loaded into the cache at the beginning of the activity of the system, and the cache is locked so that it does not change during the whole life of the system. This appears to perform poorly (in terms of cache misses), but is totally predictable.

3. Dynamic cache locking [8]: the cache is loaded at different points of the system activity. This option causes fewer cache misses but incurs a considerable loading overhead.

The cache can also be locked when some tasks are scheduled. If a task takes almost no benefit from using the cache, it may be better to disable the cache for this task so that it does not delay the tasks currently using the cache. The cache can be locked completely or just partially in all the cases presented.

Cache State as Part of the Context of the Program: Similarly to dynamic cache locking, the contents of the cache are explicitly loaded into it, but in this case at every context switch. At the beginning of every context switch the current contents of the cache are stored in memory and, at the end of the context switch, the cache state of the following task is restored. This causes a considerable overhead. This method has been mentioned in the literature but scarcely studied due to this overhead. In systems where the context switch object is a task or a thread, this cost is said to be much too high for the performance enhancement and predictability it achieves [4]. However, in partitioned systems, where the context switch object is a partition, potentially including an operating system and several tasks or threads, it must be taken into account as a possible solution. A more precise idea of the actual costs and benefits of this approach should be obtained in order to decide on its feasibility and interest.

4. PARTICULARITIES OF LEON2

As already stated, our ultimate objective is to enhance XtratuM-based partitioned systems on LEON2 by granting better time isolation while affecting performance as little as possible. For this reason, the particularities of LEON2 are studied in this section. First, the main characteristics of the LEON2 cache are presented; then the PEAL and COLA works, closely related to our research, are discussed.

4.1. LEON2 Cache Memories

The LEON2 architecture has separate on-chip instruction and data buses, and only one level of caching with separate and independent instruction and data caches. The Harvard architecture is confined to on-chip accesses and cache hits, since the main memory bus is shared. Both caches can be configured separately. Either cache can be in one of three modes: disabled, enabled or frozen. If a cache is enabled, it operates normally. If a cache is disabled, there is an overhead on every cache miss. After disabling the cache, there is no automatic flush when it is re-enabled. If this behavior is required, the flush must be performed manually. If a cache is frozen, it operates normally, but read misses do not allocate new lines and no memory block is evicted. However, a read miss that refers to a block partially present in the cache is loaded into the cache, and writes to an address in the cache update both cache and memory.

Regarding features of caches that favor predictability, the following apply. It is possible to freeze the cache either automatically when an asynchronous trap occurs or under program control. The LEON2 cache can be accessed directly using special load/store instructions. This allows very accurate control of the cache and great flexibility. Per-line locking is not available in LEON2-FT (the fault-tolerant version of LEON2). Cache partitioning is not supported by specific hardware.

4.2. State of the Art in SPARC-Oriented Solutions

Some cache-performance-related projects focused on the LEON2 architecture have been carried out in recent years. The PEAL2 [10] [11] and COLA [4] projects, closely related to our own, are presented here. Both projects have focused on cache-aware layout, creating an automatic tool to find an (approximately) optimal layout. The remainder of this section consists of a summary of these projects.

The Rapita team claims that the random code layout produced by the linker can lead to otherwise avoidable conflict misses (which would cause a longer WCET), or to sudden changes in WCET if the code is modified. They propose a framework to automatically obtain a good layout. This framework has three inputs: the program structure, i.e. a list of all sub-programs with their size and address offset, which can be generated by the compiler; an I-cache specification; and a list of constraints (available memory areas and the like). The framework, called ICCA (Instruction Cache Conflict Analyzer), produces new layouts and evaluates them by simulation.

4.3. The PEAL2 Project

The project objectives are finding new algorithms to compute better code layouts; defining the requirements of a tool, called ICCA, that automatically produces these layouts; and analyzing the results of these different layouts on on-board software provided by TAS. Two methods are used to obtain good layouts: first a genetic algorithm, and second a heuristic that lays out code according to its execution frequency (CLDS or Call-Loop Data Structure). When the best layout is obtained, the ICCA tool generates an XML file with the layout to be imposed on the linker. The Rapita team concluded that not all software would profit from a cache-aware layout and that little or no difference would be obtained by changing the layout of certain programs or certain parts of a program.

4.4. The COLA Project

As a result of the conclusions of the PEAL2 project, the COLA project intended to identify automatic methods to analyze and control the impact of the cache on execution times in high integrity systems; improve the ICCA tool; analyze the state of the art of other cache analysis and enhancement techniques; and evaluate the real possibilities of integrating cache analysis in the industrial world.

5. PROOF OF CONCEPT AND PROPOSED SCHEMA

5.1. Proof of Concept

After the study presented above, a proof of concept was carried out in order to assess the possibilities of modifying cache behavior to preserve time isolation. This proof of concept includes three different methods: cache locking, cache partitioning and cache as part of a partition context. They are presented in the remainder of the abstract.

5.1.1. Cache Locking

Per-line cache locking is unavailable in LEON2-FT, but freezing the cache works properly. When the cache is frozen, no cache miss causes a line to be loaded from memory into the cache. Data and tags of each cache line can be manually updated with sta and lda instructions, allowing both static and dynamic locking of the cache, as well as treating the cache as part of the partition context.

5.1.2. Cache Partitioning

Since LEON2 has no hardware facility to partition the cache, a software partitioning method has been tested. To partition the cache in software, code must be placed in memory in such a way that instructions from different partitions are located at memory addresses that map to different cache partitions. As an example, with a 2 Kbyte 2-way cache, the address space would be 1 Kbyte. With two ways, it may seem that two different partitions can use the same address interval (one per way). The following example shows that this would violate time isolation: partitions A and B share the same address interval. A fills the partition in one of the ways and is preempted by B. B has two different addresses that map to the same cache address. LRU makes B evict a line of the way A was using, and thus time isolation is violated.

To partition a 1 Kbyte cache into 4 pieces of 256 bytes, it must be guaranteed that the code corresponding to each of the pieces in the cache is placed at the addresses 0xX2n000-0xX2n7FF; 0xX2n800-0xX2nFFF; 0xX2n+1000-0xX2n+17FF; 0xX2n+1800-0xX2n+1FFF. To achieve this, code must be divided into functions of at most 256 bytes, and each function must have the section attribute and the proper alignment. This can be achieved with a linker file that can be generated automatically by a script, provided that sections are specified in the program and the number of cache partitions is specified before linking (it can be a parameter in the makefile and could be placed in the xm_cf file).

It must be noted that in the kind of systems involved, spatial isolation is needed, and the mechanism available in LEON2 to isolate partitions spatially requires the memory of a partition to be a single area, not allowing the interleaving of areas corresponding to different partitions. For N partitions, only 1/N of the memory can be used, which is considerably restrictive.

5.2. Proposed Schema

After the proof of concept, it was concluded that considering the cache as part of the context was the most promising of the available options. Static and dynamic cache freezing are very restrictive and performance-limiting, so it has been decided not to explore this path at the moment. Partitioning the cache in software is a complicated task that requires a considerable amount of user effort and severely limits memory use. As stated before, the time cost of considering the cache as part of the context in systems where the context switch object is a task or a thread can be considered too high. However, in partitioned systems, where the context switch object is a partition and the main goal is not performance enhancement but time isolation, it seems to be the most promising solution.

Two complementary functions have been coded to save and load the cache contents before and after the context switch. These functions can be used in three ways:

1. The functions are placed right before and after the actual context switch. The saved cache is potentially very corrupted (the path followed from the arrival of the timer trap or the hypercall to the context switch can be long, thus altering the contents of the cache and making this solution useless).

2. When a trap arrives, its cause is checked: if it can cause a context switch, the cache contents are saved in memory. The corruption of the cache is very small. The drawback of this implementation is that sometimes the cache is stored in memory with no subsequent context switch.

3. A middle-way solution: the cache is frozen at the point where it is saved in the second solution (to prevent corruption) and saved right before the context switch.

6. CONCLUSIONS

This abstract starts with an overview of the particularities of partitioned systems that concern the project. Subsequently, the state-of-the-art approaches proposed in the literature to solve cache-caused unpredictability are discussed. Later on, a more specific overview regarding LEON2 is included. Finally, a summary of the proof of concept and a proposed solution is provided. After the proof of concept, it was concluded that considering the cache as part of the context was the most promising of the available options. Several alternative implementations of this solution have been carried out. Further research into this subject includes timing measurements, choosing the best implementation among the three and comparing its performance to that obtained by flushing the cache at every context switch. This solution is still in a testing stage. The results obtained may support further articles on this topic.

REFERENCES

[1] Ferdinand, C., Wilhelm, R.: Fast and Efficient Cache Behavior Prediction for Real-Time Systems. Real-Time Systems, 131-181 (1999)
[2] Mueller, F.: Predicting Instruction Cache Behavior. Language, Compilers and Tools for Real-Time Systems (LCTRTS) (1994)
[3] Wilhelm, R., Engblom, J., Ermedahl, A., Holsti, N., Thesing, S., Whalley, D., Bernat, G., Ferdinand, C., Heckmann, R., Mitra, T., Mueller, F., Puaut, I., Puschner, P., Staschulat, J., Stenström, P.: The Worst-Case Execution Time Problem - Overview of Methods and Survey of Tools. ACM Transactions on Embedded Computing Systems (2008)
[4] Mezzeti, E.: COLA Final Report. University of Padua (2010)
[5] Basumallick, S., Nilsen, D.: Cache Issues in Real-Time Systems. ACM SIGPLAN Workshop on Language, Compiler and Tool Support for Real-Time Systems (1996)
[6] Busquets-Mataix, J. V., Wellings, A.: Adding Instruction Cache Effect to Schedulability Analysis of Preemptive Real-Time Systems. Proceedings of the second Real-Time and Application Symposium (1996)
[7] Bui, B. D., Caccamo, M., Sha, L., Martinez, J.: Impact of Cache Partitioning on Multi-Tasking Real Time Embedded Systems. Embedded and Real-Time Computing Systems and Applications, RTCSA 08, 14th IEEE International Conference (2008)
[8] Liedtke, J., Härtig, H., Hohmuth, M.: OS-Controlled Cache Predictability for Real-Time Systems. Real-Time Technology and Applications Symposium, Proceedings, Third IEEE (1999)
[9] Asaduzzaman, A., Limbachiya, N., Mahgoub, I.: Predictability and Performance Enhancement for Real-Time Embedded Systems by Cache-Locking. 10th Euromicro Workshop on Real-Time Systems (1998)
[10] Betts, A. et al.: PEAL2 Final Report. RSPEAL2-FR-001 (2009)
[11] Bernat, G., Colin, A., Esteves, J., Garcia, G., Moreno, C., Holsti, N.: Considerations on the LEON cache effects on the timing analysis of onboard applications. DASIA: Proceedings of the Data Systems In Aerospace Conference (2008)
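As an illustration of the software cache partitioning discussed in Section 5.1.2, the following Python sketch shows how a memory address maps to a piece of the cache and why a contiguous memory area offers only 1/N of its bytes to a given piece. It is a simplified model under stated assumptions, not the tooling used in the project: the names `WAY_SIZE`, `NUM_SLICES`, `cache_slice` and `usable_bytes` and the base address 0x40000000 are hypothetical, the sizes are those of the 1 Kbyte, 4-piece example, and line size and replacement policy are ignored.

```python
# Hypothetical parameters taken from the example in Section 5.1.2:
# one cache way of 1 KiB split into 4 software pieces of 256 bytes.
WAY_SIZE = 1024
NUM_SLICES = 4
SLICE_SIZE = WAY_SIZE // NUM_SLICES


def cache_offset(addr: int) -> int:
    """Offset inside a cache way that a memory address maps to."""
    return addr % WAY_SIZE


def cache_slice(addr: int) -> int:
    """Index of the cache piece (software partition) an address maps to."""
    return cache_offset(addr) // SLICE_SIZE


def usable_bytes(base: int, length: int, slice_index: int) -> int:
    """Count the bytes of [base, base + length) that map to one piece."""
    return sum(1 for a in range(base, base + length)
               if cache_slice(a) == slice_index)


if __name__ == "__main__":
    # Addresses one way-size apart land in the same piece: prints "1 1".
    print(cache_slice(0x40000100), cache_slice(0x40000500))
    # A contiguous 4 KiB area offers a quarter of its bytes to piece 2:
    # prints 1024.
    print(usable_bytes(0x40000000, 4096, 2))
```

In this model, code of a given partition would have to be linked only at addresses for which `cache_slice` returns that partition's index, which is what the generated linker file would have to enforce.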

S-ar putea să vă placă și

- Basic Data Lbii Basic Data LB Ii - 1997!02!17Document29 paginiBasic Data Lbii Basic Data LB Ii - 1997!02!17Polinho Donacimento75% (4)

- Introduction to Reliable and Secure Distributed ProgrammingDe la EverandIntroduction to Reliable and Secure Distributed ProgrammingÎncă nu există evaluări

- Microcontroller Interview Questions and Answers PDFDocument8 paginiMicrocontroller Interview Questions and Answers PDFbalaji_446913478Încă nu există evaluări

- A System Bus Power: Systematic To AlgorithmsDocument12 paginiA System Bus Power: Systematic To AlgorithmsNandhini RamamurthyÎncă nu există evaluări

- Tampere University of TechnologyDocument18 paginiTampere University of TechnologyDanilo OliveiraÎncă nu există evaluări

- OS Modules SummaryDocument6 paginiOS Modules SummaryshilpasgÎncă nu există evaluări

- A Survey On Cache Management Mechanisms For Real-Time Embedded SystemsDocument35 paginiA Survey On Cache Management Mechanisms For Real-Time Embedded SystemsAtulÎncă nu există evaluări

- MSC Exam For Feature Use: Non-Preemptive and Preemptive Scheduling AlgorithmDocument6 paginiMSC Exam For Feature Use: Non-Preemptive and Preemptive Scheduling AlgorithmAddisu DessalegnÎncă nu există evaluări

- MSC Exam For Feature Use: Non-Preemptive and Preemptive Scheduling AlgorithmDocument6 paginiMSC Exam For Feature Use: Non-Preemptive and Preemptive Scheduling AlgorithmLens NewÎncă nu există evaluări

- Checkpoint-Based Fault-Tolerant Infrastructure For Virtualized Service ProvidersDocument8 paginiCheckpoint-Based Fault-Tolerant Infrastructure For Virtualized Service ProvidersDevacc DevÎncă nu există evaluări

- Pal A Charla 1997Document13 paginiPal A Charla 1997Gokul SubramaniÎncă nu există evaluări

- Non Inclusive CachesDocument10 paginiNon Inclusive CachesJohnÎncă nu există evaluări

- Ieee ICDCS90Document8 paginiIeee ICDCS90ayaan khanÎncă nu există evaluări

- SJB Institute of TechnologyDocument18 paginiSJB Institute of TechnologySrisha UralaÎncă nu există evaluări

- Bcs Higher Education Qualifications BCS Level 6 Professional Graduate Diploma in IT April 2011 Examiners' Report Distributed & Parallel SystemsDocument7 paginiBcs Higher Education Qualifications BCS Level 6 Professional Graduate Diploma in IT April 2011 Examiners' Report Distributed & Parallel SystemsOzioma IhekwoabaÎncă nu există evaluări

- Assignment4-Rennie RamlochanDocument7 paginiAssignment4-Rennie RamlochanRennie RamlochanÎncă nu există evaluări

- Data Similarity-Aware Computation Infrastructure For The CloudDocument14 paginiData Similarity-Aware Computation Infrastructure For The CloudAjay TaradeÎncă nu există evaluări

- N Fficient Multiprocessor Emory Management Framework Using Multi AgentsDocument17 paginiN Fficient Multiprocessor Emory Management Framework Using Multi AgentscseijÎncă nu există evaluări

- CCT Unit - 1Document26 paginiCCT Unit - 1vedha0118Încă nu există evaluări

- High-Performance Linux Cluster Monitoring Using Java: Curtis Smith and David Henry Linux Networx, IncDocument14 paginiHigh-Performance Linux Cluster Monitoring Using Java: Curtis Smith and David Henry Linux Networx, IncFabryziani Ibrahim Asy Sya'baniÎncă nu există evaluări

- Assignment1-Rennie Ramlochan (31.10.13)Document7 paginiAssignment1-Rennie Ramlochan (31.10.13)Rennie RamlochanÎncă nu există evaluări

- Abrar HHG Gjugh KGJGHDocument23 paginiAbrar HHG Gjugh KGJGHrayedkhanÎncă nu există evaluări

- Icst 1011Document6 paginiIcst 1011International Jpurnal Of Technical Research And ApplicationsÎncă nu există evaluări

- Computer Science Notes 722Document3 paginiComputer Science Notes 722Biplap HomeÎncă nu există evaluări

- Component Based On Embedded SystemDocument6 paginiComponent Based On Embedded Systemu_mohitÎncă nu există evaluări

- Lecture 2.0 - Issues in Design of Distributed SystemDocument14 paginiLecture 2.0 - Issues in Design of Distributed SystemLynn Cynthia NyawiraÎncă nu există evaluări

- Chapter11.Real Time SystemsDocument29 paginiChapter11.Real Time SystemsAllan WachiraÎncă nu există evaluări

- Timing Analysis: in Search of Multiple ParadigmsDocument4 paginiTiming Analysis: in Search of Multiple Paradigmsvimal_raj205Încă nu există evaluări

- Aca Unit5Document13 paginiAca Unit5karunakarÎncă nu există evaluări

- Components in Real-Time SystemsDocument12 paginiComponents in Real-Time SystemsSupervis0rÎncă nu există evaluări

- Scheduling in Distributed SystemsDocument9 paginiScheduling in Distributed SystemsArjun HajongÎncă nu există evaluări

- Strategy For Power Efficient Design of PDocument8 paginiStrategy For Power Efficient Design of PsingarayyaswamyÎncă nu există evaluări

- User SandboxDocument11 paginiUser SandboxTantawyÎncă nu există evaluări

- Fuss, Futexes and Furwocks: Fast Userlevel Locking in LinuxDocument19 paginiFuss, Futexes and Furwocks: Fast Userlevel Locking in LinuxSnehansu Sekhar SahuÎncă nu există evaluări

- Crash-Only SoftwareDocument6 paginiCrash-Only SoftwareJeremySharpÎncă nu există evaluări

- Mod 4Document19 paginiMod 4PraneethÎncă nu există evaluări

- ES II AssignmentDocument10 paginiES II AssignmentRahul MandaogadeÎncă nu există evaluări

- RTESDocument7 paginiRTESDevika KachhawahaÎncă nu există evaluări

- DownloadDocument23 paginiDownloadShankar NarayanaÎncă nu există evaluări

- An Adaptive Programming Model For Fault-Tolerant Distributed ComputingDocument14 paginiAn Adaptive Programming Model For Fault-Tolerant Distributed ComputingieeexploreprojectsÎncă nu există evaluări

- Flexible Rollback Recovery in Dynamic Heterogeneous Grid ComputingDocument13 paginiFlexible Rollback Recovery in Dynamic Heterogeneous Grid ComputingKiran GhantaÎncă nu există evaluări

- Communication and ConcurrencyDocument3 paginiCommunication and ConcurrencyAmit SahaÎncă nu există evaluări

- A Study of Software Multithreading in DistributedDocument26 paginiA Study of Software Multithreading in DistributedPalanikumarÎncă nu există evaluări

- Operating SystemDocument18 paginiOperating SystemGROOT GamingYTÎncă nu există evaluări

- Aad4 PDFDocument19 paginiAad4 PDFshafikÎncă nu există evaluări

- Marsh, Scott, Leblanc, Markatos - First-Class User-Level ThreadsDocument12 paginiMarsh, Scott, Leblanc, Markatos - First-Class User-Level ThreadsJoaquin Lino de la TorreÎncă nu există evaluări

- HOMEWORK 213 Lovely Professional UniversityDocument4 paginiHOMEWORK 213 Lovely Professional UniversityJasminder PalÎncă nu există evaluări

- LocusDocument26 paginiLocusprizzydÎncă nu există evaluări

- Eliminating Routing Congestion Issues With Logic SynthesisDocument7 paginiEliminating Routing Congestion Issues With Logic SynthesisCharan TejaÎncă nu există evaluări

- Performance Evaluation of Overload Control in Multi-Cluster GridsDocument8 paginiPerformance Evaluation of Overload Control in Multi-Cluster GridsMike GordonÎncă nu există evaluări

- 326 1262 1 PB Distributed ComputingDocument51 pagini326 1262 1 PB Distributed Computingmaster2020Încă nu există evaluări

- Patterns To Ease The Port of Micro-Kernels in Embedded SystemsDocument16 paginiPatterns To Ease The Port of Micro-Kernels in Embedded SystemsTommy YenÎncă nu există evaluări

- Processes: Distributed Systems Principles and ParadigmsDocument78 paginiProcesses: Distributed Systems Principles and ParadigmsZain HamzaÎncă nu există evaluări

- Chapter - 1: Objective StatementDocument73 paginiChapter - 1: Objective StatementMithun Prasath TheerthagiriÎncă nu există evaluări

- Research Papers On Deadlock in Distributed SystemDocument7 paginiResearch Papers On Deadlock in Distributed Systemgw219k4yÎncă nu există evaluări

- OS Question Bank 5/8888Document15 paginiOS Question Bank 5/8888Harsh KumarÎncă nu există evaluări

- Answers Chapter 1 To 4Document4 paginiAnswers Chapter 1 To 4parameshwar6Încă nu există evaluări

- Research Paper PDFDocument6 paginiResearch Paper PDFNemraÎncă nu există evaluări

- SEM 3 BC0042 1 Operating SystemsDocument27 paginiSEM 3 BC0042 1 Operating Systemsraju_ahmed_37Încă nu există evaluări

- Temporal QOS Management in Scientific Cloud Workflow SystemsDe la EverandTemporal QOS Management in Scientific Cloud Workflow SystemsÎncă nu există evaluări

- Digital Control Engineering: Analysis and DesignDe la EverandDigital Control Engineering: Analysis and DesignEvaluare: 3 din 5 stele3/5 (1)

- Design and Test Strategies for 2D/3D Integration for NoC-based Multicore ArchitecturesDe la EverandDesign and Test Strategies for 2D/3D Integration for NoC-based Multicore ArchitecturesÎncă nu există evaluări

- LED Brightness Control Using PWM of LPC2138: ESD Lab Mini-ProjectDocument8 paginiLED Brightness Control Using PWM of LPC2138: ESD Lab Mini-ProjectAditya GadgilÎncă nu există evaluări

- Full Datasheet STi7105 PDFDocument313 paginiFull Datasheet STi7105 PDFtaxakasanÎncă nu există evaluări

- FPGA Based Stepper Motor Control Using Labview GUI TechniquesDocument5 paginiFPGA Based Stepper Motor Control Using Labview GUI TechniquesMADDYÎncă nu există evaluări

- Operating Systems CHAPTER 1 COMPLETE SOLUTION MADE BY ABHISHEK KUMARDocument9 paginiOperating Systems CHAPTER 1 COMPLETE SOLUTION MADE BY ABHISHEK KUMARAbhishemÎncă nu există evaluări

- Manual Eprom EngDocument5 paginiManual Eprom EngJames Tiberius KirkyÎncă nu există evaluări

- Configuratie LaptopDocument111 paginiConfiguratie LaptopAlex GrigorasÎncă nu există evaluări

- Instructions Set For 8085 and 8086Document15 paginiInstructions Set For 8085 and 8086Shanmugi VinayagamÎncă nu există evaluări

- Datasheet EEPROM W27C010-70 (128K)Document15 paginiDatasheet EEPROM W27C010-70 (128K)vanmarteÎncă nu există evaluări

- ATF16V8B, ATF16V8BQ, and ATF16V8BQL: FeaturesDocument27 paginiATF16V8B, ATF16V8BQ, and ATF16V8BQL: FeaturesSimilinga MnyongeÎncă nu există evaluări

- Instruction Sets: Addressing Modes and FormatsDocument13 paginiInstruction Sets: Addressing Modes and Formatsما هذا الهراءÎncă nu există evaluări

- MultiBeast Features 5.1.0Document10 paginiMultiBeast Features 5.1.0Marcia AndreaÎncă nu există evaluări

- STADocument123 paginiSTAMoon Sadia DiptheeÎncă nu există evaluări

- Ecad Lab ManualDocument55 paginiEcad Lab Manualjeravi84100% (3)

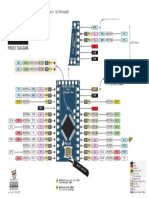

- ArduinoProMini PinoutDocument1 paginăArduinoProMini Pinout_gekidoÎncă nu există evaluări

- Clock Concurrent Opt WPDocument15 paginiClock Concurrent Opt WPkartimidÎncă nu există evaluări

- Assembly Language Programming:: 8085 Program To Add Two 8 Bit NumbersDocument9 paginiAssembly Language Programming:: 8085 Program To Add Two 8 Bit NumbersAnkit ratan pradhanÎncă nu există evaluări

- ISP1040C Intelligent SCSI Processor: Data SheetDocument4 paginiISP1040C Intelligent SCSI Processor: Data SheetKSÎncă nu există evaluări

- Digital Electronics (Register)Document30 paginiDigital Electronics (Register)Rohit MauryaÎncă nu există evaluări

- Command Line Assembly Language Programming For Arduino Tutorial 2Document10 paginiCommand Line Assembly Language Programming For Arduino Tutorial 2Kalaignan RajeshÎncă nu există evaluări

- Ica QBDocument10 paginiIca QBNaresh BopparathiÎncă nu există evaluări

- FemtoRV32 Piplined Processor ReportDocument25 paginiFemtoRV32 Piplined Processor ReportRatnakarVarunÎncă nu există evaluări

- Nr321402-Microprocessor and InterfacingDocument8 paginiNr321402-Microprocessor and InterfacingSRINIVASA RAO GANTAÎncă nu există evaluări

- 68HC11 Introduction.: Bits and BytesDocument22 pagini68HC11 Introduction.: Bits and BytesmohanaakÎncă nu există evaluări

- JOYPADDocument1 paginăJOYPADVladimir PopovicÎncă nu există evaluări

- Nintendo Entertainment System ArchitectureDocument10 paginiNintendo Entertainment System ArchitectureAlainleGuirec100% (1)

- Computer Science Notes: Computer Architecture & Fetch-Execute CycleDocument15 paginiComputer Science Notes: Computer Architecture & Fetch-Execute CycleShreyan GuptaÎncă nu există evaluări

- JaspergoldDocument20 paginiJaspergoldmuripaÎncă nu există evaluări

- TXS0102 2-Bit Bidirectional Voltage-Level Translator For Open-Drain and Push-Pull ApplicationsDocument47 paginiTXS0102 2-Bit Bidirectional Voltage-Level Translator For Open-Drain and Push-Pull Applicationskarthik4096Încă nu există evaluări