A Traffic Object Detection System for Road Traffic Measurement and Management

Carsten Dalaff, Institute for Transport Research, German Aerospace Center DLR, Berlin, Germany, Carsten.Dalaff@dlr.de

Ralf Reulke, Institute for Photogrammetry, Stuttgart University, Germany, Ralf.Reulke@ifp.uni-stuttgart.de

Axel Kroen, ISSP Consult, Stuttgart, Germany, kroen@stgt.ssp-consult.de

Thomas Kahl, ASIS GmbH, Berlin, Germany, Thomas.Kahl@asis-it.de

Martin Ruhe, Adrian Schischmanow, Gerald Schlotzhauer, Wolfram Tuchscheerer, German Aerospace Center DLR, Berlin, Germany, firstname.lastname@dlr.de

Abstract

OIS is a new Optical Information System for road traffic observation and management. The complete system architecture, from the sensor for automatic traffic detection up to the traffic light management for a wide area, is designed under the requirements of an intelligent transportation system. Particular features of this system are vision sensors with integrated computational and real-time capabilities, real-time algorithms for image processing, and a new approach for dynamic traffic light management for a single intersection as well as for a wide area. The developed real-time algorithms for image processing extract traffic data even at night and under bad weather conditions. This approach opens the opportunity to identify and specify each traffic object, its location, its speed and other important object information. Furthermore, the algorithms are able to identify accidents and non-motorized traffic such as pedestrians and bicyclists. Combining all these single pieces of information, the system creates new derived and consolidated information. This leads to a new and more complete view of the traffic situation at an intersection. Only in this way is a dynamic and near real-time traffic light management possible. To optimize wide area traffic management it is necessary to improve the modelling and forecasting of traffic flow. Information on the current Origin-Destination (OD) flow is therefore essential. Taking this into account, OIS also includes an approach for anonymous vehicle recognition. This approach is based on single object characteristics, the order of objects and forecast information, which will be obtained from intersection to intersection.

Keywords: Traffic observation, traffic control, sensor network, sensor fusion

1 Introduction

Traffic observation, control and real-time management are among the major components of future intelligent transportation systems (ITS). One central demand of the European Government is the increase of road safety: the number of people killed should be halved by the year 2010. There are nearly 41,900 road casualties and more than 1.7 million seriously injured persons each year in the European Union (EU). This causes about 45 billion Euro in direct costs and approximately 160 billion Euro in external costs per year. Every day, 4,000 km of traffic congestion burden the European highways alone. This corresponds to 10% of the complete European highway system. The economic damage is tremendous, but so far there is no common approach to calculating the real amount, so the official numbers differ. The necessary investments in the European transportation field will reach more than 10% of the EU gross national product (GNP). The financial means needed for the transport infrastructure of the acceding countries (e.g. Poland, Estonia) will increase this expense enormously. To realize just the priority projects in these countries, the EU has to spend 91 billion Euro up to 2015.

Image and Vision Computing NZ

Taking this into account, it seems effective to use part of this money for innovative approaches to traffic management in future intelligent transportation systems. With such an intelligent transportation system, road safety can be increased and the economic damage reduced. One appropriate approach could be the use of the traffic object detection system for road traffic measurement and management presented here. This system differs from state-of-the-art traffic measurement equipment, e.g. induction loops, which no longer suffices for the growing demands of transport research and traffic control.

The project OIS [1] uses optical and informational enabling technologies for automatic traffic data generation with an image processing approach. Its main purpose is to autonomously acquire and evaluate traffic image sequences from roadside cameras. Traffic parameters will be obtained from objects extracted and characterized in these image sequences. To meet these requirements, numerous image processing algorithms have been developed over more than 20 years (e.g. special issue [2]), using simple web cameras as well as more complex systems (e.g. [3]).

Traffic scene information can be used to optimize traffic flow at intersections during busy periods, to identify stalled vehicles and accidents, and to identify non-motorized traffic such as pedestrians and bicyclists. Additional contributions can be obtained for the determination of the Origin-Destination (OD) matrix. The OD matrix contains the information where and when the traffic participants start and end their trips and which routes they have chosen. The OD matrix is one basic element for optimized modelling and forecasting of traffic flow. The estimated traffic flow is necessary for dynamic wide area traffic control, management and travel guidance.
Furthermore, recent advances in computational hardware provide high computational power with fast networking facilities at an affordable price. The availability of specific solutions in the low-cost general-purpose range allows special image processing and avoids some basic bottlenecks.

A couple of traffic data measurement systems already exist. Best known is the induction loop. Induction loops are embedded in the pavement. They are able to measure the presence of a vehicle, its speed and a rough classification. This is only local information, whereas wide area traffic management needs broad coverage of the area. Another approach is based on the idea that moving vehicles transmit information about their position and velocity via mobile communication, e.g. GSM, to a traffic management center. These data are called Floating Car Data (FCD). To get an overview of the traffic situation of a complete city at any time, a huge number of vehicles has to be equipped with hardware and mobile communication units. To get reliable data, the current position and velocity of the vehicles is needed every minute. This causes enormous costs for mobile communication. The FCD approach provides spatial traffic information, but its spatial and time resolution does not fit the requirements of traffic signal control.

OIS is a new and innovative traffic observation system that opens the opportunity to deliver all necessary input for local traffic signal control as well as for a dynamic wide area traffic management system. The next challenge is the implementation of OIS across a wide area of a city.

2 System Requirements

A modern system for traffic control and real-time management has to meet the following requirements:

- Reliability under all illumination and weather conditions
- Non-stop operation, 24 hours a day, 7 days a week
- Complete overview of the intersection from at least 20 m in front to 20 m behind
- Working in real time (every half second a complete data set of the traffic situation) on an intersection

These requirements should be taken into account in the design of all parts of the system, which covers all procedures and processes from image data acquisition, image processing and traffic data retrieval up to traffic control. To realize a system that operates 24 hours a day and under different weather conditions, infrared cameras should be used. Algorithms had to be developed for a special camera arrangement (with real-time demands) for vehicle detection and for deriving relevant parameters for traffic description and control.

3 System Overview

To get a complete overview of the intersection from at least 20 m in front to 20 m behind, more than one camera is necessary. The number of required cameras depends on the intersection geometry and on the possibilities for installing cameras on house walls or lampposts. The time-synchronous image data acquisition from one observation point with different cameras is done in a so-called camera node. The camera node is part of the OIS philosophy and consists of different sensors, as a combination of VIS and IR cameras. To meet the real-time processing requirement, time-consuming image processing parts will be implemented in a special real-time unit. This approach is currently being implemented for two intersections in

Palmerston North, November 2003


Berlin, Germany, which are equipped with camera systems. The architecture of the complete system is shown in fig. 1.

Figure 1: System design for the test bed.

As shown in fig. 1, the camera nodes are part of a hierarchy and are linked via Internet or wireless LAN with the computer systems at the intersection and in the management center. Because of the limited data transfer rate and the possible failure of a system at an intersection, the camera node works independently. Image processing is decentralized and is done in the camera node, so that only objects and object features are transmitted. Together with error information, the object data are collected on the next level of the hierarchy. For synchronization purposes, time signals can be incorporated over a network or from independently received signals (like GPS).

The hierarchy starts with camera nodes and continues with junctions, subregions, etc. The next level, the so-called junction level, unifies all cameras observing the same junction. Starting with the information from the camera level, relevant data sets are fused in order to identify the same objects in different images. After that, these objects are tracked as long as they are visible, so that traffic flow parameters (e.g. velocity, traffic jams, car tracks) can be retrieved. Level 3, the so-called region level, uses the traffic flow parameters extracted on the lower level and feeds these data into traffic models in order to control the traffic (e.g. by switching the traffic lights). Additional levels can be inserted. For test applications the camera node will generate a compressed data stream. In the typical working mode, only object features in an image are transmitted and collected in a computer of the hierarchy.

Generally, optical sensor systems in the visible and near-infrared range of the electromagnetic spectrum have reached a very high quality standard, which meets the requirements even of high-level scientific and commercial tasks, above all concerning radiometric and geometric resolution and data rate. In contrast, sensors working in the thermal infrared (TIR) range are still a research topic for traffic applications. Technology development over the next few years will not be focused on higher resolutions or faster read-outs, because for most applications the performance of these sensors is sufficient. The emphasis will be put on smart, intelligent sensor systems with different measurement parameters (e.g. different resolutions, different spectral sensitivities), which are connected within a network similar to the Internet and are able not only to convert the incoming physical signals to digital data but also to process them into the information the user needs. Therefore, image fusion as well as fast and reliable algorithms are needed, preferably near the sensor itself. Real-time processing and programmable circuits will play an important role.

4 Hardware Concept

The hardware concept is oriented towards the requirements described above. The system has to operate 24 hours a day, 7 days a week, and to observe the whole junction and at least 20 m of all related streets. To improve the opportunities for image acquisition, more than one camera system should be in use at one observation standpoint. Such a camera node meets the first requirement with a high (spatial) resolution camera and a low resolution thermal infrared camera. Stereo and distance measurements are also possible with two identical cameras. The camera node is able to acquire data from up to four cameras in a synchronized mode. It has real-time data processing capabilities and allows synchronous capture of data from GPS and INS (inertial navigation system) units, which have to be mounted externally. Because of limitations on camera observing positions and possible occlusions (e.g. from buildings, cars and other disturbing objects), intersection observation mostly requires more than one standpoint for a camera node (camera system). Communication or data transmission between the camera nodes and the computer on the next higher hierarchy level therefore becomes critical. To reduce the data volume in the network, the real-time data processing capability of the camera node is used to speed up image processing and to transmit only object data. For the real-time unit a hardware implementation was chosen. Large freely programmable logic gate arrays (FPGAs) are now available. A programming language (VHDL) is available, and different image processing algorithms have been implemented.
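The benefit of transmitting only object data can be illustrated with a small sketch. It is not the OIS message format: the record fields, the JSON encoding and the node name are assumptions made for illustration, and the 720x576 frame size is the RGB camera resolution quoted later in the paper, here assumed to be stored with one byte per pixel.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class TrafficObject:
    """One detected object as a camera node might report it (hypothetical fields)."""
    object_type: str    # e.g. "car", "truck", "cyclist", "pedestrian"
    x_m: float          # position in world coordinates, metres
    y_m: float
    length_m: float
    width_m: float
    speed_mps: float
    heading_deg: float


def encode_object_list(node_id, timestamp_s, objects):
    """Serialize one object list to compact JSON bytes (assumed wire format)."""
    message = {
        "node": node_id,
        "t": timestamp_s,
        "objects": [asdict(obj) for obj in objects],
    }
    return json.dumps(message, separators=(",", ":")).encode("utf-8")


def raw_frame_bytes(width_px, height_px, bytes_per_pixel=1):
    """Size of one uncompressed image frame."""
    return width_px * height_px * bytes_per_pixel


objects = [
    TrafficObject("car", 12.4, 3.1, 4.5, 1.8, 8.3, 92.0),
    TrafficObject("pedestrian", 20.0, -6.2, 0.5, 0.5, 1.4, 180.0),
]
payload = encode_object_list("node-1", 0.5, objects)
frame_size = raw_frame_bytes(720, 576)
print(len(payload), "bytes of object data versus", frame_size, "bytes for one raw frame")
```

Even with a verbose text encoding, an object list of this kind stays far below the size of a single uncompressed frame, which is the motivation for doing the image processing inside the camera node.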

5 Data Processing

The processing of data in the sensor web follows successive steps. In the first step, image data are generated and pre-processed. After that, the objects are extracted from the images. In the last step, all the object features from different camera nodes are collected, unified and processed into traffic information. The data processing is adapted to the logical design of the system. There are operations for system configuration, for operational work, and for data mining and visualization. The results are new data and information. A complex data management system regulates the access, transmission, handling and analysis of the data.


5.1 Image Processing

The essential processing steps are the elimination of noise and systematic errors, compression/decompression, higher level image processing, and spatial (geo-) and time referencing. The last point is necessary to determine space and time coordinates of observed objects as an essential feature for data fusion.

Most of the work on vehicle detection or recognition was done on ground images, mainly as pre-processing before tracking for surveillance or traffic applications [4]. Different approaches, e.g. finding edges [5] or deformable vehicle models [?], can be found. Generally, there are problems in image processing with car occlusion [6] and shadows [7]. Besides stationary image acquisition from the ground, images from moving platforms and aerial images [8] are also used. Image processing approaches are also used for detecting lanes and obstacles by fusing information [9].

Image processing for OIS was described in detail in [1]. The main processing is a classical object detection and identification task. The following major problems have to be solved:

- Object discrimination from a spatially and temporally variable background (crossing, street, buildings, etc.)
- Removing disturbing structures between object and camera, as well as shadow regions around the object
- Identification of cars in a row which are occluded by others

The first problem can be solved in at least two different ways:

1. working with image sequences in which the preceding image is subtracted from each image, or
2. following the time-changing background.

With the first approach the background can be eliminated very simply, but stalled vehicles are invisible. The other approach is an ongoing update of the background. This requires much more effort, but allows the detection of both moving and stalled cars. As a result of this procedure, the background can be subtracted from the current image and objects can be derived as shown in fig. 2. Additional morphological operations remove clutter and close object structures. The right image shows the grey coded objects after labelling.
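The second approach, an ongoing background update followed by subtraction, can be sketched as follows. The paper does not state the update rule, so the selective running average used here, the threshold and the learning rate `alpha` are assumptions chosen for illustration; images are plain nested lists of grey values, and the morphological clean-up and labelling steps are not shown.

```python
def foreground_mask(background, frame, threshold=25):
    """Mark pixels whose grey value differs strongly from the background."""
    return [
        [abs(f - b) > threshold for b, f in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


def update_background(background, frame, mask, alpha=0.05):
    """Blend the frame into the background, but only at non-object pixels.

    Skipping object pixels keeps a stalled vehicle from being absorbed
    into the background model.
    """
    return [
        [b if is_object else b + alpha * (f - b)
         for b, f, is_object in zip(bg_row, fr_row, mask_row)]
        for bg_row, fr_row, mask_row in zip(background, frame, mask)
    ]


# Tiny 2x3 example: the scene brightens slightly and one pixel holds a vehicle.
background = [[100.0, 100.0, 100.0], [100.0, 100.0, 100.0]]
frame = [[110, 110, 200], [110, 110, 110]]

mask = foreground_mask(background, frame)
background = update_background(background, frame, mask)
print(mask)
```

In this sketch the slow illumination change is followed by the background model, while the bright vehicle pixel is flagged as an object and left out of the update. Simple frame differencing (the first approach) would lose that pixel as soon as the vehicle stopped moving.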

The other major problem occurs after detecting the object. To determine size and shape, disturbing effects like shadows have to be removed. A straightforward way is the analysis of grey and color values, as well as texture, within the found object boundaries.

Figure 2: Object detection in a traffic scene (left: original image, right: processed image).

5.2 Data and object fusion

To ensure a system operating 24 hours a day, even under bad weather conditions (e.g. rain, fog) and at night, the fusion of a visible and an IR sensor is a promising approach. The fusion is possible at different stages of information processing:

- data level (e.g. image data from different cameras)
- object level (objects extracted from the image data)
- information level

Image matching and registration, as one part of the data fusion, is a procedure that determines the best spatial fit between two or more images acquired at the same time and depicting the same scene, by identical or different sensors. To fuse the different images and/or object data, time synchronization is necessary. Time synchronization can be realized by internal clocks (e.g. computer) or external time information (e.g. GPS). For the application of OIS we merge images on the camera node level and fuse the position information and object features on the junction or region level. The object information is fed in from different camera nodes. Both procedures are explained in more detail below.

Fusion on data level: For 24-hour observation, typical CCD cameras fail because of limited illumination of the objects. Car headlights and rear lamps do not seem to be sufficient. To overcome this problem, the self-radiation of the car, which has a maximum in the thermal infrared (TIR) spectral region, can be used. There are a number of different detectors sensitive in this spectral range. Most of the sensors are expensive and need additional cooling. Recent developments show that bolometer arrays are a candidate for cheaper, uncooled detector arrays. Therefore, a camera development was started which gives full access to the sensor, control, data correction and data flow.

First experiments were done with a commercial system. An example of the data and the fusion of both on data level is shown in fig. 3. The observation was made in the late afternoon. The left image is a typical CCD image. The contrast becomes smaller; only reflections from sun glitter on car roofs are visible. The middle image was taken with a bolometer sensor. The whole intersection and the street are visible. The right image is the fusion of both. For visualization, the grey level image was put in the green channel and the TIR image in the red. To merge the visible image and the IR image, an affine transformation was used. Fusing data from different sources is an obvious step, but it needs spatial and time synchronization because of the different imaging systems and observation conditions. For this example, the synchronization task is based on manual procedures, such as finding equivalent points in both IR and VIS images and calculating the necessary transformation. The automatic spatial synchronization in particular is a research topic. All these operations are done in each camera node. Another advantage of TIR images is shown by the following image pair, which was taken from the same observation point, but at daytime.

Figure 3: Sensor fusion of VIS and IR images (I).

Figure 4: Sensor fusion of VIS and IR images (II).

Fig. 4 shows an example of fusing RGB and TIR images at daytime. After coregistering the IR image to the RGB image by applying an affine transform, a direct comparison is possible. In contrast to fig. 3, the infrared image was fit directly into the grey level image (a color separation of the RGB image). The RGB image has a much higher resolution (720x576 pixels) than the TIR image (320x240 pixels). Spatial and true color object data can be derived from the RGB image. The combination of both images gives a more complex result because of the thermal features, which can be observed on the engine bonnet and as reflected radiation from under the car. These are also new features for object detection and description within the image processing task.

Object data fusion: Occluded regions can only be analyzed with additional views of these objects. Because camera nodes always transmit object information, a data fusion process on object level is necessary. The object lists from different camera nodes have to be analyzed and unified. Object features like position, size and shape vary with the view angle. Therefore the object features from different perspectives have to be compared. The result of this operation is one traffic object with an exact position, size, shape, etc. This operation assumes image data that are synchronized in time and space. The sequential processing of this list allows the derivation of more detailed information, e.g. tracks of the traffic participants, removal of erroneous objects, etc.

5.3 Data Acquisition and Georeferencing

Transformation from image to world coordinates is essential in order to calculate metric traffic data. Standard photogrammetric procedures for the transformation of coordinates within monocular images are used. Basic assumptions are well distributed and accurately measured ground control points (GCPs) in object and image space, as well as an exact camera calibration. The GCPs are determined via DGPS in WGS84 and UTM projection. The calculated camera calibration parameters (interior and exterior orientation) and the image coordinates are the input for the transformation equations. Because the images are acquired monocularly, the object or vehicle positions have to be projected onto an XY-plane in object space. The vehicle positions projected onto that plane depend on the camera distance, the camera declination and the position of the point within the vehicle that represents its position. Once the camera calibration is set, the vehicle positions can be transformed to world coordinates within image sequences until the camera position changes. Additionally, intersection geometry, for example lanes, can be transformed from image into object space.
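The projection of image positions onto an XY-plane in object space, as described for the georeferencing step (transformation from image to world coordinates), can be illustrated with a simplified stand-in. Instead of the full photogrammetric model with interior and exterior orientation used in the paper, the sketch below fits a plane-to-plane projective transformation (homography) to exactly four ground control points. All function names and coordinates are hypothetical.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("control points are degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_homography(image_pts, ground_pts):
    """Fit the 8 homography parameters from four (u, v) -> (X, Y) pairs."""
    a, b = [], []
    for (u, v), (x, y) in zip(image_pts, ground_pts):
        a.append([u, v, 1, 0, 0, 0, -u * x, -v * x])
        b.append(x)
        a.append([0, 0, 0, u, v, 1, -u * y, -v * y])
        b.append(y)
    return solve_linear(a, b) + [1.0]


def image_to_ground(h, u, v):
    """Map an image point to ground-plane coordinates."""
    w = h[6] * u + h[7] * v + h[8]
    return ((h[0] * u + h[1] * v + h[2]) / w,
            (h[3] * u + h[4] * v + h[5]) / w)


# Hypothetical GCPs: pixel coordinates and local ground coordinates in metres.
image_pts = [(0, 0), (100, 0), (100, 100), (0, 100)]
ground_pts = [(0.0, 0.0), (10.0, 0.0), (12.0, 20.0), (-2.0, 20.0)]
h = fit_homography(image_pts, ground_pts)
print(image_to_ground(h, 50, 50))
```

As in the paper, the mapping is only valid for points that actually lie on the reference plane, and it has to be refitted whenever the camera position changes.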

5.4 Calculation of Traffic Characteristics

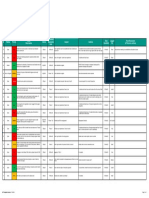

Fig. 5 shows the general traffic characteristics calculation process.

Figure 5: General traffic characteristics calculation process.

The image processing (not shown in the figure) cyclically delivers the parameters of all identified traffic objects such as cars, trucks, cyclists and pedestrians in traffic object lists (TO Lists), containing type, size, speed, direction, geographic location etc. of the objects for a certain sample time. A storage procedure (TO Storage) writes this raw traffic data into a database for further processing. A tracking procedure (TO Tracking) marks traffic objects appearing in consecutive time samples with a unique object identification. Thus, traffic objects can be followed throughout the observed traffic area. The tracked traffic objects and their parameters are stored in the Tracked TO Data area of the database. Using this data, the Tracking Characteristics Calculation module computes traffic characteristics, e.g. traffic density or flow rates.

To compute the traffic density, the number of motor vehicles on a certain road segment is needed. Using their geographical coordinates and direction, all motor vehicles moving along a road segment are selected from the database. By simply counting these vehicles and scaling their number to a one-kilometre segment, the traffic density is obtained. Flow rates can be determined by counting the number of cars crossing a defined cross-section. Based on the traffic object parameter set over a number of sample times and using the tracking information, the number of vehicles crossing the section is counted, and a traffic flow measure, e.g. in vehicles per hour, is obtained.
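The density and flow computations described above reduce to counting and scaling. The following minimal sketch assumes that each vehicle position has already been reduced to a distance in metres along the road axis and that tracks are keyed by the unique object identification. These simplifications and all names are assumptions for illustration, not part of the OIS implementation.

```python
def traffic_density(positions_m, segment_start_m, segment_end_m):
    """Vehicles per kilometre on the segment [start, end)."""
    length_km = (segment_end_m - segment_start_m) / 1000.0
    count = sum(1 for s in positions_m if segment_start_m <= s < segment_end_m)
    return count / length_km


def flow_rate(tracks, section_m, observation_s):
    """Vehicles per hour crossing the cross-section at section_m.

    tracks maps an object id to its positions (metres along the road)
    in consecutive time samples; each vehicle is counted at most once.
    """
    crossings = 0
    for positions in tracks.values():
        for before, after in zip(positions, positions[1:]):
            if before < section_m <= after:
                crossings += 1
                break
    return crossings * 3600.0 / observation_s


# One sample: five vehicles, four of them on the 50 m segment from 0 m to 50 m.
density = traffic_density([5.0, 12.0, 30.0, 48.0, 70.0], 0.0, 50.0)

# Three tracked vehicles over three samples taken every half second (1 s in total).
tracks = {1: [10.0, 18.0, 26.0], 2: [20.0, 24.0, 28.0], 3: [0.0, 5.0, 10.0]}
flow = flow_rate(tracks, section_m=25.0, observation_s=1.0)
print(round(density), "vehicles/km,", round(flow), "vehicles/h")
```

Counting crossings per tracked object rather than per sample is what makes the tracking information necessary: without a stable object identification, a vehicle near the cross-section could be counted more than once.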

6 An Adaptive and High Dynamic Network Control

The video based traffic sensor developed in this project creates possibilities for new concepts of traffic control for intersections and wide area networks. This approach also includes the development of new dynamic and adaptive traffic control models for traffic lights. The state of the art in traffic observation is the induction loop. Induction loops are embedded in the pavement and register about 1-2 m. This kind of sensor is able to measure the presence of a vehicle, its speed and a rough classification. In a next processing step it is possible to calculate time intervals between vehicles. This data is needed to control traffic light signals at intersections. For a truly dynamic and demand based traffic signal control, the data of numerous induction loops on one single intersection would be needed. This is neither efficient nor realizable.

The OIS sensor web offers a new kind of traffic data and information. It is based on so-called traffic-actuated signals. This means that the system automatically detects information about the real-time traffic situation at an intersection, for example the length of the queue for all different lanes, the traffic flow at the intersection, the density of traffic and the current velocity of each vehicle. The OIS sensor web automatically processes data such as position and speed vectors of each vehicle, queue length and other relevant features. This leads to a complete and real-time overview of at least 20 m before and behind an intersection. Based on this new quality of data, new approaches are developed to control traffic light signals on dynamic demand.

At the moment most traffic lights are controlled in one of two ways:

1. control by fix-time signals
2. control by so-called actuated signals

Fix-time signals means that green and red times are fixed over time and independent of the actual situation at the intersection. Actuated signals means that a number of fix-time signal plans are used for different demands and situations. At this time there are nearly no sensor webs or measurement systems available that are able to measure each object at an intersection and that provide all necessary spatial information for a truly dynamic traffic control system. OIS is a system that is able to acquire features like size, shape and other object features to classify and identify traffic objects. In summary, OIS gives complete information (an overview) over a whole intersection.

The project is funded by the German government (Federal Ministry of Education and Research, registration number: 03WKJ02B).

References

[1] R. Reulke, A. Börner, H. Hetzheim, A. Schischmanow, and H. Venus. A sensor web for road-traffic observation. In Image and Vision Computing, New Zealand 2002, pages 293-298, 2002.

[2] A. Broggi and E. D. Dickmanns. Applications of computer vision to intelligent vehicles. Image and Vision Computing, 18(5):365-366, April 2000.

[3] F. Pedersini, A. Sarti, and S. Tubaro. Multicamera parameter tracking. IEE Proceedings Vision, Image and Signal Processing, 148(1):70-77, February 2001.

[4] M. Betke, E. Haritaoglu, and L. S. Davis. Real-time multiple vehicle detection and tracking from a moving vehicle. Machine Vision and Applications, 12(2):69-83, 2000.

[5] J. Canny. A computational approach to edge detection. PAMI, 8:679-698, 1986.

[6] I. Ikeda, S. Ohnaka, and M. Mizoguchi. Traffic measurement with a roadside vision system - individual tracking of overlapped vehicles. In Proceedings of the 13th International Conference on Pattern Recognition, pages 859-864, 1996.

[7] G. S. K. Fung, Nelson H. C. Yung, Grantham K. H. Pang, and Andrew H. S. Lai. Effective moving cast shadow detection for monocular color traffic image sequences. Optical Engineering, 41:1425-1440, June 2002.

[8] R. Chellappa. An integrated system for site model supported monitoring of transportation activities in aerial images. In Proceedings of the 1996 ARPA Image Understanding Workshop, pages 275-304, 1996.

[9] M. Beauvais and S. Lakshmanan. Clark: a heterogeneous sensor fusion method for finding lanes and obstacles. Image and Vision Computing, 18(5):3974, April 2000.


- Paper15 GESTION TRAFICODocument5 paginiPaper15 GESTION TRAFICOEusÎncă nu există evaluări

- Traffic Information System: Presented By: Anil Kr. Chhotu (11ID60R06) Pranav Mishra (11ID60R20)Document28 paginiTraffic Information System: Presented By: Anil Kr. Chhotu (11ID60R06) Pranav Mishra (11ID60R20)Pranav MishraÎncă nu există evaluări

- Document (Bilevel Feature Extraction)Document11 paginiDocument (Bilevel Feature Extraction)Gateway ManagerÎncă nu există evaluări

- Research On The Integrity Monitoring of GPS Vehicle SystemDocument4 paginiResearch On The Integrity Monitoring of GPS Vehicle SystemfranzkilaÎncă nu există evaluări

- A Mobile Cloud ComputingDocument81 paginiA Mobile Cloud ComputingVPLAN INFOTECHÎncă nu există evaluări

- RSC Week2Document5 paginiRSC Week2sahilsharan2704Încă nu există evaluări

- Andrei Mihai Iulian PROIECTDocument12 paginiAndrei Mihai Iulian PROIECTMihai AndreiÎncă nu există evaluări

- Tracking and Counting of Vehicles For Flow Analysis From Urban Traffic VideosDocument6 paginiTracking and Counting of Vehicles For Flow Analysis From Urban Traffic VideosjuampicÎncă nu există evaluări

- A Literature Survey On "Density Based Traffic Signal System Using Loadcells and Ir Sensors"Document3 paginiA Literature Survey On "Density Based Traffic Signal System Using Loadcells and Ir Sensors"hiraÎncă nu există evaluări

- A Novel Methodology For Traffic Monitoring and Efficient Data Propagation in Vehicular Ad-Hoc NetworksDocument10 paginiA Novel Methodology For Traffic Monitoring and Efficient Data Propagation in Vehicular Ad-Hoc NetworksInternational Organization of Scientific Research (IOSR)Încă nu există evaluări

- Intelligent Infrastructure For Next Generation Rail Systems PDFDocument9 paginiIntelligent Infrastructure For Next Generation Rail Systems PDFNalin_kantÎncă nu există evaluări

- Smart Traffic Management Using Deep LearningDocument3 paginiSmart Traffic Management Using Deep LearningInternational Journal of Innovative Science and Research TechnologyÎncă nu există evaluări

- Traffic Data Collection and AnalysisDocument14 paginiTraffic Data Collection and AnalysisSimranjit Singh MarwahÎncă nu există evaluări

- Report 24 ReportDocument24 paginiReport 24 Reportsanket panchalÎncă nu există evaluări

- The Car As An Ambient Sensing PlatformDocument11 paginiThe Car As An Ambient Sensing PlatformECE HOD AmalapuramÎncă nu există evaluări

- Vehicle To Infrastructure Communication Different Methods and Technologies Used For ItDocument3 paginiVehicle To Infrastructure Communication Different Methods and Technologies Used For ItBargavi SrinivasÎncă nu există evaluări

- A Parking Management System Based On Wireless Sensor NetworkDocument10 paginiA Parking Management System Based On Wireless Sensor NetworkKlarizze GanÎncă nu există evaluări

- Density Based Smart Traffic Control System Using CDocument8 paginiDensity Based Smart Traffic Control System Using CTimÎncă nu există evaluări

- 216852Document30 pagini216852krishna reddyÎncă nu există evaluări

- Team 2 Smartcity Traffic ManagementDocument4 paginiTeam 2 Smartcity Traffic Managementchowdaryganesh36Încă nu există evaluări

- Project ITSDocument35 paginiProject ITSAmeerÎncă nu există evaluări

- Automatic Number Plate Recognition: Fundamentals and ApplicationsDe la EverandAutomatic Number Plate Recognition: Fundamentals and ApplicationsÎncă nu există evaluări
