Merge Sort Quick Sort

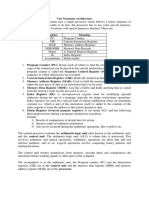

Analysis of Algorithms

CS 477/677

Sorting Part B

Instructor: George Bebis

(Chapter 7)

Sorting

Insertion sort

Design approach: incremental

Sorts in place: Yes

Best case: O(n)

Worst case: O(n^2)

Bubble Sort

Design approach: incremental

Sorts in place: Yes

Running time: O(n^2)

Sorting

Selection sort

Design approach: incremental

Sorts in place: Yes

Running time: O(n^2)

Merge Sort

Design approach: divide and conquer

Sorts in place: No

Running time: Let's see!

Divide-and-Conquer

Divide the problem into a number of sub-problems

Similar sub-problems of smaller size

Conquer the sub-problems

Solve the sub-problems recursively

When a sub-problem is small enough, solve it in a straightforward manner

Combine the solutions of the sub-problems

Obtain the solution for the original problem

Merge Sort Approach

To sort an array A[p . . r]:

Divide

Divide the n-element sequence to be sorted into two subsequences of n/2 elements each

Conquer

Sort the subsequences recursively using merge sort

When the size of the sequences is 1 there is nothing more to do

Combine

Merge the two sorted subsequences

Merge Sort

Alg.: MERGE-SORT(A, p, r)

    if p < r                             // Check for base case
        then q ← ⌊(p + r)/2⌋             // Divide
             MERGE-SORT(A, p, q)         // Conquer
             MERGE-SORT(A, q + 1, r)     // Conquer
             MERGE(A, p, q, r)           // Combine

Initial call: MERGE-SORT(A, 1, n)

    Index: 1 2 3 4 5 6 7 8
    A:     5 2 4 7 1 3 2 6

p = 1, q = 4, r = 8
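The MERGE-SORT recursion can be sketched in Python as follows. This is a minimal illustration rather than the course's reference code: the names `merge_sort` and `merge` are chosen here, indices are 0-based and inclusive (the slides use 1-based indices), and the merge step uses a simple two-pointer loop.

```python
def merge(a, p, q, r):
    """Merge the sorted runs a[p..q] and a[q+1..r] (inclusive) in place."""
    left = a[p:q + 1]
    right = a[q + 1:r + 1]
    i = j = 0
    k = p
    # Repeatedly move the smaller front element into a[k].
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            a[k] = left[i]
            i += 1
        else:
            a[k] = right[j]
            j += 1
        k += 1
    # At most one of the two runs still has elements; copy them over.
    a[k:r + 1] = left[i:] + right[j:]


def merge_sort(a, p, r):
    """Sort a[p..r] (inclusive, 0-based) by divide and conquer."""
    if p < r:                      # base case: 0 or 1 element, nothing to do
        q = (p + r) // 2           # divide
        merge_sort(a, p, q)        # conquer left half
        merge_sort(a, q + 1, r)    # conquer right half
        merge(a, p, q, r)          # combine


data = [5, 2, 4, 7, 1, 3, 2, 6]    # small sample array
merge_sort(data, 0, len(data) - 1)
print(data)
```

The initial call passes `0` and `len(data) - 1`, which corresponds to MERGE-SORT(A, 1, n) in 1-based notation.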

Example: n Power of 2

Divide

A[1..8] = 5 2 4 7 1 3 2 6 (q = 4)

A[1..4] = 5 2 4 7 | A[5..8] = 1 3 2 6

A[1..2] = 5 2 | A[3..4] = 4 7 | A[5..6] = 1 3 | A[7..8] = 2 6

A[1] = 5 | A[2] = 2 | A[3] = 4 | A[4] = 7 | A[5] = 1 | A[6] = 3 | A[7] = 2 | A[8] = 6

Example: n Power of 2

Conquer and Merge

A[1] = 5 | A[2] = 2 | A[3] = 4 | A[4] = 7 | A[5] = 1 | A[6] = 3 | A[7] = 2 | A[8] = 6

A[1..2] = 2 5 | A[3..4] = 4 7 | A[5..6] = 1 3 | A[7..8] = 2 6

A[1..4] = 2 4 5 7 | A[5..8] = 1 2 3 6

A[1..8] = 1 2 2 3 4 5 6 7

Example: n Not a Power of 2

Divide

A[1..11] = 4 7 2 6 1 4 7 3 5 2 6 (q = 6)

A[1..6] = 4 7 2 6 1 4 (q = 3) | A[7..11] = 7 3 5 2 6 (q = 9)

A[1..3] = 4 7 2 | A[4..6] = 6 1 4 | A[7..9] = 7 3 5 | A[10..11] = 2 6

A[1..2] = 4 7 | A[3] = 2 | A[4..5] = 6 1 | A[6] = 4 | A[7..8] = 7 3 | A[9] = 5 | A[10] = 2 | A[11] = 6

A[1] = 4 | A[2] = 7 | A[4] = 6 | A[5] = 1 | A[7] = 7 | A[8] = 3

Example: n Not a Power of 2

Conquer and Merge

A[1] = 4 | A[2] = 7 | A[4] = 6 | A[5] = 1 | A[7] = 7 | A[8] = 3

A[1..2] = 4 7 | A[3] = 2 | A[4..5] = 1 6 | A[6] = 4 | A[7..8] = 3 7 | A[9] = 5 | A[10] = 2 | A[11] = 6

A[1..3] = 2 4 7 | A[4..6] = 1 4 6 | A[7..9] = 3 5 7 | A[10..11] = 2 6

A[1..6] = 1 2 4 4 6 7 | A[7..11] = 2 3 5 6 7

A[1..11] = 1 2 2 3 4 4 5 6 6 7 7

Merging

Input: Array A and indices p, q, r such that p ≤ q < r

Subarrays A[p . . q] and A[q + 1 . . r] are sorted

Output: One single sorted subarray A[p . . r]

    Index: 1 2 3 4 5 6 7 8
    A:     2 4 5 7 1 2 3 6

p = 1, q = 4, r = 8


Merging

Idea for merging:

Two piles of sorted cards

Choose the smaller of the two top cards

Remove it and place it in the output pile

Repeat the process until one pile is empty

Take the remaining input pile and place it face-down onto the output pile

    Index: 1 2 3 4 5 6 7 8
    A:     2 4 5 7 1 2 3 6

p = 1, q = 4, r = 8

A1 ← A[p..q] and A2 ← A[q+1..r] are merged into A[p..r]

Example: MERGE(A, 9, 12, 16)

[Figures: step-by-step trace of the merge, with p = 9, q = 12, r = 16, ending with "Done!"]

Merge - Pseudocode

Alg.: MERGE(A, p, q, r)

    Compute n1 and n2 (n1 = q − p + 1, n2 = r − q)
    Copy the first n1 elements into L[1 . . n1 + 1] and the next n2 elements into R[1 . . n2 + 1]
    L[n1 + 1] ← ∞; R[n2 + 1] ← ∞
    i ← 1; j ← 1
    for k ← p to r
        do if L[i] ≤ R[j]
               then A[k] ← L[i]
                    i ← i + 1
               else A[k] ← R[j]
                    j ← j + 1

    Index: 1 2 3 4 5 6 7 8
    A:     2 4 5 7 1 2 3 6

p = 1, q = 4, r = 8, n1 = 4, n2 = 4

L = 2 4 5 7 ∞ (copy of A[p..q])

R = 1 2 3 6 ∞ (copy of A[q+1..r])
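The sentinel-based MERGE can be sketched in Python as below. The function name `merge_with_sentinels` is an illustrative choice, indices are 0-based and inclusive, and `math.inf` stands in for the ∞ sentinel, which assumes the keys are finite numbers.

```python
import math


def merge_with_sentinels(a, p, q, r):
    """Merge sorted a[p..q] and a[q+1..r] (inclusive, 0-based) in place."""
    n1 = q - p + 1                              # size of the left run
    n2 = r - q                                  # size of the right run
    left = a[p:p + n1] + [math.inf]             # sentinel marks the end of L
    right = a[q + 1:q + 1 + n2] + [math.inf]    # sentinel marks the end of R
    i = j = 0
    # Exactly n1 + n2 iterations; a sentinel is never copied into a
    # because it is larger than every finite key.
    for k in range(p, r + 1):
        if left[i] <= right[j]:
            a[k] = left[i]
            i += 1
        else:
            a[k] = right[j]
            j += 1


a = [2, 4, 5, 7, 1, 2, 3, 6]
merge_with_sentinels(a, 0, 3, 7)
print(a)  # [1, 2, 2, 3, 4, 5, 6, 7]
```

The sentinels remove the need to test whether either run is exhausted, at the cost of one extra slot per temporary array.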

Running Time of Merge

(assume last for loop)

Initialization (copying into temporary arrays): O(n1 + n2) = O(n)

Adding the elements to the final array: n iterations, each taking constant time, so O(n)

Total time for Merge: O(n)

Analyzing Divide-and-Conquer Algorithms

The recurrence is based on the three steps of the paradigm:

T(n): running time on a problem of size n

Divide the problem into a subproblems (a is the number of subproblems), each of size n/b: takes D(n)

Conquer (solve) the subproblems: a T(n/b)

Combine the solutions: C(n)

    T(n) = O(1)                        if n ≤ c
    T(n) = a T(n/b) + D(n) + C(n)      otherwise

MERGE-SORT Running Time

Divide: compute q as the average of p and r: D(n) = O(1)

Conquer: recursively solve 2 subproblems, each of size n/2: 2T(n/2)

Combine: MERGE on an n-element subarray takes O(n) time: C(n) = O(n)

    T(n) = O(1)                if n = 1
    T(n) = 2T(n/2) + O(n)      if n > 1

Solve the Recurrence

    T(n) = c                  if n = 1
    T(n) = 2T(n/2) + cn       if n > 1

Use the Master Theorem with a = 2, b = 2:

Compare n^(log_2 2) = n with f(n) = cn

Case 2: f(n) = Θ(n^(log_2 2)), so T(n) = Θ(n lg n)
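As a sanity check on the Θ(n lg n) result, the recurrence can be evaluated directly for powers of 2 and compared with the closed form T(n) = cn lg n + cn, which follows from unrolling the recurrence. The function name `merge_sort_cost` and the choice c = 1 are assumptions made for this sketch.

```python
def merge_sort_cost(n, c=1):
    """Evaluate T(n) = c if n == 1, else 2*T(n/2) + c*n (n a power of 2)."""
    if n == 1:
        return c
    return 2 * merge_sort_cost(n // 2, c) + c * n


for k in range(11):
    n = 2 ** k                      # so lg n = k
    closed_form = n * k + n         # c*n*lg(n) + c*n with c = 1
    assert merge_sort_cost(n) == closed_form

print(merge_sort_cost(1024))        # 1024 * 10 + 1024
```

The dominant term n lg n matches Case 2 of the Master Theorem; the extra cn term is of lower order.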

Merge Sort - Discussion

Running time is insensitive to the input

Advantage:

Guaranteed to run in O(n lg n)

Disadvantage:

Requires extra space ~N

Sorting Challenge 1

Problem: Sort a file of huge records with tiny keys

Example application: Reorganize your MP3 files

Which method to use?

A. merge sort, guaranteed to run in time ~N lg N

B. selection sort

C. bubble sort

D. a custom algorithm for huge records/tiny keys

E. insertion sort

Sorting Files with Huge Records and Small Keys

Insertion sort or bubble sort?

NO, too many exchanges

Selection sort?

YES, it performs only a linear number of exchanges

Merge sort or custom method?

Probably not: selection sort is simpler and does fewer swaps
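The claim about exchanges can be checked with a small sketch: selection sort moves each record at most once per outer iteration, so it performs at most n − 1 exchanges no matter how the keys are ordered. The function name `selection_sort`, the `key` parameter, and the sample file names are hypothetical, chosen only for illustration.

```python
def selection_sort(records, key=lambda rec: rec):
    """Sort records in place by key; return the number of exchanges."""
    exchanges = 0
    n = len(records)
    for i in range(n - 1):
        # Find the record with the smallest key in records[i..n-1].
        smallest = i
        for j in range(i + 1, n):
            if key(records[j]) < key(records[smallest]):
                smallest = j
        # At most one exchange of (possibly huge) records per position.
        if smallest != i:
            records[i], records[smallest] = records[smallest], records[i]
            exchanges += 1
    return exchanges


# Tiny keys (integers) attached to stand-ins for large records.
files = [(3, "c.mp3"), (1, "a.mp3"), (2, "b.mp3"), (0, "z.mp3")]
swaps = selection_sort(files, key=lambda rec: rec[0])
print(files, swaps)
```

The number of key comparisons is still quadratic, but comparisons touch only the tiny keys, while the expensive record moves stay linear.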

Sorting Challenge 2

Problem: Sort a huge randomly-ordered file of small records

Application: Process transaction records for a phone company

Which sorting method to use?

A. Bubble sort

B. Selection sort

C. Mergesort guaranteed to run in time ~NlgN

D. Insertion sort

Sorting Huge, Randomly-Ordered Files

Selection sort?

NO, always takes quadratic time

Bubble sort?

NO, quadratic time for randomly-ordered keys

Insertion sort?

NO, quadratic time for randomly-ordered keys

Mergesort?

YES, it is designed for this problem

Sorting Challenge 3

Problem: Sort a file that is already almost in order

Applications:

Re-sort a huge database after a few changes

Double-check that someone else sorted a file

Which sorting method to use?

A. Mergesort, guaranteed to run in time ~NlgN

B. Selection sort

C. Bubble sort

D. A custom algorithm for almost in-order files

E. Insertion sort

Sorting Files That Are Almost in Order

Selection sort?

NO, always takes quadratic time

Bubble sort?

NO, bad for some definitions of "almost in order"

Ex: B C D E F G H I J K L M N O P Q R S T U V W X Y Z A

Insertion sort?

YES, takes linear time for most definitions of "almost in order"

Mergesort or custom method?

Probably not: insertion sort is simpler and faster
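The difference on the example B C D ... Z A can be made concrete by counting key comparisons. The sketch below assumes a textbook insertion sort and a left-to-right bubble sort with an early exit when a pass makes no exchange; both function names are illustrative, and other bubble sort variants may behave differently.

```python
def insertion_sort_comparisons(items):
    """Return (sorted list, number of key comparisons) for insertion sort."""
    a = list(items)
    comparisons = 0
    for i in range(1, len(a)):
        current = a[i]
        j = i - 1
        while j >= 0:
            comparisons += 1
            if a[j] <= current:
                break               # found the insertion point
            a[j + 1] = a[j]         # shift the larger element right
            j -= 1
        a[j + 1] = current
    return a, comparisons


def bubble_sort_comparisons(items):
    """Return (sorted list, number of key comparisons) for bubble sort."""
    a = list(items)
    comparisons = 0
    end = len(a) - 1
    swapped = True
    while swapped:                  # stop after a pass with no exchange
        swapped = False
        for j in range(end):
            comparisons += 1
            if a[j] > a[j + 1]:
                a[j], a[j + 1] = a[j + 1], a[j]
                swapped = True
        end -= 1                    # the largest element is now in place
    return a, comparisons


almost_sorted = "BCDEFGHIJKLMNOPQRSTUVWXYZA"
ins_sorted, ins_count = insertion_sort_comparisons(almost_sorted)
bub_sorted, bub_count = bubble_sort_comparisons(almost_sorted)
print(ins_count, bub_count)
```

Insertion sort needs one comparison for each of C through Z and then walks A past all 25 other letters, while this bubble sort moves A only one position per pass and so needs a full quadratic number of comparisons.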

Quicksort

Sort an array A[p..r]

Divide

Partition the array A into 2 subarrays A[p..q] and A[q+1..r], such that each element of A[p..q] is smaller than or equal to each element in A[q+1..r]

Need to find index q to partition the array

A[p..q] ≤ A[q+1..r]

Quicksort

Conquer

Recursively sort A[p..q] and A[q+1..r] using Quicksort

Combine

Trivial: the arrays are sorted in place

No additional work is required to combine them

The entire array is now sorted

A[p..q] ≤ A[q+1..r]

QUICKSORT

Alg.: QUICKSORT(A, p, r)

    if p < r
        then q ← PARTITION(A, p, r)
             QUICKSORT(A, p, q)
             QUICKSORT(A, q + 1, r)

Initially: p = 1, r = n

Recurrence: T(n) = T(q) + T(n − q) + f(n), where f(n) is the cost of PARTITION()

Partitioning the Array

Choosing PARTITION()

There are different ways to do this

Each has its own advantages/disadvantages

Hoare partition (see prob. 7-1, page 159)

Select a pivot element x around which to partition

Grows two regions: A[p..i] ≤ x and x ≤ A[j..r]

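Example

A[p..r] = 5 3 2 6 4 1 3 7, pivot x = 5

Initial array: 5 3 2 6 4 1 3 7 (i and j start just outside the two ends)

i = 1, j = 7: exchange A[1] and A[7], giving 3 3 2 6 4 1 5 7

i = 4, j = 6: exchange A[4] and A[6], giving 3 3 2 1 4 6 5 7

i = 6, j = 5: i is no longer less than j, so return q = 5

Result: A[p..q] = 3 3 2 1 4 and A[q+1..r] = 6 5 7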

Partitioning the Array

Alg.: PARTITION(A, p, r)

    x ← A[p]
    i ← p − 1
    j ← r + 1
    while TRUE
        do repeat j ← j − 1
               until A[j] ≤ x
           repeat i ← i + 1
               until A[i] ≥ x
           if i < j
               then exchange A[i] ↔ A[j]
               else return j

Running time: O(n), where n = r − p + 1

Each element is visited once!

Example array: A = 5 3 2 6 4 1 3 7, with i scanning right from p and j scanning left from r

On return, j = q and the array is split into A[p..q] and A[q+1..r]
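A Python sketch of PARTITION and QUICKSORT is given below, assuming 0-based inclusive indices and the first element as pivot. The names `hoare_partition` and `quicksort` are chosen for this illustration, and each `repeat ... until` loop is written as one step followed by a `while`.

```python
def hoare_partition(a, p, r):
    """Partition a[p..r] around x = a[p]; return j so that every element
    of a[p..j] is <= every element of a[j+1..r]."""
    x = a[p]
    i = p - 1
    j = r + 1
    while True:
        j -= 1                      # repeat j <- j - 1 until a[j] <= x
        while a[j] > x:
            j -= 1
        i += 1                      # repeat i <- i + 1 until a[i] >= x
        while a[i] < x:
            i += 1
        if i < j:
            a[i], a[j] = a[j], a[i]
        else:
            return j


def quicksort(a, p, r):
    """Sort a[p..r] (inclusive, 0-based) in place."""
    if p < r:
        q = hoare_partition(a, p, r)
        quicksort(a, p, q)          # note: q is included in the left part
        quicksort(a, q + 1, r)


a = [5, 3, 2, 6, 4, 1, 3, 7]
q = hoare_partition(a, 0, len(a) - 1)
print(a, q)                         # [3, 3, 2, 1, 4, 6, 5, 7] 4
quicksort(a, 0, len(a) - 1)
print(a)                            # [1, 2, 3, 3, 4, 5, 6, 7]
```

With this partition scheme the left recursive call must include index q; recursing on `a[p..q-1]` would be correct for a Lomuto-style partition but not here.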

Recurrence

For QUICKSORT(A, p, r), called initially with p = 1, r = n, and with PARTITION costing n:

T(n) = T(q) + T(n − q) + n

Worst Case Partitioning

Worst-case partitioning

One region has one element and the other has n − 1 elements

Maximally unbalanced

Recurrence: q = 1

T(n) = T(1) + T(n − 1) + n, T(1) = O(1)

$$T(n) = T(n-1) + n = n + \left(\sum_{k=1}^{n} k\right) - 1 = O(n) + O(n^2) = O(n^2)$$

[Figure: recursion tree with subproblem sizes n, n − 1, n − 2, n − 3, ..., 2, 1, a leaf of size 1 at every level, and partitioning costs n, n − 1, n − 2, ..., 3, 2 per level; total O(n^2)]

When does the worst case happen?
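One input that triggers this behaviour, assuming the Hoare-style partition with the first element as pivot, is an array of distinct keys that is already sorted: every call splits off a single element. The sketch below adds up the sizes of all partitioned subarrays; `quicksort_cost` is a hypothetical helper introduced only for this check.

```python
def hoare_partition(a, p, r):
    """Hoare partition of a[p..r] around the pivot x = a[p]."""
    x = a[p]
    i = p - 1
    j = r + 1
    while True:
        j -= 1
        while a[j] > x:
            j -= 1
        i += 1
        while a[i] < x:
            i += 1
        if i < j:
            a[i], a[j] = a[j], a[i]
        else:
            return j


def quicksort_cost(a, p, r):
    """Sort a[p..r] in place; return the total size of all subarrays
    passed to hoare_partition (a proxy for the partitioning work)."""
    if p >= r:
        return 0
    q = hoare_partition(a, p, r)
    return (r - p + 1) + quicksort_cost(a, p, q) + quicksort_cost(a, q + 1, r)


n = 100
already_sorted = list(range(n))
cost = quicksort_cost(already_sorted, 0, n - 1)
# Splits are 1 and n - 1 at every level: n + (n - 1) + ... + 2.
print(cost, n * (n + 1) // 2 - 1)
```

The measured cost grows quadratically with n, matching the O(n^2) bound; n is kept small here so the linear recursion depth stays within Python's default recursion limit.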


Best Case Partitioning

Best-case partitioning

Partitioning produces two regions of size n/2

Recurrence: q=n/2

T(n) = 2T(n/2) + O(n)

T(n) = O(nlgn) (Master theorem)


Case Between Worst and Best

9-to-1 proportional split

Q(n) = Q(9n/10) + Q(n/10) + n
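A short recursion-tree argument suggests why even a 9-to-1 split gives O(n lg n). It assumes that the partitioning cost at every level of the tree is at most n, and that the deepest path follows the 9n/10 branch:

```latex
\begin{aligned}
\text{shallowest leaf depth} &= \log_{10} n, \\
\text{deepest leaf depth} &= \log_{10/9} n = \frac{\lg n}{\lg (10/9)}, \\
Q(n) &\le n \left( \log_{10/9} n + 1 \right) = O(n \lg n).
\end{aligned}
```

Any split with constant proportions gives a tree of depth O(lg n), so the same bound holds; only the constant factor changes.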

How does partition affect performance?

Performance of Quicksort

Average case

All permutations of the input numbers are equally likely

On a random input array, we will have a mix of well-balanced and unbalanced splits

Good and bad splits are randomly distributed throughout the tree

Alternation of a bad and a good split: n splits into 1 and n − 1, then n − 1 splits into (n − 1)/2 and (n − 1)/2

Combined partitioning cost: 2n − 1 = O(n)

Nearly well-balanced split: n splits into (n − 1)/2 + 1 and (n − 1)/2

Partitioning cost: n = O(n)

Running time of Quicksort when levels alternate between good and bad splits is O(n lg n)
