Neural Net Dup 1

Uploaded by Pinaki Ghosh

A Brief Overview of Neural Networks

Overview

Relation to Biological Brain: Biological Neural Network

The Artificial Neuron

Types of Networks and Learning Techniques

Supervised Learning & Backpropagation Training Algorithm

Learning by Example

Applications

Biological Neuron

Artificial Neuron

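[Figure: an artificial neuron. Each input is multiplied by a weight W, the weighted inputs are summed, and an activation function f(n) turns the sum into the neuron's outputs.]

W = Weight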

Transfer Functions

SIGMOID: $f(n) = \frac{1}{1 + e^{-n}}$

LINEAR: $f(n) = n$

[Figure: plot of a transfer function, output versus input, with the output axis marked at 0 and 1.]

Types of networks

Multiple inputs and a single layer

Multiple inputs and multiple layers

Types of Networks Contd.

Feedback

Recurrent Networks

Recurrent Networks

Feed forward networks:

Information only flows one way

One input pattern produces one output

No sense of time (or memory of previous state)

Recurrence

Nodes connect back to other nodes or to themselves

Information flow is multidirectional

Sense of time and memory of previous state(s)

Biological nervous systems show high levels of recurrence (but feed-forward structures exist too)

ANNs: The basics

ANNs incorporate the two fundamental components of biological neural nets:

1. Neurones (nodes)

2. Synapses (weights)

Feed-forward nets

Information flow is unidirectional

Data is presented to Input layer

Passed on to Hidden Layer

Passed on to Output layer

Information is distributed

Information processing is parallel

Internal representation (interpretation) of data

Neural networks are good for prediction problems.

The inputs are well understood. You have a good idea of which features of the data are important, but not necessarily how to combine them.

The output is well understood. You know what you are trying to predict.

Experience is available. You have plenty of examples where both the inputs and the output are known. This experience will be used to train the network.

Feeding data through the net:

Weighted sum: $(1 \times 0.25) + (0.5 \times (-1.5)) = 0.25 + (-0.75) = -0.5$

Squashing: $\frac{1}{1 + e^{0.5}} = 0.3775$
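The weighted sum and squashing step can be checked with a few lines of Python. This is only a minimal sketch: the helper names `net_input` and `sigmoid` are illustrative and do not come from the slides.

```python
import math

def sigmoid(n):
    """Logistic squashing function 1 / (1 + e^-n)."""
    return 1.0 / (1.0 + math.exp(-n))

def net_input(inputs, weights):
    """Weighted sum of the inputs arriving at one neuron."""
    return sum(x * w for x, w in zip(inputs, weights))

inputs = [1.0, 0.5]
weights = [0.25, -1.5]

n = net_input(inputs, weights)   # (1 * 0.25) + (0.5 * -1.5)
output = sigmoid(n)              # squash the net input into (0, 1)
print(n, round(output, 4))
```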

Learning Techniques

Supervised Learning:

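[Figure: inputs from the environment feed both the actual system and the neural network. The expected output (+) and the network's actual output (-) are compared to give an error, which is used for training.]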

Multilayer Perceptron

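[Figure: a multilayer perceptron with inputs, a first hidden layer, a second hidden layer, and an output layer.]

Signal Flow

Function signals flow forward through the layers.

Backpropagation of Errors

Error signals flow backward through the layers.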

Neural networks for Directed Data Mining: Building a model for classification and prediction

1. Identify the input and output features.

2. Normalize (scale) the inputs and outputs so their range is between 0 and 1.

3. Set up a network with an appropriate topology.

4. Train the network on a representative set of training examples.

5. Test the network on a test set strictly independent from the training examples. If necessary, repeat the training, adjusting the training set, network topology, and parameters. Evaluate the network using the evaluation set to see how well it performs.

6. Apply the model generated by the network to predict outcomes for unknown inputs.
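Steps 2 and 5 can be sketched in plain Python. The example below assumes min-max scaling as the normalization method and a simple shuffled hold-out split. The function names and the sample `ages` feature are hypothetical, not taken from the slides.

```python
import random

def min_max_scale(values):
    """Rescale a list of numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:                      # constant feature: map everything to 0
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def split_train_test(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and hold out a test set that training never sees."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = int(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]

ages = [18, 30, 42, 66]               # hypothetical raw input feature
scaled = min_max_scale(ages)
train, test = split_train_test(range(8))
print(scaled, len(train), len(test))
```

Keeping the test rows out of the training list is what makes the later evaluation independent of the training examples.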

Learning by Example

Hidden layer transfer function: sigmoid function, $F(n) = \frac{1}{1 + e^{-n}}$, where $n$ is the net input to the neuron.

Derivative: $F'(n) = (\text{output of the neuron}) \times (1 - \text{output of the neuron})$, the slope of the transfer function.

Output layer transfer function: linear function, $F(n) = n$; the output equals the input to the neuron.

Derivative: $F'(n) = 1$
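As a quick sanity check, the sigmoid slope written in terms of the neuron's output can be compared with a numerical derivative. This is a sketch only; the function names are illustrative.

```python
import math

def sigmoid(n):
    return 1.0 / (1.0 + math.exp(-n))

def sigmoid_slope(output):
    """Slope of the sigmoid, expressed through the neuron's own output."""
    return output * (1.0 - output)

def linear(n):
    return n

def linear_slope(_output):
    """The linear transfer function has slope 1 everywhere."""
    return 1.0

n = 0.5
out = sigmoid(n)

# Central-difference estimate of dF/dn, used only for comparison
h = 1e-6
numeric = (sigmoid(n + h) - sigmoid(n - h)) / (2 * h)
print(sigmoid_slope(out), numeric)
```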

Purpose of the Activation Function

We want the unit to be active (near +1) when the right inputs are given.

We want the unit to be inactive (near 0) when the wrong inputs are given.

It's preferable for the activation function to be nonlinear. Otherwise, the entire neural network collapses into a simple linear function.
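The collapse can be seen directly: two layers with linear activations give the same result as one layer whose weight matrix is the product of the two. The weights and input below are hypothetical values chosen for illustration.

```python
def linear_layer(weights, inputs):
    """One layer with a linear activation: each row of weights feeds one unit."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def matmul(a, b):
    """Product of two small matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

W1 = [[1.0, 2.0], [0.5, -1.0]]    # hypothetical first-layer weights
W2 = [[2.0, 1.0]]                 # hypothetical second-layer weights
x = [3.0, 4.0]

two_layers = linear_layer(W2, linear_layer(W1, x))
one_layer = linear_layer(matmul(W2, W1), x)
print(two_layers, one_layer)
```

A nonlinear activation between the layers is what prevents this reduction to a single matrix.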

Possibilities for activation function

Step function: $\text{step}(x) = 1$ if $x > \text{threshold}$, and $0$ if $x \le \text{threshold}$ (in picture above, threshold = 0)

Sign function: $\text{sign}(x) = +1$ if $x > 0$, and $-1$ if $x \le 0$

Sigmoid (logistic) function: $\text{sigmoid}(x) = \frac{1}{1 + e^{-x}}$

Adding an extra input with activation $a_0 = -1$ and weight $W_{0,j} = t$ (called the bias weight) is equivalent to having a threshold at $t$. This way we can always assume a 0 threshold.

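Using a Bias Weight to Standardize the Threshold

[Figure: a unit with inputs $x_1$ and $x_2$ weighted by $W_1$ and $W_2$, plus a constant input of $-1$ weighted by $T$.]

$W_1 x_1 + W_2 x_2 < T$

$W_1 x_1 + W_2 x_2 - T < 0$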
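The equivalence between an explicit threshold and a bias weight on a constant -1 input can be checked on a small example. The weights and threshold below are hypothetical, and the function names are illustrative.

```python
def fires_with_threshold(weights, inputs, threshold):
    """Unit is active when the weighted sum exceeds an explicit threshold T."""
    return sum(w * x for w, x in zip(weights, inputs)) > threshold

def fires_with_bias(weights, inputs, threshold):
    """Same unit with T moved into a bias weight on a constant -1 input."""
    all_weights = [threshold] + list(weights)
    all_inputs = [-1.0] + list(inputs)
    return sum(w * x for w, x in zip(all_weights, all_inputs)) > 0

weights, T = [1.0, 1.0], 1.5      # hypothetical values
cases = [(0, 0), (0, 1), (1, 0), (1, 1)]
agree = all(
    fires_with_threshold(weights, c, T) == fires_with_bias(weights, c, T)
    for c in cases
)
print(agree)
```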

Real vs artificial neurons

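[Figure: a biological neuron (dendrites, cell, axon, synapse) beside an artificial unit with inputs $x_0, \dots, x_n$, weights $w_0, \dots, w_n$, and output $o$.]

The unit computes the weighted sum $\sum_{i=0}^{n} w_i x_i$ and outputs

$o = 1$ if $\sum_{i=0}^{n} w_i x_i > 0$, and $0$ otherwise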

Threshold units

Implementing AND

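[Figure: a threshold unit with inputs $x_1$ and $x_2$, each with weight 1, and a constant $-1$ input with bias weight $W = 1.5$; output $o(x_1, x_2)$.]

$o(x_1, x_2) = 1$ if $-1.5 + x_1 + x_2 > 0$, and $0$ otherwise

Assume Boolean (0/1) input values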

Implementing OR

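[Figure: a threshold unit with inputs $x_1$ and $x_2$, each with weight 1, and a constant $-1$ input with bias weight $W = 0.5$; output $o(x_1, x_2)$.]

$o(x_1, x_2) = 1$ if $-0.5 + x_1 + x_2 > 0$, and $0$ otherwise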

Implementing NOT

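[Figure: a threshold unit with a single input $x_1$ with weight $-1$, and a constant $-1$ input with bias weight $W = -0.5$; output $o(x_1)$.]

$o(x_1) = 1$ if $0.5 - x_1 > 0$, and $0$ otherwise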

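Implementing more complex Boolean functions

[Figure: two threshold units in sequence. The first takes $x_1$ and $x_2$, each with weight 1, with bias weight 0.5 on a constant $-1$ input, and computes $x_1$ or $x_2$. The second takes that result and $x_3$, each with weight 1, with bias weight 1.5 on a constant $-1$ input, and computes ($x_1$ or $x_2$) and $x_3$.]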
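The threshold units for AND, OR, NOT, and the composed function can be sketched as follows, assuming Boolean (0/1) inputs and a constant -1 bias input as in the slides. The function names are illustrative.

```python
from itertools import product

def threshold_unit(bias_weight, weights, inputs):
    """Output 1 when the weighted sum, including the constant -1 input, exceeds 0."""
    total = -1 * bias_weight + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def and_gate(x1, x2):
    return threshold_unit(1.5, [1, 1], [x1, x2])

def or_gate(x1, x2):
    return threshold_unit(0.5, [1, 1], [x1, x2])

def not_gate(x1):
    return threshold_unit(-0.5, [-1], [x1])

def or_then_and(x1, x2, x3):
    """Two units in sequence: (x1 or x2) and x3."""
    return and_gate(or_gate(x1, x2), x3)

for bits in product((0, 1), repeat=3):
    print(bits, or_then_and(*bits))
```

Feeding the output of one unit into another is what lets single threshold units be combined into more complex Boolean functions.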

Learning by Example

Training Algorithm: backpropagation of errors using gradient descent training.

Colors:

Red: Current weights

Orange: Updated weights

Black boxes: Inputs and outputs to a neuron

Blue: Sensitivities at each layer

The perceptron learning rule performs gradient descent in weight space.

Error surface: The surface that describes the error on each example as a function of all the weights in the network. A set of weights defines a point on this surface. (It could also be called a state in the state space of possible weights, i.e., weight space.)

We look at the partial derivative of the surface with respect to each weight (i.e., the gradient: how much the error would change if we made a small change in each weight). The weights are then altered by an amount proportional to the slope in each direction (corresponding to a weight). Thus the network as a whole moves in the direction of steepest descent on the error surface.

Definition of Error:

Sum of Squared Errors

$E = \frac{1}{2} \sum_{\text{examples}} (t - o)^2 = \frac{1}{2} Err^2$

Here, $t$ is the correct (desired) output and $o$ is the actual output of the neural net.

The factor of $\frac{1}{2}$ is introduced to simplify the math on the next slide.

Reduction of Squared Error

Gradient descent reduces the squared error by calculating the partial derivative of $E$ with respect to each weight:

$\frac{\partial E}{\partial W_j} = Err \times \frac{\partial Err}{\partial W_j}$ (chain rule for derivatives)

$= Err \times \frac{\partial}{\partial W_j}\left(t - g\left(\sum_{k=0}^{n} W_k x_k\right)\right)$ (expand the second $Err$ above to $t - g(in)$; the sum $\sum_{k=0}^{n} W_k x_k$ is called $in$)

$= -Err \times g'(in) \times x_j$ (because $\frac{\partial t}{\partial W_j} = 0$, and by the chain rule)

$\nabla E$ is a vector of these partial derivatives, one per weight.

$W_j \leftarrow W_j + \eta \times Err \times g'(in) \times x_j$

The weight is updated by $-\eta$ times this gradient of error $E$ in weight space. The fact that the weight is updated in the correct direction (+/-) can be verified with examples.

The learning rate, $\eta$, is typically set to a small value such as 0.1.
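The update rule can be tried on a single sigmoid unit to confirm that one step lowers the squared error. This is a minimal sketch assuming a sigmoid for $g$. The starting weights, inputs, and target are hypothetical values.

```python
import math

def g(x):
    """Sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-x))

def g_prime(x):
    return g(x) * (1.0 - g(x))

def squared_error(weights, inputs, target):
    net = sum(w * x for w, x in zip(weights, inputs))
    return 0.5 * (target - g(net)) ** 2

def update(weights, inputs, target, eta=0.1):
    """One gradient-descent step: W_j <- W_j + eta * Err * g'(in) * x_j."""
    net = sum(w * x for w, x in zip(weights, inputs))
    err = target - g(net)
    return [w + eta * err * g_prime(net) * x for w, x in zip(weights, inputs)]

weights = [0.2, -0.4]          # hypothetical starting weights
inputs = [1.0, 0.5]            # hypothetical training example
target = 1.0

before = squared_error(weights, inputs, target)
new_weights = update(weights, inputs, target)
after = squared_error(new_weights, inputs, target)
print(before, after)
```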

First Pass

[Figure: a 1-2-2-1 network with input 1 and every weight set to 0.5. Each input-layer neuron has net input 0.5 and output 0.6225; each hidden-layer neuron has net input 0.6225 and output 0.6508; the linear output neuron has net input and output 0.6508.]

Error = 1 - 0.6508 = 0.3492

G3 = (1)(0.3492) = 0.3492

G2 = (0.6508)(1 - 0.6508)(0.3492)(0.5) = 0.0397

G1 = (0.6225)(1 - 0.6225)(0.0397)(0.5)(2) = 0.0093

Gradient of the neuron = G = slope of the transfer function × [Σ{(weight of the neuron to the next neuron) × (gradient of the next neuron)}]

Gradient of the output neuron = slope of the transfer function × error

Weight Update 1

New Weight=Old Weight + {(learning rate)(gradient)(prior output)}

W3: 0.5 + (0.5)(0.3492)(0.6508) = 0.6136

W2: 0.5 + (0.5)(0.0397)(0.6225) = 0.5124

W1: 0.5 + (0.5)(0.0093)(1) = 0.5047

Second Pass

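[Figure: the same network with input 1 and the updated weights W1 = 0.5047, W2 = 0.5124, W3 = 0.6136. Each input-layer neuron has net input 0.5047 and output 0.6236; each hidden-layer neuron has net input 0.6391 and output 0.6545; the linear output neuron has net input and output 0.8033.]

Error = 1 - 0.8033 = 0.1967

G3 = (1)(0.1967) = 0.1967

G2 = (0.6545)(1 - 0.6545)(0.1967)(0.6136) = 0.0273

G1 = (0.6236)(1 - 0.6236)(0.5124)(0.0273)(2) = 0.0066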

Weight Update 2

New Weight=Old Weight + {(learning rate)(gradient)(prior output)}

W3: 0.6136 + (0.5)(0.1967)(0.6545) = 0.6779

W2: 0.5124 + (0.5)(0.0273)(0.6236) = 0.5209

W1: 0.5047 + (0.5)(0.0066)(1) = 0.508

Third Pass

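[Figure: the same network with input 1 and the updated weights W1 = 0.508, W2 = 0.5209, W3 = 0.6779. Each input-layer neuron has net input 0.508 and output 0.6243; each hidden-layer neuron has net input 0.6504 and output 0.6571; the linear output neuron has net input and output 0.8909.]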

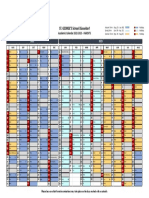

Weight Update Summary

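| | Weight w1 | Weight w2 | Weight w3 | Output | Expected Output | Error |
|---|---|---|---|---|---|---|
| Initial conditions | 0.5 | 0.5 | 0.5 | 0.6508 | 1 | 0.3492 |
| Pass 1 Update | 0.5047 | 0.5124 | 0.6136 | 0.8033 | 1 | 0.1967 |
| Pass 2 Update | 0.508 | 0.5209 | 0.6779 | 0.8909 | 1 | 0.1091 |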

W1: Weights from the input to the input layer

W2: Weights from the input layer to the hidden layer

W3: Weights from the hidden layer to the output layer
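The three passes can be reproduced with a short script. The sketch below assumes the structure implied by the numbers on the slides: one input, two sigmoid neurons in the input layer, two sigmoid neurons in the hidden layer, one linear output neuron, a learning rate of 0.5, and identical weights within each layer. Because it does not round intermediate values, its results may differ from the slide figures in the fourth decimal place.

```python
import math

def sigmoid(n):
    return 1.0 / (1.0 + math.exp(-n))

def forward(w1, w2, w3, x=1.0):
    """1-2-2-1 network; both neurons in a layer are identical by symmetry."""
    o1 = sigmoid(w1 * x)          # input-layer output
    o2 = sigmoid(2 * w2 * o1)     # hidden-layer output (two equal inputs)
    out = 2 * w3 * o2             # linear output neuron
    return o1, o2, out

def train_pass(w1, w2, w3, x=1.0, target=1.0, rate=0.5):
    """One forward pass followed by one backpropagation weight update."""
    o1, o2, out = forward(w1, w2, w3, x)
    g3 = 1.0 * (target - out)                 # linear slope is 1
    g2 = o2 * (1 - o2) * g3 * w3              # one downstream neuron
    g1 = o1 * (1 - o1) * 2 * g2 * w2          # two downstream neurons
    new = (w1 + rate * g1 * x, w2 + rate * g2 * o1, w3 + rate * g3 * o2)
    return new, out

weights = (0.5, 0.5, 0.5)
outputs = []
for _ in range(3):
    weights, out = train_pass(*weights)
    outputs.append(out)
print([round(o, 4) for o in outputs])
```

Each pass moves the output closer to the expected value of 1, matching the shrinking error column in the summary table.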

Training Algorithm

The process of feedforward and backpropagation continues until the required mean squared error has been reached.

Typical mse: 1e-5

Other, more complicated backpropagation training algorithms are also available.

Why Gradient?

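[Figure: a neuron receiving the outputs O1 and O2 of the previous layer through weights W1 and W2 and producing output O3 through a sigmoid transfer function, plotted as output (0 to 1) versus input.]

O = Output of the neuron

W = Weight

N = Net input to the neuron

N = (O1 × W1) + (O2 × W2)

O3 = 1/[1 + exp(-N)]

Error = Actual Output - O3

To reduce error, the change in weights depends on:

o Learning rate

o Rate of change of error w.r.t. rate of change of weight, which combines:

Gradient: rate of change of error w.r.t. rate of change of N

Prior output (O1 and O2)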

Gradient in Detail

Gradient: rate of change of error w.r.t. rate of change in net input to the neuron

o For output neurons

Slope of the transfer function × error

o For hidden neurons: a bit complicated! The error is fed back in terms of the gradient of successive neurons

Slope of the transfer function × [Σ (gradient of next neuron × weight connecting the neuron to the next neuron)]

Why summation? Share the responsibility!!

An Example

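[Figure: a network with inputs 1 and 0.4, whose hidden neurons output 0.731 and 0.598. Every weight into the two output neurons is 0.5, so each output neuron has net input 0.6645 and output 0.66. The expected outputs are 1 and 0.]

Output neuron with expected output 1: Error = 1 - 0.66 = 0.34

G1 = 0.66(1 - 0.66)(0.34) = 0.0763 (increase less)

Output neuron with expected output 0: Error = 0 - 0.66 = -0.66

G1 = 0.66(1 - 0.66)(-0.66) = -0.148 (reduce more)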
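The two output gradients in this example can be recomputed as below. The sketch assumes that 0.731 and 0.598 are the sigmoid of the inputs 1 and 0.4, which matches the numbers, and that both output neurons are sigmoid units. The function names are illustrative.

```python
import math

def sigmoid(n):
    return 1.0 / (1.0 + math.exp(-n))

def output_gradient(output, target):
    """Slope of the sigmoid at the output times the error (target - output)."""
    return output * (1.0 - output) * (target - output)

hidden = [sigmoid(1.0), sigmoid(0.4)]             # about 0.731 and 0.598
net = sum(0.5 * h for h in hidden)                # about 0.6645
out = sigmoid(net)                                # about 0.66

g_target_1 = output_gradient(out, target=1.0)     # smaller, positive
g_target_0 = output_gradient(out, target=0.0)     # larger, negative
print(round(g_target_1, 4), round(g_target_0, 4))
```

The neuron that is further from its expected output gets the larger gradient, so its weights change more.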

Improving performance

Changing the number of layers and the number of neurons in each layer.

Variation in transfer functions.

Changing the learning rate.

Training for longer times.

Type of pre-processing and post-processing.

Applications

Used in complex function approximation, feature extraction & classification, and optimization & control problems.

Applicable across many areas of science and technology.

Neural networks with more than one output

A department store chain wants to predict the likelihood that customers will purchase products from various departments, such as women's apparel, furniture, and entertainment. The store wants to use this information to plan promotions and direct target mailings.

This network has three outputs, one for each department. Each output is the propensity of the customer described in the inputs to make his or her next purchase from the associated department.

[Figure: a network with inputs gender, age, and last purchase, and three outputs: propensity to purchase women's apparel, propensity to purchase furniture, and propensity to purchase entertainment.]

How can the department store determine the right promotion or promotions to offer the customer?

Taking the department corresponding to the unit with the maximum value

Taking the departments corresponding to the units with the top three values

Taking the departments corresponding to the units that exceed some threshold value

Taking all departments corresponding to units that are some percentage of the unit with the maximum value.
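The four selection strategies can be sketched as small functions over the network's outputs. The scores, threshold, and fraction below are hypothetical values for one customer, and the function names are illustrative.

```python
def top_one(scores):
    """Department whose output unit has the maximum value."""
    return [max(scores, key=scores.get)]

def top_n(scores, n=3):
    """Departments with the n highest output values."""
    return sorted(scores, key=scores.get, reverse=True)[:n]

def above_threshold(scores, threshold):
    """Departments whose output exceeds a fixed threshold."""
    return [d for d, s in scores.items() if s > threshold]

def within_fraction_of_max(scores, fraction):
    """Departments whose output is at least a given fraction of the maximum."""
    cutoff = fraction * max(scores.values())
    return [d for d, s in scores.items() if s >= cutoff]

# Hypothetical network outputs for one customer
scores = {"women's apparel": 0.72, "furniture": 0.15, "entertainment": 0.60}

print(top_one(scores))
print(top_n(scores, 2))
print(above_threshold(scores, 0.5))
print(within_fraction_of_max(scores, 0.8))
```

The last two strategies can return a different number of departments for each customer, whereas the first two always return a fixed number.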
