
Basic Analysis of Variance and The General Linear Model: Psy 420 Andrew Ainsworth



Why is it called analysis of variance anyway?

If we are interested in group mean differences, why are we looking at variance?

With a t-test there is only one place to look for variability.

More groups mean more places to look.

The variance of the group means around a central tendency (the grand mean, ignoring group membership) tells us, on average, how much each group differs from the central tendency (and from each other).

Why is it called analysis of variance anyway?

The average variability of the group means around the GM needs to be compared to the average variability of the scores around each group mean.

Variability in any distribution can be broken down into conceptual parts:

total variability = (variability of each group mean around the grand mean) + (variability of each person's score around their group mean)

General Linear Model (GLM)

The basis for most inferential statistics (e.g. 420, 520, 524, etc.)

Simple form of the GLM:

score = grand mean + independent variable + error

$$Y = \mu + \alpha + \varepsilon$$

General Linear Model (GLM)

The basic idea is that everyone in the population has the same score (the grand mean), which is changed by the effect of an independent variable (A) plus random noise (error).

Some levels of A raise scores above the GM, other levels lower scores below the GM, and still others have no effect.

General Linear Model (GLM)

Error is the noise caused by other variables you aren't measuring, haven't controlled for, or are unaware of.

Error, like A, will have different effects on scores, but this happens independently of A.

If error gets too large, it will mask the effects of A and make them impossible to analyze.

Most of the effort in research design goes into minimizing error, to make sure the effect of A is not buried in the noise.

The error term is important because it gives us a yardstick with which to measure the variability caused by the A effect.

We want to make sure that the variability attributable to A is greater than the naturally occurring variability (error).

GLM

Example of GLM: ANOVA backwards

We can generate a data set using the GLM formula.

We start off with every subject at the GM (e.g. $\mu = 5$):

a1 | a2
Case Score | Case Score
s1 5 | s6 5
s2 5 | s7 5
s3 5 | s8 5
s4 5 | s9 5
s5 5 | s10 5

GLM

Then we add in the effect of A (a1 adds 2 points and a2 subtracts 2 points):

a1 | a2
Case Score | Case Score
s1 5 + 2 = 7 | s6 5 - 2 = 3
s2 5 + 2 = 7 | s7 5 - 2 = 3
s3 5 + 2 = 7 | s8 5 - 2 = 3
s4 5 + 2 = 7 | s9 5 - 2 = 3
s5 5 + 2 = 7 | s10 5 - 2 = 3

$\sum Y_{a_1} = 35$, $\sum Y_{a_1}^2 = 245$, $\bar{Y}_{a_1} = 7$

$\sum Y_{a_2} = 15$, $\sum Y_{a_2}^2 = 45$, $\bar{Y}_{a_2} = 3$

GLM

Changes produced by the treatment represent deviations around the GM:

$$n\sum_j(\bar{Y}_j - GM)^2 = n[(7 - 5)^2 + (3 - 5)^2]$$

or

$$5(2)^2 + 5(-2)^2 = 5[(2)^2 + (-2)^2] = 40$$
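As a quick check of the arithmetic above, the short Python sketch below (standard library only) rebuilds the treatment-only scores and computes $n\sum_j(\bar{Y}_j - GM)^2$. The variable names are illustrative. The sketch assumes equal group sizes (n = 5), as on the slide.

```python
# Treatment-only data: every score is GM + effect of A (no error yet)
GM = 5
effects = {"a1": +2, "a2": -2}  # a1 adds 2 points, a2 subtracts 2 points
n = 5                            # cases per group (equal n assumed)

groups = {level: [GM + eff] * n for level, eff in effects.items()}
group_means = {level: sum(ys) / len(ys) for level, ys in groups.items()}

# Between-groups SS: n times the squared deviation of each group mean from the GM
ss_between = sum(n * (m - GM) ** 2 for m in group_means.values())

print(group_means)   # {'a1': 7.0, 'a2': 3.0}
print(ss_between)    # 40.0
```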

GLM

Now if we add in some random variation (error):

a1 | a2
Case Score | Case Score
s1 5 + 2 + 2 = 9 | s6 5 - 2 + 0 = 3
s2 5 + 2 + 0 = 7 | s7 5 - 2 - 2 = 1
s3 5 + 2 - 1 = 6 | s8 5 - 2 + 0 = 3
s4 5 + 2 + 0 = 7 | s9 5 - 2 + 1 = 4
s5 5 + 2 - 1 = 6 | s10 5 - 2 + 1 = 4

$\sum Y_{a_1} = 35$, $\sum Y_{a_1}^2 = 251$, $\bar{Y}_{a_1} = 7$

$\sum Y_{a_2} = 15$, $\sum Y_{a_2}^2 = 51$, $\bar{Y}_{a_2} = 3$

SUM: $\sum Y = 50$, $\sum Y^2 = 302$, $\bar{Y} = 5$
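The same construction can be sketched in Python. Each score is built as GM + effect of A + error, using the error values from the table, and the per-group and overall sums are then recomputed. This is an illustration using the slide's numbers, not a general data generator. The chosen error values sum to zero within each group, so the group means stay at 7 and 3.

```python
# Build the ten scores from the GLM: Y = GM + effect of A + error
GM = 5
effects = {"a1": 2, "a2": -2}  # a1 adds 2 points, a2 subtracts 2 points
# Error values for s1..s5 (a1) and s6..s10 (a2), copied from the table
errors = {"a1": [2, 0, -1, 0, -1], "a2": [0, -2, 0, 1, 1]}

data = {
    level: [GM + effects[level] + e for e in errs]
    for level, errs in errors.items()
}

for level, ys in data.items():
    print(level, ys,
          "sum =", sum(ys),
          "sum of squares =", sum(y * y for y in ys),
          "mean =", sum(ys) / len(ys))

all_scores = [y for ys in data.values() for y in ys]
print("SUM:", sum(all_scores), sum(y * y for y in all_scores),
      sum(all_scores) / len(all_scores))   # SUM: 50 302 5.0
```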

GLM

Now if we calculate the variance for each group:

$$s^2_{a_1} = \frac{\sum Y^2 - \frac{(\sum Y)^2}{N}}{N - 1} = \frac{251 - \frac{35^2}{5}}{4} = 1.5$$

$$s^2_{a_2} = \frac{\sum Y^2 - \frac{(\sum Y)^2}{N}}{N - 1} = \frac{51 - \frac{15^2}{5}}{4} = 1.5$$

The average variance in this case is also going to be 1.5: (1.5 + 1.5) / 2 = 1.5.
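A minimal sketch of the per-group variance calculation, using the scores from the error table. The helper `computational_variance` is a hypothetical name for the slide's formula. Python's `statistics.variance`, which also divides by N - 1, is used only as a cross-check.

```python
from statistics import mean, variance

# Scores after adding error (from the table above)
groups = {"a1": [9, 7, 6, 7, 6], "a2": [3, 1, 3, 4, 4]}

def computational_variance(ys):
    """Slide formula: s^2 = (sum(Y^2) - (sum(Y))^2 / N) / (N - 1)."""
    n = len(ys)
    return (sum(y * y for y in ys) - sum(ys) ** 2 / n) / (n - 1)

for level, ys in groups.items():
    s2 = computational_variance(ys)
    # statistics.variance also divides by N - 1, so it serves as a cross-check
    assert abs(s2 - variance(ys)) < 1e-12
    print(level, s2)   # 1.5 for each group

avg_within = mean(computational_variance(ys) for ys in groups.values())
print("average within-group variance:", avg_within)   # 1.5
```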

GLM

We can also calculate the total variability in the data regardless of treatment group:

$$s^2 = \frac{\sum Y^2 - \frac{(\sum Y)^2}{N}}{N - 1} = \frac{302 - \frac{50^2}{10}}{9} = 5.78$$

The average variability of the two groups (1.5) is smaller than the total variability (5.78).
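The comparison between total and average within-group variability can be sketched the same way. Averaging the two group variances directly is only appropriate here because the groups have equal n, which this sketch assumes.

```python
from statistics import variance

a1 = [9, 7, 6, 7, 6]
a2 = [3, 1, 3, 4, 4]
all_scores = a1 + a2  # ignore group membership

total_var = variance(all_scores)                 # N - 1 = 9 in the denominator
avg_within = (variance(a1) + variance(a2)) / 2   # equal n, so a simple average

print(round(total_var, 2))   # 5.78
print(avg_within)            # 1.5
```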

Analysis: deviation approach

The total variability can be partitioned into between-group variability and error:

$$(Y_{ij} - GM) = (Y_{ij} - \bar{Y}_j) + (\bar{Y}_j - GM)$$

Analysis: deviation approach

If you ignore group membership and calculate the mean of all subjects, this is the grand mean, and total variability is the deviation of all subjects around this grand mean.

Remember that deviations around a mean always sum to zero, so each deviation is squared before summing.
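The deviation identity can be checked score by score. This sketch uses illustrative names. It splits each score's total deviation into its within-group and between-group parts. It also confirms that the raw deviations around the GM sum to zero, which is why they are squared.

```python
# Scores from the worked example
groups = {"a1": [9, 7, 6, 7, 6], "a2": [3, 1, 3, 4, 4]}
scores = [y for ys in groups.values() for y in ys]
GM = sum(scores) / len(scores)  # grand mean, ignoring group membership

rows = []
for level, ys in groups.items():
    group_mean = sum(ys) / len(ys)
    for y in ys:
        total_dev = y - GM               # (Y_ij - GM)
        within_dev = y - group_mean      # (Y_ij - Ybar_j)
        between_dev = group_mean - GM    # (Ybar_j - GM)
        rows.append((level, y, total_dev, within_dev, between_dev))
        print(level, y, total_dev, "=", within_dev, "+", between_dev)

# Raw deviations around the GM cancel out, which is why they are squared
print(sum(r[2] for r in rows))   # 0.0
```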

Analysis: deviation approach

$$\sum_i\sum_j (Y_{ij} - GM)^2 = n\sum_j (\bar{Y}_j - GM)^2 + \sum_i\sum_j (Y_{ij} - \bar{Y}_j)^2$$

$$SS_{total} = SS_{bg} + SS_{wg}$$

$$SS_{total} = SS_A + SS_{S/A}$$

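Analysis: deviation approach

A | Score | $(Y_{ij} - GM)^2$ | $(\bar{Y}_j - GM)^2$ | $(Y_{ij} - \bar{Y}_j)^2$
a1 | 9 | 16 | $(7 - 5)^2 = 4$ | 4
a1 | 7 | 4 | | 0
a1 | 6 | 1 | | 1
a1 | 7 | 4 | | 0
a1 | 6 | 1 | | 1
a2 | 3 | 4 | $(3 - 5)^2 = 4$ | 0
a2 | 1 | 16 | | 4
a2 | 3 | 4 | | 0
a2 | 4 | 1 | | 1
a2 | 4 | 1 | | 1

$\sum Y = 50$, $\sum Y^2 = 302$, $\bar{Y} = GM = 5$

$\sum(Y_{ij} - GM)^2 = 52$, $\sum(\bar{Y}_j - GM)^2 = 8$, $\sum(Y_{ij} - \bar{Y}_j)^2 = 12$

$n\sum(\bar{Y}_j - GM)^2 = 5(8) = 40$

$52 = 40 + 12$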
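Squaring and summing those deviations reproduces the sums of squares in the table. The sketch multiplies each squared mean deviation by that group's own size. With equal groups this is exactly the slide's $n\sum_j(\bar{Y}_j - GM)^2$.

```python
groups = {"a1": [9, 7, 6, 7, 6], "a2": [3, 1, 3, 4, 4]}
scores = [y for ys in groups.values() for y in ys]
GM = sum(scores) / len(scores)
means = {k: sum(v) / len(v) for k, v in groups.items()}

# SS_total: every score around the grand mean
ss_total = sum((y - GM) ** 2 for y in scores)
# SS_A: group size times squared deviation of each group mean from the GM
ss_A = sum(len(v) * (means[k] - GM) ** 2 for k, v in groups.items())
# SS_S/A: every score around its own group mean
ss_SA = sum((y - means[k]) ** 2 for k, v in groups.items() for y in v)

print(ss_total, ss_A, ss_SA)   # 52.0 40.0 12.0
```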

Analysis: deviation approach

Degrees of freedom

$df_{total} = N - 1 = 10 - 1 = 9$

$df_A = a - 1 = 2 - 1 = 1$

$df_{S/A} = a(S - 1) = a(n - 1) = an - a = N - a = 2(5) - 2 = 8$
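The degrees-of-freedom formulas only need the group sizes. `anova_df` below is a hypothetical helper name, and the sketch assumes a one-way between-subjects design.

```python
def anova_df(group_sizes):
    """Degrees of freedom for a one-way between-subjects design."""
    N = sum(group_sizes)      # total number of cases
    a = len(group_sizes)      # number of levels of A
    return {"total": N - 1, "A": a - 1, "S/A": N - a}

df = anova_df([5, 5])
print(df)   # {'total': 9, 'A': 1, 'S/A': 8}
```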

Analysis: deviation approach

Variance or mean square

$MS_{total} = 52/9 = 5.78$

$MS_A = 40/1 = 40$

$MS_{S/A} = 12/8 = 1.5$

Test statistic

$F = MS_A / MS_{S/A} = 40/1.5 = 26.67$

The critical value is looked up with $df_A$, $df_{S/A}$, and alpha. The test is always non-directional.
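Dividing each SS by its df and forming the ratio can be sketched as follows. The critical value 5.32 (α = .05, df = 1 and 8) is a standard F-table value, equivalently $t_{.025,8}^2 = 2.306^2$. The sketch hard-codes it because the Python standard library has no F distribution.

```python
ss = {"A": 40.0, "S/A": 12.0, "total": 52.0}
df = {"A": 1, "S/A": 8, "total": 9}

# Mean square = SS / df for each source
ms = {source: ss[source] / df[source] for source in ss}
F = ms["A"] / ms["S/A"]

# Critical F for alpha = .05 with (1, 8) df, taken from an F table (not computed here)
F_CRIT_05_1_8 = 5.32

print({k: round(v, 2) for k, v in ms.items()})  # {'A': 40.0, 'S/A': 1.5, 'total': 5.78}
print(round(F, 2), F > F_CRIT_05_1_8)           # 26.67 True
```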

Analysis: deviation approach

ANOVA summary table

Source SS df MS F

A 40 1 40 26.67

S/A 12 8 1.5

Total 52 9

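Analysis: computational approach

Equations

Under each part of the equations, you divide by the number of scores it took to get the number in the numerator. Here $a_j$ is the sum of the scores in group j and T is the grand total.

$$SS_Y = SS_T = \sum Y^2 - \frac{(\sum Y)^2}{N} = \sum Y^2 - \frac{T^2}{an}$$

$$SS_A = \frac{\sum a_j^2}{n} - \frac{T^2}{an}$$

$$SS_{S/A} = \sum Y^2 - \frac{\sum a_j^2}{n}$$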

Analysis: computational approach

Analysis of sample problem

$$SS_T = 302 - \frac{50^2}{10} = 52$$

$$SS_A = \frac{35^2 + 15^2}{5} - \frac{50^2}{10} = 40$$

$$SS_{S/A} = 302 - \frac{35^2 + 15^2}{5} = 12$$
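The computational formulas translate directly into code. In this sketch, `computational_ss` is a hypothetical helper name. Each squared total is divided by the number of scores that produced it, following the rule stated above. Dividing each group's squared total by `len(ys)` reduces to the slide's $\sum a_j^2/n$ when groups are equal.

```python
def computational_ss(groups):
    """SS_T, SS_A and SS_S/A from raw totals (one-way between-subjects design)."""
    scores = [y for ys in groups for y in ys]
    N = len(scores)
    T = sum(scores)                                   # grand total
    sum_y2 = sum(y * y for y in scores)               # sum of squared scores
    # Each group total squared, divided by the number of scores in that group
    sum_aj2_over_n = sum(sum(ys) ** 2 / len(ys) for ys in groups)
    correction = T ** 2 / N                           # T^2 / (an)
    return {
        "T": sum_y2 - correction,
        "A": sum_aj2_over_n - correction,
        "S/A": sum_y2 - sum_aj2_over_n,
    }

result = computational_ss([[9, 7, 6, 7, 6], [3, 1, 3, 4, 4]])
print(result)   # {'T': 52.0, 'A': 40.0, 'S/A': 12.0}
```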

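Analysis: regression approach

Levels of A | Cases | Y | X | YX
a1 | S1 | 9 | 1 | 9
a1 | S2 | 7 | 1 | 7
a1 | S3 | 6 | 1 | 6
a1 | S4 | 7 | 1 | 7
a1 | S5 | 6 | 1 | 6
a2 | S6 | 3 | -1 | -3
a2 | S7 | 1 | -1 | -1
a2 | S8 | 3 | -1 | -3
a2 | S9 | 4 | -1 | -4
a2 | S10 | 4 | -1 | -4
Sum | | 50 | 0 | 20
Squares summed | | 302 | 10 |
N | | 10 | |
Mean | | 5 | |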
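The coded data table can be rebuilt in a few lines. The sketch uses the slide's coding, X = 1 for a1 and X = -1 for a2 (sometimes called effect coding), and recomputes the column sums and sums of squares.

```python
# Regression coding of the two-level factor: X = 1 for a1, X = -1 for a2
rows = [("a1", f"S{i}", y, 1) for i, y in enumerate([9, 7, 6, 7, 6], start=1)]
rows += [("a2", f"S{i}", y, -1) for i, y in enumerate([3, 1, 3, 4, 4], start=6)]

Y = [r[2] for r in rows]
X = [r[3] for r in rows]
YX = [y * x for y, x in zip(Y, X)]

for level, case, y, x in rows:
    print(level, case, y, x, y * x)
print("Sum", sum(Y), sum(X), sum(YX))                                  # Sum 50 0 20
print("Squares summed", sum(y * y for y in Y), sum(x * x for x in X))  # Squares summed 302 10
print("N", len(Y), "Mean", sum(Y) / len(Y))                            # N 10 Mean 5.0
```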

Analysis: regression approach

$Y = a + bX + e$

$e = Y - Y'$

Analysis: regression approach

Sums of squares

$$SS(Y) = \sum Y^2 - \frac{(\sum Y)^2}{N} = 302 - \frac{50^2}{10} = 52$$

$$SS(X) = \sum X^2 - \frac{(\sum X)^2}{N} = 10 - \frac{0^2}{10} = 10$$

$$SP(YX) = \sum YX - \frac{(\sum Y)(\sum X)}{N} = 20 - \frac{(50)(0)}{10} = 20$$
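The three sums of squares and cross-products follow the same "raw sum minus correction" pattern as the computational approach. A minimal sketch, using the coded data from the regression table:

```python
Y = [9, 7, 6, 7, 6, 3, 1, 3, 4, 4]
X = [1] * 5 + [-1] * 5   # a1 coded 1, a2 coded -1
N = len(Y)

ss_y = sum(y * y for y in Y) - sum(Y) ** 2 / N                 # SS(Y)
ss_x = sum(x * x for x in X) - sum(X) ** 2 / N                 # SS(X)
sp_yx = sum(y * x for y, x in zip(Y, X)) - sum(Y) * sum(X) / N  # SP(YX)

print(ss_y, ss_x, sp_yx)   # 52.0 10.0 20.0
```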

Analysis: regression approach

$$SS(\text{Total}) = SS(Y) = 52$$

$$SS(\text{regression}) = \frac{[SP(YX)]^2}{SS(X)} = \frac{20^2}{10} = 40$$

$$SS(\text{residual}) = SS(\text{total}) - SS(\text{regression}) = 52 - 40 = 12$$

Slope

$$b = \frac{\sum YX - \frac{(\sum Y)(\sum X)}{N}}{\sum X^2 - \frac{(\sum X)^2}{N}} = \frac{SP(YX)}{SS(X)} = \frac{20}{10} = 2$$

Intercept

$$a = \bar{Y} - b\bar{X} = 5 - 2(0) = 5$$
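Putting those quantities together gives the regression partition and the regression coefficients. The sketch recomputes everything from the raw Y and X lists so that it runs on its own.

```python
# Coded data from the regression table (X = 1 for a1, -1 for a2)
Y = [9, 7, 6, 7, 6, 3, 1, 3, 4, 4]
X = [1] * 5 + [-1] * 5
N = len(Y)

ss_y = sum(y * y for y in Y) - sum(Y) ** 2 / N
ss_x = sum(x * x for x in X) - sum(X) ** 2 / N
sp_yx = sum(y * x for y, x in zip(Y, X)) - sum(Y) * sum(X) / N

ss_regression = sp_yx ** 2 / ss_x    # [SP(YX)]^2 / SS(X)
ss_residual = ss_y - ss_regression   # SS(total) - SS(regression)

b = sp_yx / ss_x                     # slope
a = sum(Y) / N - b * sum(X) / N      # intercept: Ybar - b * Xbar

print(ss_regression, ss_residual, b, a)   # 40.0 12.0 2.0 5.0
```

The regression SS and residual SS match $SS_A$ and $SS_{S/A}$ from the deviation approach, and the intercept equals the grand mean.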

Analysis: regression approach

$$Y' = a + bX$$

For $a_1$: $Y' = 5 + 2(1) = 7$

For $a_2$: $Y' = 5 + 2(-1) = 3$
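The predicted values and residuals ($e = Y - Y'$) can be sketched as follows. With this coding, each case's predicted value is its group mean. The residuals turn out to be exactly the error values that were added when the data were generated.

```python
a, b = 5.0, 2.0                     # intercept and slope from the slide
Y = [9, 7, 6, 7, 6, 3, 1, 3, 4, 4]
X = [1] * 5 + [-1] * 5

Y_pred = [a + b * x for x in X]             # Y' = a + bX
e = [y - yp for y, yp in zip(Y, Y_pred)]    # e = Y - Y'

print(sorted(set(Y_pred)))          # [3.0, 7.0], the two group means
print(sum(r * r for r in e))        # 12.0, the residual SS (= SS_S/A)
```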

Analysis: regression approach

Degrees of freedom

$df_{(reg.)}$ = number of predictors

$df_{(total)}$ = number of cases - 1

$df_{(resid.)} = df_{(total)} - df_{(reg.)} = 9 - 1 = 8$
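With those df, the regression F can be formed exactly like the ANOVA F. A sketch using the regression SS values from the slides, hard-coded for brevity:

```python
ss_regression, ss_residual = 40.0, 12.0   # regression sums of squares from the slides
n_cases, n_predictors = 10, 1

df_reg = n_predictors
df_total = n_cases - 1
df_resid = df_total - df_reg

F = (ss_regression / df_reg) / (ss_residual / df_resid)
print(df_reg, df_total, df_resid)   # 1 9 8
print(round(F, 2))                  # 26.67, identical to the ANOVA F
```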

Statistical Inference and the F-test

Any type of measurement will include a certain amount of random variability.

In the F-test this random variability is seen in two places: random variation of each person around their group mean, and of each group mean around the grand mean.

The effect of the IV is seen as adding further variation of the group means around their grand mean, so that the F-test is really:

$$F = \frac{(\text{effect} + \text{error})_{BG}}{(\text{error})_{WG}}$$

Statistical Inference and the F-test

If there is no effect of the IV, then the equation breaks down to just:

$$F = \frac{(\text{error})_{BG}}{(\text{error})_{WG}} \approx 1$$

which means that any differences between the groups are due to chance alone.
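The "no effect" case can be explored by simulation. The sketch makes these assumptions:

- a hypothetical helper `one_way_F`;
- normal errors with SD 1;
- two groups of n = 5;
- a fixed seed.

It generates many data sets in which A has no effect and looks at the resulting F-ratios. One caveat on the approximation above: the long-run average of F under no effect is $df_{error}/(df_{error} - 2)$, here 8/6 or about 1.33. This approaches 1 as the error df grow.

```python
import random
from statistics import mean

def one_way_F(groups):
    """F = MS_A / MS_S/A for a one-way between-subjects design."""
    scores = [y for g in groups for y in g]
    N, a = len(scores), len(groups)
    gm = sum(scores) / N
    means = [sum(g) / len(g) for g in groups]
    ss_a = sum(len(g) * (m - gm) ** 2 for g, m in zip(groups, means))
    ss_sa = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    return (ss_a / (a - 1)) / (ss_sa / (N - a))

rng = random.Random(420)      # fixed seed so reruns give the same numbers
GM, n, reps = 5.0, 5, 20_000  # two groups of 5, as in the worked example

# No effect of A: every score is GM plus normal error (SD = 1), whatever its group
null_Fs = [
    one_way_F([[GM + rng.gauss(0, 1) for _ in range(n)] for _ in range(2)])
    for _ in range(reps)
]

share_above_crit = sum(F > 5.32 for F in null_Fs) / reps
print(round(mean(null_Fs), 2))     # near 8 / 6 = 1.33, the mean of F(1, 8)
print(round(share_above_crit, 3))  # near .05, since 5.32 is the .05 critical value
```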

Statistical Inference and the F-test

The F-test is based on the idea that between-groups variation due to an effect causes the F-ratio to be larger than 1.

Like the t-distribution, there is not a single F-distribution but a family of distributions. The F-distribution is determined by both the degrees of freedom due to the effect and the degrees of freedom due to the error.

Statistical Inference and the F-test

Assumptions of the analysis

Robust: a robust test is one that is said to be fairly accurate even if the assumptions of the analysis are not met. ANOVA is said to be a fairly robust analysis. With that said:

Assumptions of the analysis

Normality of the sampling distribution of means

This assumes that the sampling distribution of the mean at each level of the IV is relatively normal.

The assumption is about the sampling distribution, not the scores themselves.

This assumption is said to be met when there are relatively equal samples in each cell and the degrees of freedom for error are 20 or more.

Assumptions of the analysis

Normality of the sampling distribution of means

If the degrees of freedom for error are small, then:

The individual distributions should be checked for skewness and kurtosis (see chapter 2) and for the presence of outliers.

If the data do not meet the distributional assumption, then transformations will need to be done.
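With only 8 error df, the worked example falls below the guideline of 20, so its groups are candidates for this check. The sketch below computes simple moment-based skewness and excess kurtosis for each group. Textbooks and statistical packages often use bias-adjusted versions of these formulas, so their values will differ somewhat for small samples. `moments` is a hypothetical helper name.

```python
def moments(ys):
    """Moment-based skewness (g1) and excess kurtosis (g2) of a list of scores.

    Simple population-moment formulas; packages often apply small-sample
    bias adjustments, so their reported values can differ."""
    n = len(ys)
    m = sum(ys) / n
    m2 = sum((y - m) ** 2 for y in ys) / n
    m3 = sum((y - m) ** 3 for y in ys) / n
    m4 = sum((y - m) ** 4 for y in ys) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3

groups = {"a1": [9, 7, 6, 7, 6], "a2": [3, 1, 3, 4, 4]}
for level, ys in groups.items():
    g1, g2 = moments(ys)
    print(level, "skewness", round(g1, 2), "kurtosis", round(g2, 2))
```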

Assumptions of the analysis

Independence of errors: the size of the error for one case is not related to the size of the error in another case.

This is violated if a subject is used more than once (the repeated-measures case) and the data are still analyzed with a between-subjects ANOVA.

This is also violated if subjects are run in groups, especially if the groups are pre-existing.

It can also happen if similar people exist within a randomized experiment (e.g. age groups); this can be controlled by using that variable as a blocking variable.

Assumptions of the analysis

Homogeneity of Variance: since we are assuming that each sample comes from the same population and is only affected (or not) by the IV, we assume that each group has roughly the same variance.

Each sample variance should reflect the population variance, so they should be equal to each other.

Since we use each sample variance to estimate an average within-cell variance, they need to be roughly equal.

Assumptions of the analysis

Homogeneity of Variance

An easy test to assess this assumption is:

$$F_{max} = \frac{s^2_{largest}}{s^2_{smallest}}$$

If $F_{max} < 10$, then the variances are roughly homogeneous.
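The $F_{max}$ check is a one-line ratio once the group variances are known. A sketch, assuming sample variances with N - 1 denominators (`f_max` is a hypothetical name):

```python
from statistics import variance

def f_max(groups):
    """Ratio of the largest to the smallest sample variance (N - 1 denominators)."""
    variances = [variance(g) for g in groups]
    return max(variances) / min(variances)

ratio = f_max([[9, 7, 6, 7, 6], [3, 1, 3, 4, 4]])
print(ratio, ratio < 10)                   # 1.0 True
print(f_max([[1, 2, 3], [10, 20, 30]]))    # 100.0, made-up groups with very unequal spread
```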

Assumptions of the analysis

Absence of outliers

Outliers: data points that don't really belong with the others.

This can be judged either conceptually (you wanted to study only women and you have data from a man) or statistically (a data point does not cluster with the other data points and has undue influence on the distribution).

This relates back to normality.


S-ar putea să vă placă și

- Stat Slides 5Document30 paginiStat Slides 5Naqeeb Ullah KhanÎncă nu există evaluări

- Lesson 6.4 Simple Analysis of Variance FinDocument19 paginiLesson 6.4 Simple Analysis of Variance FinJeline Flor EugenioÎncă nu există evaluări

- Analysis of Variance: Session 5Document25 paginiAnalysis of Variance: Session 5keziaÎncă nu există evaluări

- Comparing Several Means: AnovaDocument52 paginiComparing Several Means: Anovapramit04Încă nu există evaluări

- AnovaDocument105 paginiAnovaasdasdas asdasdasdsadsasddssaÎncă nu există evaluări

- BivariateDocument54 paginiBivariateAnkit KapoorÎncă nu există evaluări

- Lecture 2 EPS 550 SP 2010Document30 paginiLecture 2 EPS 550 SP 2010Leslie SmithÎncă nu există evaluări

- 2.ANOVA-solution - Solution LaboratoryDocument13 pagini2.ANOVA-solution - Solution LaboratoryAriadna AbadÎncă nu există evaluări

- Prepared By: Rex Mabanta Ralph Stephen Bartolo Reynante LumawanDocument43 paginiPrepared By: Rex Mabanta Ralph Stephen Bartolo Reynante LumawanRicardo VelozÎncă nu există evaluări

- MODULE 9 Anova BSADocument10 paginiMODULE 9 Anova BSAJevelyn Mendoza FarroÎncă nu există evaluări

- Examples of Continuous Probability Distributions:: The Normal and Standard NormalDocument57 paginiExamples of Continuous Probability Distributions:: The Normal and Standard NormalPawan JajuÎncă nu există evaluări

- Examples of Continuous Probability Distributions:: The Normal and Standard NormalDocument57 paginiExamples of Continuous Probability Distributions:: The Normal and Standard NormalAkshay VetalÎncă nu există evaluări

- Session 16,17 and 18Document7 paginiSession 16,17 and 18jyotisagar talukdarÎncă nu există evaluări

- Measures of Central TendencyDocument29 paginiMeasures of Central TendencyShafiq Ur RahmanÎncă nu există evaluări

- Pertemuan 3 AnovaDocument60 paginiPertemuan 3 AnovaKerin ArdyÎncă nu există evaluări

- Chi-Square, F-Tests & Analysis of Variance (Anova)Document37 paginiChi-Square, F-Tests & Analysis of Variance (Anova)MohamedKijazyÎncă nu există evaluări

- Anova Slides PresentationDocument29 paginiAnova Slides PresentationCarlos Samaniego100% (1)

- Anova - One Way Sem 1 20142015 DKDocument8 paginiAnova - One Way Sem 1 20142015 DKAnonymous jxnjKLÎncă nu există evaluări

- ANOVA Unit3 BBA504ADocument9 paginiANOVA Unit3 BBA504ADr. Meghdoot GhoshÎncă nu există evaluări

- Anova FinalDocument22 paginiAnova FinalHezekiah BatoonÎncă nu există evaluări

- Tatang A Gumanti 2010: Pengenalan Alat-Alat Uji Statistik Dalam Penelitian SosialDocument15 paginiTatang A Gumanti 2010: Pengenalan Alat-Alat Uji Statistik Dalam Penelitian SosialPrima JoeÎncă nu există evaluări

- Multiple Reg LudlowDocument54 paginiMultiple Reg LudlowchompoonootÎncă nu există evaluări

- Examples AnovaDocument13 paginiExamples AnovaMamunoor RashidÎncă nu există evaluări

- Fundamentals of StatisticsDocument6 paginiFundamentals of StatisticsLucky GojeÎncă nu există evaluări

- Anova: Analysis of Variation: Math 243 Lecture R. PruimDocument30 paginiAnova: Analysis of Variation: Math 243 Lecture R. PruimMahender KumarÎncă nu există evaluări

- Six Sigma - Live Lecture 14Document66 paginiSix Sigma - Live Lecture 14Vishwa ChethanÎncă nu există evaluări

- S11 SPDocument15 paginiS11 SPSaagar KarandeÎncă nu există evaluări

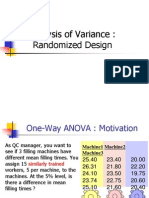

- Analysis of Variance: Randomized DesignDocument19 paginiAnalysis of Variance: Randomized DesignSylvia CheungÎncă nu există evaluări

- Test ReliabilityDocument41 paginiTest ReliabilityMacky DacilloÎncă nu există evaluări

- Aem214 CH-3CDocument6 paginiAem214 CH-3CLucky GojeÎncă nu există evaluări

- Mann WHitney U TestDocument35 paginiMann WHitney U Testeric huabÎncă nu există evaluări

- Anova Non Parametric TestDocument18 paginiAnova Non Parametric TestGerlyn MortegaÎncă nu există evaluări

- Statistics TA CHP 13 Experimental Design and ANOVA-2Document62 paginiStatistics TA CHP 13 Experimental Design and ANOVA-2Jennifer LimerthaÎncă nu există evaluări

- Analysis of Variance & CorrelationDocument31 paginiAnalysis of Variance & Correlationjohn erispeÎncă nu există evaluări

- Anova: Analysis of Variation: Math 243 Lecture R. PruimDocument30 paginiAnova: Analysis of Variation: Math 243 Lecture R. PruimLakshmi BurraÎncă nu există evaluări

- ch04 2013Document21 paginich04 2013angelli45Încă nu există evaluări

- Theory & Problems of Probability & Statistics Murray R. SpiegelDocument89 paginiTheory & Problems of Probability & Statistics Murray R. SpiegelPriya SharmaÎncă nu există evaluări

- Hypothesis Testing and Comparison of Two PopulationsDocument27 paginiHypothesis Testing and Comparison of Two Populationsmilahanif8Încă nu există evaluări

- Comparing Two GroupsDocument25 paginiComparing Two GroupsJosh PotashÎncă nu există evaluări

- One Way Analysis of Variance (ANOVA) : "Slide 43-45)Document15 paginiOne Way Analysis of Variance (ANOVA) : "Slide 43-45)Dayangku AyusapuraÎncă nu există evaluări

- AnnovaDocument19 paginiAnnovaLabiz Saroni Zida0% (1)

- Measure of Central TendencyDocument40 paginiMeasure of Central TendencybmÎncă nu există evaluări

- 1 Assignment Solution SubmisionDocument3 pagini1 Assignment Solution SubmisionSanjay StÎncă nu există evaluări

- Quantitative Methods For Business 18-SolutionsDocument15 paginiQuantitative Methods For Business 18-SolutionsabhirejanilÎncă nu există evaluări

- Chi Square Calculation MethodDocument10 paginiChi Square Calculation Methodananthakumar100% (1)

- Edme7 One-Factor TutDocument29 paginiEdme7 One-Factor TutDANIEL-LABJMEÎncă nu există evaluări

- Analysis of Variance (Anova)Document29 paginiAnalysis of Variance (Anova)aksabhishek88Încă nu există evaluări

- Econtentfandttest 200515034154Document28 paginiEcontentfandttest 200515034154Anjum MehtabÎncă nu există evaluări

- Watson Introduccion A La Econometria PDFDocument253 paginiWatson Introduccion A La Econometria PDF131270Încă nu există evaluări

- 5 One-Way ANOVA (Review) and Experimental DesignDocument15 pagini5 One-Way ANOVA (Review) and Experimental DesignjuntujuntuÎncă nu există evaluări

- Basic Business Statistics: Analysis of VarianceDocument85 paginiBasic Business Statistics: Analysis of VarianceDavid Robayo MartínezÎncă nu există evaluări

- Standard Deviation Made EasyDocument8 paginiStandard Deviation Made EasyShe LagundinoÎncă nu există evaluări

- Business Research Methods: Bivariate Analysis - Tests of DifferencesDocument56 paginiBusiness Research Methods: Bivariate Analysis - Tests of DifferencesLina Haytham HalasaÎncă nu există evaluări

- Examples of Continuous Probability Distributions:: The Normal and Standard NormalDocument57 paginiExamples of Continuous Probability Distributions:: The Normal and Standard NormalkethavarapuramjiÎncă nu există evaluări

- Sample Size for Analytical Surveys, Using a Pretest-Posttest-Comparison-Group DesignDe la EverandSample Size for Analytical Surveys, Using a Pretest-Posttest-Comparison-Group DesignÎncă nu există evaluări

- Statistics: a QuickStudy Laminated Reference GuideDe la EverandStatistics: a QuickStudy Laminated Reference GuideÎncă nu există evaluări

- Quantitative Method-Breviary - SPSS: A problem-oriented reference for market researchersDe la EverandQuantitative Method-Breviary - SPSS: A problem-oriented reference for market researchersÎncă nu există evaluări

- Age of TalentDocument28 paginiAge of TalentSri LakshmiÎncă nu există evaluări

- AP Suppliers in R12Document51 paginiAP Suppliers in R12NagarajuÎncă nu există evaluări

- Coa Module 1Document79 paginiCoa Module 1B G JEEVANÎncă nu există evaluări

- TestNG NotesDocument23 paginiTestNG Notesvishal sonwaneÎncă nu există evaluări

- Manual de Martillo HidraulicoDocument146 paginiManual de Martillo HidraulicoJoshua RobinsonÎncă nu există evaluări

- CV of Monowar HussainDocument3 paginiCV of Monowar Hussainরেজাউল হকÎncă nu există evaluări

- Designing and Maitenance of Control PanelDocument40 paginiDesigning and Maitenance of Control PanelVenomÎncă nu există evaluări

- C4H260 Participants HandbookDocument187 paginiC4H260 Participants HandbookRavi Dutt RamanujapuÎncă nu există evaluări

- Deep Learning For Human Beings v2Document110 paginiDeep Learning For Human Beings v2Anya NieveÎncă nu există evaluări

- BAS1303 White Paper Interface Comparsion eDocument5 paginiBAS1303 White Paper Interface Comparsion eIndraÎncă nu există evaluări

- CASE Tools Tutorial CASE Tools Tutorial CASE Tools Tutorial CASE Tools TutorialDocument52 paginiCASE Tools Tutorial CASE Tools Tutorial CASE Tools Tutorial CASE Tools TutorialAleeza BukhariÎncă nu există evaluări

- How To Add Fonts To Xdo FileDocument5 paginiHow To Add Fonts To Xdo Fileseethal_2Încă nu există evaluări

- Mutual Aid Pact Internally Provided Backup Empty Shell Recovery Operations Center ConsiderationsDocument2 paginiMutual Aid Pact Internally Provided Backup Empty Shell Recovery Operations Center ConsiderationsRedÎncă nu există evaluări

- Muscle and Pain Stimulators: Price ListDocument1 paginăMuscle and Pain Stimulators: Price ListSanthosh KumarÎncă nu există evaluări

- GSM DG11 4 5Document833 paginiGSM DG11 4 5gustavomoritz.aircom100% (2)

- Smart Parking and Reservation System For Qr-Code Based Car ParkDocument6 paginiSmart Parking and Reservation System For Qr-Code Based Car ParkMinal ShahakarÎncă nu există evaluări

- Compagne Di Collegio - I Racconti Erotici Migliori11Document169 paginiCompagne Di Collegio - I Racconti Erotici Migliori11kyleÎncă nu există evaluări

- S23 Autosampler ConfiguratonDocument10 paginiS23 Autosampler ConfiguratonUswatul HasanahÎncă nu există evaluări

- IBMSDocument24 paginiIBMSMagesh SubramanianÎncă nu există evaluări

- 6 - 8 October 2020: Bahrain International Exhibition & Convention CentreDocument9 pagini6 - 8 October 2020: Bahrain International Exhibition & Convention CentreSamer K.Al-khaldiÎncă nu există evaluări

- CSE 3D Printing ReportDocument18 paginiCSE 3D Printing ReportAksh RawalÎncă nu există evaluări

- GT-POWER Engine Simulation Software: HighlightsDocument2 paginiGT-POWER Engine Simulation Software: HighlightsIrfan ShaikhÎncă nu există evaluări

- Burning Arduino Bootloader With AVR USBASP PDFDocument6 paginiBurning Arduino Bootloader With AVR USBASP PDFxem3Încă nu există evaluări

- AB350M Pro4Document72 paginiAB350M Pro4DanReteganÎncă nu există evaluări

- OTM INtegration ShippingDocument21 paginiOTM INtegration Shippingkkathiresan4998100% (1)

- 4004 Installation & Operating Manual Rev CDocument36 pagini4004 Installation & Operating Manual Rev Cmannyb2000Încă nu există evaluări

- Booking of Expenses To Correct Account HeadDocument2 paginiBooking of Expenses To Correct Account HeadVinay SinghÎncă nu există evaluări

- IAL Mathematics Sample Assessment MaterialDocument460 paginiIAL Mathematics Sample Assessment MaterialMohamed Naaif100% (3)

- MB Gusset DesignDocument172 paginiMB Gusset DesignRamakrishnan Sakthivel100% (1)

- Unit 7 EditedDocument32 paginiUnit 7 EditedtadyÎncă nu există evaluări