
Reinforcement Learning

By: Chandra Prakash, IIITM Gwalior

Outline

Introduction

Elements of reinforcement learning

Reinforcement Learning Problem

Problem solving methods for RL


Introduction

Machine learning: Definition

Machine learning is a scientific discipline concerned with the design and development of algorithms that allow computers to learn from data, such as sensor data or databases.

A major focus of machine learning research is to automatically learn to recognize complex patterns and make intelligent decisions based on data.

Types of machine learning:

With respect to the type of feedback given to the learner:

Supervised learning: task driven (classification)

Unsupervised learning: data driven (clustering)

Reinforcement learning

Close to human learning.

The algorithm learns a policy of how to act in a given environment.

Every action has some impact on the environment, and the environment provides rewards that guide the learning algorithm.

Supervised Learning vs. Reinforcement Learning

Supervised Learning

Step: 1

Teacher: Does picture 1 show a car or a flower?

Learner: A flower.

Teacher: No, it's a car.

Step: 2

Teacher: Does picture 2 show a car or a flower?

Learner: A car.

Teacher: Yes, it's a car.

Step: 3 ....

Supervised Learning vs. Reinforcement Learning (cont.)

Reinforcement Learning

Step: 1

World: You are in state 9. Choose action A or C.

Learner: Action A.

World: Your reward is 100.

Step: 2

World: You are in state 32. Choose action B or E.

Learner: Action B.

World: Your reward is 50.

Step: 3 ....


Introduction (Cont..)

Meaning of reinforcement: the occurrence of an event, in the proper relation to a response, that tends to increase the probability that the response will occur again in the same situation.

Reinforcement learning is the problem faced by an agent that learns behavior through trial-and-error interactions with a dynamic environment.

Reinforcement learning is learning how to act in order to maximize a numerical reward.

Introduction (Cont..)

Reinforcement learning is not a type of neural network, nor is it an alternative to neural networks. Rather, it is an orthogonal approach to machine learning.

Reinforcement learning emphasizes learning from feedback that evaluates the learner's performance without providing standards of correctness in the form of behavioral targets.

Example: learning to ride a bicycle

Elements of reinforcement learning

[Figure: diagram with the labels Agent, Environment, State, Reward, Action, and Policy.]

Agent: an intelligent program

Environment: the external conditions

Policy:

Defines the agent's behavior at a given time

A mapping from states to actions

Can be a lookup table or a simple function

Elements of reinforcement learning

Reward function:

Defines the goal in an RL problem

The policy is altered to achieve this goal

Value function:

The reward function indicates what is good in an immediate sense, while a value function specifies what is good in the long run.

The value of a state is the total amount of reward an agent can expect to accumulate over the future, starting from that state.

Model of the environment:

Predicts (mimics) the behavior of the environment

Used for planning: given the current state and action, it predicts the resultant next state and next reward.

Agent-Environment Interface

Steps for Reinforcement Learning

1. The agent observes an input state.

2. An action is determined by a decision-making function (the policy).

3. The action is performed.

4. The agent receives a scalar reward or reinforcement from the environment.

5. Information about the reward given for that state/action pair is recorded.
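The five steps above can be sketched as a simple interaction loop. This is a minimal illustration only: `TwoStateEnv`, `run`, and `random_policy` are hypothetical names invented for this sketch, and the toy reward rule is an assumption, not something taken from the slides.

```python
import random


class TwoStateEnv:
    """Hypothetical toy environment with two states (0, 1) and two actions (0, 1)."""

    def __init__(self):
        self.state = 0

    def step(self, action):
        # Assumed reward rule: only action 1 taken in state 0 pays off.
        reward = 1.0 if (self.state == 0 and action == 1) else 0.0
        self.state = 1 - self.state  # deterministic flip between the two states
        return self.state, reward


def random_policy(state, rng):
    """A policy that ignores the state and picks an action uniformly."""
    return rng.choice([0, 1])


def run(env, policy, n_steps, seed=0):
    rng = random.Random(seed)
    history = []                 # recorded (state, action, reward) triples
    state = env.state            # step 1: observe the input state
    for _ in range(n_steps):
        action = policy(state, rng)               # step 2: policy picks an action
        next_state, reward = env.step(action)     # steps 3-4: act, receive reward
        history.append((state, action, reward))   # step 5: record the outcome
        state = next_state                        # observe the next state
    return history
```

Calling `run(TwoStateEnv(), random_policy, 10)` returns the recorded triples that a learning method would then use to improve the policy.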


Salient Features of Reinforcement Learning

A set of problems rather than a set of techniques

The task is specified without specifying how it is to be achieved.

RL from the tool point of view:

RL is training by rewards and punishments.

A training tool for computer learning.

RL from the learning agent's point of view:

RL is learning from trial and error with the world.

E.g., how much reward will I get if I do this?

Reinforcement Learning (Cont..)

Reinforcement Learning uses Evaluative Feedback

Purely evaluative feedback

Indicates how good the action taken is.

Does not tell whether it is the best or the worst action possible.

Basis of methods for function optimization.

Purely instructive feedback

Indicates the correct action to take, independently of the action actually taken.

E.g., supervised learning

Associative and nonassociative tasks:

Associative & Non Associative Tasks

Associative:

Situation dependent

A mapping from situations to the actions that are best in those situations

Nonassociative:

Situation independent

No need to associate different actions with different situations.

The learner either tries to find a single best action when the task is stationary, or tries to track the best action as it changes over time when the task is nonstationary.

Reinforcement Learning (Cont..)

Exploration and exploitation

Greedy action: the action with the greatest estimated value.

Choosing the greedy action is a case of exploitation.

Choosing a non-greedy action is a case of exploration, as it enables us to improve the estimate of the non-greedy action's value.

N-armed bandit problem:

We have to choose repeatedly from n different options or actions.

We want to choose the one with the maximum reward.

Bandit Problem

One bandit, several arms

Pull arms sequentially so as to maximize the total expected reward

It is nonassociative and evaluative

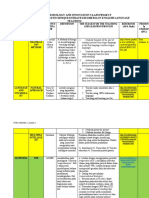

Action Selection Policies

Greedy action: the action with the highest estimated reward.

ε-greedy

Most of the time the greedy action is chosen.

Every once in a while, with a small probability ε, an action is selected at random. The action is selected uniformly, independent of the action-value estimates.

ε-soft: the best action is selected with probability 1 − ε, and the rest of the time a random action is chosen uniformly.

ε-Greedy Action Selection Method:

Let $a_t^*$ be the greedy action at time $t$ and $Q_t(a)$ the value of action $a$ at time $t$.

Greedy action selection:

$$a_t = a_t^* = \arg\max_a Q_t(a)$$

ε-greedy action selection:

$$a_t = \begin{cases} a_t^* & \text{with probability } 1 - \varepsilon \\ \text{random action} & \text{with probability } \varepsilon \end{cases}$$
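The ε-greedy rule can be sketched in a few lines of Python. The function name `epsilon_greedy` and the list-of-estimates representation are assumptions made for this illustration; ties between equal estimates are broken in favor of the lowest index, which is one choice among several.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index: uniformly random with probability epsilon,
    otherwise the index of the largest value estimate."""
    if rng.random() < epsilon:
        # Explore: every action is equally likely, regardless of its estimate.
        return rng.randrange(len(q_values))
    # Exploit: pick the greedy action (first index on ties).
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

With `epsilon=0.0` this reduces to plain greedy selection; with `epsilon=1.0` every choice is uniformly random.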

Action Selection Policies (Cont)

Softmax

Drawback of ε-greedy and ε-soft: random actions are selected uniformly.

Softmax remedies this by:

Assigning a weight to each action, according to its action-value estimate.

A random action is selected with regard to the weight associated with each action.

The worst actions are unlikely to be chosen.

This is a good approach to take where the worst actions are very unfavorable.

Softmax Action Selection (cont.)

Problem with ε-greedy: it neglects action values when exploring.

Softmax idea: grade action probabilities by estimated values.

Gibbs, or Boltzmann, action selection, or exponential weights:

$$P(a_t = a) = \frac{e^{Q_t(a)/\tau}}{\sum_{b=1}^{n} e^{Q_t(b)/\tau}}$$

where τ is the computational temperature.

As τ → 0, softmax action selection becomes the same as greedy action selection.
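A minimal sketch of Gibbs/Boltzmann action selection follows. The names `softmax_probs` and `softmax_action` and the parameter `tau` (the temperature) are assumptions for this example; subtracting the maximum estimate before exponentiating is a standard numerical-stability step that leaves the probabilities unchanged.

```python
import math
import random


def softmax_probs(q_values, tau):
    """Boltzmann probabilities for each action at temperature tau (> 0)."""
    m = max(q_values)  # shift by the max so exp() never overflows
    weights = [math.exp((q - m) / tau) for q in q_values]
    total = sum(weights)
    return [w / total for w in weights]


def softmax_action(q_values, tau, rng=random):
    """Sample an action index in proportion to its Boltzmann probability."""
    probs = softmax_probs(q_values, tau)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]
```

A high temperature makes the actions nearly equiprobable, while a temperature close to zero concentrates almost all probability on the greedy action.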

Incremental Implementation

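Sample average:

$$Q_t(a) = \frac{r_1 + r_2 + \cdots + r_{k_a}}{k_a}$$

where $k_a$ is the number of times action $a$ has been chosen and $r_1, \dots, r_{k_a}$ are the rewards it returned.

Incremental computation:

$$Q_{k+1} = Q_k + \frac{1}{k+1}\left[r_{k+1} - Q_k\right]$$

Common update rule form:

NewEstimate = OldEstimate + StepSize [Target − OldEstimate]

The expression [Target − OldEstimate] is an error in the estimate.

It is reduced by taking a step toward the target.

In processing the (k+1)st reward for action a, the step-size parameter is 1/(k+1).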
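The incremental form of the sample average can be checked with a short sketch. The function name `incremental_mean` is an assumption for this example; it applies NewEstimate = OldEstimate + StepSize [Target − OldEstimate] with a step size of 1/(k+1) for the (k+1)st reward, so no list of past rewards has to be stored.

```python
def incremental_mean(rewards):
    """Running average of rewards using the incremental update rule."""
    estimate = 0.0
    for k, reward in enumerate(rewards):
        step_size = 1.0 / (k + 1)   # step size for the (k+1)-st reward
        # Move the old estimate a fraction of the way toward the new target.
        estimate += step_size * (reward - estimate)
    return estimate
```

For any reward sequence the result matches the ordinary arithmetic mean, up to floating-point rounding.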

Some terms in Reinforcement Learning

The agent learns a policy:

Policy at step t, $\pi_t$: a mapping from states to action probabilities:

$$\pi_t(s, a) = \text{probability that } a_t = a \text{ when } s_t = s$$

Agents change their policy with experience.

Objective: get as much reward as possible over the long run.

Goals and rewards

A goal should specify what we want to achieve, not how we want to achieve it.

Some terms in RL (Cont)

Returns

Rewards in the long term

Episodes: subsequences of agent-environment interaction, e.g., plays of a game, trips through a maze.

Discounted return

The geometrically discounted model of return:

$$R_t = r_{t+1} + \gamma r_{t+2} + \gamma^2 r_{t+3} + \cdots = \sum_{k=0}^{\infty} \gamma^k r_{t+k+1}, \qquad 0 \le \gamma \le 1$$

Used to:

Determine the present value of future rewards

Give more weight to earlier rewards
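As a small worked example of the discounted return, the sketch below sums a finite reward sequence with geometric discounting. The function name `discounted_return` is hypothetical, and `gamma` stands for the discount rate; the loop runs backwards so each reward is multiplied by the discount once per step of delay.

```python
def discounted_return(rewards, gamma):
    """Return r_1 + gamma * r_2 + gamma**2 * r_3 + ... for a finite reward list."""
    g = 0.0
    for r in reversed(rewards):
        # Everything that comes later is discounted by one more factor of gamma.
        g = r + gamma * g
    return g
```

For rewards `[1, 1, 1]` and `gamma = 0.5`, the return is 1 + 0.5 + 0.25 = 1.75, which shows how earlier rewards carry more weight.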

The Markov Property

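The environment's response at time t+1 depends only on the state (s) and action (a) at time t.

Markov Decision Processes

Finite MDP

Transition probabilities:

$$P^a_{ss'} = \Pr\{s_{t+1} = s' \mid s_t = s, a_t = a\}$$

Expected reward:

$$R^a_{ss'} = E\{r_{t+1} \mid s_t = s, a_t = a, s_{t+1} = s'\}$$

Transition graph: summarizes the dynamics of a finite MDP.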

Value function

Functions of states (or of state-action pairs) that estimate how good it is for the agent to be in a given state (or to take a given action in a given state)

Types of value function

State-value function

Action-value function

Backup Diagrams

Backup diagrams show the basis of the update, or backup, operations.

They provide graphical summaries of the algorithms (for state-value and action-value functions).

Bellman Equation for a Policy
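A sketch of the equation this heading refers to, written in the notation of Sutton and Barto's first edition (an assumption about the intended notation): π(s, a) is the probability of taking action a in state s, $P^a_{ss'}$ is the probability of moving from s to s' under action a, $R^a_{ss'}$ is the expected reward on that transition, and γ is the discount rate.

$$V^\pi(s) = \sum_a \pi(s, a) \sum_{s'} P^a_{ss'} \left[ R^a_{ss'} + \gamma V^\pi(s') \right]$$

The equation states that the value of a state equals the expected immediate reward plus the discounted value of the successor state, averaged over the actions the policy may choose and the transitions the environment may produce.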


Model-free and Model-based Learning

Model-based learning

Learn from the model instead of interacting with the world

Can visit arbitrary parts of the model

Also called indirect methods

E.g. Dynamic Programming

Model-free learning

Sample the reward and transition functions by interacting with the world

Also called direct methods

Problem Solving Methods for RL

1) Dynamic programming

2) Monte Carlo methods

3) Temporal-difference learning.


1. Dynamic programming

Classical solution method

Requires a complete and accurate model of the environment.

Popular methods for dynamic programming

Policy evaluation: iterative computation of the value function for a given policy (the prediction problem)

Policy improvement: computation of an improved policy for a given value function.

Dynamic Programming

$$V(s_t) \leftarrow E_\pi\left\{ r_{t+1} + \gamma V(s_{t+1}) \right\}$$

[Figure: backup diagram from $s_t$ through $r_{t+1}$ to the successor states $s_{t+1}$; T marks terminal states.]
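Iterative policy evaluation can be sketched as repeated sweeps of the expected-value backup over all states. The representation below is an assumption made for this example, not the deck's: `P[s][a]` is a list of `(probability, next_state, reward)` triples, `policy[s][a]` is the probability of action `a` in state `s`, and a terminal state is a state with no actions. The chain `A -> B -> end` is a made-up toy model.

```python
def policy_evaluation(P, policy, gamma=0.9, theta=1e-8):
    """Sweep the backup V(s) <- E[r + gamma * V(s')] until values stop changing."""
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s in P:
            # Expectation over the policy's actions and the model's transitions.
            v_new = sum(
                policy[s][a] * prob * (reward + gamma * V[s_next])
                for a in P[s]
                for prob, s_next, reward in P[s][a]
            )
            delta = max(delta, abs(v_new - V[s]))
            V[s] = v_new
        if delta < theta:   # largest change in this sweep is negligible
            return V


# Hypothetical three-state chain: A -> B -> end, with rewards 1 and 2.
P = {
    "A": {"go": [(1.0, "B", 1.0)]},
    "B": {"go": [(1.0, "end", 2.0)]},
    "end": {},  # terminal: no actions, value stays 0
}
policy = {"A": {"go": 1.0}, "B": {"go": 1.0}}
V = policy_evaluation(P, policy, gamma=0.5)
```

With `gamma = 0.5`, state `B` is worth 2 and state `A` is worth 1 + 0.5 × 2 = 2, which the sweeps reproduce.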


Policy improvement: computation of an improved policy for a given value function.

Policy evaluation and policy improvement together lead to:

Policy iteration

Value iteration

Policy iteration: alternates policy evaluation and policy improvement steps.

Value iteration: combines evaluation and improvement in one update.
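Value iteration can be sketched by replacing the expectation over the policy's actions with a maximum over actions, so that evaluation and improvement happen in one update. As before, the model format (`P[s][a]` as a list of `(probability, next_state, reward)` triples, terminal states having no actions), the helper `q_value`, and the toy model are assumptions for illustration.

```python
def q_value(P, V, s, a, gamma):
    """Expected one-step return of taking action a in state s, then following V."""
    return sum(prob * (reward + gamma * V[s_next]) for prob, s_next, reward in P[s][a])


def value_iteration(P, gamma=0.9, theta=1e-8):
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s in P:
            if not P[s]:          # terminal state: nothing to update
                continue
            best = max(q_value(P, V, s, a, gamma) for a in P[s])
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < theta:
            break
    # Read off a greedy policy from the converged values.
    policy = {s: max(P[s], key=lambda a: q_value(P, V, s, a, gamma)) for s in P if P[s]}
    return V, policy


# Hypothetical model: a small sure reward now versus a larger reward one step later.
P = {
    "s0": {"safe": [(1.0, "end", 1.0)], "risky": [(1.0, "s1", 0.0)]},
    "s1": {"go": [(1.0, "end", 10.0)]},
    "end": {},
}
V, policy = value_iteration(P, gamma=0.9)
```

In this toy model the delayed reward is worth 0.9 × 10 = 9 from `s0`, so the greedy policy prefers `risky` over the immediate reward of 1.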

Generalized Policy Iteration (GPI)

Consists of two interacting processes:

Policy evaluation: making the value function consistent with the current policy

Policy improvement: making the policy greedy with respect to the current value function

2. Monte Carlo Methods

Features of Monte Carlo Methods

No need for complete knowledge of the environment

Based on averaging the sample returns observed after visits to a state.

Experience is divided into episodes

Value estimates and policies are changed only after an episode is completed.

Does not require a model

Not suited to step-by-step incremental computation

MC and DP Methods

Compute the same value functions

Same steps as in DP

Policy evaluation

Computation of $V^\pi$ and $Q^\pi$ for a fixed arbitrary policy π.

Policy improvement

Generalized policy iteration

To find the value of a state

Estimate it from experience: average the returns observed after visits to that state.

As more returns are observed, the average converges to the expected value.

First-visit Monte Carlo Policy evaluation
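A minimal sketch of first-visit Monte Carlo policy evaluation is given below. The data format is an assumption for this example: each episode is a list of `(state, reward)` pairs, where the reward is the one received after leaving that state, and the episodes are taken to have been generated by the policy being evaluated. The function name `first_visit_mc` is hypothetical.

```python
from collections import defaultdict


def first_visit_mc(episodes, gamma=1.0):
    """Estimate V(s) as the average return following the first visit to s."""
    returns_sum = defaultdict(float)
    returns_count = defaultdict(int)
    for episode in episodes:
        # Work backwards to get the return that follows each time step.
        g = 0.0
        returns = [0.0] * len(episode)
        for t in range(len(episode) - 1, -1, -1):
            _, reward = episode[t]
            g = reward + gamma * g
            returns[t] = g
        seen = set()
        for t, (state, _) in enumerate(episode):
            if state in seen:
                continue          # only the first visit in an episode counts
            seen.add(state)
            returns_sum[state] += returns[t]
            returns_count[state] += 1
    return {s: returns_sum[s] / returns_count[s] for s in returns_sum}
```

Because the returns are only known once an episode has finished, the estimates are updated episode by episode rather than step by step.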


In Monte Carlo ES (MCES, Monte Carlo with exploring starts), all returns for each state-action pair are accumulated and averaged, irrespective of what policy was in force when they were observed.

Monte Carlo Method (Cont)

All actions should be selected infinitely often for optimization

MC with exploring starts cannot converge to any suboptimal policy.

Agents have two approaches

On-policy

Off-policy

On-policy MC algorithm

Off-policy MC algorithm

Monte Carlo and Dynamic Programming

MC has several advantages over DP:

Can learn from interaction with the environment

No need for full models

No need to learn about all states

No bootstrapping

3. Temporal Difference (TD) methods

Learn from experience, like MC

Can learn directly from interaction with environment

No need for full models

Estimate values based on the estimated values of next states, like DP

Bootstrapping (like DP)

Issue to watch for: maintaining sufficient exploration

TD Prediction

Policy evaluation (the prediction problem): for a given policy π, compute the state-value function $V^\pi$.

Simple every-visit Monte Carlo method:

$$V(s_t) \leftarrow V(s_t) + \alpha\left[R_t - V(s_t)\right]$$

target: the actual return after time t

The simplest TD method, TD(0):

$$V(s_t) \leftarrow V(s_t) + \alpha\left[r_{t+1} + \gamma V(s_{t+1}) - V(s_t)\right]$$

target: an estimate of the return
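The TD(0) update can be sketched as a single function applied after every transition. The name `td0_update`, the dictionary of values, and the `terminal` flag are assumptions for this example; the flag simply treats the value of a terminal successor as zero.

```python
def td0_update(V, s, r, s_next, alpha=0.1, gamma=1.0, terminal=False):
    """Apply V(s) <- V(s) + alpha * [r + gamma * V(s_next) - V(s)] in place."""
    # The target is an estimate of the return: one real reward plus a bootstrap.
    target = r if terminal else r + gamma * V[s_next]
    V[s] += alpha * (target - V[s])
    return V[s]
```

Unlike the Monte Carlo update, this can be applied immediately after each step, because the target needs only the next reward and the current estimate for the next state.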

Simple Monte Carlo

[Figure: backup diagram rooted at $s_t$; T marks terminal states.]

Simplest TD Method

$$V(s_t) \leftarrow V(s_t) + \alpha\left[r_{t+1} + \gamma V(s_{t+1}) - V(s_t)\right]$$

[Figure: backup diagram from $s_t$ through $r_{t+1}$ to $s_{t+1}$; T marks terminal states.]

Dynamic Programming

$$V(s_t) \leftarrow E_\pi\left\{ r_{t+1} + \gamma V(s_{t+1}) \right\}$$

[Figure: backup diagram from $s_t$ through $r_{t+1}$ to the successor states $s_{t+1}$; T marks terminal states.]

TD methods bootstrap and sample

Bootstrapping: update involves an estimate

MC does not bootstrap

DP bootstraps

TD bootstraps

Sampling: update does not involve an expected value

MC samples

DP does not sample

TD samples

Learning an Action-Value Function

Estimate $Q^\pi$ for the current behavior policy π.

After every transition from a nonterminal state $s_t$, do this:

$$Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha\left[r_{t+1} + \gamma Q(s_{t+1}, a_{t+1}) - Q(s_t, a_t)\right]$$

If $s_{t+1}$ is terminal, then $Q(s_{t+1}, a_{t+1}) = 0$.

Q-Learning: Off-Policy TD Control

One-step Q-learning:

$$Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha\left[r_{t+1} + \gamma \max_a Q(s_{t+1}, a) - Q(s_t, a_t)\right]$$
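The one-step Q-learning update can be sketched as follows. The function name `q_learning_update`, the `(state, action)` dictionary keys, and the `terminal` flag are assumptions for this example; a `defaultdict(float)` is used so that unseen pairs start at zero.

```python
from collections import defaultdict


def q_learning_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.9, terminal=False):
    """Apply one Q-learning backup to Q[(s, a)] in place and return the new value."""
    # Off-policy target: the best next action, whichever action is actually taken next.
    best_next = 0.0 if terminal else max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])
    return Q[(s, a)]
```

Because the target takes the maximum over next actions, the update learns about the greedy policy even while the agent behaves according to an exploratory one.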

Sarsa: On-Policy TD Control

SARSA: State, Action, Reward, State, Action

Turn this into a control method by always updating the policy to be greedy with respect to the current estimate.
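For comparison with Q-learning, a Sarsa backup can be sketched like this. The function name `sarsa_update`, the `(state, action)` dictionary keys, and the `terminal` flag are assumptions for this example; the five arguments `s, a, r, s_next, a_next` are the State, Action, Reward, State, Action that give the method its name.

```python
from collections import defaultdict


def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.9, terminal=False):
    """Apply one Sarsa backup to Q[(s, a)] in place and return the new value."""
    # On-policy target: uses the action the agent actually selected in s_next.
    next_value = 0.0 if terminal else Q[(s_next, a_next)]
    Q[(s, a)] += alpha * (r + gamma * next_value - Q[(s, a)])
    return Q[(s, a)]
```

The only difference from the Q-learning sketch is the target: Sarsa uses the value of the next action actually chosen, so the estimates reflect the behavior policy, exploration included.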

Advantages of TD Learning

TD methods do not require a model of the environment, only experience

TD, but not MC, methods can be fully incremental

You can learn before knowing the final outcome

Less memory

Less peak computation

You can learn without the final outcome

From incomplete sequences

Both MC and TD converge


