Cs Os Chap 15

Uploaded by NguyễnHoàngCầm


Chapter 10: File Systems

10.C

Inside a hard disk

Disk access scheduling algorithms

Formatting, partitioning, raw disk

RAID (Redundant Arrays of Independent Disks)

Hard disk organization

Hard disk in a PC system

Partition 1

Partition 2

Partition 3

Partition 4

Partition

Master Boot Record

(MBR)

Boot Block

Disk Anatomy

disk head array

1 to 12 platters

the disk spins at around 7,200 rpm

track

Inside a hard disk

Disk parameters

The time to read or write data on disk consists of

Seek time: the time to move the read/write head to the correct track/cylinder; depends on the speed and movement pattern of the read/write head

Rotational delay (latency): the time the head waits for the target sector to rotate under it; depends on the disk's rotation speed

Transfer time: the time to move data from disk into memory or vice versa; depends on the bandwidth of the channel between disk and memory

Disk I/O time = seek time + rotational delay + transfer time
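The formula above can be turned into a small calculation. This is only a sketch: the average rotational delay is taken as half a revolution, and the seek time, request size, and bandwidth used in the example call are made-up values, not figures from the slides.

```python
def avg_rotational_delay_ms(rpm):
    """Average rotational delay: half a revolution, in milliseconds."""
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2


def transfer_time_ms(nbytes, bandwidth_bytes_per_s):
    """Time to move nbytes over the disk-to-memory channel, in milliseconds."""
    return 1000.0 * nbytes / bandwidth_bytes_per_s


def disk_io_time_ms(seek_ms, rpm, nbytes, bandwidth_bytes_per_s):
    """Disk I/O time = seek time + rotational delay + transfer time."""
    return (seek_ms
            + avg_rotational_delay_ms(rpm)
            + transfer_time_ms(nbytes, bandwidth_bytes_per_s))


# Hypothetical request: 9 ms seek, 7,200 rpm, 4 KiB read, 100 MB/s channel.
print(round(disk_io_time_ms(9.0, 7200, 4096, 100e6), 3))
```

With these assumed numbers the seek and rotational terms dominate, which is why the scheduling algorithms later in the chapter focus on reducing head movement.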

Modern disks

Modern hard drives use zoned bit recording

Disks are divided into zones with more sectors on the outer zones

than the inner ones (why?)

Slides from Flynn?

Addressing Disks

What the OS knows about the disk

Interface type (IDE/SCSI/SATA), unit number, number of sectors

What happened to sectors, tracks, etc?

Old disks were addressed by cylinder/head/sector (CHS)

Modern disks are addressed using a linear addressing scheme

LBA = logical block address

As an example, LBA = 0..586,072,367 for a 300 GB disk

Who uses sector numbers?

File system software assigns logical blocks to files

Terminology

To disk people, block and sector are the same

To file system people, a block is some fixed number of sectors
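To make the CHS and LBA schemes concrete, here is a minimal sketch of the conventional CHS-to-LBA mapping, plus a check of the 300 GB figure quoted above. The 512-byte sector size and the 16-head, 63-sectors-per-track geometry are assumptions for illustration; the slides do not state them.

```python
SECTOR_BYTES = 512  # assumed sector size, not stated on the slide


def chs_to_lba(cyl, head, sector, heads_per_cyl, sectors_per_track):
    """Conventional mapping; CHS sector numbers start at 1, LBAs at 0."""
    return (cyl * heads_per_cyl + head) * sectors_per_track + (sector - 1)


def lba_to_chs(lba, heads_per_cyl, sectors_per_track):
    """Inverse of chs_to_lba for the same (assumed) geometry."""
    cyl, rest = divmod(lba, heads_per_cyl * sectors_per_track)
    head, sector0 = divmod(rest, sectors_per_track)
    return cyl, head, sector0 + 1


def capacity_bytes(last_lba):
    """Capacity of a disk whose LBAs run from 0 to last_lba."""
    return (last_lba + 1) * SECTOR_BYTES


print(chs_to_lba(1, 0, 1, heads_per_cyl=16, sectors_per_track=63))  # 1008
print(capacity_bytes(586_072_367))  # 300069052416 bytes, about 300 GB
```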

Disk Addresses vs Scheduling

Goal of OS disk-scheduling algorithm

Maintain queue of requests

When the disk finishes one request, give it the best pending request (according to a performance metric)

e.g., whichever one is closest in terms of disk geometry

Goal of disk's logical addressing

Hide messy details of which sectors are located where

Oh, well

Older OS's tried to understand disk layout

Modern OS's just assume nearby sector numbers are close

Experimental OS's try to understand disk layout again

Next few slides assume old / experimental, not modern

Improving disk access performance

Solutions

Reduce the disk size

Increase the disk's rotation speed

Schedule disk access operations (disk scheduling)

Arrange how data is laid out on disk

related data is placed on nearby tracks

interleaving

Place frequently used files at suitable locations

Choose the logical block size

Read ahead

Speculatively read blocks of data before the application requests

them

Disk access performance

Performance metric

Throughput

Disk utilization (the fraction of time the disks are actually transferring

data)

Maximum response time

Disk access scheduling

Main idea

Reorder the disk read/write requests so as to minimize head movement time (seek time)

Disk access scheduling algorithms

First Come, First Served (FCFS)

Shortest-Seek-Time First (SSTF, SSF)

SCAN

C-SCAN (Circular SCAN)

C-LOOK

Example: schedule the sequence of disk read/write requests at

cylinders 98, 183, 37, 122, 14, 124, 65, 67

The head is currently at cylinder 53

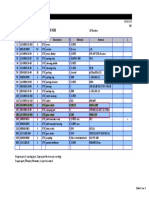

First Come First Served (FCFS)

Queue: 98, 183, 37, 122, 14, 124, 65, 67

The head is currently at cylinder 53

[Figure: head movement over cylinders 14, 37, 53, 65, 67, 98, 122, 124, 183, 199]

Total cylinders traversed: 640
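The FCFS total can be reproduced with a few lines of code. This is a minimal sketch; the function name is made up for illustration.

```python
def fcfs_total_seek(start, requests):
    """Cylinders traversed when requests are served strictly in arrival order."""
    total, pos = 0, start
    for cyl in requests:
        total += abs(cyl - pos)
        pos = cyl
    return total


queue = [98, 183, 37, 122, 14, 124, 65, 67]
print(fcfs_total_seek(53, queue))  # 640
```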

Shortest-Seek-Time First (SSTF)
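For the example queue (98, 183, 37, 122, 14, 124, 65, 67 with the head at cylinder 53), SSTF can be sketched as a greedy loop that always picks the pending request closest to the current head position. The function name and the tie-breaking rule (first in queue order) are assumptions.

```python
def sstf(start, requests):
    """Return (service order, total cylinders traversed) under SSTF."""
    pending, pos, order, total = list(requests), start, [], 0
    while pending:
        # Greedy choice: the pending cylinder nearest to the head.
        nxt = min(pending, key=lambda cyl: abs(cyl - pos))
        total += abs(nxt - pos)
        pending.remove(nxt)
        order.append(nxt)
        pos = nxt
    return order, total


order, total = sstf(53, [98, 183, 37, 122, 14, 124, 65, 67])
print(order)  # [65, 67, 37, 14, 98, 122, 124, 183]
print(total)  # 236
```

SSTF cuts head movement sharply compared with FCFS here, but a steady stream of nearby requests can starve a distant one.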

SCAN (elevator algorithm)

The head starts at cylinder 53 and is moving toward cylinder 0
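A minimal sketch of SCAN for the example queue follows. It assumes the textbook convention that the head travels all the way to cylinder 0 before reversing; the function name is hypothetical.

```python
def scan_toward_zero(start, requests, min_cyl=0):
    """SCAN with the head initially moving toward min_cyl.

    Assumes the head always sweeps down to min_cyl before reversing.
    Returns (service order, total cylinders traversed).
    """
    lower = sorted((c for c in requests if c <= start), reverse=True)
    upper = sorted(c for c in requests if c > start)
    path = lower + [min_cyl] + upper  # positions visited, including the edge
    total, pos = 0, start
    for cyl in path:
        total += abs(cyl - pos)
        pos = cyl
    return lower + upper, total


order, total = scan_toward_zero(53, [98, 183, 37, 122, 14, 124, 65, 67])
print(order)  # [37, 14, 65, 67, 98, 122, 124, 183]
print(total)  # 236
```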

C-SCAN (Circular SCAN)

The head starts at cylinder 53 and services requests on the way to cylinder 199
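C-SCAN for the same example queue can be sketched as below. Two conventions are assumed here and are not taken from the slides: the head sweeps to the last cylinder (199) before jumping back to cylinder 0, and the return sweep is counted as head movement.

```python
def c_scan(start, requests, min_cyl=0, max_cyl=199):
    """C-SCAN servicing only while moving toward max_cyl.

    Assumes the return sweep max_cyl -> min_cyl counts as head movement.
    Returns (service order, total cylinders traversed).
    """
    upper = sorted(c for c in requests if c >= start)
    lower = sorted(c for c in requests if c < start)
    path = upper + ([max_cyl, min_cyl] + lower if lower else [])
    total, pos = 0, start
    for cyl in path:
        total += abs(cyl - pos)
        pos = cyl
    return upper + lower, total


order, total = c_scan(53, [98, 183, 37, 122, 14, 124, 65, 67])
print(order)  # [65, 67, 98, 122, 124, 183, 14, 37]
print(total)  # 382
```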

C-LOOK

The head starts at cylinder 53 and services requests on the way to cylinder 199
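C-LOOK differs from C-SCAN only in that the head turns around at the last pending request instead of the edge of the disk. A minimal sketch for the example queue, again counting the jump back as head movement (an assumed convention):

```python
def c_look(start, requests):
    """C-LOOK servicing only while moving toward higher cylinders.

    The head goes no further than the highest pending request, then jumps
    to the lowest pending request. The jump is counted as head movement.
    Returns (service order, total cylinders traversed).
    """
    upper = sorted(c for c in requests if c >= start)
    lower = sorted(c for c in requests if c < start)
    order = upper + lower
    total, pos = 0, start
    for cyl in order:
        total += abs(cyl - pos)
        pos = cyl
    return order, total


order, total = c_look(53, [98, 183, 37, 122, 14, 124, 65, 67])
print(order)  # [65, 67, 98, 122, 124, 183, 14, 37]
print(total)  # 322
```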

Comparison of scheduling algorithms (1)

Disk utilization for FCFS, SSF, and C-SCAN

From "Disk Scheduling Revisited" (1990)

Comparison of scheduling algorithms (2)

Maximum response time for FCFS, SSF, and C-SCAN

Disk management: formatting

Low-level formatting: physical formatting, which divides the disk into sectors

Each sector has a special data structure: header, data, trailer

The header and trailer hold information reserved for the disk controller, such as the sector number and the error-correcting code (ECC)

When the controller writes data to a sector, the ECC field is updated with a value computed from the data being written

When a sector is read, the ECC of the data is recomputed and compared with the stored ECC value to check that the data is correct

Sector layout: Header | Data | Trailer
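The write-then-verify cycle described above can be sketched as follows. Note the substitution: a CRC-32 checksum from Python's `zlib` stands in for the controller's ECC. A CRC can only detect errors, while a real ECC can also correct some of them, and real controllers do this in hardware. The dictionary layout and function names are hypothetical.

```python
import zlib


def write_sector(data: bytes) -> dict:
    """Store the data together with a check value computed from it."""
    return {"data": bytes(data), "ecc": zlib.crc32(data)}


def read_sector(sector: dict) -> bytes:
    """Recompute the check value and compare it with the stored one."""
    if zlib.crc32(sector["data"]) != sector["ecc"]:
        raise ValueError("check value mismatch: sector data is corrupted")
    return sector["data"]


sector = write_sector(b"payload")
print(read_sector(sector))      # b'payload'

sector["data"] = b"pay1oad"     # simulate corruption on the medium
try:
    read_sector(sector)
except ValueError as err:
    print("detected:", err)
```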

Disk management: partitioning

Partitioning: divide the disk into several regions (partitions), each made up of contiguous blocks.

Each partition is treated as a separate logical disk.

Logical formatting of a partition: create a file system (FAT, ext2, etc.)

Store the file system's initial data structures on the partition

Create the data structures that track free space and allocated space (DOS: FAT; UNIX: superblock and i-node list)

Example: formatting a partition

MBR

Disk management: raw disk

Raw disk: a partition with no file system

I/O on a raw disk is called raw I/O

reads or writes blocks directly

does not use file system services (buffer cache, file locking, prefetching, free-space allocation, file naming, and directories)

Example

Some database systems choose to use raw disks

Swap space management

Swap space

disk space used to extend memory under virtual memory

Management goal: high performance for the virtual memory manager

Implementation

occupies its own partition, e.g. the Linux swap partition

or goes through a file system, e.g. the pagefile.sys file on MS Windows

Usually combined with caching or with contiguous allocation

RAID Introduction

Disks act as bottlenecks for both system performance and

storage reliability

A disk array consists of several disks which are organized to

increase performance and improve reliability

Performance is improved through data striping

Reliability is improved through redundancy

Disk arrays that combine data striping and redundancy are

called Redundant Arrays of Independent Disks, or RAID

There are several RAID schemes or levels

Slides from CMPT 354

http://sleepy.cs.surrey.sfu.ca/cmpt/courses/archive/fall2005spring2006/cmpt354/notes

Data Striping

A disk array gives the user the abstraction of a single, large,

disk

When an I/O request is issued, the physical disk blocks to be

retrieved have to be identified

How the data is distributed over the disks in the array affects how

many disks are involved in an I/O request

Data is divided into equal size partitions called striping units

The size of the striping unit varies by the RAID level

The striping units are distributed over the disks using a

round robin algorithm

KEY POINT: disks can be read in parallel, increasing the transfer rate

Striping Units: Block Striping

Assume that a file is to be distributed across a 4 disk RAID system and that

Purely for the sake of illustration, blocks are only one byte! [here striping-unit size = block size]

Notional file: a series of bits, numbered so that we can distinguish them

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

Now distribute these bits across the 4 RAID disks using BLOCK striping:

Disk 0: 1 2 3 4 5 6 7 8 33 34 35 36 37 38 39 40 65 66 67 68 69 70 71 72

Disk 1: 9 10 11 12 13 14 15 16 41 42 43 44 45 46 47 48 73 74 75 76 77 78 79 80

Disk 2: 17 18 19 20 21 22 23 24 49 50 51 52 53 54 55 56 81 82 83 84 85 86 87 88

Disk 3: 25 26 27 28 29 30 31 32 57 58 59 60 61 62 63 64 89 90 91 92 93 94 95 96
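The block-striping layout can be generated with a short round-robin loop. This is a sketch under the slide's illustrative assumptions (96 numbered bits, one-byte blocks, 4 disks); the function name and the 0-based disk indices are choices made here.

```python
def block_striping(n_bits, n_disks=4, unit_bits=8):
    """Assign bits 1..n_bits to disks, one striping unit at a time, round robin."""
    disks = [[] for _ in range(n_disks)]
    for bit in range(1, n_bits + 1):
        unit = (bit - 1) // unit_bits      # which striping unit the bit is in
        disks[unit % n_disks].append(bit)  # units go to disks round robin
    return disks


for disk_no, bits in enumerate(block_striping(96)):
    print(f"Disk {disk_no}:", *bits)
```

A whole byte lives on one disk, so a request for a single block touches only one disk.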

Striping Units: Bit Striping

Now here is the same file, and 4 disk RAID using bit striping, and again:

Purely for the sake of illustration, blocks are only one byte!

Notional file: a series of bits, numbered so that we can distinguish them

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

Now distribute these bits across the 4 RAID disks using BIT striping:

Disk 0: 1 5 9 13 17 21 25 29 33 37 41 45 49 53 57 61 65 69 73 77 81 85 89 93

Disk 1: 2 6 10 14 18 22 26 30 34 38 42 46 50 54 58 62 66 70 74 78 82 86 90 94

Disk 2: 3 7 11 15 19 23 27 31 35 39 43 47 51 55 59 63 67 71 75 79 83 87 91 95

Disk 3: 4 8 12 16 20 24 28 32 36 40 44 48 52 56 60 64 68 72 76 80 84 88 92 96
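With bit striping the striping unit is a single bit, so consecutive bits land on consecutive disks. A minimal sketch (function names and 0-based disk indices are choices made here):

```python
def bit_striping(n_bits, n_disks=4):
    """Assign bits 1..n_bits to disks one bit at a time, round robin."""
    disks = [[] for _ in range(n_disks)]
    for bit in range(1, n_bits + 1):
        disks[(bit - 1) % n_disks].append(bit)
    return disks


def disks_holding_block(block_no, n_disks=4, block_bits=8):
    """Disks that must be read to rebuild one 8-bit block (block_no starts at 0)."""
    first_bit = block_no * block_bits + 1
    return sorted({(bit - 1) % n_disks
                   for bit in range(first_bit, first_bit + block_bits)})


for disk_no, bits in enumerate(bit_striping(96)):
    print(f"Disk {disk_no}:", *bits)
print(disks_holding_block(0))  # [0, 1, 2, 3]
```

Every block is spread over all four disks, so reading even one block keeps the whole array busy.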

Striping Units: Performance

A RAID system with D disks can read data up to D times

faster than a single disk system

As the D disks can be read in parallel

For large reads* there is no difference between bit striping and block

striping

*where some multiple of D blocks are to be read

Block striping is more efficient for many unrelated requests

With bit striping all D disks have to be read to recreate a single

block of the data file

In block striping each disk can satisfy one of the requests,

assuming that the blocks to be read are on different disks

Write performance is similar but is also affected by the parity

scheme

Reliability of Disk Arrays

The mean-time-to-failure (MTTF) of a hard disk is around

50,000 hours, or 5.7 years

In a disk array the MTTF (the time until some disk in the array fails) decreases

Because the number of disks is greater

The MTTF of a disk array containing 100 disks is about 21 days (= 50,000/100 = 500 hours)

Assuming that failures occur independently and

The failure probability does not change over time

Pretty implausible assumptions

Reliability is improved by storing redundant data
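The arithmetic behind the 5.7 year and 21 day figures is shown in the sketch below. It relies on the same assumptions the slide lists (independent failures, constant failure probability), and the function name is made up.

```python
HOURS_PER_DAY = 24
HOURS_PER_YEAR = 24 * 365


def array_mttf_hours(disk_mttf_hours, n_disks):
    """MTTF until the first disk failure in an array of n_disks disks.

    Assumes independent failures with a constant failure rate.
    """
    return disk_mttf_hours / n_disks


single = 50_000
print(round(single / HOURS_PER_YEAR, 1))  # 5.7 (years, single disk)

array = array_mttf_hours(single, 100)
print(array)                              # 500.0 (hours)
print(round(array / HOURS_PER_DAY))       # 21 (days)
```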

Redundancy

Reliability of a disk array can be improved by storing

redundant data

If a disk fails, the redundant data can be used to reconstruct

the data lost on the failed disk

The data can either be stored on a separate check disk or

Distributed uniformly over all the disks

Redundant data is typically stored using a parity scheme

There are other redundancy schemes that provide greater reliability

Parity Scheme

For each bit on the data disks there is a related parity bit on

a check disk

If the sum of the bits on the data disks is even the parity bit is set to

zero

If the sum of the bits is odd the parity bit is set to one

The data on any one failed disk can be recreated bit by bit

Here is the 4 disk RAID system showing the actual bit values, together with a fifth CHECK DISK with the parity data (the bits in every column sum to an even number):

0 1 1 0 0 0 1 0 0 1 0 1 0 0 1 0 0 1 0 1 0 0 1 1

1 0 1 0 1 0 1 0 1 0 1 0 1 0 0 1 1 0 0 1 0 1 0 0

0 1 1 0 1 1 1 1 0 0 1 1 0 0 1 0 1 1 0 1 1 0 0 1

0 0 0 1 1 1 0 1 0 0 1 1 0 0 0 1 1 0 1 1 1 0 0 1

1 0 1 1 1 0 1 0 1 1 1 1 1 0 0 0 1 0 1 0 0 1 1 1
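The parity rule can be checked against the five bit rows shown on this slide. In this sketch, `parity` computes the even-parity bit for each column, and rebuilding a failed disk is just the parity of the four surviving rows. The extracted text does not say which row is the check disk, and the code does not need to know: with even parity, any one row equals the parity of the other four.

```python
ROWS = [
    "01100010 01010010 01010011",
    "10101010 10101001 10010100",
    "01101111 00110010 11011001",
    "00011101 00110001 10111001",
    "10111010 11111000 10100111",
]
DISKS = [[int(ch) for ch in row.replace(" ", "")] for row in ROWS]


def parity(rows):
    """Per-column parity bit: 0 if the column sum is even, 1 if it is odd."""
    return [sum(column) % 2 for column in zip(*rows)]


def reconstruct(surviving_rows):
    """Rebuild the one missing disk, bit by bit, from the survivors."""
    return parity(surviving_rows)


# Across data disks plus check disk, every column has even parity.
print(parity(DISKS) == [0] * 24)               # True

# Simulate losing disk 2 and rebuilding it from the remaining four.
survivors = DISKS[:2] + DISKS[3:]
print(reconstruct(survivors) == DISKS[2])      # True
```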

Parity Scheme and Reliability

In RAID systems the disk array is partitioned into reliability

groups

A reliability group consists of a set of data disks and a set of check

disks

The number of check disks depends on the reliability level that is

selected

Given a RAID system with 100 disks and an additional 10

check disks the MTTF can be increased from 21 days to

250 years!

RAID Level 0: Nonredundant

Uses data striping to increase the transfer rate

Good read performance

Up to D times the speed of a single disk

No redundant data is recorded

The best write performance as redundant data does not have to be

recorded

The lowest cost RAID level but

Reliability is a problem, as the MTTF decreases linearly with the number of disks in the array

With 5 data disks, only 5 disks are required

Disk 0: Block 1, Block 6, Block 11, Block 16, Block 21

Disk 1: Block 2, Block 7, Block 12, Block 17, Block 22

Disk 2: Block 3, Block 8, Block 13, Block 18, Block 23

Disk 3: Block 4, Block 9, Block 14, Block 19, Block 24

Disk 4: Block 5, Block 10, Block 15, Block 20, Block 25

RAID Level 1: Mirrored

For each disk in the system an identical copy is kept, hence

the term mirroring

No data striping, but parallel reads of the duplicate disks can be

made, otherwise read performance is similar to a single disk

Very reliable but the most expensive RAID level

Poor write performance as the duplicate disk has to be written to

These writes should not be performed simultaneously in case

there is a global system failure

With 4 data disks, 8 disks are required

Disk 0: Block 1, Block 2, Block 3, Block 4, Block 5

Disk 1: Block 1, Block 2, Block 3, Block 4, Block 5

RAID Level 2: Memory-Style ECC

Not common because redundancy schemes such as bit-interleaved parity provide similar reliability at better performance and cost.

RAID Level 3: Bit-Interleaved Parity

Uses bit striping

Good read performance for large requests

Up to D times the speed of a single disk

Poor read performance for multiple small requests

Uses a single check disk with parity information

Disk controllers can easily determine which disk has failed, so the

check disks are not required to perform this task

Writing requires a read-modify-write cycle

Read D blocks, modify in main memory, write D + C blocks

Disk 0: Bit 1, Bit 33, Bit 65, Bit 97, Bit 129

Disk 1: Bit 2, Bit 34, Bit 66, Bit 98, Bit 130

Disk 2: Bit 3, Bit 35, Bit 67, Bit 99, Bit 131

Parity disk: P 1-32, P 33-64, P 65-96, P 97-128, P 129-160

RAID Level 4: Block-Interleaved Parity

Block-interleaved, parity disk array is similar to the bit-interleaved, parity disk array except that data is interleaved across disks in blocks of arbitrary size rather than in bits

RAID Level 5: Block-Interleaved Distributed Parity

Uses block striping

Good read performance for large requests

Up to D times the speed of a single disk

Good read performance for multiple small requests that can

involve all disks in the scheme

Distributes parity information over all of the disks

Writing requires a read-modify-write cycle

But several write requests can be processed in parallel as the

bottleneck of a single check disk has been removed

Best performance for small and large reads and large writes

With 4 disks of data, 5 disks are required with the parity

information distributed across all disks
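The read-modify-write cycle for a small write can be illustrated with XOR parity. The update rule used below (new parity = old parity XOR old data XOR new data) is the standard technique for parity arrays and is not spelled out on the slide; the function names and the in-memory "stripe" list are hypothetical.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def full_parity(units):
    """Parity over all data units of a stripe."""
    result = bytes(len(units[0]))
    for unit in units:
        result = xor_bytes(result, unit)
    return result


def small_write(stripe, parity, index, new_data):
    """Read-modify-write: read the old data and old parity, write both anew.

    Only the target data unit and the parity unit are touched; the other
    data units in the stripe are not read.
    """
    old_data = stripe[index]                                     # read
    new_parity = xor_bytes(xor_bytes(parity, old_data), new_data)  # modify
    stripe[index] = new_data                                     # write data
    return new_parity                                            # write parity


stripe = [b"\x01\x02", b"\x10\x20", b"\x0f\xf0", b"\xaa\x55"]
parity = full_parity(stripe)
parity = small_write(stripe, parity, 2, b"\xff\x00")
print(parity == full_parity(stripe))  # True
```

Because each small write touches only one data unit and one parity unit, writes whose parity lands on different disks can proceed in parallel once parity is distributed.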

Each square corresponds to a stripe unit. Each column of squares corresponds to a disk.

P0 computes the parity over stripe units 0, 1, 2 and 3; P1 computes parity over stripe units 4, 5, 6 and 7; etc.

[Figure: RAID level 5 layout, with columns labeled Disk 0 to Disk 4]
