Machine Learning - Practitioner Basic - v1.0

Uploaded by Hari Atharsh


Machine Learning for Practitioners

Level Basic

What we plan to do today

- Introduce Data Science
- Classification
- Continuous
- Clustering
- Time Series Analysis
- Decision Trees

Introduction to Data Science

Machine Learning - Introduction

Field of study that gives computers the ability to learn without being explicitly programmed.

Machine Learning Vs Reporting

Systematic study and extraction of knowledge from data.

Unlike database querying, which asks "What data satisfies this pattern (query)?", discovery asks "What patterns satisfy this data?"

Data Driven Insights

Till Now:

Implement an idea based on an established theory.

Collect data to validate the theory.

Future:

Look at the data and ask the right question to obtain useful insights.

Keep in mind: simple is not just good, it's great!

Skill Sets of a Data Scientist

A data scientist combines:

- Math & Statistics
- Domain Knowledge & Soft Skills
- Programming & Database
- Communication & Visualization

The Machine Learning Process

1. Identify the Business Problem
2. Gather the Data
3. Transform and Sanitize the Data
4. Explore the Data
5. Perform Statistical Analysis
6. Interpret and Improve the Results
7. Business Interpretation of Results

Classification

Classification Problems- An Overview

Common types of classification problems:

- Classifying email as spam or not spam
- Classifying a tumor as malignant or benign
- Classifying an online transaction as fraudulent or not
- Classifying Test match outcomes as win, loss or draw

A classification problem can be binomial (two classes) or multinomial (more than two classes).

Data sources and data types

Data comes from many kinds of sources and in many types.

Data Sources: websites, databases or files

Data Types:

- Structured: numeric values, categories
- Semi-structured: books, brochures/documents, e-mails, etc.
- Unstructured: social media, images, voice, etc.

We will look only at loading text and CSV files during this course. These are the most generic forms for a dataset.
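A minimal sketch of loading a CSV file, written in Python with only the standard library. The in-memory sample and the `load_rows` helper are assumptions for illustration; they stand in for a real file on disk.

```python
import csv
import io

# Hypothetical in-memory sample standing in for a CSV file on disk.
SAMPLE_CSV = """Name,Sex,Age,Fare
"Braund, Mr. Owen Harris",male,22,7.25
"Heikkinen, Miss. Laina",female,26,7.925
"""

def load_rows(handle):
    """Read CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(handle))

rows = load_rows(io.StringIO(SAMPLE_CSV))
# csv returns every field as a string, so numeric columns need converting.
ages = [float(row["Age"]) for row in rows]
print(rows[0]["Name"], ages)
```

For a file on disk, the same helper can be given `open(path, newline="")` instead of the `io.StringIO` object.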

Let's Look at a Case Study: Titanic

On April 15, 1912, during her maiden voyage, the Titanic sank after colliding with an iceberg, killing 1502 out of 2224 passengers and crew. This sensational tragedy shocked the international community and led to better safety regulations for ships.

Although there was some element of luck involved in surviving the sinking, some groups of people were more likely to survive than others, such as women, children, and the upper class.

In this challenge, we ask you to complete the analysis of what sorts of people were likely to survive.

How do we predict the possibility of survival for such an incident?

"Well boys, do your best for the women and children, and look out for yourselves."

Probability Theory

Chance of an Outcome

Conditional Probability

Let's Look at the Data

| Column | Type | Description |
|---|---|---|
| PassengerId | Integer | Id of the row; it is only a reference |
| Survived | Integer | 0 means did not survive, 1 means survived |
| PClass | Integer | Class of the passenger: 1 = 1st class, 2 = 2nd class, 3 = 3rd class |
| Name | Factor | Name of the passenger |
| Sex | Factor | Male/Female |
| Age | Numeric | Age of the individual |
| SibSp | Integer | Number of siblings/spouses aboard |
| Parch | Integer | Number of parents/children aboard |
| Ticket | Factor | Ticket number |
| Fare | Numeric | Passenger fare |
| Cabin | Factor | Cabin number |
| Embarked | Factor | Port of embarkation: C = Cherbourg, Q = Queenstown, S = Southampton |

Sample rows:

| Name | Sex | Age | Ticket | Fare | Cabin | Embarked |
|---|---|---|---|---|---|---|
| Braund, Mr. Owen Harris | male | 22 | A/5 21171 | 7.25 | | S |
| Cumings, Mrs. John Bradley | female | 38 | PC 17599 | 71.2833 | C85 | C |
| Heikkinen, Miss. Laina | female | 26 | STON/O2. 3101282 | 7.925 | | S |
| Futrelle, Mrs. Jacques Heath | female | 35 | 113803 | 53.1 | C123 | S |

A brief look at different types of structured data

Data Types

| Family | Type | Description | Example |
|---|---|---|---|
| Categorical | Nominal | Variables with no ranking or order | Gender |
| Categorical | Ordinal | Variables with ordered values | Performance (Good, Ok, Bad) |
| Numeric | Discrete | Count of something | Parch (Parents and Children) |
| Numeric | Continuous | Information measured on some scale; can take decimal values | Age |

Food For Thought

What is a dummy variable?

A dummy variable, or indicator variable, is an artificial variable created to represent an attribute with two or more distinct categories/levels.

Why do we need this?

A category can be nominal or ordinal. Ordinal categories are ordered and nominal categories are not. Dummy variables are needed when working with nominal data.

Dummy Variables in the Titanic Data Set

Which of the following variables need to be converted into dummy variables?

- Embarked
- PClass
- Sex
- SibSp
- Parch

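How does a dummy variable look?

Before transformation: columns Sex and Embarked.

After transformation: columns Sexmale, Sexfemale, EmbarkedS, EmbarkedC and EmbarkedQ, one indicator column per level.

Sex values of the four sample rows: male, female, female, female.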
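The transformation can be sketched in a few lines of Python. This is an illustrative sketch, not the course's own code: the `dummy_encode` helper and the three sample rows are assumptions, and the new column names simply follow the Sexmale / EmbarkedS pattern shown on the slide.

```python
def dummy_encode(rows, column):
    """Replace one categorical column with 0/1 indicator columns."""
    levels = sorted({row[column] for row in rows})
    encoded = []
    for row in rows:
        new_row = {k: v for k, v in row.items() if k != column}
        for level in levels:
            # One indicator per level, named column + level (e.g. Sexmale).
            new_row[column + level] = 1 if row[column] == level else 0
        encoded.append(new_row)
    return encoded

# Hypothetical rows for illustration.
passengers = [
    {"Sex": "male", "Embarked": "S"},
    {"Sex": "female", "Embarked": "C"},
    {"Sex": "female", "Embarked": "Q"},
]
encoded = dummy_encode(dummy_encode(passengers, "Sex"), "Embarked")
print(encoded[0])
```

In a regression model one level per variable is usually dropped, because the remaining indicators already determine it.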

Let's study the data

Intuitively, which columns are relevant in predicting the survival (Survived) of the passenger?

- Pclass
- Sex
- Age
- SibSp (maybe)
- Parch (maybe)
- Fare
- Embarked (maybe)

Are Any of These Columns Related?

PClass and Fare will be related to one another

Embarking and Pclass could have a combined impact on Fare

Some common descriptive statistics used to explain data

Mean

Sum of all values / total number of values. Works when the values are not skewed.

A mother 6 feet tall and a son 2 feet tall cross a river 3 feet deep. Going by the average they don't have a problem, as the pair making the crossing is 4 feet tall on average:

(6 + 2)/2 = 8/2 = 4 feet

The mean hides the fact that the son is shorter than the river is deep.

Some common descriptive statistics used to explain data

Median

Middle value of a dataset.

- Odd number of values: pick the middle one
- Even number of values: take the average of the two values that lie in the middle

What is the average salary of the attendees in this classroom?

What if Frank joins us in this classroom?

What should I do first with the dataset in order to manually pick the median?

Some common descriptive statistics used to explain data

Mode

The most frequent value in a dataset. Useful when the data is categorical.
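The three statistics can be compared with Python's standard `statistics` module. The salary figures below, including the much larger salary for the newcomer, are hypothetical values chosen to show how one extreme value moves the mean but barely moves the median.

```python
import statistics

# Hypothetical salaries (in thousands) for five attendees.
salaries = [40, 45, 50, 55, 60]
# A hypothetical newcomer with a far larger salary joins the room.
with_newcomer = salaries + [1000]

print(statistics.mean(salaries), statistics.median(salaries))
print(statistics.mean(with_newcomer), statistics.median(with_newcomer))

# The mode suits categorical data such as the port of embarkation.
ports = ["S", "C", "S", "Q", "S"]
print(statistics.mode(ports))
```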

Food For Thought

What are the different ways to replace missing values?

- Mean imputation
- Median imputation
- Mode imputation
- Forward propagation (carry the last known value forward)
- Backward propagation (carry the next known value backward)
- Decision tree based imputation
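A minimal sketch of the simpler strategies in Python. The helper names and the sample ages are assumptions; missing values are represented by `None`, and decision tree based imputation is left out because it needs a fitted model.

```python
import statistics

def impute_constant(values, how="mean"):
    """Fill None entries with the mean, median or mode of the known values."""
    known = [v for v in values if v is not None]
    fill = {"mean": statistics.mean,
            "median": statistics.median,
            "mode": statistics.mode}[how](known)
    return [fill if v is None else v for v in values]

def forward_fill(values):
    """Carry the last known value forward; a leading gap stays None."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled

def backward_fill(values):
    """Carry the next known value backward; a trailing gap stays None."""
    return forward_fill(values[::-1])[::-1]

ages = [22, None, 26, 35, None]  # hypothetical ages with gaps
print(impute_constant(ages, "median"))
print(forward_fill(ages))
print(backward_fill(ages))
```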

Looking closely at the age feature

The outlier concept

What is an outlier?

An outlier is an observation that lies an abnormal distance from other values in a random sample from a population.

How to handle an outlier?

1. Examine the data in the context of the outcome being predicted
2. Remove unusual observations that are far removed from the general observations

Pick the outlier from the image in this slide.

Understanding box plots: Five Point Summary

- Maximum value
- 75th percentile value
- Median (50th percentile of the data)
- 25th percentile value
- Lowest value
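The five values can be computed with Python's `statistics.quantiles`. The outlier rule in `iqr_outliers` (points more than 1.5 times the interquartile range beyond the quartiles) is a common convention assumed here, not something the slides state, and the ages are hypothetical.

```python
import statistics

def five_point_summary(values):
    """Return (lowest, 25th percentile, median, 75th percentile, maximum)."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return min(values), q1, q2, q3, max(values)

def iqr_outliers(values, k=1.5):
    """Assumed rule: flag points more than k * IQR outside the quartiles."""
    _, q1, _, q3, _ = five_point_summary(values)
    spread = q3 - q1
    return [v for v in values if v < q1 - k * spread or v > q3 + k * spread]

ages = [2, 20, 22, 24, 26, 28, 30, 32, 80]  # hypothetical ages
print(five_point_summary(ages))
print(iqr_outliers(ages))
```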

NULL Hypothesis and Alternate Hypothesis

Null Hypothesis

The null hypothesis (H0) is a hypothesis which the researcher tries to disprove, reject or nullify. The 'null' often refers to the common view of something, while the alternative hypothesis is what the researcher really thinks is the cause of a phenomenon.

Alternate Hypothesis

The alternative hypothesis, denoted by H1 or Ha, is the hypothesis that sample observations are influenced by some non-random cause.

Mathematical understanding of variable importance

We have categorical data and continuous data. Both the target variable and the predictor variables can be either of these two types.

To mathematically establish the importance of a predictor in accurately predicting values of the target variable (continuous/categorical), we need to perform one of the following tests:

| Test | Left Variable | Right Variable |
|---|---|---|
| ANOVA | Continuous | Categorical |
| Chi-Square | Categorical | Categorical |
| Pearson's Coefficient | Continuous | Continuous |

Let's look at the Titanic case study example:

Target variable: Survived (a categorical variable)

Categorical predictors: Pclass, Sex, SibSp, Parch, Embarked

Checking the importance of these variables:

The Chi-Square Test

What is Chi-Square Test?

It is a test conducted on counts from multiple samples to identify whether they are different. Here both the target variable and the independent variables should be discrete in nature.

What does Chi-Square test do?

It compares the counts observed in each combination of categories with the counts expected if the variables were unrelated. The larger the gap between observed and expected counts, the more distinct the samples are; a small gap means they are homogeneous.

When do I use Chi-Square?

To test goodness of fit, or to test association between categorical variables.
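A minimal sketch of the association test statistic in Python. The 2x2 table of counts is hypothetical, and `chi_square_statistic` is an illustrative helper: it sums (observed - expected)^2 / expected over all cells, where each expected count is row total x column total / grand total.

```python
def chi_square_statistic(table):
    """Chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    return statistic

# Hypothetical counts: rows are two groups, columns are survived / not survived.
counts = [[30, 10],
          [20, 40]]
chi2 = chi_square_statistic(counts)
dof = (len(counts) - 1) * (len(counts[0]) - 1)
print(round(chi2, 3), dof)
```

With one degree of freedom, a statistic above roughly 3.84 is significant at the 5% level, so these hypothetical groups would be judged different.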

The concept of sample and population

Population

All possible data points that can be used to identify a pattern for a specific objective

Example: All the job applications received by Cognizant

Sample

A representative subset of the population

Example: The job applications received by Cognizant from applicants who have more than 4 years of experience

Why are we doing this?

Sampling Techniques

Simple Random Sample

A random selection of a subset of data. Each element of the population has an equal chance of being selected, and the sample chosen should be sufficiently large.

Stratified Random Sampling

Selection of elements based on the distributions observed in the critical parameters, keeping their ratios the same in the sample as in the population.

Cluster Sampling

With cluster sampling one should:

1. divide the population into groups (clusters);
2. obtain a simple random sample of so many clusters from all possible clusters;
3. obtain data on every sampling unit in each of the randomly selected clusters.

Simple Random Sampling

Simple random sampling is the basic sampling technique where we select a group of subjects (a sample) for study from a larger group (a population). Each individual is chosen entirely by chance and each member of the population has an equal chance of being included in the sample.

Stratified Sampling

Stratified sampling is a divide-and-conquer sampling strategy. The population is divided into groups called strata. The strata are chosen so that similar cases are grouped together, then a second sampling method, usually simple random sampling, is employed within each stratum. Stratified sampling is especially useful when the cases in each stratum are very similar with respect to the outcome of interest.

Derived from https://www.openintro.org/stat/

Cluster Sampling

A cluster sample is much like a two-stage simple random sample. We break up the population into many groups, called clusters. Then we sample a fixed number of clusters and collect a simple random sample within each cluster. This technique is similar to stratified sampling in its process, except that there is no requirement in cluster sampling to sample from every cluster. Stratified sampling requires observations be sampled from every stratum.

Derived from https://www.openintro.org/stat/
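Simple random sampling and cluster sampling can be sketched with Python's `random` module. The office names and application ids are hypothetical, and `cluster_sample` follows the variant that keeps every unit inside each chosen cluster.

```python
import random

def simple_random_sample(population, size, rng):
    """Every element has the same chance of being picked."""
    return rng.sample(population, size)

def cluster_sample(clusters, n_clusters, rng):
    """Pick whole clusters at random, then keep every unit inside them."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [unit for name in chosen for unit in clusters[name]]

rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Hypothetical population: 12 job applications grouped by office.
offices = {
    "Chennai": ["c1", "c2", "c3", "c4"],
    "Pune": ["p1", "p2", "p3", "p4"],
    "Kochi": ["k1", "k2", "k3", "k4"],
}
everyone = [unit for units in offices.values() for unit in units]

print(simple_random_sample(everyone, 4, rng))
print(cluster_sample(offices, 2, rng))
```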

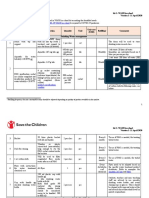

Model Validation Process

The Titanic data is split into three parts:

| Split | Purpose | Share of overall data available |
|---|---|---|
| Training Data | Training the model | 60% |
| Cross Validation Data | Validating the model | 20% |
| Test Data | Testing the model | 20% |

Applying stratified sampling to Titanic dataset

Original population: 891 data points (65% males, 35% females)

Stratified sampling using the Sex variable:

| Split | Size | Males | Females |
|---|---|---|---|
| Training | ~534 | 65% | 35% |
| Cross Validation | ~178 | 65% | 35% |
| Test | ~178 | 65% | 35% |
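A 60/20/20 split that preserves the male/female ratio can be sketched as follows in Python. The `stratified_split` helper and the 100-row population are assumptions for illustration; each stratum is shuffled and cut separately so that every part keeps the same mix.

```python
import random

def stratified_split(rows, key, fractions, rng):
    """Split rows into parts sized by `fractions`, keeping the mix of `key`."""
    strata = {}
    for row in rows:
        strata.setdefault(row[key], []).append(row)
    parts = [[] for _ in fractions]
    for members in strata.values():
        rng.shuffle(members)
        start = 0
        for i, fraction in enumerate(fractions):
            if i == len(fractions) - 1:
                end = len(members)  # last part takes the remainder
            else:
                end = start + round(fraction * len(members))
            parts[i].extend(members[start:end])
            start = end
    return parts

# Hypothetical population of 100 rows: 65 males and 35 females.
rows = [{"Sex": "male"}] * 65 + [{"Sex": "female"}] * 35
train, validation, holdout = stratified_split(
    rows, "Sex", [0.6, 0.2, 0.2], random.Random(1))
for name, part in [("train", train), ("validation", validation), ("test", holdout)]:
    males = sum(row["Sex"] == "male" for row in part)
    print(name, len(part), males / len(part))
```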

Types of Models

There are many ways to perform classification in R:

- Logistic Regression (Basic Algorithm)
- Decision Trees
- Random Forest
- Support Vector Machines
- Neural Networks

Let's take a look at logistic regression.

Model Diagnostics Reduction In Variance

| Predicted result \ Actual result | Yes | No |
|---|---|---|
| Yes | True Positive | False Positive |
| No | False Negative | True Negative |

Rows are the predicted classification; columns are the actual classification.
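The four cells can be counted directly from actual and predicted labels, as in this Python sketch. The labels are hypothetical, and accuracy, precision and recall are standard summary metrics added here as an assumption; the slide itself only shows the matrix.

```python
def confusion_counts(actual, predicted, positive="Yes"):
    """Count true/false positives and negatives for a binary classifier."""
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if p == positive:
            if a == positive:
                tp += 1
            else:
                fp += 1
        else:
            if a == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn

# Hypothetical labels for eight passengers.
actual    = ["Yes", "Yes", "No", "No",  "Yes", "No", "No", "Yes"]
predicted = ["Yes", "No",  "No", "Yes", "Yes", "No", "No", "Yes"]

tp, fp, fn, tn = confusion_counts(actual, predicted)
accuracy = (tp + tn) / (tp + fp + fn + tn)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
print(tp, fp, fn, tn, accuracy, precision, recall)
```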

Scoring the model

Area Under the Curve (AUC)

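The area under the ROC curve, which plots the true positive rate against the false positive rate across classification thresholds. It summarizes in one number how accurately the logistic regression model predicts values of the target variable; the curve itself gives the graphical view.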
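One way to compute AUC without drawing the curve is to use its ranking interpretation: the share of (positive, negative) pairs in which the positive case receives the higher score. The Python sketch below assumes that interpretation; the `auc` helper, labels and scores are hypothetical.

```python
def auc(labels, scores):
    """Share of (positive, negative) pairs ranked correctly; ties count half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

labels = [1, 1, 0, 1, 0, 0]               # 1 = survived (hypothetical)
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.2]   # hypothetical predicted probabilities
print(auc(labels, scores))
```

A value of 1.0 means every positive outranks every negative, while 0.5 is what random scoring would achieve.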

Continuous

Continuous Data Prediction A Case Study

Singapore House Price Prediction

Singapore has a well-developed house leasing market, where the features of a house play a key role in determining its price.

We are going to look at features like the size of the house, number of bedrooms, house type, etc. to predict the right lease price.

In this challenge, we ask you to predict the right price point for a house in Singapore.

As usual, let's look at the data

| Area | Block | Address | Floor | SQMS | Lease Commence Date | Approval Date | Flat Type | Resale Price | lat | long |
|---|---|---|---|---|---|---|---|---|---|---|
| Bedok | 520 | Bedok Nth Ave 1 | 11 to 15 | 67 | 1979 | 4/1/2013 | New Generation | 370000 | 1.3213587 | 103.931801 |
| Bedok | 105 | Bedok Nth Ave 4 | 06 to 10 | 67 | 1977 | 4/1/2013 | New Generation | 361000 | 1.3213587 | 103.931801 |
| Bedok | 74 | Bedok Nth Rd | 06 to 10 | 70 | 1978 | 4/1/2013 | Improved | 358000 | 1.3213587 | 103.931801 |
| Bedok | 77 | Bedok Nth Rd | 11 to 15 | 60 | 1986 | 4/1/2013 | Improved | 323000 | 1.3213587 | 103.931801 |

| Column | Description |
|---|---|
| Area | Location of the property |
| Block | Pin code of the property location |
| Address | Street name of the property |
| Floor | The floor group within which the property lies |
| SQMS | Area/size of the property |
| Lease Commence Date | Start date of the current lease contract |
| Approval Date | Latest approval date for the property |
| Flat Type | Type of construction |
| Resale Price | Price to be predicted; the target variable |
| lat | Latitude of the building location |
| long | Longitude of the building location |

In Class Exercise

Place the variables in the right bucket.

Buckets (data types): Categorical (Nominal, Ordinal) and Numeric (Discrete, Continuous)

Variables: Area, Block, Address, Floor, SQMS, Flat Type, Lease Commence Date, Resale Price, Approval Date, long, lat

Food For Thought

What is feature engineering?

Feature engineering is the process of using domain knowledge of the data to create features that make machine learning algorithms work.

Identify a couple of features for the Singapore Price Prediction Problem

1. Lease Period Value <- (Typical Lease Period - Lease Period Expired) / Typical Lease Period
2. Approved Period <- (Approval Period - Time Expired Since Approval)
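The first feature can be sketched in Python as below. Two things are assumptions made for illustration: the typical lease period of 99 years, and reading "Lease Period Expired" as the approval year minus the lease commencement year.

```python
TYPICAL_LEASE_YEARS = 99  # assumption for illustration

def lease_period_value(lease_commence_year, approval_year,
                       typical_lease=TYPICAL_LEASE_YEARS):
    """Fraction of the typical lease still remaining at approval time."""
    expired = approval_year - lease_commence_year
    return (typical_lease - expired) / typical_lease

# First sample row: lease commenced in 1979, approval dated 2013.
print(round(lease_period_value(1979, 2013), 4))
```

The result lies between 0 and 1, which makes leases of different ages directly comparable.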

ANOVA: Analysis of Variance

What is ANOVA?

It is a test conducted across multiple samples to identify whether they are different. Here the target variable should be continuous in nature and the independent variables should be discrete in nature.

What does ANOVA do?

It splits the total variation into between-group variation and within-group variation, and checks how much the samples vary between each other as a ratio of the variance within each of them. This metric tells us how distinct or homogeneous the samples are.

When do I use ANOVA?

When I want to find out whether a discrete independent variable is significant in determining the value of a continuous variable.

ANOVA Visual Explanation

Figure: two panels, each showing Group 1, Group 2 and Group 3. Homogeneous groups: significant. Heterogeneous groups: not significant.
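The ratio of between-group to within-group variation is the F statistic of a one-way ANOVA. A minimal Python sketch follows, with hypothetical group values and an illustrative `anova_f` helper.

```python
def anova_f(groups):
    """One-way ANOVA F statistic: between-group over within-group variance."""
    all_values = [v for group in groups for v in group]
    grand_mean = sum(all_values) / len(all_values)
    ss_between = ss_within = 0.0
    for group in groups:
        mean = sum(group) / len(group)
        ss_between += len(group) * (mean - grand_mean) ** 2
        ss_within += sum((v - mean) ** 2 for v in group)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

# Hypothetical values of a continuous variable in three groups.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
print(anova_f(groups))
```

A large F means the group means differ by much more than the spread inside each group would explain.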

Pearson's Coefficient

What is Pearson's coefficient?

Pearson's coefficient is a measure of the linear relationship between two continuous variables.

What values will it take?

It can take any value between -1 and +1.

What is the interpretation of the values?

+1 : Perfect positive correlation

0 : No linear correlation

-1 : Perfect negative correlation
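The coefficient is the covariance of the two variables divided by the product of their standard deviations. A small Python sketch with hypothetical values:

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

xs = [1, 2, 3, 4]                     # hypothetical values
print(pearson(xs, [2, 4, 6, 8]))      # rises with xs
print(pearson(xs, [8, 6, 4, 2]))      # falls as xs rises
```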

Handling Multicollinearity

What is multicollinearity?

Multicollinearity (also collinearity) is a phenomenon in which two or more predictor variables in a multiple regression model are highly correlated, meaning that one can be linearly predicted from the others with a substantial degree of accuracy.

Why should I worry about this?

Severe multicollinearity is a problem because it can increase the variance of the coefficient estimates and make the estimates very sensitive to minor changes in the model. The result is that the coefficient estimates are unstable and difficult to interpret.

Visualizing the output RMSE
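RMSE stands for root mean squared error: the square root of the average squared gap between actual and predicted values. In the Python sketch below the actual prices come from the four sample rows, while the predicted prices are hypothetical model output.

```python
import math

def rmse(actual, predicted):
    """Root mean squared error between actual and predicted values."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))

actual = [370000, 361000, 358000, 323000]     # resale prices of the sample rows
predicted = [365000, 366000, 353000, 328000]  # hypothetical model output
print(rmse(actual, predicted))
```

RMSE is expressed in the same unit as the target, so a lower value means predictions that sit closer to the actual resale prices.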

Clustering

Clustering An Overview

What is clustering?

Cluster analysis, or clustering, is the task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some sense or another) to each other than to those in other groups (clusters).

Why do I need clustering?

Cluster analysis serves as a tool to gain insight into the distribution of data and to observe the characteristics of each cluster.

Types of clustering analysis

The following are the most popular methods of clustering:

- K-means clustering
- Hierarchical clustering

A short description of k-means clustering

K-means clusters data through distances measured using the Euclidean formula. It works best on continuous variables. For categorical variables, a special approach has to be adopted that converts the categorical values of the variable into dummy variables.

A short description of hierarchical clustering

A method which builds a hierarchy of clusters, like a tree structure.

K-means clustering

Input:

- K: number of clusters to be formed
- Centroids: anchor points for the cluster groups to be created

Methodology:

The k-means clustering technique calculates the centroids of the clusters through an iterative process. Each iteration calculates new means (centroid values). The iterations stop once the centroid values freeze.

Output:

Each row (record) is assigned to a specific cluster.
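The iterative process can be sketched in plain Python as below. This is a minimal illustration, not a production implementation: the points and starting centroids are hypothetical, and an empty cluster simply keeps its previous centroid.

```python
import math

def closest(point, centroids):
    """Index of the centroid with the shortest Euclidean distance to point."""
    return min(range(len(centroids)),
               key=lambda i: math.dist(point, centroids[i]))

def k_means(points, centroids, max_iterations=100):
    """Alternate assignment and repositioning until the centroids freeze."""
    for _ in range(max_iterations):
        assignments = [closest(p, centroids) for p in points]
        new_centroids = []
        for i, old in enumerate(centroids):
            members = [p for p, a in zip(points, assignments) if a == i]
            if not members:
                new_centroids.append(old)  # keep an empty cluster's centroid
                continue
            # New centroid = average of all points assigned to this cluster.
            new_centroids.append(
                tuple(sum(axis) / len(members) for axis in zip(*members)))
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, assignments

# Hypothetical (memory consumption, CPU cycles) readings and starting centroids.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, assignments = k_means(points, [(0, 0), (10, 10)])
print(centroids, assignments)
```

The starting centroids influence the result, so in practice the algorithm is often run from several random starts.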

Scaling and Weighting

What is scaling?

Determining the ability to service a debt based on Income and number of children:

The Income data in Table 1 is bigger than the number-of-children data by an order of thousands. Is Income more important than the number of children by that factor?

Table 1: columns Income and No: of children (Income values shown: 10000, 12000)

What is weighting?

Determining whether to offer a credit card or not, based on the number of cards currently held by the client and the number of cars owned:

Are the cards and cars variables equally important in determining the outcome?

Table 2: columns No: of cards and No: of cars
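Both ideas can be sketched in Python as follows. Min-max scaling is one common choice among several and is assumed here; the client values and the 0.7 / 0.3 weights are hypothetical.

```python
def min_max_scale(values):
    """Rescale values to the 0..1 range so no column dominates by magnitude."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]

# Scaling: hypothetical clients, Income in currency units, children as a count.
income = [10000, 12000, 15000]
children = [1, 3, 2]
scaled_income = min_max_scale(income)
scaled_children = min_max_scale(children)
print(scaled_income, scaled_children)

# Weighting: assumed weights saying cards matter more than cars.
cards = [1, 4, 2]
cars = [0, 2, 1]
weights = {"cards": 0.7, "cars": 0.3}
scores = [weights["cards"] * a + weights["cars"] * b
          for a, b in zip(min_max_scale(cards), min_max_scale(cars))]
print(scores)
```

After scaling, both columns span the same range, so any difference in importance comes only from the weights chosen deliberately.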

K-Means Process

Figure sequence (axes: CPU Cycles and Memory Consumption; centroids c1, c2 and c3):

1. Finding the shortest distance for C1: D1 = 10, D2 = 15, D3 = 20. The closest centroid is the one with the shortest distance.
2. Finding the shortest distance for C2: D1 = 30, D2 = 10, D3 = 15.
3. Finding the shortest distance for C3: D3 = 15.
4. The average of all the data points in a cluster repositions c1, c2 and c3.
5. Repositioning the centroids: iteration continues until the centroids reach the optimal position, where the cost is minimum (Cost = 1/m ...).

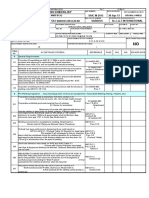

Choosing the optimal cluster set

The plot on the left is the Total Sum of Squares captured for an increasing number of centroids.

X axis: number of centroids

Y axis: total sum of squares within each cluster, summed across all clusters

The trajectory changes from a steep drop to a moderate decline past 5 clusters.

Hence we choose 5, accepting the tradeoff that a lower minimum is possible at 9 clusters.
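The quantity on the Y axis can be computed as in this Python sketch. To keep it short, the clusters for each k are assigned by hand on hypothetical one-dimensional data instead of being produced by a k-means run.

```python
def within_cluster_ss(clusters):
    """Total squared distance of points to their own cluster mean."""
    total = 0.0
    for members in clusters:
        centroid = [sum(axis) / len(members) for axis in zip(*members)]
        for point in members:
            total += sum((x - c) ** 2 for x, c in zip(point, centroid))
    return total

# Hypothetical data with three natural groups, clustered by hand for k = 1, 2, 3.
low = [(1,), (2,), (3,)]
mid = [(11,), (12,), (13,)]
high = [(21,), (22,), (23,)]
by_k = {1: [low + mid + high], 2: [low + mid, high], 3: [low, mid, high]}
for k, clusters in by_k.items():
    print(k, within_cluster_ss(clusters))
```

The total falls sharply up to the natural number of groups and only slowly afterwards, which is the bend the plot is read for.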

Time Series Analysis

Time Series Analysis

What is time series analysis?

A time series is a phenomenon observed over time, measured as a sequence of data points, typically consisting of successive measurements made over a time interval.

Time series analysis comprises methods for analyzing time series data in order to extract meaningful statistics and other characteristics of the data.

Why do I need time series analysis?

To identify inherent trend and seasonal patterns in data and predict outcomes accordingly.

Decomposing time series data

Time series data usually consists of 3 components:

Seasonal

A seasonal pattern exists when a series is influenced by seasonal factors (e.g., the quarter of the year, the month, or day of the week). Seasonality is always of a fixed and known period.

Trend

A trend exists when there is a long-term increase or decrease in the data. It does not have to be linear.

Cyclic

A cyclic pattern exists when data exhibit rises and falls that are not of fixed period.

Additionally, random fluctuations may also occur.

Time series analysis techniques

Smoothing Methods

Moving Average

Moving average/centered moving average: the average of the most recent n data values in the time series.

The n-period moving average builds a forecast by averaging the observations in the most recent n periods:

$$A_t = \frac{x_t + x_{t-1} + \dots + x_{t-n+1}}{n}$$

where $x_t$ represents the observation made in period t, and $A_t$ denotes the moving average calculated after making the observation in period t.

Exponential Smoothing

Average of previous values, with recent values weighted more strongly.
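Both smoothing methods can be sketched in Python. The exponential smoothing recurrence used here, S_t = alpha * x_t + (1 - alpha) * S_(t-1) with a smoothing factor alpha between 0 and 1, is the common simple form and is an assumption, since the slide's own formula is not available; the observations are hypothetical.

```python
def moving_average(series, n):
    """n-period moving average A_t for every t with n observations available."""
    return [sum(series[t - n + 1:t + 1]) / n for t in range(n - 1, len(series))]

def exponential_smoothing(series, alpha):
    """Assumed form: S_t = alpha * x_t + (1 - alpha) * S_(t-1), with S_0 = x_0."""
    smoothed = [series[0]]
    for x in series[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed

sales = [10, 20, 30, 40, 50]  # hypothetical observations
print(moving_average(sales, 3))
print(exponential_smoothing(sales, 0.5))
```

A larger alpha makes the smoothed series follow recent observations more closely.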

ARIMA Modelling

ARIMA Overview:

ARIMA stands for Auto-Regressive Integrated Moving Average.

Key Requirement for ARIMA Modeling: Stationarity

A time series exhibits stationarity if the mean and variance of the series are constant over time.

ARIMA Process:

- Auto-Regressive: the next term is a weighted sum of some past terms
- Integrated (1/0): whether the time series needs to be transformed (differenced) to ensure stationarity
- Moving Average: a weighted sum of random shocks over time

The order and the seasonality are specified; they set how many past data points the model looks back on.
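The "Integrated" step is commonly carried out by differencing, that is, replacing each value with its change from the previous period. A short Python sketch on a hypothetical trending series shows how this removes a steady trend:

```python
import statistics

def difference(series, lag=1):
    """Differences between each value and the one `lag` periods earlier."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]

trend = [2, 4, 6, 8, 10, 12]  # hypothetical series with a steady upward trend
diffed = difference(trend)

# The two halves of the raw series have different means; after differencing they match.
print(statistics.mean(trend[:3]), statistics.mean(trend[3:]))
print(statistics.mean(diffed[:2]), statistics.mean(diffed[2:]))
```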

Decision Trees

Decision Trees An Overview

What is a decision tree ?

A decision tree is a decision support tool that uses a tree-like graph or model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility.

What are its advantages?

1. Requires minimal data preparation
2. Missing value problems are handled
3. Key predictor variables are identified
4. Easy to interpret and explain to business stakeholders

The Decision Tree: The Building Process

1. Find the Split: choose the best split
2. Grow the Tree: grow the tree until the criteria are met
3. Prune the Tree: create subtrees and choose
4. Generate Rule Set: interpret the output
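Choosing the best split needs a measure of how mixed the classes are on each side. The Python sketch below uses Gini impurity, which is one common measure and an assumption here because the slides do not name a criterion; the feature values and labels are hypothetical.

```python
def gini(labels):
    """Gini impurity: 0 for a pure node, larger for a mixed one."""
    total = len(labels)
    return 1.0 - sum((labels.count(c) / total) ** 2 for c in set(labels))

def best_split(values, labels):
    """Try a threshold between each pair of neighbours; keep the purest split."""
    pairs = sorted(zip(values, labels))
    best = (None, float("inf"))
    for i in range(1, len(pairs)):
        if pairs[i][0] == pairs[i - 1][0]:
            continue  # no threshold fits between equal values
        threshold = (pairs[i][0] + pairs[i - 1][0]) / 2
        left = [label for value, label in pairs if value <= threshold]
        right = [label for value, label in pairs if value > threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(pairs)
        if weighted < best[1]:
            best = (threshold, weighted)
    return best

feature = [1, 2, 3, 10, 11, 12]  # hypothetical numeric predictor
target = [0, 0, 0, 1, 1, 1]      # hypothetical class labels
print(best_split(feature, target))
```

Growing the tree repeats this search inside each resulting branch until a stopping criterion is met.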

Random Forest An Overview

What is a random forest?

Random forests, or random decision forests, are an ensemble learning method for classification, regression and other tasks that operates by constructing a multitude of decision trees at training time.

Why is random forest a powerful technique?

A decision tree is a structured approach to identifying the characteristics in data that help in determining values of the target variable.

By building a bag of trees, much of the noise is averaged out and the probability of identifying the optimal values increases.

Variable Importance

Random forests identify the variables of highest significance in calculating the outcomes.
